HBase blueprint configuration not working

Labels: Apache Ambari, Apache HBase

Created on 12-05-2017 11:35 AM - edited 08-17-2019 07:44 PM

Hello, I have the following blueprint:

- cluster_configuration.json

```json
{
  "Blueprints": {
    "stack_name": "HDP",
    "stack_version": "2.6"
  },
  "host_groups": [
    {
      "name": "namenode1",
      "cardinality": "1",
      "components": [
        { "name": "HST_AGENT" },
        { "name": "HDFS_CLIENT" },
        { "name": "ZKFC" },
        { "name": "ZOOKEEPER_SERVER" },
        { "name": "HST_SERVER" },
        { "name": "HBASE_CLIENT" },
        { "name": "METRICS_MONITOR" },
        { "name": "JOURNALNODE" },
        { "name": "HBASE_MASTER" },
        { "name": "NAMENODE" },
        { "name": "APP_TIMELINE_SERVER" },
        { "name": "METRICS_GRAFANA" }
      ]
    },
    {
      "name": "namenode2",
      "cardinality": "1",
      "components": [
        { "name": "ACTIVITY_EXPLORER" },
        { "name": "HST_AGENT" },
        { "name": "HDFS_CLIENT" },
        { "name": "ZKFC" },
        { "name": "ZOOKEEPER_SERVER" },
        { "name": "HBASE_CLIENT" },
        { "name": "HISTORYSERVER" },
        { "name": "METRICS_MONITOR" },
        { "name": "JOURNALNODE" },
        { "name": "HBASE_MASTER" },
        { "name": "NAMENODE" },
        { "name": "METRICS_COLLECTOR" }
      ]
    },
    {
      "name": "namenode3",
      "cardinality": "1",
      "components": [
        { "name": "ACTIVITY_ANALYZER" },
        { "name": "HST_AGENT" },
        { "name": "MAPREDUCE2_CLIENT" },
        { "name": "YARN_CLIENT" },
        { "name": "HDFS_CLIENT" },
        { "name": "ZOOKEEPER_SERVER" },
        { "name": "HBASE_CLIENT" },
        { "name": "METRICS_MONITOR" },
        { "name": "JOURNALNODE" },
        { "name": "RESOURCEMANAGER" }
      ]
    },
    {
      "name": "hosts_group",
      "cardinality": "3",
      "components": [
        { "name": "NODEMANAGER" },
        { "name": "HST_AGENT" },
        { "name": "MAPREDUCE2_CLIENT" },
        { "name": "YARN_CLIENT" },
        { "name": "HDFS_CLIENT" },
        { "name": "HBASE_REGIONSERVER" },
        { "name": "DATANODE" },
        { "name": "HBASE_CLIENT" },
        { "name": "METRICS_MONITOR" },
        { "name": "ZOOKEEPER_CLIENT" }
      ]
    }
  ],
  "configurations": [
    {
      "core-site": {
        "properties": {
          "fs.defaultFS": "hdfs://HACluster",
          "ha.zookeeper.quorum": "%HOSTGROUP::namenode1%:2181,%HOSTGROUP::namenode2%:2181,%HOSTGROUP::namenode3%:2181",
          "hadoop.proxyuser.yarn.hosts": "%HOSTGROUP::namenode2%,%HOSTGROUP::namenode3%"
        }
      }
    },
    {
      "hdfs-site": {
        "properties": {
          "dfs.client.failover.proxy.provider.HACluster": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
          "dfs.ha.automatic-failover.enabled": "true",
          "dfs.ha.fencing.methods": "shell(/bin/true)",
          "dfs.ha.namenodes.HACluster": "nn1,nn2",
          "dfs.namenode.http-address": "%HOSTGROUP::namenode1%:50070",
          "dfs.namenode.http-address.HACluster.nn1": "%HOSTGROUP::namenode1%:50070",
          "dfs.namenode.http-address.HACluster.nn2": "%HOSTGROUP::namenode2%:50070",
          "dfs.namenode.https-address": "%HOSTGROUP::namenode1%:50470",
          "dfs.namenode.https-address.HACluster.nn1": "%HOSTGROUP::namenode1%:50470",
          "dfs.namenode.https-address.HACluster.nn2": "%HOSTGROUP::namenode2%:50470",
          "dfs.namenode.rpc-address.HACluster.nn1": "%HOSTGROUP::namenode1%:8020",
          "dfs.namenode.rpc-address.HACluster.nn2": "%HOSTGROUP::namenode2%:8020",
          "dfs.namenode.shared.edits.dir": "qjournal://%HOSTGROUP::namenode1%:8485;%HOSTGROUP::namenode2%:8485;%HOSTGROUP::namenode3%:8485/mycluster",
          "dfs.nameservices": "HACluster"
        }
      }
    },
    {
      "yarn-site": {
        "properties": {
          "yarn.resourcemanager.ha.enabled": "true",
          "yarn.resourcemanager.ha.rm-ids": "rm1,rm2",
          "yarn.resourcemanager.hostname.rm1": "%HOSTGROUP::namenode2%",
          "yarn.resourcemanager.hostname.rm2": "%HOSTGROUP::namenode3%",
          "yarn.resourcemanager.webapp.address.rm1": "%HOSTGROUP::namenode2%:8088",
          "yarn.resourcemanager.webapp.address.rm2": "%HOSTGROUP::namenode3%:8088",
          "yarn.resourcemanager.webapp.https.address.rm1": "%HOSTGROUP::namenode2%:8090",
          "yarn.resourcemanager.webapp.https.address.rm2": "%HOSTGROUP::namenode3%:8090",
          "yarn.resourcemanager.recovery.enabled": "true",
          "yarn.resourcemanager.store.class": "org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore",
          "yarn.resourcemanager.zk-address": "%HOSTGROUP::namenode1%:2181,%HOSTGROUP::namenode2%:2181,%HOSTGROUP::namenode3%:2181",
          "yarn.client.failover-proxy-provider": "org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider",
          "yarn.resourcemanager.cluster-id": "yarn-cluster",
          "yarn.resourcemanager.ha.automatic-failover.zk-base-path": "/yarn-leader-election"
        }
      }
    },
    {
      "hdfs-site": {
        "properties_attributes": { },
        "properties": {
          "dfs.datanode.data.dir": "/mnt/secondary1,/mnt/secondary2"
        }
      }
    },
    {
      "hadoop-env": {
        "properties_attributes": { },
        "properties": {
          "namenode_heapsize": "2048m"
        }
      }
    },
    {
      "activity-zeppelin-shiro": {
        "properties": {
          "users.admin": "admin"
        }
      }
    },
    {
      "hbase-site": {
        "properties": {
          "hbase.rootdir": "hdfs://HACluster/apps/hbase/data"
        }
      }
    }
  ]
}
```

- hostmap.json

```json
{
  "blueprint": "HACluster",
  "default_password": "admin",
  "host_groups": [
    {
      "name": "namenode1",
      "hosts": [ { "fqdn": "namenode1" } ]
    },
    {
      "name": "namenode2",
      "hosts": [ { "fqdn": "namenode2" } ]
    },
    {
      "name": "namenode3",
      "hosts": [ { "fqdn": "namenode3" } ]
    },
    {
      "name": "hosts_group",
      "hosts": [
        { "fqdn": "datanode1" },
        { "fqdn": "datanode2" },
        { "fqdn": "datanode3" }
      ]
    }
  ]
}
```

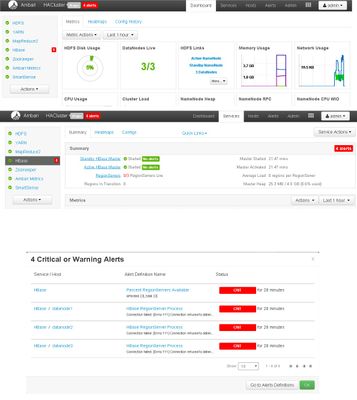

When I launch this configuration, HBase is the only service that doesn't work. I get the errors shown in the attached screenshot.

What am I missing?

Thank you.

Created 12-05-2017 11:54 AM

Can you please attach the RegionServer logs located at /var/log/hbase/hbase-hbase-regionserver-{hostname}.log?

Thanks,

Aditya

Created 12-05-2017 12:00 PM

This is the output:

```
$ cat /var/log/hbase/hbase-hbase-regionserver-namenode1.log
2017-12-05 12:11:29,525 INFO [timeline] availability.MetricSinkWriteShardHostnameHashingStrategy: Calculated collector shard namenode2 based on hostname: namenode1
2017-12-05 12:15:09,962 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=29, evicted=0, evictedPerRun=0.0
2017-12-05 12:20:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=59, evicted=0, evictedPerRun=0.0
2017-12-05 12:25:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=89, evicted=0, evictedPerRun=0.0
2017-12-05 12:30:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=119, evicted=0, evictedPerRun=0.0
2017-12-05 12:35:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=149, evicted=0, evictedPerRun=0.0
2017-12-05 12:40:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=179, evicted=0, evictedPerRun=0.0
2017-12-05 12:45:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=209, evicted=0, evictedPerRun=0.0
2017-12-05 12:50:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=239, evicted=0, evictedPerRun=0.0
2017-12-05 12:55:09,961 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=1.67 MB, freeSize=1.59 GB, max=1.59 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=269, evicted=0, evictedPerRun=0.0
```

```
$ cat /var/log/hbase/hbase-hbase-regionserver-datanode1.log
Tue Dec 5 12:08:28 CET 2017 Starting regionserver on datanode1
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 13671
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32000
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 16000
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
Tue Dec 5 12:18:37 CET 2017 Starting regionserver on datanode1
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 13671
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32000
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 16000
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
Tue Dec 5 12:21:20 CET 2017 Starting regionserver on datanode1
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 13671
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32000
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 16000
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
Tue Dec 5 12:46:37 CET 2017 Starting regionserver on datanode1
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 13671
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32000
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 16000
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
```

Created 12-05-2017 12:04 PM

I do not see any errors in the logs above. Can you tail these logs while restarting the RegionServers and check whether any ERROR lines are printed? That would help with debugging.
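A minimal sketch of that check, assuming bash and the log file naming used in this thread; the helper name `scan_rs_logs` and its search pattern are hypothetical. It also looks at the `.out` file that sits next to each `.log`: as far as I know, HBase's `hbase-daemon.sh` appends the "Starting regionserver on ..." banner and the `ulimit -a` output to the `.log` file and redirects the JVM's stdout/stderr to the `.out` file. A RegionServer that dies before log4j starts writing may therefore leave its only error message in the `.out` file, which would be consistent with a `.log` that contains nothing but start-up banners.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print error-looking lines from one host's RegionServer
# .log (log4j output plus start-up banners) and .out (JVM stdout/stderr).
# File naming follows this thread: hbase-hbase-regionserver-<host>.{log,out}
scan_rs_logs() {
  local dir="${1:-/var/log/hbase}"
  local host="${2:-$(hostname)}"   # assumption: matches the log file suffix
  local f found=1
  for f in "$dir/hbase-hbase-regionserver-$host.log" \
           "$dir/hbase-hbase-regionserver-$host.out"; do
    [ -f "$f" ] || continue        # skip files that do not exist
    # -H: prefix file name, -n: line number, -E: extended regex alternation
    if grep -HnE 'ERROR|FATAL|Exception|Error occurred' "$f"; then
      found=0
    fi
  done
  return "$found"                  # 0 if anything matched, 1 otherwise
}
```

For example, run `scan_rs_logs /var/log/hbase datanode1` on datanode1 after a restart, or follow both files live with `tail -F /var/log/hbase/hbase-hbase-regionserver-$(hostname).{log,out}` while restarting the RegionServers from Ambari. If your file names use a different host name form, pass it explicitly as the second argument.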

Created 12-05-2017 12:16 PM

When the RegionServers are restarted, tail shows only the following (same as above) :(

```
Tue Dec 5 13:11:53 CET 2017 Starting regionserver on datanode2
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 13671
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32000
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 16000
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
```

Created 12-05-2017 02:32 PM

I found the error. By default, "hbase_regionserver_heapsize" was set to 4096m, which is more than the memory available on my servers, so the RegionServers were not able to start.

I changed that value to 1024 and everything worked!

Before: `"hbase_regionserver_heapsize" : "4096m",`
After: `"hbase_regionserver_heapsize" : "1024",`
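A rough pre-flight check along these lines could catch this kind of mismatch before deployment. It is a sketch with hypothetical helper names (`heap_to_mb`, `mem_total_mb`, `heap_fits`); it assumes Linux (`/proc/meminfo`) and treats a bare number such as `1024` as megabytes, which matches the fix above but is an assumption about how Ambari interprets the value. It only compares the heap with total physical memory, so it leaves no headroom for the DataNode, NodeManager and other daemons co-located in `hosts_group`; real sizing needs a bigger margin than this.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: does a RegionServer heap setting fit in RAM?

# heap_to_mb VALUE: print VALUE in MB. Accepts "4096m", "4g" or a bare "1024";
# a bare number is ASSUMED to mean MB. Returns 1 for anything else.
heap_to_mb() {
  local v="$1" num unit
  num="${v%[mMgG]}"        # strip one optional trailing unit letter
  unit="${v#"$num"}"       # whatever was stripped ("" if no unit)
  case "$num" in ''|*[!0-9]*) return 1 ;; esac   # digits only
  case "$unit" in
    ''|m|M) echo $(( 10#$num )) ;;
    g|G)    echo $(( 10#$num * 1024 )) ;;
  esac
}

# mem_total_mb: physical memory of this host in MB (/proc/meminfo reports kB).
mem_total_mb() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# heap_fits HEAP [TOTAL_MB]: succeed if HEAP is strictly below TOTAL_MB,
# which defaults to this host's MemTotal. No headroom for other daemons.
heap_fits() {
  local heap_mb total_mb
  heap_mb=$(heap_to_mb "$1") || { echo "unparseable heap value: $1" >&2; return 2; }
  total_mb="${2:-$(mem_total_mb)}"
  if (( heap_mb < total_mb )); then
    echo "ok: heap ${heap_mb}MB < ${total_mb}MB physical"
  else
    echo "too large: heap ${heap_mb}MB >= ${total_mb}MB physical"
    return 1
  fi
}
```

For example, `heap_fits 4096m` on one of the data nodes reports whether the default value fits, and `heap_fits 1024 3000` compares against an explicit 3000 MB. To pin the value at deployment time, the override can go into the blueprint's `configurations` array as an `hbase-env` entry, in the same shape as the existing `hadoop-env` entry: `{ "hbase-env": { "properties": { "hbase_regionserver_heapsize": "1024" } } }`.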