Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: HBase latency spikes every 10 minutes

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

HBase latency spikes every 10 minutes

- Labels:

-

Apache HBase

Created on 10-12-2021 01:01 PM - edited 10-12-2021 01:03 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there,

I have an issue on my HBase cluster. HBase version: 2.0.2.3.1.4.0-315

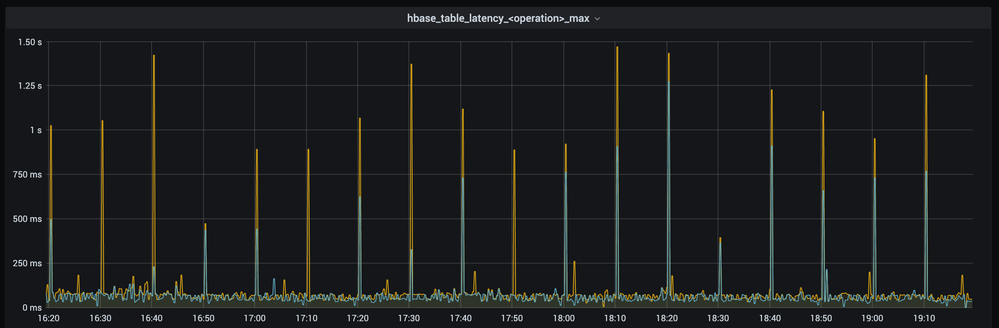

There are latency spikes every 10mins on all HBase operations, mostly visible on reads. Please have a look to the first graph below. Metric for the graph is `hbase_table_latency_gettime_max`.

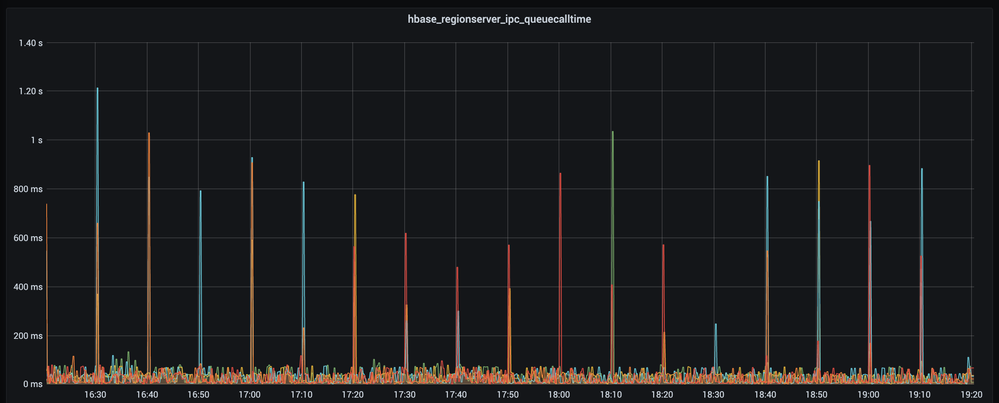

I see also spikes every 10mins on `hbase_regionserver_ipc_queuecalltime`, please have a look to the graph below:

What I've checked so far:

- It doesn't look like GC as GC doesn't correlate with spikes time.

- It is not a major compaction. I see spikes with and without it.

- It is not replication. I did a test with and without replication.

- I see nothing suspicious in logs or at least what could bring my attention: DEBUG and TRACE level was enabled.

- Memstore flushes are happening every hour.

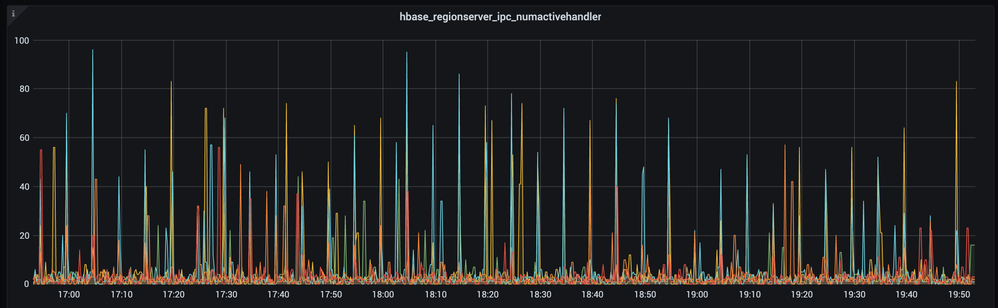

- Amount of active handlers looks good to me, it is set according to recommendations

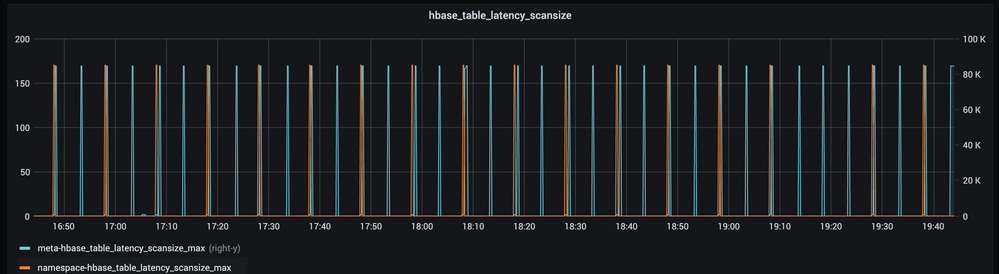

- There are scans of meta happening every 5 mins (please have a look to the graph below)

- There are scans of namespace happening every 10 mins and slightly before the spikes (please have a look to the graph below)

Could you help me and maybe share some ideas what else I could check. I would much appreciate it.

Created 10-13-2021 08:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kras,

1. Is it CDH or HDP, what is the version.

2. In regionserver logs is there “responseTooSlow” or “operationTooSlow” or any other WARN/ERROR messages. please provide log snippets.

3. How is the locality of the regions (check locality on hbase webUI, click on table, on right side there is a column shows each region locality.)

4. How many regions deployed on each RegionServer.

5. Any warning / errors in RS log around the spike?

6. Is any job trying to scan every 10 min? Which table contribute most I/O? Is there any hotspot.

7. is HDFS healthy? check DN logs, is there any slow messages around the spike? Refer to https://my.cloudera.com/knowledge/Diagnosing-Errors-Error-Slow-ReadProcessor-Error-Slow?id=73443

Regards,

Will

Created 10-18-2021 08:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @willx , thanks a lot for your questions!

> 1. Is it CDH or HDP, what is the version.

HDP 3.1.4.0-315

> 2. In regionserver logs is there “responseTooSlow” or “operationTooSlow” or any other WARN/ERROR messages. please provide log snippets.

- Yes, I have in the logs “responseTooSlow”, have a look to the example below. But it doesn't correlate with spike times and there are very few amount of them during a day.

WARN [RpcServer.default.FPBQ.Fifo.handler=22,queue=3,port=16020] ipc.RpcServer: (responseTooSlow): {"call":"Multi(org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$MultiRequest)","starttimems":1634529195627,"responsesize":2846904,"method":"Multi","param":"region= table_name,%,1539591382521.35818b60a3e8dba8d3d1fe0f0d02b292., for 13378 action(s) and 1st row key=&C>\\x15\\x86\\xE7k\\xA6\\xFD5\\ <TRUNCATED>","processingtimems":11644,"client":"ip:port","queuetimems":0,"class":"HRegionServer"}

- There are now ERRORs

- Other WARNs:

WARN [RpcServer.default.FPBQ.Fifo.handler=10,queue=10,port=16020] regionserver.RSRpcServices: Large batch operation detected (greater than 5000) (HBASE-18023). Requested Number of Rows: 12596 Client: svc-stats//ip first region in multi=table_name,\x09,1541077881948.9bcc8cee00ab92b2402730813923c2f6.WARN [RpcServer.default.FPBQ.Fifo.handler=55,queue=17,port=16020] regionserver.MultiVersionConcurrencyControl: STUCK: MultiVersionConcurrencyControl{readPoint=3971335621, writePoint=3971335632}WARN [Close-WAL-Writer-3012] asyncfs.FanOutOneBlockAsyncDFSOutputHelper: complete file /foo/WALs/host,port,1633080603058/host%2C16020%2C1633080603058.1634479683029 not finished, retry = 0For the half of a day the amount of each WARNs is

grep WARN hbase-hbase-regionserver.log | grep "2021-10-18" | grep "responseTooSlow" | wc -l

13

grep WARN hbase-hbase-regionserver.log | grep "2021-10-18" | grep "Large batch operation detected" | wc -l

4194

grep WARN hbase-hbase-regionserver.log | grep "2021-10-18" | grep "MultiVersionConcurrencyControl" | wc -l

33

grep WARN hbase-hbase-regionserver.log | grep "2021-10-18" | grep "FanOutOneBlockAsyncDFSOutputHelper" | wc -l

4

> 3. How is the locality of the regions (check locality on hbase webUI, click on table, on right side there is a column shows each region locality.)

Locality is 100% on all RS.

> 4. How many regions deployed on each RegionServer.

I have 5 RS with 79 regions each. For each RS 16GB of heap and 65gb off-heap is allocated. Hadoop cluster backed by SSD.

> 5. Any warning / errors in RS log around the spike?

No errors. Only warns I mentioned above and I would say only Large batch operation detected (greater than 5000) is popping up a lot.

> 6. Is any job trying to scan every 10 min? Which table contribute most I/O? Is there any hotspot.

No cron jobs.

> 7. is HDFS healthy? check DN logs, is there any slow messages around the spike? Refer to https://my.cloudera.com/knowledge/Diagnosing-Errors-Error-Slow-ReadProcessor-Error-Slow?id=73443

Unfortunately I don't have access to the link. There is no any WARN/ERROR on DN. HDFS looks healthy, cluster serves plenty of requests with very low latency < 10ms.

Created 10-19-2021 04:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kras,

From the evidences you provided, the most frequent warning is:

WARN [RpcServer.default.FPBQ.Fifo.handler=10,queue=10,port=16020] regionserver.RSRpcServices: Large batch operation detected (greater than 5000) (HBASE-18023). Requested Number of Rows: 12596 Client: svc-stats//ip first region in multi=table_name,\x09,1541077881948.9bcc8cee00ab92b2402730813923c2f6.which indicates when an RPC is received from a client that has more than 5000 "actions" (where an "action" is a collection of mutations for a specific row) in a single RPC. Misbehaving clients who send large RPCs to RegionServers can be malicious, causing temporary pauses via garbage collection or denial of service via crashes. The threshold of 5000 actions per RPC is defined by the property "hbase.rpc.rows.warning.threshold" in hbase-site.xml.

Please refer to this jira: https://issues.apache.org/jira/browse/HBASE-18023 for detailed explanation.

We can identify the table name is "table_name", please check which application is writing / reading this table. Simplest way is to halt this application, to see if performance is improved. If you identified the latency spike is due to this table, please improve your application logic, control your batch size.

If you have already improved the "harmful" applications but still see performance issues, I would recommend you read through this article which include most common performance issues and tuning suggestions:

This article has 5 parts, please read through it you will have ideas to tune your hbase.

This issue looks like a little complex, there will be multi-factors to impact your hbase performance. We encourage you to raise support cases with Cloudera.

Regards,

Will

If the answer helps, please accept as solution and click thumbs up.

Created 10-25-2021 12:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can confirm `Large batch operation detected` WARN is not a cause of the spikes. The client which produces traffic was identified and disabled. That wasn't resolve an issue.

WARN [RpcServer.default.FPBQ.Fifo.handler=10,queue=10,port=16020] regionserver.RSRpcServices: Large batch operation detected (greater than 5000) (HBASE-18023). Requested Number of Rows: 12596 Client: svc-stats//ip first region in multi=table_name,\x09,1541077881948.9bcc8cee00ab92b2402730813923c2f6.Created 10-22-2021 10:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There are many reasons this could happen including OS/Kernel bugs (update your system), swap, transparent huge pages, pauses by a hypervisor for the High latency issues.

1) As we are seeing the "responseTooSlow" on the region servers, please check the data node logs for the underlying issue from the data node logs.

2) In the data node logs please check we have below ERROR/WARN in the data node logs are not.

Slow BlockReceiver write data to disk cost - This indicates that there was a delay in writing the block to the OS cache or disk.

Slow BlockReceiver write packet to mirror took - This indicates that there was a delay in writing the block across the network

Slow flushOrSync took/Slow manageWriterOsCache took - This indicates that there was a delay in writing the block to the OS cache or disk

3) If we have the above ERROR/WARN we need to check the infra team and OS vendor team to fix the underlying hardware issues to overcome issue.

Created 10-25-2021 12:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @PrathapKumar to pointed out stuff to check.

So far I can confirm on the data nodes there are no:

- Slow BlockReceiver write data to disk cost

- Slow BlockReceiver write packet to mirror took

- Slow flushOrSync took/Slow manageWriterOsCache took

- Any other WARN/ERROR.

Created 10-25-2021 01:30 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @kras Thank you for writing back with your observation.

Can you please check the below details as well?

1) When the Region Server JVM reports High CPU, Open "top" Command for the Region Server PID,

2) Use "Shift H" to open the Thread View of the PID. This would show the Threads within the Region Server JVM with CPU Usage,

3) Monitor the Thread View & Identify the Thread hitting the Max CPU Usage,

4) Take Thread Dump | JStack of Region Server PID & Compare the Thread with the "top" Thread View consuming the Highest CPU.

5) Check the CUP usage of the other services that are hosted on the Region Server host.

The above Process would allow you to identify the Thread contributing towards the CPU Usage. Compare the same with other Region Server & your Team can make a Conclusive Call to identify the reasoning for CPU Utilization. Howsoever Logs are reviewed, Narrowing the Focus of JVM review would assist in identifying the Cause. Review shared Link for additional reference.

Ref: https://www.infoworld.com/article/3336222/java-challengers-6-thread-behavior-in-the-jvm.html

https://blog.jamesdbloom.com/JVMInternals.html

Thanks & Regards,

Prathap Kumar.