HBase shell throws exception at startup

Labels: Apache HBase

Created 02-11-2016 08:38 PM


I added an edge node to our cluster and am running into problems with hbase shell:

    [hirschs@bigfoot5 ~]$ hbase shell
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/zookeeper/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    NativeException: java.io.IOException: java.lang.reflect.InvocationTargetException
      initialize at /usr/hdp/2.3.2.0-2950/hbase/lib/ruby/hbase/hbase.rb:42
      (root) at /usr/hdp/2.3.2.0-2950/hbase/bin/hirb.rb:131

This works properly from the Ambari server machine, but I'd like to move users off to a separate box. It appears that something may have been overlooked when I used Ambari to set up the client-only machine, but what?

Created 02-12-2016 02:40 PM


One of our users pointed out that Phoenix was not installed on the new client. For whatever reason, it was never presented as an option in Ambari. I had installed it manually on the six other cluster machines and forgot to do this on the new one. After pulling it down with yum, everything started cooperating.

The error reporting from JRuby is not particularly helpful. Hopefully this will be addressed at some point in the future?

Created 02-11-2016 08:41 PM


The user running hbase shell should have full access to the /tmp directory.
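A quick way to check this on the edge node might look like the sketch below. The function name `can_use_dir` is hypothetical, and it only tests whether the current user can read, write, and traverse the directory; it says nothing about what the shell actually writes there.

```shell
# Hypothetical helper: succeeds only if the current user can read,
# write, and traverse the given directory (defaults to /tmp).
can_use_dir() {
    dir="${1:-/tmp}"
    [ -d "$dir" ] && [ -r "$dir" ] && [ -w "$dir" ] && [ -x "$dir" ]
}

# Run this as the user who will launch hbase shell.
if can_use_dir /tmp; then
    echo "/tmp is usable by $(id -un)"
else
    echo "/tmp is NOT fully accessible to $(id -un)"
fi
```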

Created 02-11-2016 11:50 PM


Have you installed all the client tools on the edge node?

Please see this: http://stackoverflow.com/questions/31230687/not-able-to-start-hbase-shell-in-standalone-mode

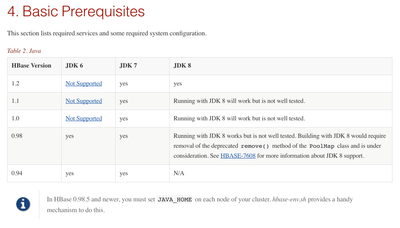

Java version mismatch

Created on 02-11-2016 11:56 PM - edited 08-19-2019 01:39 AM


Java version


Created 02-12-2016 02:46 PM


Thanks for the final followup. FYI: I believe the Phoenix installation ended up installing some other packages that are needed for the HBase shell.

Created 06-30-2016 03:47 PM


I wanted to post a quick followup on this thread. We recently found ourselves in a situation where we needed to deploy the hbase client code on an arbitrary number of machines and did not want the overhead of using Ambari. It was very straightforward to set up the Hortonworks repository reference and pull down hbase. However, even after adding Phoenix, the hbase shell would fail at startup with the dreaded (and spectacularly uninformative) exception:

    NativeException: java.io.IOException: java.lang.reflect.InvocationTargetException
      initialize at /usr/hdp/2.3.2.0-2950/hbase/lib/ruby/hbase/hbase.rb:42
      (root) at /usr/hdp/2.3.2.0-2950/hbase/bin/hirb.rb:131

After almost half a day of hair-pulling, I ran strace against the shell startup on a working node and compared it to the trace from the failing one. It turns out that the shell absolutely requires this directory path to exist (it can be empty):

/hadoop/hbase/local/jars
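For anyone wanting to repeat that comparison, here is one way it might be done. This is only a sketch, not necessarily the procedure used above. It assumes each trace was captured with something like `strace -f -o hbase-shell.strace hbase shell` on the respective node; the function names and file names are hypothetical. It lists paths that were reported as missing (ENOENT) on the failing node but not on the working one.

```shell
# Hypothetical helper: print the unique paths that a traced process
# looked for and did not find (syscalls that returned ENOENT).
missing_paths() {
    # Keep the last quoted argument of each syscall that failed with ENOENT.
    sed -n 's/.*"\([^"]*\)".*= -1 ENOENT.*/\1/p' "$1" | sort -u
}

# Hypothetical helper: print the paths missing in the failing trace ($2)
# that were not also missing in the working trace ($1).
only_missing_on_failing() {
    work_list=$(mktemp) || return 1
    fail_list=$(mktemp) || return 1
    missing_paths "$1" > "$work_list"
    missing_paths "$2" > "$fail_list"
    # comm -13 keeps only the lines unique to the second (failing) list.
    comm -13 "$work_list" "$fail_list"
    rm -f "$work_list" "$fail_list"
}

# Example (file names are placeholders):
#   only_missing_on_failing working-node.strace failing-node.strace
```

The output can still be noisy, since many ENOENT results are harmless classpath probes, so expect to read through the list by hand.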

Once I created that hierarchy the shell was able to start successfully:

    $ mkdir /hadoop
    $ chmod 1777 /hadoop
    $ mkdir -p /hadoop/hbase/local/jars
    $ chmod -R 755 /hadoop/hbase
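When rolling this out to many machines, the same steps could be wrapped in a small re-runnable function. The sketch below is an assumption-laden convenience, not part of any HDP tooling: the name `prepare_hbase_local_jars` and its optional root argument are hypothetical, and the root defaults to /hadoop as in the commands above.

```shell
# Hypothetical helper: create <root>/hbase/local/jars with the same
# permissions as the manual commands. Safe to run more than once.
prepare_hbase_local_jars() {
    root="${1:-/hadoop}"

    # Only set the sticky, world-writable mode when we create the root
    # ourselves, so an existing directory keeps its permissions.
    if [ ! -d "$root" ]; then
        mkdir "$root" || return 1
        chmod 1777 "$root" || return 1
    fi

    mkdir -p "$root/hbase/local/jars" || return 1

    # As in the original commands, this resets permissions on everything
    # under <root>/hbase, so use it on client-only nodes.
    chmod -R 755 "$root/hbase"
}
```

Calling `prepare_hbase_local_jars` with no argument, as a user allowed to create /hadoop, should be equivalent to the four commands above.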

Hopefully this will save someone else the time and aggravation.

Created 02-14-2017 06:13 PM


Install the HBase client on that node; after that you should be able to launch the HBase shell.