HDFS NameNode Capacity Alert on Fresh Install

- Labels: Apache Ambari, Apache Hadoop

Created 05-01-2017 05:25 PM

I just installed Ambari 2.5.0.3 with HDP 2.6 and I am getting a critical alert stating that my NameNode capacity is already at 100%. After running a report, I see that only 28 KB is allocated! How do I fix this?

Created 05-01-2017 07:40 PM

Do you mean DataNode capacity? Which report? Is this a virtual or bare-metal environment? What are the hardware specs?

Created 05-02-2017 02:28 PM

@slachterman Thank you for responding. The CRIT alert states that my NameNode capacity is maxed out, and the dfsadmin report on the NameNode says the same: all 28 KB of the DFS is used. When I run the same report on the DataNodes, I get the same result: each has only 28 KB allocated to DFS, and it is full as well.

As for the setup, I am using 1x physical machine with a 1 TB HDD to run the Ambari server/Edge Server/Secondary Master, 1x VM with a 1 TB HDD to run as Primary Master, and 3x VMs (each with a dedicated 2 TB HDD) to run as the DataNodes. The purpose of this cluster is to act as a DEV/Admin sandbox until I am given the funds to build a proper cluster.

On a side note, is there much difference between setting up a cluster with physical machines and with VMs? I wouldn't think so, as they both require separate networks and similar design and deployment. Then again, nothing about this setup has gone right so far...

Created on 05-02-2017 03:53 PM - edited 08-17-2019 08:04 PM

Hi @Joshua Petree, that is odd. It seems like the 2 TB disks on your data nodes are not properly associated with your HDFS storage.

What do you see in dfs.datanode.data.dir? Are you sure the listed mount points are backed by the 2 TB disks you intended?
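
For anyone checking this on a node rather than in the Ambari UI, here is a minimal Python sketch that reads the property from the contents of an hdfs-site.xml file. The value is a comma-separated list of directories. The helper name `read_data_dirs` is hypothetical, and the sample XML is for illustration only (its path is the one reported later in this thread); where the real file lives on your nodes is something you would need to confirm yourself.

```python
import xml.etree.ElementTree as ET


def read_data_dirs(xml_text, key="dfs.datanode.data.dir"):
    """Return the directories listed under `key` in hdfs-site.xml content."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == key:
            value = prop.findtext("value", default="")
            # The property holds a comma-separated list of directories.
            return [d.strip() for d in value.split(",") if d.strip()]
    return []


# Sample content for illustration only, not copied from a real cluster.
SAMPLE = """<configuration>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/hadoop/hdfs/data</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(read_data_dirs(SAMPLE))
```

Each directory returned can then be checked against the mount table to see which disk actually backs it.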

Created 05-02-2017 04:45 PM

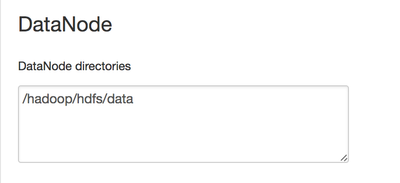

@slachterman, each VM runs the OS (CentOS 7) on its own HDD, partitioned accordingly. The DN directory points to /hadoop/hdfs/data. However, according to the OS partition sizes, the partition holding this folder is limited to 50 GB. The rest is dedicated to the /home partition, just over 1.7 TB on each DN. I did notice during the Ambari install that I was not allowed to point my NameNode or DataNode to /home. Is this the problem?
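
A quick way to confirm which partition actually backs a directory is to resolve its mount point and look at that filesystem's size. The Python sketch below does this for the two paths mentioned in this post. `backing_mount` and `report` are hypothetical helper names, not part of Hadoop or Ambari, and the sketch assumes a Linux host.

```python
import os
import shutil


def backing_mount(path):
    """Return the mount point of the filesystem that holds `path`."""
    path = os.path.realpath(path)  # follows symlinks, if any
    # If the path does not exist yet, fall back to its nearest existing parent.
    while not os.path.exists(path):
        path = os.path.dirname(path)
    # Walk upward until we reach a mount point ("/" always qualifies).
    while not os.path.ismount(path):
        path = os.path.dirname(path)
    return path


def report(path):
    """Describe the filesystem behind `path` with total and free space."""
    mount = backing_mount(path)
    usage = shutil.disk_usage(mount)
    gib = 1024 ** 3
    return (f"{path} -> mount {mount}: "
            f"total {usage.total / gib:.1f} GiB, free {usage.free / gib:.1f} GiB")


if __name__ == "__main__":
    for candidate in ("/hadoop/hdfs/data", "/home"):
        print(report(candidate))
```

If the first line reports the small root partition and the second reports the large one, HDFS is only seeing the small one.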

Created 05-02-2017 06:18 PM

@Joshua Petree yes, you want dfs.datanode.data.dir to be backed by a mount point that has the majority of the disk space available for HDFS blocks. Please upvote or accept the above answer if this is helpful in resolving your issue.

Created 05-02-2017 06:38 PM

While this didn't directly help me solve the problem, it did help me think of a way to get to the solution. Thank you for the help!

Created 05-02-2017 06:33 PM

I figured it out. Ambari does not allow the DataNodes to store data under /home (where the bulk of the free space is on a default Linux install). As a workaround, I created the directory /home/hadoop/hdfs/data and placed a symbolic link to it in the default /hadoop/hdfs path. The DataNode directory still reads /hadoop/hdfs/data, but it now redirects to /home/hadoop/hdfs/data.

I had to do this for every datanode. I hope they fix this in the near future. Thanks for the help!
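
For readers who want to repeat the workaround on several nodes, here is a rough Python sketch of the linking step. `link_data_dir` is a hypothetical helper, and the demo runs inside a scratch directory rather than touching the real /hadoop or /home paths. It does not set ownership or permissions and does not stop or start any services, so treat it as an outline of the idea rather than a tested procedure for a live DataNode.

```python
import os
import tempfile


def link_data_dir(link_path, target_dir):
    """Create `target_dir` and make `link_path` a symlink pointing to it."""
    os.makedirs(target_dir, exist_ok=True)
    if os.path.islink(link_path):
        # Already a symlink: report where it points and leave it alone.
        return os.path.realpath(link_path)
    if os.path.exists(link_path):
        # A real directory may hold block data; refuse rather than overwrite.
        raise FileExistsError(f"{link_path} exists and is not a symlink")
    os.makedirs(os.path.dirname(link_path), exist_ok=True)
    os.symlink(target_dir, link_path)
    return os.path.realpath(link_path)


if __name__ == "__main__":
    # Demonstrate inside a scratch directory instead of touching / or /home.
    with tempfile.TemporaryDirectory() as root:
        target = os.path.join(root, "home/hadoop/hdfs/data")
        link = os.path.join(root, "hadoop/hdfs/data")
        print(link_data_dir(link, target))
```

The function refuses to replace an existing real directory, since on a node that has already run HDFS that directory may contain block data.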

Created 05-02-2017 06:34 PM

@Joshua Petree can you explain further what you mean by "Ambari is not allowing the DataNodes to access the /home directories"?

Created 05-02-2017 06:43 PM

During the initial setup of HDFS in Ambari, setting the path to /home/hadoop/hdfs/data, or any path under /home for that matter, is rejected as invalid. After further digging and research, I found that this is a deliberate security measure to prevent writing to the /home directory. I had to literally take the long way home.