Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: HDFS not start after joined worker machine to ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

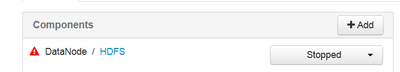

HDFS does not start after re-adding a worker machine to the cluster

- Labels: Apache Ambari, Apache Hadoop

Created on 11-20-2017 12:46 PM - edited 08-17-2019 09:42 PM

A month ago we deleted a worker machine ( worker23 ) from the cluster.

Now we have added this worker back to the cluster using API commands.

We successfully started YARN ( NodeManager ) on the worker machine,

but when we try to start HDFS we get these errors ( in the DataNode log under /var/log/hadoop/hdfs ).

How can we fix this situation?

2017-11-20 22:20:44,907 WARN common.Storage (DataStorage.java:loadBlockPoolSliceStorage(502)) - Failed to add storage directory [DISK]file:/wrk/sdd/hadoop/hdfs/data/ for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sdd/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)
2017-11-20 22:20:44,971 INFO common.Storage (BlockPoolSliceStorage.java:recoverTransitionRead(250)) - Analyzing storage directories for bpid BP-2098469986-197.14.28.53-1497173237387
2017-11-20 22:20:44,971 WARN common.Storage (BlockPoolSliceStorage.java:loadBpStorageDirectories(227)) - Failed to analyze storage directories for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sde/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)
2017-11-20 22:20:44,972 WARN common.Storage (DataStorage.java:loadBlockPoolSliceStorage(502)) - Failed to add storage directory [DISK]file:/wrk/sde/hadoop/hdfs/data/ for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sde/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)
2017-11-20 22:20:44,972 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1) service to master03.sys774.com/145.16.217.162:8020. Exiting.
java.io.IOException: All specified directories are failed to load.
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:596)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)
2017-11-20 22:20:44,973 WARN datanode.DataNode (BPServiceActor.java:run(796)) - Ending block pool service for: Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1) service to master03.sys774.com/145.16.217.162:8020
2017-11-20 22:20:44,973 INFO datanode.DataNode (BlockPoolManager.java:remove(103)) - Removed Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1)
2017-11-20 22:20:46,974 WARN datanode.DataNode (DataNode.java:secureMain(2698)) - Exiting Datanode
2017-11-20 22:20:46,984 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 0
2017-11-20 22:20:46,990 INFO datanode.DataNode (LogAdapter.java:info(47)) - SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at worker05.sys774.com/192.98.12.34

grep -i ERROR hadoop-hdfs-datanode-worker05.sys54.com.log | sort -u
2017-11-20 12:38:02,365 ERROR datanode.DataNode (BPServiceActor.java:run(767)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020 All specified directories are failed to load.
2017-11-20 12:38:07,507 ERROR datanode.DataNode (BPServiceActor.java:run(767)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020 All specified directories are failed to load.
2017-11-20 12:38:11,900 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master01.sys54.com/133.21.45.212:8020. Exiting.
2017-11-20 12:38:12,599 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020. Exiting.
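
The `grep -i ERROR` filter above only surfaces the final "Initialization failed" messages, while the directories that were actually rejected appear in the WARN lines. A minimal Python sketch for pulling those out of the DataNode log is shown here; the function name `failed_storage_dirs` and the regular expression are illustrative assumptions based on the message format seen in this thread, not part of any Hadoop tooling.

```python
import re

# Assumed message format, copied from the WARN lines quoted in this thread:
#   "Failed to add storage directory [DISK]file:<path> for block pool <BP-id>"
FAILED_DIR = re.compile(
    r"Failed to add storage directory \[DISK\]file:(?P<path>\S+) "
    r"for block pool (?P<bpid>BP-[\w.-]+)"
)


def failed_storage_dirs(log_text):
    """Return sorted, de-duplicated (path, block pool id) pairs found in the log text."""
    return sorted(
        {(m.group("path"), m.group("bpid")) for m in FAILED_DIR.finditer(log_text)}
    )


# Two shortened sample lines taken from the log in this thread.
sample = (
    "2017-11-20 22:20:44,907 WARN common.Storage - Failed to add storage directory "
    "[DISK]file:/wrk/sdd/hadoop/hdfs/data/ for block pool "
    "BP-2098469986-197.14.28.53-1497173237387\n"
    "2017-11-20 22:20:44,972 WARN common.Storage - Failed to add storage directory "
    "[DISK]file:/wrk/sde/hadoop/hdfs/data/ for block pool "
    "BP-2098469986-197.14.28.53-1497173237387\n"
)

for path, bpid in failed_storage_dirs(sample):
    print(path, bpid)
```

To run it against a real log, read the file contents into a string and pass that to `failed_storage_dirs` instead of `sample`.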

Created 11-21-2017 04:33 PM

Yes, all the disks are mounted.

Created 11-21-2017 04:47 PM

What is the value of 'dfs.datanode.data.dir'? Is the DataNode failing to start only on the new node, or on all the nodes?

Created 11-21-2017 04:55 PM

Yes, the DataNode fails only on the new node ( this node was deleted from the cluster a month ago, and now we have added it to the cluster again ).

Created 11-21-2017 04:56 PM

dfs.datanode.data.dir is OK ( all the worker machines that are working are defined with this same value ).

Created 11-21-2017 04:58 PM

dfs.datanode.data.dir is set to: /wrk/sdb/hadoop/hdfs/data,/wrk/sdc/hadoop/hdfs/data,/wrk/sdd/hadoop/hdfs/data,/wrk/sde/hadoop/hdfs/data,/wrk/sdf/hadoop/hdfs/data,/wrk/sdg/hadoop/hdfs/data,/wrk/sdh/hadoop/hdfs/data,/wrk/sdi/hadoop/hdfs/data,/wrk/sdj/hadoop/hdfs/data,/wrk/sdk/hadoop/hdfs/data

Created 11-22-2017 06:19 PM

Can you check whether /wrk/sdd/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387

and /wrk/sde/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387 are present on your new worker node?
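
One way to run this check across every configured data directory at once is sketched below in Python. It assumes only the `<data dir>/current/<block pool id>` layout visible in the paths above; the helper name `block_pool_dirs_present` is hypothetical, and the script would need to run on the worker node itself with read access to the data directories.

```python
import os


def block_pool_dirs_present(data_dirs, block_pool_id):
    """Map each <data dir>/current/<block_pool_id> path to True if it exists as a directory.

    data_dirs is the comma-separated value of dfs.datanode.data.dir.
    """
    result = {}
    for data_dir in data_dirs.split(","):
        data_dir = data_dir.strip()
        if not data_dir:
            continue  # ignore empty entries such as a trailing comma
        bp_path = os.path.join(data_dir, "current", block_pool_id)
        result[bp_path] = os.path.isdir(bp_path)
    return result


# Values copied from this thread; only the two directories named in the log are listed.
DATA_DIRS = "/wrk/sdd/hadoop/hdfs/data,/wrk/sde/hadoop/hdfs/data"
BLOCK_POOL_ID = "BP-2098469986-197.14.28.53-1497173237387"

for path, present in block_pool_dirs_present(DATA_DIRS, BLOCK_POOL_ID).items():
    print("present" if present else "missing", path)
```

Passing the full `dfs.datanode.data.dir` value instead of the two-entry `DATA_DIRS` string would cover all ten disks in one run.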

Created 12-02-2017 11:04 PM

Thank you for the answer, but we created another new worker machine to replace that machine. I think it was a waste of time to look for the problem on that machine, and better to create a new one.
