Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: HDFS not start after joined worker machine to ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

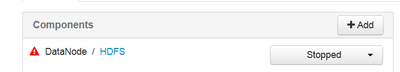

HDFS does not start after re-adding a worker machine to the cluster

- Labels: Apache Ambari, Apache Hadoop

Created on 11-20-2017 12:46 PM - edited 08-17-2019 09:42 PM

A month ago we deleted a worker machine (worker23) from the cluster.

Now we have added this worker back to the cluster using API commands.

We successfully started YARN (NodeManager) on the worker machine,

but when we try to start HDFS we get the errors below (from the logs under /var/log/hadoop/hdfs).

How can we fix this?

2017-11-20 22:20:44,907 WARN common.Storage (DataStorage.java:loadBlockPoolSliceStorage(502)) - Failed to add storage directory [DISK]file:/wrk/sdd/hadoop/hdfs/data/ for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sdd/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)

2017-11-20 22:20:44,971 INFO common.Storage (BlockPoolSliceStorage.java:recoverTransitionRead(250)) - Analyzing storage directories for bpid BP-2098469986-197.14.28.53-1497173237387

2017-11-20 22:20:44,971 WARN common.Storage (BlockPoolSliceStorage.java:loadBpStorageDirectories(227)) - Failed to analyze storage directories for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sde/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)

2017-11-20 22:20:44,972 WARN common.Storage (DataStorage.java:loadBlockPoolSliceStorage(502)) - Failed to add storage directory [DISK]file:/wrk/sde/hadoop/hdfs/data/ for block pool BP-2098469986-197.14.28.53-1497173237387
java.io.IOException: BlockPoolSliceStorage.recoverTransitionRead: attempt to load an used block storage: /wrk/sde/hadoop/hdfs/data/current/BP-2098469986-197.14.28.53-1497173237387
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.loadBpStorageDirectories(BlockPoolSliceStorage.java:218)
    at org.apache.hadoop.hdfs.server.datanode.BlockPoolSliceStorage.recoverTransitionRead(BlockPoolSliceStorage.java:251)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadBlockPoolSliceStorage(DataStorage.java:490)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:419)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:595)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)

2017-11-20 22:20:44,972 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1) service to master03.sys774.com/145.16.217.162:8020. Exiting.
java.io.IOException: All specified directories are failed to load.
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:596)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1543)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1504)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:319)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:269)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:760)
    at java.lang.Thread.run(Thread.java:745)

2017-11-20 22:20:44,973 WARN datanode.DataNode (BPServiceActor.java:run(796)) - Ending block pool service for: Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1) service to master03.sys774.com/145.16.217.162:8020

2017-11-20 22:20:44,973 INFO datanode.DataNode (BlockPoolManager.java:remove(103)) - Removed Block pool <registering> (Datanode Uuid ad0af75b-e973-475b-b525-52974df91fd1)

2017-11-20 22:20:46,974 WARN datanode.DataNode (DataNode.java:secureMain(2698)) - Exiting Datanode

2017-11-20 22:20:46,984 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 0

2017-11-20 22:20:46,990 INFO datanode.DataNode (LogAdapter.java:info(47)) - SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at worker05.sys774.com/192.98.12.34

grep -i ERROR hadoop-hdfs-datanode-worker05.sys54.com.log | sort -u

2017-11-20 12:38:02,365 ERROR datanode.DataNode (BPServiceActor.java:run(767)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020 All specified directories are failed to load.

2017-11-20 12:38:07,507 ERROR datanode.DataNode (BPServiceActor.java:run(767)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020 All specified directories are failed to load.

2017-11-20 12:38:11,900 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master01.sys54.com/133.21.45.212:8020. Exiting.

2017-11-20 12:38:12,599 ERROR datanode.DataNode (BPServiceActor.java:run(772)) - Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to master03.sys54.com/133.21.45.211:8020. Exiting.
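For anyone who wants to summarize a long DataNode log like the one above, here is a minimal Python sketch. It pulls out the block pool paths named in the "attempt to load an used block storage" message and collects the unique ERROR lines, roughly like the `grep -i ERROR ... | sort -u` command. The function names are hypothetical, and the regular expression is only an assumption based on the message text quoted in this thread.

```python
import re

# Captures the path that follows the message text seen in the log above.
# The pattern is an assumption based on this thread, not a Hadoop API.
USED_STORAGE_RE = re.compile(r"attempt to load an used block storage:\s*(\S+)")


def failing_block_pool_dirs(log_text):
    """Return the sorted, de-duplicated block pool paths named in the error."""
    return sorted(set(USED_STORAGE_RE.findall(log_text)))


def unique_error_lines(log_text):
    """Return unique lines containing 'error' in any case, sorted.

    Similar in spirit to `grep -i ERROR file | sort -u`; the exact sort
    order may differ from sort(1) depending on the locale.
    """
    return sorted({line for line in log_text.splitlines() if "error" in line.lower()})


if __name__ == "__main__":
    import sys

    # Usage: python summarize_log.py <path-to-datanode-log>
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        text = fh.read()
    for path in failing_block_pool_dirs(text):
        print("failing block pool dir:", path)
    for line in unique_error_lines(text):
        print(line)
```

This only summarizes what the log already says; it does not explain why the directories failed to load.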

Created 11-20-2017 11:51 PM

I think the clusterID of the DataNode does not match that of the NameNode.

Check the VERSION file on the NameNode and on the DataNode.

On the NameNode, the VERSION file is at <<dfs.namenode.name.dir>>/current/VERSION

On the DataNode, the VERSION file is at <<dfs.datanode.data.dir>>/current/VERSION

The clusterID must be the same in both for the HDFS cluster to start.
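The comparison described above could be scripted along these lines. This is only a sketch: it assumes the VERSION files are plain `key=value` text with `#` comment lines, and the function names and the example paths are hypothetical placeholders for your own `dfs.namenode.name.dir` and `dfs.datanode.data.dir` values.

```python
from pathlib import Path


def read_version_file(path):
    """Parse a VERSION file (key=value lines, '#' comments) into a dict."""
    props = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and the timestamp comment
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def cluster_ids_match(namenode_version, datanode_version):
    """Return True only if both files carry the same, non-missing clusterID."""
    nn_id = read_version_file(namenode_version).get("clusterID")
    dn_id = read_version_file(datanode_version).get("clusterID")
    return nn_id is not None and nn_id == dn_id


if __name__ == "__main__":
    import sys

    # Usage: python check_cluster_id.py <namenode VERSION> <datanode VERSION>
    # e.g. <dfs.namenode.name.dir>/current/VERSION and
    #      <dfs.datanode.data.dir>/current/VERSION (copied to one host).
    nn_file, dn_file = sys.argv[1], sys.argv[2]
    print("clusterID match:", cluster_ids_match(nn_file, dn_file))
```

Since the two files live on different hosts, you would need to copy one of them over (or run the parser on each host and compare the printed values by hand).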

Created 11-21-2017 12:04 AM

The clusterID is the same on the DataNode and on the NameNode.

Created 11-21-2017 12:09 AM

Do you mean that the layoutVersion in the file should be the same?

Created 11-22-2017 06:06 PM

Do you have any updates?

Created 11-21-2017 12:53 AM

I meant the clusterID.

Could you please provide the complete DataNode log?

Created 11-21-2017 06:30 AM

See the updated question; all the logs have been updated.

Created 11-22-2017 06:06 PM

Do you have any updates?

Created 11-21-2017 11:45 AM

Do you have /wrk/sdd/ mounted on the new worker node? Check whether the path '/wrk/sdd/hadoop/hdfs/data/' exists on the new node.

Also check that the value of "dfs.datanode.data.dir" is /hadoop/hdfs/data. Can you please attach the output of the 'df -h' command?

Thanks,

Aditya
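The checks suggested above (does the path exist, and which mount is it on) can be sketched in Python as follows. The function names are hypothetical, and the directory list in the usage section is simply copied from the paths in the log of this thread; substitute your own `dfs.datanode.data.dir` entries.

```python
import os


def mount_point_of(path):
    """Walk up from path until a mount point is reached and return it."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):
        path = os.path.dirname(path)
    return path


def check_data_dirs(data_dirs):
    """Report, per directory, whether it exists, is writable, and its mount."""
    report = {}
    for d in data_dirs:
        report[d] = {
            "exists": os.path.isdir(d),
            "writable": os.access(d, os.W_OK),
            "mount_point": mount_point_of(d),
        }
    return report


if __name__ == "__main__":
    # Paths taken from the log in this thread; adjust to your configuration.
    dirs = ["/wrk/sdd/hadoop/hdfs/data", "/wrk/sde/hadoop/hdfs/data"]
    for d, info in check_data_dirs(dirs).items():
        print(d, info)
```

If a data directory reports a mount point of `/` instead of its expected disk (for example `/wrk/sdd`), the disk is probably not mounted and the DataNode would be looking at an empty or stale directory on the root filesystem. Run the script as the user that starts the DataNode so the writability check is meaningful.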

Created 11-21-2017 04:32 PM

/dev/sdd  20511312  11106652   9388276  55%  /wrk/sdd
/dev/sdb  20511312  10539404   9955524  52%  /wrk/sdb
/dev/sdc  20511312   9175548  11319380  45%  /wrk/sdc
/dev/sde  20511312  12263192   8231736  60%  /wrk/sde

As for the path: yes, it exists!