Cloudera Community: Support Questions

HDP 2.6 - Can't upload files with more than 1MB in Ambari file view

Created on 03-07-2018 07:58 PM - edited 08-18-2019 01:54 AM

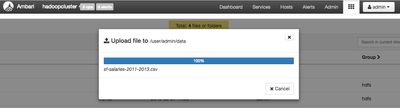

Hi, I can't upload files larger than 1 MB in the Ambari Files View. The upload starts and reaches 100%, but the popup never closes and the upload never finishes.

I'm using the hdp26-data-science-spark2 blueprint.

Thanks,

Marcelo

Created 03-08-2018 01:09 AM

@Marcelo Dotti

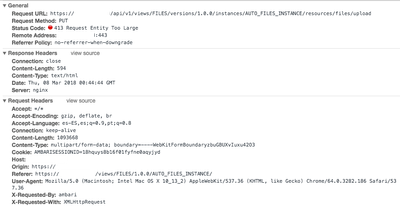

The problem I see here is that you are not accessing the Ambari UI directly. I see port 443 in your URL ("https://........:443"), which would mean that Ambari itself is SSL-enabled and listening on port 443. I don't think SSL on port 443 is enabled on your Ambari, though, so it may be something else, such as an nginx proxy, that listens for client requests on secure port 443 in front of Ambari and does not allow uploading large data using the PUT method.

Can you please confirm your Ambari version and whether there is any proxy in between?
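To check the SSL side of this on the Ambari server host, one option is to read Ambari's own settings. This is a sketch that assumes the standard config file /etc/ambari-server/conf/ambari.properties and the usual property names api.ssl and client.api.ssl.port (the HTTPS port, normally 8443); ambari_serves_443 is a hypothetical helper name. If it reports that Ambari is not configured for HTTPS on 443, whatever answers on 443 is most likely a proxy in front of it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if Ambari's own config says it serves
# HTTPS on port 443. Assumes the usual ambari.properties keys:
#   api.ssl=true              -> Ambari's web/API server uses SSL
#   client.api.ssl.port=NNNN  -> HTTPS port (Ambari's default is 8443)
ambari_serves_443() {
  props=${1:-/etc/ambari-server/conf/ambari.properties}
  # Take the last occurrence of each key, as a Java properties loader would.
  ssl=$(sed -n 's/^api\.ssl=//p' "$props" | tail -n 1)
  ssl_port=$(sed -n 's/^client\.api\.ssl\.port=//p' "$props" | tail -n 1)
  # Missing keys fall back to Ambari's defaults: SSL off, port 8443.
  [ "${ssl:-false}" = "true" ] && [ "${ssl_port:-8443}" = "443" ]
}

# Usage on the Ambari server host:
#   if ambari_serves_443; then echo "Ambari itself listens on 443"
#   else echo "Port 443 is probably a proxy in front of Ambari"; fi
```

The check only reads configuration; it does not prove what is actually listening on 443, so treat a negative result as a strong hint rather than proof.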

Created 03-07-2018 08:45 PM

I was able to catch the error message:

413 Request Entity Too Large

But I don't know how to fix this error, so I'd appreciate some help.

Thanks

Created 03-08-2018 12:09 AM

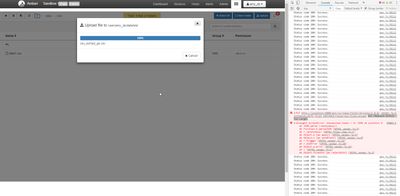

1 MB is far too small to be a real limit. I just put a file of more than 100 MB into HDFS using the Ambari Files View, and it worked well.

Can you please try the following:

1. Try putting the file into some other directory.

2. Please check whether the 413 Request Entity Too Large error is coming from some other source. For example, do you have a router or a web server in front of Ambari, or are you accessing the Ambari UI directly without any proxy?
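One way to tell where a 413 comes from is to save the full HTTP response and look for nginx's fingerprints: nginx normally sends a "Server: nginx" header, and its stock error pages contain a "<center>nginx</center>" line (possibly with a version). The helper name looks_like_nginx and the curl usage line are illustrative; whether a plain upload to the root URL hits the same limit depends on how the proxy is configured.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a saved HTTP response (headers + body)
# looks like it was generated by nginx rather than by Ambari itself.
looks_like_nginx() {
  # Match a "Server: nginx..." header or nginx's default error-page footer.
  grep -qiE '^server:[[:space:]]*nginx|<center>nginx' "$1"
}

# Usage (placeholder host; -i keeps response headers in the saved output):
#   curl -sk -i --data-binary @big-file.csv "https://<ambari-host>:443/" > resp.txt
#   looks_like_nginx resp.txt && echo "The 413 came from nginx in front of Ambari"
```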

Created on 03-08-2018 12:49 AM - edited 08-18-2019 01:53 AM

You're right. I uploaded the same files (several larger than 1 MB and one of 60 MB) to the HDP 2.5 sandbox without problems, but now I have created a cluster with Cloudbreak using the hdp26-data-science-spark2 blueprint, and I can't upload these files.

I'm using the "user/admin/data" folder in both HDP 2.6 and HDP 2.5, but the upload fails in HDP 2.6 and runs without problems in HDP 2.5.

HDP 2.5:

HDP 2.6:

Created on 03-08-2018 01:26 AM - edited 08-18-2019 01:53 AM

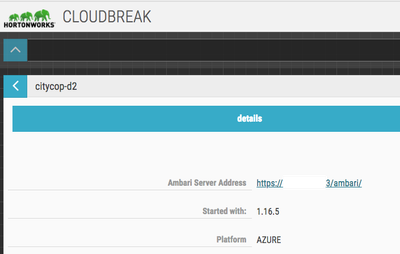

You're right!

I was using the Ambari link from Cloudbreak, and that link uses HTTPS through an nginx proxy, which can't upload files larger than 1 MB.

I tried port 8080 instead and it worked!

Thank you very much.
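If port 8080 is not reachable and uploads have to go through the Cloudbreak link, another possible fix is to raise the proxy's request-size limit. In nginx, the client_max_body_size directive caps the request body size and oversize requests are rejected with 413; its default is 1m, which matches the 1 MB limit seen here. This sketch assumes the gateway really is nginx, that you can edit its configuration, and that its nginx.conf includes conf.d/*.conf inside the http {} block. The helper name write_upload_limit and the file name upload-size.conf are hypothetical. A value set in a server or location block overrides the http-level one, so check for existing client_max_body_size lines first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an nginx snippet raising the request body limit.
# Assumes the target directory is included inside nginx's http {} block.
write_upload_limit() {
  conf_dir=$1   # e.g. /etc/nginx/conf.d (assumed location)
  limit=$2      # e.g. 1024m; 0 disables nginx's body-size check entirely
  printf '%s\n' \
    '# Allow large Ambari Files View uploads through the proxy' \
    "client_max_body_size ${limit};" > "${conf_dir}/upload-size.conf"
}

# Usage on the proxy host, as root (validate before reloading):
#   write_upload_limit /etc/nginx/conf.d 1024m && nginx -t && nginx -s reload
```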

Created on 08-16-2018 07:31 AM - edited 08-18-2019 01:53 AM

I installed the sandbox on VirtualBox but cannot upload a .csv file larger than 200 MB to HDFS using the Ambari interface: I get a 413 (Request Entity Too Large) message and the upload bar gets stuck at 100%.

Files smaller than 200 MB upload fine.

I am using localhost:8080 to access Ambari. I had uploaded files of up to 4 GB using the Files View on the Docker sandbox on Windows, but I could not upload the same file on VirtualBox. I have allocated 8 GB of RAM and plenty of disk space to VirtualBox.

Is there a file size cap property somewhere in Ambari, or in the web server Hortonworks uses? I have no idea which web server that is.

Created 08-29-2018 04:09 PM

I worked around a similar problem with the scp command: I copy the file to the sandbox, then connect through SSH and add the file to HDFS.

```shell
scp -P 2201 <pathToLocalFile> root@127.0.0.1:~
```
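Both steps of this workaround can be wrapped in one helper. This is a sketch: port 2201 and root@127.0.0.1 come from the post, while the helper names run and upload_via_ssh, the SANDBOX_SSH_PORT and DRY_RUN variables, and the default HDFS directory /user/admin/data are assumptions. It also assumes file names without spaces, and the remote user needs write permission on the target HDFS directory.

```shell
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN is set (hypothetical).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: scp the file into root's home on the sandbox, then
# ssh in and put it into HDFS. In the ssh command, ~ is expanded by the
# remote shell, and -f overwrites an existing file in HDFS.
upload_via_ssh() {
  local_file=$1
  hdfs_dir=${2:-/user/admin/data}
  port=${SANDBOX_SSH_PORT:-2201}
  name=$(basename "$local_file")
  run scp -P "$port" "$local_file" "root@127.0.0.1:~" &&
    run ssh -p "$port" root@127.0.0.1 "hdfs dfs -put -f ~/$name $hdfs_dir/"
}

# Usage (hypothetical file name):
#   upload_via_ssh ./sales.csv /user/admin/data
```

Running it once with DRY_RUN=1 shows the exact scp and ssh commands before anything is copied.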