Cloudera Community: Support Questions

HDP 3.0 Cloudbreak Deployment possible?

Created 07-24-2018 07:04 PM

Hi,

I just got a Cloudbreak 2.7.1 deployer server running. The press release for HDP 3.0 mentioned that it was possible to deploy 3.0 using Cloudbreak, but HDP 3.0 isn't an available option under 2.7.1 (only HDP 2.6 is offered). Do I need to upgrade Cloudbreak or add an MPack, or how else should I deploy HDP 3.0 via Cloudbreak?

Paul

Created on 07-24-2018 09:49 PM - edited 08-17-2019 10:58 PM

Paul,

I ran into the same issue with CB 2.7.1. I'm sure there is a better way to resolve it, but here are the steps I used to first create an HDP 3.0 blueprint and then create an HDP 3.0 cluster from it with CB 2.7.1:

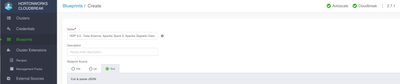

Step 1: Click 'Blueprints' in the left navigation pane, then click 'CREATE BLUEPRINT' and enter a name, for instance 'HDP 3.0 - Data Science: Apache Spark 2, Apache Zeppelin'.

Step 2: Paste the following JSON into the 'Text' field and click 'CREATE'.

```json
{
  "Blueprints": {
    "blueprint_name": "hdp30-data-science-spark2-v4",
    "stack_name": "HDP",
    "stack_version": "3.0"
  },
  "settings": [
    {
      "recovery_settings": []
    },
    {
      "service_settings": [
        {
          "name": "HIVE",
          "credential_store_enabled": "false"
        }
      ]
    },
    {
      "component_settings": []
    }
  ],
  "configurations": [
    {
      "core-site": {
        "fs.trash.interval": "4320"
      }
    },
    {
      "hdfs-site": {
        "dfs.namenode.safemode.threshold-pct": "0.99"
      }
    },
    {
      "hive-site": {
        "hive.exec.compress.output": "true",
        "hive.merge.mapfiles": "true",
        "hive.server2.tez.initialize.default.sessions": "true",
        "hive.server2.transport.mode": "http"
      }
    },
    {
      "mapred-site": {
        "mapreduce.job.reduce.slowstart.completedmaps": "0.7",
        "mapreduce.map.output.compress": "true",
        "mapreduce.output.fileoutputformat.compress": "true"
      }
    },
    {
      "yarn-site": {
        "yarn.acl.enable": "true"
      }
    }
  ],
  "host_groups": [
    {
      "name": "master",
      "configurations": [],
      "components": [
        { "name": "APP_TIMELINE_SERVER" },
        { "name": "HDFS_CLIENT" },
        { "name": "HISTORYSERVER" },
        { "name": "HIVE_CLIENT" },
        { "name": "HIVE_METASTORE" },
        { "name": "HIVE_SERVER" },
        { "name": "JOURNALNODE" },
        { "name": "MAPREDUCE2_CLIENT" },
        { "name": "METRICS_COLLECTOR" },
        { "name": "METRICS_MONITOR" },
        { "name": "NAMENODE" },
        { "name": "RESOURCEMANAGER" },
        { "name": "SECONDARY_NAMENODE" },
        { "name": "LIVY2_SERVER" },
        { "name": "SPARK2_CLIENT" },
        { "name": "SPARK2_JOBHISTORYSERVER" },
        { "name": "TEZ_CLIENT" },
        { "name": "YARN_CLIENT" },
        { "name": "ZEPPELIN_MASTER" },
        { "name": "ZOOKEEPER_CLIENT" },
        { "name": "ZOOKEEPER_SERVER" }
      ],
      "cardinality": "1"
    },
    {
      "name": "worker",
      "configurations": [],
      "components": [
        { "name": "HIVE_CLIENT" },
        { "name": "TEZ_CLIENT" },
        { "name": "SPARK2_CLIENT" },
        { "name": "DATANODE" },
        { "name": "METRICS_MONITOR" },
        { "name": "NODEMANAGER" }
      ],
      "cardinality": "1+"
    },
    {
      "name": "compute",
      "configurations": [],
      "components": [
        { "name": "HIVE_CLIENT" },
        { "name": "TEZ_CLIENT" },
        { "name": "SPARK2_CLIENT" },
        { "name": "METRICS_MONITOR" },
        { "name": "NODEMANAGER" }
      ],
      "cardinality": "1+"
    }
  ]
}
```

Step 3: You should now see the newly added HDP 3.0 blueprint and be able to create a cluster from it.
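Before pasting the JSON in Step 2, it can help to check it locally, since a stray character picked up when copying it out of a forum post can make the blueprint invalid. The sketch below is not part of Cloudbreak: `check_blueprint` is a hypothetical helper that parses the text with Python's standard `json` module, confirms the `stack_name`/`stack_version` pair is HDP 3.0, and flags host groups with no components, repeated components, or no `cardinality`. `BLUEPRINT_TEXT` is a trimmed, illustrative copy of the blueprint; in practice you would load the full JSON.

```python
import json

# Trimmed copy of the blueprint above, keeping only the fields this check reads.
# In practice, read the full JSON you intend to paste into Cloudbreak instead.
BLUEPRINT_TEXT = """
{
  "Blueprints": {"blueprint_name": "hdp30-data-science-spark2-v4",
                 "stack_name": "HDP", "stack_version": "3.0"},
  "host_groups": [
    {"name": "master", "cardinality": "1",
     "components": [{"name": "NAMENODE"}, {"name": "RESOURCEMANAGER"},
                    {"name": "ZEPPELIN_MASTER"}]},
    {"name": "worker", "cardinality": "1+",
     "components": [{"name": "DATANODE"}, {"name": "NODEMANAGER"}]},
    {"name": "compute", "cardinality": "1+",
     "components": [{"name": "NODEMANAGER"}]}
  ]
}
"""


def check_blueprint(text, expected_stack=("HDP", "3.0")):
    """Return a list of problems found in blueprint JSON (empty list if none).

    json.loads raises json.JSONDecodeError on malformed text, so copy/paste
    damage surfaces here rather than in the Cloudbreak UI.
    """
    bp = json.loads(text)
    problems = []
    header = bp.get("Blueprints", {})
    stack = (header.get("stack_name"), header.get("stack_version"))
    if stack != tuple(expected_stack):
        problems.append(f"stack is {stack}, expected {tuple(expected_stack)}")
    for group in bp.get("host_groups", []):
        label = group.get("name")
        names = [c.get("name") for c in group.get("components", [])]
        if not names:
            problems.append(f"host group {label!r} has no components")
        repeated = sorted({n for n in names if names.count(n) > 1})
        if repeated:
            problems.append(f"host group {label!r} lists {repeated} more than once")
        if "cardinality" not in group:
            problems.append(f"host group {label!r} has no cardinality")
    return problems


if __name__ == "__main__":
    print(check_blueprint(BLUEPRINT_TEXT) or "no problems found")
```

An empty list only means these basic checks passed; Cloudbreak and Ambari still perform their own validation when the blueprint is created and the cluster is installed.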

Hope it helps!

If you found it resolved the issue, please "accept" the answer, thanks.

Created 07-26-2018 03:39 PM

Thanks dsun, that worked for me. Note that I used Dominika's blueprint below, but I don't know whether it makes any difference.

Created 07-26-2018 05:59 PM

Created 07-25-2018 06:31 PM

@Paul Norris It is possible to use Cloudbreak 2.7.1 to deploy HDP 3.0; however, Cloudbreak 2.7.1 does not include any default blueprints for HDP 3.0, so if you want to use HDP 3.0 you must first:

1) Create an HDP 3.0 blueprint. Here is an example:

```json
{
  "Blueprints": {
    "blueprint_name": "hdp30-data-science-spark2-v4",
    "stack_name": "HDP",
    "stack_version": "3.0"
  },
  "settings": [
    {
      "recovery_settings": []
    },
    {
      "service_settings": [
        {
          "name": "HIVE",
          "credential_store_enabled": "false"
        }
      ]
    },
    {
      "component_settings": []
    }
  ],
  "configurations": [
    {
      "core-site": {
        "fs.trash.interval": "4320"
      }
    },
    {
      "hdfs-site": {
        "dfs.namenode.safemode.threshold-pct": "0.99"
      }
    },
    {
      "hive-site": {
        "hive.exec.compress.output": "true",
        "hive.merge.mapfiles": "true",
        "hive.server2.tez.initialize.default.sessions": "true",
        "hive.server2.transport.mode": "http"
      }
    },
    {
      "mapred-site": {
        "mapreduce.job.reduce.slowstart.completedmaps": "0.7",
        "mapreduce.map.output.compress": "true",
        "mapreduce.output.fileoutputformat.compress": "true"
      }
    },
    {
      "yarn-site": {
        "yarn.acl.enable": "true"
      }
    }
  ],
  "host_groups": [
    {
      "name": "master",
      "configurations": [],
      "components": [
        { "name": "APP_TIMELINE_SERVER" },
        { "name": "HDFS_CLIENT" },
        { "name": "HISTORYSERVER" },
        { "name": "HIVE_CLIENT" },
        { "name": "HIVE_METASTORE" },
        { "name": "HIVE_SERVER" },
        { "name": "JOURNALNODE" },
        { "name": "MAPREDUCE2_CLIENT" },
        { "name": "METRICS_COLLECTOR" },
        { "name": "METRICS_MONITOR" },
        { "name": "NAMENODE" },
        { "name": "RESOURCEMANAGER" },
        { "name": "SECONDARY_NAMENODE" },
        { "name": "LIVY2_SERVER" },
        { "name": "SPARK2_CLIENT" },
        { "name": "SPARK2_JOBHISTORYSERVER" },
        { "name": "TEZ_CLIENT" },
        { "name": "YARN_CLIENT" },
        { "name": "ZEPPELIN_MASTER" },
        { "name": "ZOOKEEPER_CLIENT" },
        { "name": "ZOOKEEPER_SERVER" }
      ],
      "cardinality": "1"
    },
    {
      "name": "worker",
      "configurations": [],
      "components": [
        { "name": "HIVE_CLIENT" },
        { "name": "TEZ_CLIENT" },
        { "name": "SPARK2_CLIENT" },
        { "name": "DATANODE" },
        { "name": "METRICS_MONITOR" },
        { "name": "NODEMANAGER" }
      ],
      "cardinality": "1+"
    },
    {
      "name": "compute",
      "configurations": [],
      "components": [
        { "name": "HIVE_CLIENT" },
        { "name": "TEZ_CLIENT" },
        { "name": "SPARK2_CLIENT" },
        { "name": "METRICS_MONITOR" },
        { "name": "NODEMANAGER" }
      ],
      "cardinality": "1+"
    }
  ]
}
```

2) Upload the blueprint to Cloudbreak (you can paste it under the Blueprints menu item).

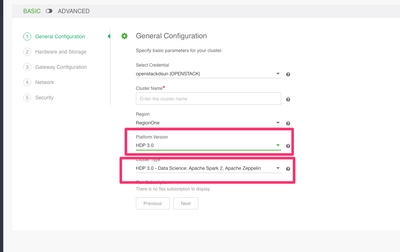

3) When creating a cluster:

- Under General Configuration, select Platform Version > HDP-3.0; your blueprint should then appear under Cluster Type.

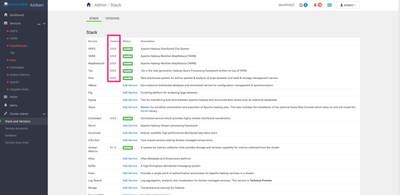

- Under Image Settings, specify Ambari 2.7 and HDP 3.0 public repos (you can find them in Ambari 2.7 docs: https://docs.hortonworks.com/HDPDocuments/Ambari-2.7.0.0/bk_ambari-installation/content/ch_obtaining....
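The observation that the blueprint only appears under Cluster Type once Platform Version > HDP-3.0 is selected suggests that Cloudbreak matches each blueprint's `stack_name` and `stack_version` against the selected platform version. The sketch below is a rough model of that behaviour under that assumption, not Cloudbreak's actual code; the `BLUEPRINTS` list, the `example-hdp26-blueprint` name and all helpers are hypothetical. It also reads the host-group `cardinality` hints as lower bounds to estimate the smallest cluster the blueprint allows.

```python
# Hypothetical blueprint list; in Cloudbreak the real list is managed by the server.
# Only the fields used below are included.
BLUEPRINTS = [
    {
        "Blueprints": {"blueprint_name": "hdp30-data-science-spark2-v4",
                       "stack_name": "HDP", "stack_version": "3.0"},
        "host_groups": [{"name": "master", "cardinality": "1"},
                        {"name": "worker", "cardinality": "1+"},
                        {"name": "compute", "cardinality": "1+"}],
    },
    {
        # Hypothetical HDP 2.6 blueprint, standing in for the 2.7.1 defaults.
        "Blueprints": {"blueprint_name": "example-hdp26-blueprint",
                       "stack_name": "HDP", "stack_version": "2.6"},
        "host_groups": [{"name": "master", "cardinality": "1"}],
    },
]


def blueprints_for_platform(blueprints, platform_version):
    """Names of blueprints whose stack matches a platform label such as 'HDP-3.0'.

    Assumes the label has the form '<stack_name>-<stack_version>'.
    """
    stack_name, _, stack_version = platform_version.partition("-")
    return [
        bp["Blueprints"]["blueprint_name"]
        for bp in blueprints
        if bp["Blueprints"]["stack_name"] == stack_name
        and bp["Blueprints"]["stack_version"] == stack_version
    ]


def min_count(cardinality):
    """Lower bound from a cardinality hint: '1' -> 1, '1+' -> 1, '1-3' -> 1.

    Any other form (e.g. 'ALL') is treated as 0 in this sketch.
    """
    head = cardinality.split("-")[0].rstrip("+")
    return int(head) if head.isdigit() else 0


def minimum_nodes(blueprint):
    """Map each host group to the smallest node count its cardinality allows."""
    return {g["name"]: min_count(g["cardinality"]) for g in blueprint["host_groups"]}


if __name__ == "__main__":
    print(blueprints_for_platform(BLUEPRINTS, "HDP-3.0"))  # ['hdp30-data-science-spark2-v4']
    print(minimum_nodes(BLUEPRINTS[0]))  # {'master': 1, 'worker': 1, 'compute': 1}
```

Read this way, the data science blueprint needs at least three nodes (one each in master, worker and compute); treat that as an estimate and check what Cloudbreak actually enforces when you size the host groups.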

Created 07-26-2018 03:38 PM

Thanks, Dominika. I used your blueprint and didn't seem to need to add the repos; the correct information was already filled in automatically.

Created 07-26-2018 09:29 PM

Great! I wasn't sure if Cloudbreak would do it correctly.

Created 01-17-2019 02:15 PM

Hello Dominika, the blueprint in the example above is for Data Science: Apache Spark 2, Apache Zeppelin.

Do you have a sample blueprint for HDP 3.0 - EDW-ETL: Apache Hive, Apache Spark 2 that I can run on Cloudbreak 2.7.x?

Created 01-17-2019 06:25 PM

Created 01-18-2019 05:18 AM
