Cloudera Community: Support Questions

Hadoop backup with distcp: org.apache.hadoop.security.AccessControlException: Permission denied: user=XXXX, access=EXECUTE

Labels: Apache Ranger, HDFS, Kerberos

Hi,

We have a script that backs up data to a remote Hadoop cluster using distcp. Both clusters are secured with Kerberos, and both are version 2.6.4. The backup fails with the following error:

org.apache.hadoop.security.AccessControlException: Permission denied: user=XXXXXX, access=EXECUTE, inode="/databank/.snapshot/databank_201904250000":hdfs:hdfs:d---------

There is a Ranger policy that grants user XXXXX read and execute permissions in HDFS. After running kinit as user XXXXX, I can list the contents of HDFS using:

hdfs dfs -ls

I am at a complete loss as to why user XXXXX gets an EXECUTE access denial.

Please advise.
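Each of these messages already names the inode on which the check failed, together with its owner, group and mode. As a minimal Python sketch, assuming the message format shown in this thread (the regex and the `parse_denial` helper are hypothetical, not part of Hadoop), the fields can be pulled apart like this:

```python
import re
from typing import NamedTuple

# Hypothetical pattern for messages of the form seen in this thread:
#   Permission denied: user=<user>, access=<ACCESS>, inode="<path>":<owner>:<group>:<mode>
DENIAL_RE = re.compile(
    r'Permission denied: user=(?P<user>[^,]+), access=(?P<access>[A-Z_]+), '
    r'inode="(?P<path>[^"]+)":(?P<owner>[^:]+):(?P<group>[^:]+):'
    r'(?P<mode>[-dlrwxtTsS]{10})'
)


class Denial(NamedTuple):
    user: str
    access: str
    path: str
    owner: str
    group: str
    mode: str


def parse_denial(message: str) -> Denial:
    """Extract user, access type and inode details from a denial message."""
    match = DENIAL_RE.search(message)
    if match is None:
        raise ValueError("not an HDFS 'Permission denied' message")
    return Denial(**match.groupdict())


d = parse_denial(
    "org.apache.hadoop.security.AccessControlException: Permission denied: "
    'user=XXXXXX, access=EXECUTE, '
    'inode="/databank/.snapshot/databank_201904250000":hdfs:hdfs:d---------'
)
print(d.access, d.owner, d.group, d.mode)  # EXECUTE hdfs hdfs d---------
```

The mode `d---------` sets no permission bits for owner, group or others, so the native HDFS check on that inode can only pass for the HDFS superuser, whose permission checks always succeed.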

Created 10-06-2020 07:25 AM

Doesn't it look strange that the permissions are:

"/databank/.snapshot/databank_201904250000":hdfs:hdfs:d---------

The normal permissions should be rwx. Try that and report back.

Created 10-06-2020 10:14 AM

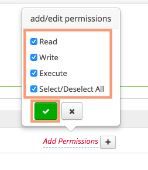

You should toggle the policy to RECURSIVE and grant RWX permissions. The HDFS resource path should look like this:

/databank/*

The selection should look like the screenshot above.

Hope that helps

Created 10-08-2020 04:43 AM

The same problem occurs with other directories that have different permissions:

org.apache.hadoop.security.AccessControlException: Permission denied: user=XXXXX, access=READ, inode="/user/.snapshot/user_201806150000":w93651:hdfs:drwx------

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=XXXXX, access=READ, inode="/user/.snapshot/user_201806150000":w93651:hdfs:drwx------

Again, the Ranger audit log says that no policy is applied; the HDFS ACL is used instead.
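When no Ranger policy applies, the decision comes from the native HDFS check, which works like POSIX: the user is matched to exactly one class (owner, then group, then others) and only that class's bits are tested. A simplified Python sketch of that rule follows; `posix_allows` is a hypothetical helper that ignores HDFS ACL entries and the superuser bypass, and the group `users` is an assumption standing in for XXXXX's groups, which the thread does not show (in particular, XXXXX is assumed not to be in the `hdfs` group):

```python
from __future__ import annotations

# Position of each access type inside an rwx triplet.
BIT_INDEX = {"READ": 0, "WRITE": 1, "EXECUTE": 2}


def posix_allows(mode: str, owner: str, group: str,
                 user: str, user_groups: set[str], access: str) -> bool:
    """Simplified POSIX-style check on a 10-character mode such as 'drwx------'.

    Picks ONE permission class for the user (owner, then group, then others)
    and tests only that class. Does not model HDFS ACL entries or the HDFS
    superuser, whose checks always succeed.
    """
    perms = mode[1:]  # drop the file-type character ('d' or '-')
    if user == owner:
        triplet = perms[0:3]
    elif group in user_groups:
        triplet = perms[3:6]
    else:
        triplet = perms[6:9]
    # 't' in the execute position means execute plus sticky bit.
    return triplet[BIT_INDEX[access]] in "rwxt"


# Second error in this thread: owner-only directory, read by another user.
print(posix_allows("drwx------", "w93651", "hdfs", "XXXXX", {"users"}, "READ"))
# The owner itself passes the same check.
print(posix_allows("drwx------", "w93651", "hdfs", "w93651", {"hdfs"}, "READ"))
# Hypothetical directory that grants r-x to others.
print(posix_allows("drwxr-xr-x", "w93651", "hdfs", "XXXXX", {"users"}, "READ"))
```

The three calls print `False`, `True` and `True`: with `drwx------`, anyone other than w93651 (and outside the `hdfs` group) falls into the empty "others" class, while an r-x grant for others lets the same user through.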

Created on 10-08-2020 10:40 AM - edited 10-08-2020 10:52 AM

How Ranger policies work for HDFS

Apache Ranger offers a federated authorization model for HDFS. The Ranger plugin for HDFS first checks the Ranger policies, and if a matching policy grants access, the user is allowed. If no Ranger policy applies, Ranger falls back to the native HDFS permission model (POSIX permissions or HDFS ACLs). This federated model applies to the HDFS and YARN services in Ranger.

For other services such as Hive or HBase, Ranger operates as the sole authorizer, which means only Ranger policies are in effect.

The fallback is controlled by the following property in Ambari → Ranger → HDFS config → Advanced ranger-hdfs-security:

xasecure.add-hadoop-authorization=true

The federated authorization model makes it possible to safely introduce Ranger into an existing cluster without affecting jobs that rely on POSIX permissions, which is why enabling this option is recommended as the default model for all deployments.
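The decision order described here can be sketched as follows. This is a simplified Python model, not Ranger's actual plugin code: `ranger_decision` is `None` when no Ranger policy matches the path, user and access type, `fallback_enabled` stands in for `xasecure.add-hadoop-authorization`, and `posix_check` represents the native HDFS permission/ACL check. The enforcer label `hadoop-acl` is the one reported in this thread's audit log; `ranger-acl` for the Ranger branch is an assumption.

```python
from __future__ import annotations

from enum import Enum
from typing import Callable, Optional


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"


def authorize(ranger_decision: Optional[Decision],
              fallback_enabled: bool,
              posix_check: Callable[[], bool]) -> tuple[Decision, str]:
    """Return (decision, enforcer) under a simplified federated model."""
    if ranger_decision is not None:
        # A matching Ranger policy decides on its own.
        return ranger_decision, "ranger-acl"
    if fallback_enabled:
        # No matching policy: defer to native HDFS permissions/ACLs.
        allowed = posix_check()
        return (Decision.ALLOW if allowed else Decision.DENY), "hadoop-acl"
    # No matching policy and no fallback: deny.
    return Decision.DENY, "ranger-acl"


# The situation in this thread's audit log: no Ranger policy matched,
# fallback is on, and the POSIX check on a d--------- inode fails.
decision, enforcer = authorize(None, True, lambda: False)
print(decision.value, enforcer)  # deny hadoop-acl
```

Under this model, an audit entry whose enforcer is `hadoop-acl` means the Ranger branch was never taken, which suggests the Ranger policy did not match the requested path rather than that it matched and was overridden.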

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=XXXXX, access=READ, inode="/user/.snapshot/user_201806150000":w93651:hdfs:drwx------

This error is self-explanatory. Does the user w93651 exist on both clusters, with valid Kerberos tickets if the clusters are kerberized? Ensure that cross-realm trust is configured and working.

Is your Ranger instance managing both clusters?

HTH

Created on 10-08-2020 04:40 AM - edited 10-08-2020 04:48 AM

Thank you for your answers. We have done some digging, and it looks like the distcp operation bypasses the Ranger policy and uses the HDFS ACL instead.

We have a Ranger allow policy that grants user XXXXX read and execute on /*. But in the audit log we get an EXECUTE access denial on:

/databank

Access enforcer: hadoop-acl

and a READ access denial on:

/databank/.snapshot/databank_201...

Access enforcer: hadoop-acl
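The EXECUTE entry for /databank itself, although the backup only reads data, reflects how HDFS checks paths: like POSIX, it requires EXECUTE (traverse) permission on every directory along a path before anything below it can be accessed. A minimal Python sketch of that walk follows; `ancestors`, `first_traversal_denial` and the blocked-directory set are hypothetical illustrations, not Hadoop APIs or the thread's real permissions:

```python
from __future__ import annotations

from posixpath import dirname
from typing import Callable, Optional


def ancestors(path: str) -> list[str]:
    """Ancestor directories of an absolute path, root first."""
    path = path.rstrip("/")
    result: list[str] = []
    while path:
        path = dirname(path)
        result.append(path)
        if path == "/":
            break
    return list(reversed(result))


def first_traversal_denial(path: str,
                           can_execute: Callable[[str], bool]) -> Optional[str]:
    """Return the first ancestor the user may not traverse, or None."""
    for directory in ancestors(path):
        if not can_execute(directory):
            return directory
    return None


# Hypothetical permissions: XXXXX cannot traverse /databank; assume every
# other directory is traversable.
blocked_dirs = {"/databank"}
print(first_traversal_denial("/databank/.snapshot/databank_201904250000",
                             lambda d: d not in blocked_dirs))  # /databank
```

Because the walk stops at the first directory that cannot be traversed, a denial on /databank is reported before the snapshot itself is ever checked for READ.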

Moreover, the backup succeeds for a directory whose POSIX permissions grant read and execute to others, while directories with rwx permissions for the owner only fail in the same way.

But shouldn't the Ranger policy apply and override the HDFS POSIX permissions? What seems to happen is the opposite: the HDFS permissions override the Ranger policy.

Best regards, Paz

Created 10-06-2020 07:57 AM

Permission is denied for the user.

Try checking the read and execute permissions or the Ranger policies.