HandleHttpRequest processor is throwing "container queue is full; Responding with SERVICE_UNAVAILABLE" error

Labels: Apache NiFi

Created on 07-28-2022 12:28 AM - edited 07-28-2022 12:30 AM

Hello Experts,

We have a HandleHttpRequest processor running with 16 concurrent tasks.

The container queue size is configured as 1000.

While running a load test, HandleHttpRequest suddenly stopped emitting FlowFiles for the requests it received and started throwing the error quoted in the title.

So we stopped the load test. Even 30 minutes later, when we send requests the processor emits just 2 or 3 FlowFiles, then shows the same error again and does not emit any further request FlowFiles.

Ideally, when no requests are hitting that port (the one the HTTP request listener is configured on), the container queue should drain, and FlowFiles should be created from the queue and emitted, right?

What could be the reason this processor keeps throwing the container-full error and not emitting any FlowFiles?

It started working again only after we manually cleaned up all repositories (content, provenance, FlowFile) and restarted NiFi!

Any suggestion would be appreciated.

Thanks,

Mahendra

Created 08-01-2022 06:06 AM

@hegdemahendra

This could possibly be an IOPS issue, but it could also be a thread concurrency issue.

How large is your Timer Driven thread pool? This is the pool of threads that all scheduled components draw from. If it is set to 10 and all 10 are currently in use by other components, the HandleHttpRequest processor, while scheduled, will wait for a free thread from that pool before it can execute. Adjusting the "Max Timer Driven Thread pool" requires careful consideration of the CPU load average on every node in your NiFi cluster, since the same value is applied to each node separately. A general starting pool size is 2 to 4 times the number of cores on a single node. From there, monitor the CPU load average across all nodes and use the node with the highest load average to determine whether you can add more threads to the pool. If a single node always has a much higher CPU load average, take a closer look at that server. Does it have other services running on it that are not running on the other nodes? Does it consistently hold a disproportionate share of the FlowFiles? (This is typically a result of the dataflow design not handling FlowFile load-balanced redistribution optimally.)
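As a rough illustration of the sizing rule above, here is a minimal Python sketch. The function names are made up for this example, and the "load average below core count" headroom check is an assumed rule of thumb, not something NiFi computes or enforces.

```python
import os


def starting_pool_range(cores: int) -> tuple[int, int]:
    """Return the (low, high) starting pool size: 2x to 4x the core count."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 2 * cores, 4 * cores


def has_cpu_headroom(load_average: float, cores: int) -> bool:
    """Assumed heuristic: more threads are only worth trying while the
    load average stays below the number of cores on the busiest node."""
    return load_average < cores


if __name__ == "__main__":
    cores = os.cpu_count() or 1
    low, high = starting_pool_range(cores)
    print(f"{cores} cores: start the pool between {low} and {high} threads")
    # os.getloadavg() is only available on Unix-like systems.
    if hasattr(os, "getloadavg"):
        one_minute, _, _ = os.getloadavg()
        print("headroom for more threads:", has_cpu_headroom(one_minute, cores))
```

Run it on the node with the highest load average, since that node is the one that limits how far the pool can grow.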

How many concurrent tasks are configured on your HandleHttpRequest processor? The concurrent tasks are responsible for obtaining threads (1 per concurrent task, if available) to read data from the container queue and create the FlowFiles. Perhaps the requests come in so fast that there are not enough available threads to keep the container queue from filling, which then blocks new requests. Assuming your CPU load average is not too high, increase your Max Timer Driven Thread pool and the number of concurrent tasks on your HandleHttpRequest processor to see if that resolves your issue. But keep in mind that even if this helps the processor get more threads, you will still have the same issue if the disk I/O can't keep up.
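To make the queue-filling argument concrete, here is a small simulation sketch. It is a toy model, not NiFi's implementation: the per-tick arrival and drain rates are invented numbers, and a rejected request stands in for the SERVICE_UNAVAILABLE response.

```python
def simulate_container_queue(arrivals_per_tick: int, drained_per_tick: int,
                             capacity: int, ticks: int) -> tuple[int, int]:
    """Toy model of a bounded request queue.

    Each tick, consumer threads first drain up to `drained_per_tick`
    requests, then `arrivals_per_tick` new requests try to enter.
    Requests that do not fit are rejected.
    Returns (requests still queued, total requests rejected).
    """
    queued = 0
    rejected = 0
    for _ in range(ticks):
        queued -= min(queued, drained_per_tick)
        accepted = min(arrivals_per_tick, capacity - queued)
        rejected += arrivals_per_tick - accepted
        queued += accepted
    return queued, rejected


if __name__ == "__main__":
    # Consumers keep up: the queue never fills and nothing is rejected.
    print(simulate_container_queue(100, 150, 1000, 50))
    # Arrivals outpace consumers: the queue fills, then rejections begin.
    print(simulate_container_queue(200, 100, 1000, 20))
```

In this model, more threads or faster disk I/O both show up as a higher `drained_per_tick`, which is why either one can be the bottleneck.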

As for having all your NiFi repos on the same disk, this is not a recommended practice. A typical setup would be:

- content_repository on its own disk. The content repo can fill its disk to 100%, which causes no issue other than not being able to write new content until disk usage drops.

- provenance_repository on its own disk. The size of this disk depends on how much provenance history you want to retain, the size of your dataflows, and the volume of FlowFiles, but its disk usage is controllable. A separate disk is recommended because of disk I/O.

- database_repository and flowfile_repository together on a third disk. The database repo is very small in terms of disk usage. The FlowFile repo is relatively small unless you allow a very large number of FlowFiles to queue in your dataflows; it only holds metadata/attributes about your queued FlowFiles, but it can also be disk I/O intensive.
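One quick way to check whether repositories actually share a disk is to compare the device IDs of their directories. The sketch below assumes a Unix-like host where each mounted volume reports a distinct `st_dev`; the function name and any paths you pass in are placeholders for this example.

```python
import os
from collections import defaultdict


def repos_sharing_a_device(paths, device_of=lambda p: os.stat(p).st_dev):
    """Group repository names by the device their directory lives on.

    `paths` maps a repository name to its directory. `device_of` is
    injectable so the grouping can be exercised without real mounts.
    Returns a list of name groups that share one device.
    """
    groups = defaultdict(list)
    for name, path in paths.items():
        groups[device_of(path)].append(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]
```

Call it with a dict of your own repository directories, for example the locations configured in nifi.properties. An empty result means every listed repo is on its own device; database and flowfile appearing together matches the layout described above.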

If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank you,

Matt

Created 07-29-2022 02:53 PM

@hegdemahendra

How many FlowFiles are queued on the outbound connection(s) from your HandleHttpRequest processor?

Is backpressure being applied on the HandleHttpRequest processor?

What version of NiFi are you using?

Is there any logging in nifi-app.log about not being allowed to write to the content repository and waiting on archive cleanup? If NiFi is blocking on creating new content claims in the content_repository, the HandleHttpRequest processor will not be able to take data from the container queue and generate the outbound FlowFile. This would explain why cleaning up those repos, which reduces the disk usage below the blocking threshold, got things working again.

There are some known issues around NiFi blocking even if the archive sub-directories in the content_repository are empty. These were addressed in the latest Apache NiFi 1.16 release and in Cloudera's CFM 2.1.4.1000 release.

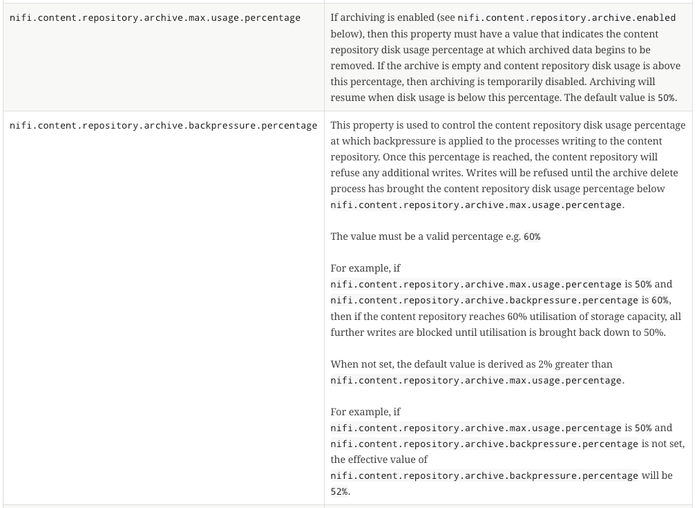

You may also want to look at your content repository settings for:

Compare those to your disk usage where your content_repo is located.

https://nifi.apache.org/docs/nifi-docs/html/administration-guide.html#content-repository
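As a sketch of that comparison: the check below computes disk usage as a percentage and compares it with an archive usage threshold. The 50% default is my assumption about the value of `nifi.content.repository.archive.max.usage.percentage`; read the real value from your own nifi.properties. The function names are invented for this example.

```python
import shutil


def used_percent(total_bytes: int, used_bytes: int) -> float:
    """Return disk usage as a percentage of total capacity."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return 100.0 * used_bytes / total_bytes


def exceeds_archive_threshold(total_bytes: int, used_bytes: int,
                              max_usage_percent: float = 50.0) -> bool:
    """True when usage has reached the (assumed) archive usage threshold."""
    return used_percent(total_bytes, used_bytes) >= max_usage_percent


if __name__ == "__main__":
    # "." is a stand-in; point this at the content repository directory.
    usage = shutil.disk_usage(".")
    print(f"used: {used_percent(usage.total, usage.used):.1f}%")
    print("over threshold:", exceeds_archive_threshold(usage.total, usage.used))
```

A disk that is well under the threshold points away from archive-cleanup blocking and toward threads or disk I/O instead.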

If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank you,

Matt

Created 07-29-2022 07:29 PM

Thank you @MattWho.

- 0 FlowFiles in the outbound connection.

- No backpressure.

- We are using NiFi 1.12.1.

- We don't see any logs related to this.

- We are using a 500 GB disk and only 20 GB was utilized at that time.

But all repos (FlowFile, content, and provenance) are located on the same 500 GB EBS volume, so could this be due to a lack of available IOPS?

Created 08-01-2022 09:07 PM

@MattWho - We have moved each repo onto its own EBS volume, and we now also use 3 EBS volumes each for the content and provenance repos.

Now it looks like the issue is pretty much resolved!

Thanks for all your suggestions; they helped a lot.

Thanks

Mahendra