Support Questions

- Cloudera Community

Hive Insert Into S3 External Table Overwrites Whole File!

Labels: Hortonworks Data Platform (HDP)

Created on 04-17-2017 06:10 PM - edited 08-17-2019 10:31 PM

All,

We are facing an issue when inserting data from a managed table into an external table on S3. Each time new data arrives in the managed table, we need to append it to the S3 external table. Instead of appending, Hive replaces the old data with the newly received data (the old data is overwritten). I have come across a similar JIRA issue, but its patch is for Apache Hive (link at the bottom). Since we are on HDP, can anyone help me with this?

Below are the versions:

HDP 2.5.3

Hive 1.2.1000.2.5.3.0-37

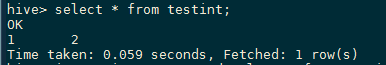

create external table tests3prd (c1 string, c2 string) location 's3a://testdata/test/tests3prd/';

create table testint (c1 string, c2 string);

insert into testint values (1,2);

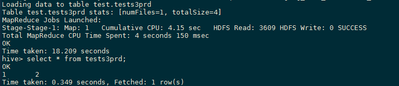

insert into tests3prd select * from testint; (run twice)

When I re-insert the same values (1,2), Hive overwrites the existing row and replaces it with the new record instead of adding a second row.

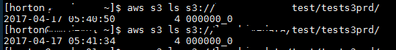

Here are the files in the S3 external location: each time, *0000_0 is overwritten instead of a new copy being added alongside it.

PS: JIRA issue: https://issues.apache.org/jira/browse/HIVE-15199
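To confirm whether an INSERT INTO appends or replaces, one option is to list the table directory from the Hive CLI before and after a second insert. This is a minimal sketch that reuses the table names and bucket path from the repro above. When appends work, Hive typically leaves the existing 000000_0 file in place and adds a new file such as 000000_0_copy_1; with the behaviour described in HIVE-15199, only a single 000000_0 file remains. The exact file names are an assumption and may differ between Hive versions and execution engines.

```sql
-- Run in the Hive CLI; table names and path come from the repro in this question.
-- List the files currently backing the external table.
dfs -ls s3a://testdata/test/tests3prd/;

-- Append the same rows a second time.
INSERT INTO TABLE tests3prd SELECT * FROM testint;

-- List again: an append should add a file next to 000000_0
-- (typically named 000000_0_copy_1); a lone 000000_0 suggests it was overwritten.
dfs -ls s3a://testdata/test/tests3prd/;

-- After two inserts of the single row (1,2): an append gives 2, an overwrite gives 1.
SELECT COUNT(*) FROM tests3prd;
```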

Created 04-17-2017 06:10 PM

@vpoornalingam Will you be able to check this out for me?

Created 05-03-2017 11:53 AM

This is fixed in Hortonworks Cloud. Is this an on-premises cluster or Hortonworks Cloud?

Created 05-04-2017 07:41 AM

Thanks for the reply, Rajesh. This is HDP 2.5.3 installed on an AWS EC2 instance. Please let me know if you know of any workaround!

Cheers,

Ram

Created 08-18-2017 07:19 AM

You can try the workaround below:

- Create a merged temporary table (old data + new data) using UNION ALL.

- INSERT OVERWRITE the final table with the merged data.

- Drop the temporary table.
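The three steps above could look roughly like this in HiveQL, using the tables from the original question. This is a sketch under assumptions: the staging table name tests3prd_merge_tmp is hypothetical, and testint is assumed to hold only the newly arrived rows. Every run rewrites the whole external table, so the cost grows with the table size, and the sequence is not atomic: a failure after the INSERT OVERWRITE but before the DROP leaves the staging table behind.

```sql
-- Step 1: build a staging table with old rows (from S3) plus new rows.
-- tests3prd_merge_tmp is a hypothetical name; it is created as a managed table.
CREATE TABLE tests3prd_merge_tmp AS
SELECT u.c1, u.c2
FROM (
  SELECT c1, c2 FROM tests3prd   -- rows already stored in S3
  UNION ALL
  SELECT c1, c2 FROM testint     -- newly arrived rows (assumed to be only the new ones)
) u;

-- Step 2: replace the external table's contents with the merged rows.
INSERT OVERWRITE TABLE tests3prd
SELECT c1, c2 FROM tests3prd_merge_tmp;

-- Step 3: remove the staging table.
DROP TABLE tests3prd_merge_tmp;
```

Staging through a separate table avoids reading from and overwriting tests3prd in the same statement.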

Created 08-18-2017 07:28 AM

@Rajesh Balamohan We are also facing a similar issue. Please let us know whether a fix is available, or whether one is planned for a future HDP release.

HDP 2.5.3 (EC2 Instances), Hive 1.2.1

Thanks..

Created 12-29-2017 03:12 PM

It should be fixed in the current HDP 2.6 release. If not, please open an official support request or a JIRA ticket.

Created 12-29-2017 02:30 PM

Hello guys, I have a similar issue, but with an external table on HDFS. Is there any solution so far? We are using HDP-2.6.3.0, and here is what my table looks like:

create external table test1(c1 int, c2 int) CLUSTERED BY(c1) SORTED BY(c1) INTO 4 BUCKETS ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION '/user/companies/';

Currently, the data is always overwritten.

Thank you

Created 12-29-2017 03:17 PM

What version of Hive are you using, 1.2 or 2.1?

Are there read permissions on that HDFS (or S3) location?

By default, the location is an HDFS path: LOCATION '<hdfs_location>';

For S3, use an s3a path instead, for example: LOCATION 's3a://BUCKET_NAME/tpcds_bin_partitioned_orc_200.db/inventory';

You need to specify that the table is stored in S3 and make sure you have permissions on it. By default, Hive assumes the path is in HDFS.
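To check where an existing table actually points (HDFS or S3), and whether it is external or bucketed, DESCRIBE FORMATTED prints the table metadata. A minimal check using the test1 table from the HDFS post above; the fields worth looking at are Location, Table Type and Num Buckets.

```sql
-- Show storage and table metadata for the table from the HDFS question.
DESCRIBE FORMATTED test1;
-- In the output, check:
--   Location:     hdfs://... (default filesystem) vs s3a://... (S3)
--   Table Type:   EXTERNAL_TABLE vs MANAGED_TABLE
--   Num Buckets:  should be 4 for CLUSTERED BY (c1) ... INTO 4 BUCKETS
```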

Created 01-09-2018 08:01 PM

Hello guys, any update on this? As a reminder, in my case it occurs on HDFS, not S3.

Thank you in advance