Cloudera Community - Support Questions

Hive OutOfMemoryError: unable to create new native thread

Labels: Apache Ambari, Apache Hive, Apache Tez

I am seeing the exception below when running the Hive TPC-DS data generator (https://github.com/hortonworks/hive-testbench) at a scale of ~500 GB.

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.io.IOException: java.lang.OutOfMemoryError: unable to create new native thread

The complete stack trace is in the attached log.

Cluster configuration:

16 nodes / 12 NodeManagers / 12 DataNodes

Per-node configuration:

Cores: 40

Memory: 392 GB

Ambari configs changed from the initial values to improve performance:

I set the container size to 10 GB to use as many cores per node as possible (320 GB / 10 GB = 32 containers per node, each using 1 core, so ~32 cores per node are used).
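The container arithmetic above can be sketched as follows. It assumes, as the 320G figure implies, that roughly 320 GB of each node's 392 GB is handed to YARN (the exact YARN property is not shown in the question), one vcore per container, and the 12 NodeManagers listed earlier. Worth keeping in mind for the error in this thread: each of those containers is a separate JVM with its own set of native threads.

```python
# Sketch of the per-node container arithmetic described in the question.
# Assumptions: ~320 GB per node is available to YARN containers, each
# container takes 1 vcore, and there are 12 NodeManagers.

NODE_CORES = 40
YARN_MEMORY_GB = 320  # assumed YARN memory per node (property not shown)
CONTAINER_GB = 10  # hive.tez.container.size = 10240 MB
VCORES_PER_CONTAINER = 1
NODEMANAGERS = 12


def containers_per_node(yarn_memory_gb: int, container_gb: int) -> int:
    """Whole containers that fit into one node's YARN memory."""
    return yarn_memory_gb // container_gb


def cores_used(containers: int, vcores_per_container: int, node_cores: int) -> int:
    """Cores occupied by those containers, capped at the node's core count."""
    return min(containers * vcores_per_container, node_cores)


if __name__ == "__main__":
    per_node = containers_per_node(YARN_MEMORY_GB, CONTAINER_GB)
    busy = cores_used(per_node, VCORES_PER_CONTAINER, NODE_CORES)
    print(f"{per_node} containers/node, {busy}/{NODE_CORES} cores busy")
    print(f"{per_node * NODEMANAGERS} concurrent containers cluster-wide")
    # prints "32 containers/node, 32/40 cores busy"
    # then   "384 concurrent containers cluster-wide"
```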

YARN

Hive

hive.tez.container.size = 10240 MB

hive.auto.convert.join.noconditionaltask.size = 2027316838 B

hive.exec.reducers.bytes.per.reducer = 1073217536 B

Tez

tez.am.resource.memory.mb = 10240 MB

tez.am.resource.java.opts = -server -Xmx8192m

tez.task.resource.memory.mb = 10240 MB
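A quick way to sanity-check the memory values above is to compare each -Xmx against its container size and to convert the raw byte settings into readable units. The sketch below does that; the 0.8 target is an assumption taken from Tez's default for tez.container.max.java.heap.fraction, not a value stated in this thread, and the parser only understands k/m/g suffixes.

```python
# Sketch: sanity-check heap vs. container size and make byte values readable.
# Assumption: a heap of about 80% of the container is the target (Tez's
# default tez.container.max.java.heap.fraction is 0.8).
import re

UNIT_TO_MB = {"k": 1 / 1024, "m": 1, "g": 1024}


def xmx_mb(java_opts: str) -> float:
    """Return the -Xmx value from a JVM option string, in MB (k/m/g suffix only)."""
    match = re.search(r"-Xmx(\d+)([kmg])", java_opts, re.IGNORECASE)
    if match is None:
        raise ValueError(f"no -Xmx with a k/m/g suffix in {java_opts!r}")
    return int(match.group(1)) * UNIT_TO_MB[match.group(2).lower()]


def heap_fraction(java_opts: str, container_mb: int) -> float:
    """Fraction of the container's memory given to the Java heap."""
    return xmx_mb(java_opts) / container_mb


def to_mib(n_bytes: int) -> float:
    """Convert a byte count to MiB."""
    return n_bytes / 1024**2


if __name__ == "__main__":
    # tez.am.resource.java.opts vs. tez.am.resource.memory.mb
    frac = heap_fraction("-server -Xmx8192m", 10240)
    print(f"Tez AM heap fraction: {frac:.2f}")  # prints "Tez AM heap fraction: 0.80"
    for name, value in [
        ("hive.auto.convert.join.noconditionaltask.size", 2027316838),
        ("hive.exec.reducers.bytes.per.reducer", 1073217536),
    ]:
        print(f"{name}: {to_mib(value):.1f} MiB")
```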

I referred to https://community.cloudera.com/t5/Community-Articles/Demystify-Apache-Tez-Memory-Tuning-Step-by-Step... for some of the tuning values.

Created 10-23-2019 04:45 AM

Hi @ssulav,

I see the following error in the HS2 logs you shared:

ERROR [HiveServer2-Background-Pool: Thread-886]: SessionState (:()) - Vertex failed, vertexName=Map 1, vertexId=vertex_1571760131080_0019_1_00, diagnostics=[Task failed, taskId=task_1571760131080_0019_1_00_000380, diagnostics=[TaskAttempt 0 failed, info=[Error: Error while running task ( failure ) : java.lang.OutOfMemoryError: unable to create new native thread

This error is reported by the YARN application.

It is not caused by any Hadoop, YARN, or Hive configuration. Rather, it is an error returned by the OS when it is not able to create new threads for the process.

You need to check the ulimits of the yarn user on the NodeManager and ResourceManager nodes.

However, it is more likely that this error comes from the NodeManager that ran the task ID mentioned above.

You can identify the host where that task ran by searching for the vertex or task ID in the YARN application logs.

The job would be running as the yarn user, so check the NodeManager logs on that host for similar errors.
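Tez vertex, task, and attempt IDs embed the YARN application they belong to, so the application ID for the failed task can be read straight off the ID in the HS2 log. The sketch below assumes the usual Tez ID layout (prefix, cluster timestamp, application sequence, then DAG/vertex/task numbers); the helper names are illustrative. With the application ID in hand, "yarn logs -applicationId <application ID>" dumps the aggregated container logs, which can then be searched for the task ID to find the host.

```python
# Sketch: map a Tez vertex/task/attempt ID to its YARN application ID and
# pick out log lines that mention it. Assumes the usual Tez ID layout:
#   vertex_<clusterTimestamp>_<appSeq>_<dagSeq>_<vertexSeq>
#   task_<clusterTimestamp>_<appSeq>_<dagSeq>_<vertexSeq>_<taskSeq>
import re
from typing import List

TEZ_ID = re.compile(r"^(?:dag|vertex|task|attempt)_(\d+)_(\d+)_")


def application_id(tez_id: str) -> str:
    """Return the YARN application ID embedded in a Tez DAG/vertex/task/attempt ID."""
    match = TEZ_ID.match(tez_id)
    if match is None:
        raise ValueError(f"not a Tez ID: {tez_id!r}")
    timestamp, app_seq = match.groups()
    return f"application_{timestamp}_{app_seq}"


def lines_mentioning(log_text: str, tez_id: str) -> List[str]:
    """Lines of an application log (e.g. saved 'yarn logs' output) that contain the ID."""
    return [line for line in log_text.splitlines() if tez_id in line]


if __name__ == "__main__":
    task = "task_1571760131080_0019_1_00_000380"
    print(application_id(task))  # prints "application_1571760131080_0019"
```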

You can try increasing the limit set by "ulimit -u" (the maximum number of user processes).
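On Linux, "ulimit -u" sets RLIMIT_NPROC, and threads count against it just like processes, so a JVM owned by a user near that limit can fail with "unable to create new native thread". A minimal Python check of the soft and hard values is below. It reports the limits of the process that runs it, so it would have to be run as the yarn user; the limits of an already-running NodeManager can instead be read from /proc/<pid>/limits.

```python
# Sketch: print the soft/hard RLIMIT_NPROC (what "ulimit -u" shows) for the
# current process. Run it as the user whose limit you want to inspect.
import resource
from typing import Tuple


def describe_limit(value: int) -> str:
    """Render an rlimit value the way ulimit does."""
    return "unlimited" if value == resource.RLIM_INFINITY else str(value)


def nproc_limit() -> Tuple[str, str]:
    """Soft and hard RLIMIT_NPROC for this process, as strings."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NPROC)
    return describe_limit(soft), describe_limit(hard)


if __name__ == "__main__":
    soft, hard = nproc_limit()
    print(f"max user processes: soft={soft} hard={hard}")
```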

Created on 10-23-2019 05:33 AM - edited 10-23-2019 05:47 AM

Thanks for the reply, @rohitmalhotra.

The user process limit for yarn is set to 65536.

Is there a recommended maximum value, or should I just make it unlimited? (Could that have consequences?)

Edit: I tried setting it to unlimited and am still seeing the same error.
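Since raising the limit to unlimited did not help, one thing worth measuring (not something the thread confirms as the cause) is how many threads the yarn user actually owns on the failing host, alongside the kernel-wide caps kernel.threads-max and kernel.pid_max, which a per-user ulimit does not raise. The sketch below reads Linux /proc directly; the user name "yarn" is an assumption and should match whoever runs the container JVMs.

```python
# Sketch: count threads per UID from /proc and read kernel-wide thread caps.
# Linux only. Assumption: containers run as the local user "yarn".
import pwd
from pathlib import Path
from typing import Dict, Optional


def threads_by_uid(proc: Path = Path("/proc")) -> Dict[int, int]:
    """Sum the Threads: field of every /proc/<pid>/status, keyed by real UID."""
    counts: Dict[int, int] = {}
    for status in proc.glob("[0-9]*/status"):
        try:
            text = status.read_text()
        except OSError:  # process exited or is not readable
            continue
        uid: Optional[int] = None
        threads: Optional[int] = None
        for line in text.splitlines():
            if line.startswith("Uid:"):
                uid = int(line.split()[1])  # first field is the real UID
            elif line.startswith("Threads:"):
                threads = int(line.split()[1])
        if uid is not None and threads is not None:
            counts[uid] = counts.get(uid, 0) + threads
    return counts


def read_int(path: Path) -> Optional[int]:
    """First integer in a file such as /proc/sys/kernel/threads-max, or None."""
    try:
        return int(path.read_text().split()[0])
    except (OSError, ValueError, IndexError):
        return None


if __name__ == "__main__":
    user = "yarn"  # assumption: the user that owns the container JVMs
    try:
        uid: Optional[int] = pwd.getpwnam(user).pw_uid
    except KeyError:
        uid = None
    if uid is None:
        print(f"no local user named {user!r}")
    else:
        print(f"threads owned by {user}: {threads_by_uid().get(uid, 0)}")
    for name in ("threads-max", "pid_max"):
        print(f"kernel.{name} = {read_int(Path('/proc/sys/kernel') / name)}")
```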

Created 10-23-2019 11:45 PM

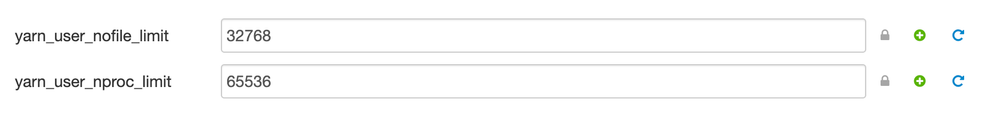

You can try changing the limits from Ambari as well.

Under Ambari > Yarn Configs > Advanced:

Restart Yarn after increasing the limit.
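After the restart, it may be worth confirming on each NodeManager host that the new value actually reached the OS limits configuration. The sketch below parses limits.conf-format text (lines of the form <domain> <type> <item> <value>) and returns the nproc entries for one user. The file path is a hypothetical example of where Ambari might write the yarn user's limits, and only exact user-name domains are matched (not @group or * entries).

```python
# Sketch: list a user's nproc entries from a limits.conf-format file.
# Assumption: the path below is hypothetical; use the file your hosts use.
from pathlib import Path
from typing import List, Tuple

LIMITS_FILE = Path("/etc/security/limits.d/yarn.conf")  # hypothetical path


def nproc_entries(text: str, user: str) -> List[Tuple[str, str]]:
    """Return (type, value) pairs for the user's nproc lines.

    limits.conf lines have the form: <domain> <type> <item> <value>,
    where <type> is soft, hard, or '-' (both). Only exact user domains match.
    """
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        fields = line.split()
        if len(fields) != 4:
            continue  # blank or malformed line
        domain, kind, item, value = fields
        if domain == user and item == "nproc":
            entries.append((kind, value))
    return entries


if __name__ == "__main__":
    try:
        text = LIMITS_FILE.read_text()
    except OSError as err:
        print(f"could not read {LIMITS_FILE}: {err}")
    else:
        print(nproc_entries(text, "yarn") or "no nproc entry for yarn")
```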