Support Questions - Cloudera Community

Hive View Not Populating Database Explorer

Labels: Apache Hive

Created on 10-16-2015 01:32 PM - edited 08-19-2019 05:57 AM

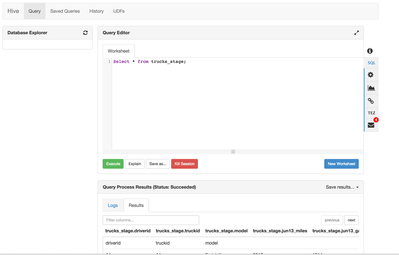

I've downloaded the latest sandbox and I'm running through a tutorial. It seems that the Hive view in Ambari can execute commands, but the Database Explorer will not show any of the tables that are present.

However, I can still execute statements and get the results back; I just can't see the list of tables. No errors appear on the screen, so I'm not sure what's wrong.

Is there any way to fix this?

Created 10-16-2015 04:44 PM

It showed all of the databases when I ran the MySQL command.

It could be an issue with user permissions: the 'admin' user that the view runs as may not have access to the Hive databases or tables.

I found this by SSH-ing into the sandbox and immediately running hive:

[root@sandbox ~]# hive

which gave me the error:

org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user/root":hdfs:hdfs:drwxr-xr-x

However, if I first ran "su hdfs" or "su hive", I could run hive and see the tables.

What stumps me is why I could still run

select * from table_name;

and get all the results back.
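The error line above packs the requesting user, the requested access, the HDFS path, and that path's owner, group and mode into one string. A minimal Python sketch for pulling those fields apart, assuming the message always has exactly the shape quoted here (other Hadoop versions may word it differently); `parse_access_denied` is a hypothetical helper, not part of any Hadoop tool:

```python
import re
from typing import Optional

# Matches the message shape quoted in this thread:
#   Permission denied: user=<u>, access=<A>, inode="<path>":<owner>:<group>:<mode>
# Assumption: other Hadoop versions may format this message differently.
_DENIED = re.compile(
    r'Permission denied: user=(?P<user>[^,]+), access=(?P<access>\w+), '
    r'inode="(?P<path>[^"]+)":(?P<owner>[^:]+):(?P<group>[^:]+):'
    r'(?P<mode>[-dlrwxstST]{10})'
)


def parse_access_denied(message: str) -> Optional[dict]:
    """Return the fields of an HDFS 'Permission denied' message, or None."""
    match = _DENIED.search(message)
    return match.groupdict() if match else None


if __name__ == "__main__":
    msg = ('org.apache.hadoop.security.AccessControlException: Permission denied: '
           'user=root, access=WRITE, inode="/user/root":hdfs:hdfs:drwxr-xr-x')
    print(parse_access_denied(msg))
```

Read this way, the message says root asked for WRITE on /user/root, which belongs to hdfs:hdfs with mode drwxr-xr-x.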

Created 10-16-2015 01:45 PM

Please launch the MySQL CLI and check the databases. I just want to make sure that you have the privilege to run show databases;

Created 10-16-2015 02:28 PM

Could you share some logs and configuration? I'd be interested in the log produced when you open the view or press the refresh button in the Database Explorer.

Created 10-16-2015 04:43 PM

Were the tables you are expecting created from the Hive View? I ran into issues with tables not getting refreshed. Can you kill the session and launch a new one?

Created 10-16-2015 04:45 PM

I think it's an issue with user permissions, but I don't know why I can still run commands like

select * from table_name;

Created 10-16-2015 04:55 PM

Could table_name be an external table with a location that is readable by admin?

Created 10-16-2015 04:58 PM

I suppose so? I was really just using it as a placeholder. See the screenshot in the original question: I was able to read and load from the "trucks_stage" table.

Created 02-03-2016 02:37 PM

I ended up restarting the Sandbox and my problem was eventually fixed.

Created 10-16-2015 06:46 PM

Have you followed the instructions in the Ambari documentation for configuring the Hive View? It states:

Ambari views use the doAs option for commands. This option enables the Ambari process user to impersonate the Ambari logged-in user. To avoid receiving permissions errors for job submissions and file save operations, you must create HDFS users for all Ambari users that use the views.
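In practice, that means giving each Ambari user an HDFS home directory that the user owns. A minimal Python sketch that prints (and, only if asked, runs) the commands, assuming `sudo` and the `hdfs` CLI are available on the node and that `hadoop` is the right group for home directories on your cluster; the user list and the helper names are hypothetical:

```python
import shlex
import subprocess
from typing import Iterable, List

# Hypothetical list of Ambari users who open the views; replace with your own.
AMBARI_USERS = ["admin"]


def home_dir_commands(users: Iterable[str], group: str = "hadoop") -> List[List[str]]:
    """Build commands that create /user/<name> and make <name> its owner.

    Each command runs as the 'hdfs' superuser via sudo. The default group
    'hadoop' is an assumption; use whichever group your cluster expects.
    """
    commands = []
    for user in users:
        home = f"/user/{user}"
        commands.append(["sudo", "-u", "hdfs", "hdfs", "dfs", "-mkdir", "-p", home])
        commands.append(["sudo", "-u", "hdfs", "hdfs", "dfs", "-chown",
                         f"{user}:{group}", home])
    return commands


def apply(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(home_dir_commands(AMBARI_USERS))  # dry run: prints only
```

Passing `["root"]` instead would produce the commands that give root its own /user/root, which is what the CLI error in this thread asks for. The sketch defaults to a dry run so the commands can be reviewed before anything touches HDFS.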

Consider the HDFS permission error you showed above:

org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user/root":hdfs:hdfs:drwxr-xr-x

It clearly shows that /user/root, the default HDFS home directory of the root user, is owned by hdfs rather than root, and that's a problem. Can you make sure that /user/root exists and is owned by the root user? Also, if the authorization mode in Hive is StorageBased, then /apps/hive/warehouse should be writable by root. This is usually done by putting the executing user in the same group as the one that has write permission on /apps/hive/warehouse.
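To see why root is refused, here is a simplified model of the HDFS permission check: the owner bits apply if the user owns the path, otherwise the group bits if the user is in the path's group, otherwise the "other" bits, with the superuser bypassing the check. It is a sketch, not HDFS's actual code: it ignores ACLs and sticky-bit semantics, assumes the superuser is `hdfs` (the user running the NameNode), and `hdfs_permits` is a hypothetical name.

```python
from typing import Iterable

# Position of each access type inside an rwx triplet.
_BIT = {"READ": 0, "WRITE": 1, "EXECUTE": 2}


def hdfs_permits(user: str, user_groups: Iterable[str], owner: str, group: str,
                 mode: str, access: str, superuser: str = "hdfs") -> bool:
    """Simplified POSIX-style HDFS permission check (no ACLs, no sticky bit).

    mode is the 10-character listing form, e.g. 'drwxr-xr-x'.
    """
    if user == superuser:
        return True              # the superuser bypasses permission checks
    if user == owner:
        triplet = mode[1:4]      # owner bits
    elif group in set(user_groups):
        triplet = mode[4:7]      # group bits
    else:
        triplet = mode[7:10]     # other bits
    # Lowercase s/t also imply execute; '-', 'S' and 'T' mean not granted.
    return triplet[_BIT[access]] in "rwxst"


if __name__ == "__main__":
    # The failing case from this thread: root writing to /user/root owned by hdfs:hdfs.
    print(hdfs_permits("root", ["root"], "hdfs", "hdfs", "drwxr-xr-x", "WRITE"))  # False
    # After chown root:hdfs, root is the owner and gets the owner 'w' bit.
    print(hdfs_permits("root", ["root"], "root", "hdfs", "drwxr-xr-x", "WRITE"))  # True
```

The same check explains the warehouse advice: if /apps/hive/warehouse were, say, hive:hadoop with mode drwxrwxr-x (hypothetical values), adding root to the hadoop group would give it the group write bit.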

I would also guess that, in your current setup, any query that requires running a job will fail, while simple metadata queries will succeed.