Support Questions (Cloudera Community > Support)

Hive databases are not visible in Spark session.

Labels: Apache Hive, Apache Spark

Created 05-05-2019 04:43 AM


Hi,

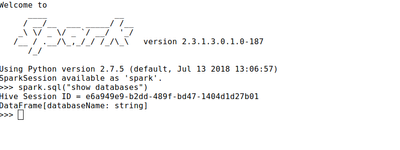

I am trying to run a Spark application that needs access to Hive databases, but Hive databases such as FOODMART are not visible in the Spark session.

I ran spark.sql("show databases").show(), and it does not list the FOODMART database, even though the Spark session has enableHiveSupport.

Here is what I've tried:

1) Copied the Hive configuration into the Spark configuration directory:

cp /etc/hive/conf/hive-site.xml /etc/spark2/conf

2) Changed spark.sql.warehouse.dir in the Spark UI from /apps/spark/warehouse to /warehouse/tablespace/managed/hive

It still does not work.

Please let me know which configuration changes are required for this.

Please note: the above works in HDP 2.6.5.

Created 05-10-2019 07:11 PM


Hi @Shashank Naresh,

It's not clear which version you are running, so I'll assume HDP 3. If that is the case, you may want to read the following links, along with the pages they link to:

Spark to Hive access on HDP3 - https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/integrating-hive/content/hive_hivewarehouse...

Configuration - https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/integrating-hive/content/hive_configure_a_s...

API Operations - https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/integrating-hive/content/hive_hivewarehouse...

In short, Spark has its own catalog, which means that you will not natively have access to the Hive catalog as you did on HDP 2.
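For orientation: the Configuration page linked above has you set a handful of Spark properties that point Spark at HiveServer2 Interactive, the Hive metastore, and LLAP. The Scala sketch below only assembles those properties into a map and prints them. The property names are recalled from the HDP 3.1 documentation and should be checked against that page; every host name, port, and default used here (@llap0, /tmp, the hiveserver2-interactive ZooKeeper namespace) is an illustrative assumption, so take the real values from Ambari.

```scala
// Sketch: assemble the HDP 3 Hive Warehouse Connector (HWC) properties into one map.
// ASSUMPTION: key names follow the HDP 3.1 "Configure a Spark-Hive connection" page;
// all hosts, ports and defaults below are placeholders, not values from this thread.
object HwcConf {
  def build(
      zkQuorum: Seq[String],              // e.g. Seq("zk1.example.com:2181") (hypothetical)
      metastoreUri: String,               // e.g. "thrift://ms.example.com:9083" (hypothetical)
      llapServiceHosts: String = "@llap0", // assumed default LLAP app name; check Ambari
      stagingDir: String = "/tmp"          // assumed staging directory
  ): Map[String, String] = {
    val quorum = zkQuorum.mkString(",")
    Map(
      // JDBC URL of HiveServer2 Interactive, discovered through ZooKeeper
      "spark.sql.hive.hiveserver2.jdbc.url" ->
        s"jdbc:hive2://$quorum/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2-interactive",
      "spark.datasource.hive.warehouse.metastoreUri" -> metastoreUri,
      "spark.datasource.hive.warehouse.load.staging.dir" -> stagingDir,
      "spark.hadoop.hive.llap.daemon.service.hosts" -> llapServiceHosts,
      "spark.hadoop.hive.zookeeper.quorum" -> quorum
    )
  }

  def main(args: Array[String]): Unit = {
    val conf = build(
      Seq("zk1.example.com:2181", "zk2.example.com:2181"),
      "thrift://ms.example.com:9083"
    )
    // Print in "key=value" form, sorted for readability
    conf.toSeq.sortBy(_._1).foreach { case (k, v) => println(s"$k=$v") }
  }
}
```

Each printed line could be passed to spark-shell as --conf key=value (together with the HWC assembly jar via --jars), or added under Custom spark2-defaults in Ambari. Keeping the values in one map makes it easier to apply the same settings to every client (spark-shell, spark-submit, Zeppelin), since those clients do not necessarily share configuration.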

BR,

David Bompart

Created 08-13-2019 12:18 AM


I am using HDP 3.1.0

Created on 08-13-2019 04:00 AM - edited 08-17-2019 03:36 PM


Hi @dbompart,

Thanks for the answer,

I am using HDP3.1,

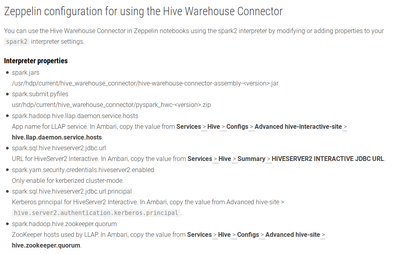

I've tried to change the settings per link "https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/integrating-hive/content/hive_configure_a_s..."

1) Spark setting below

2) Trying to get hive databases in spark - no success;

3) Can see hive databases in hive

Could you please assist me on this, what else needs to be done.
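Since the settings were changed but nothing visible changed, one thing worth ruling out is whether the HWC properties actually reached the running session. The Scala sketch below reports which of them are missing or blank in a configuration map. The key list is an assumption based on the HDP 3.1 Configuration page and should be verified there; inside spark-shell you could pass it spark.conf.getAll (or spark.sparkContext.getConf.getAll.toMap).

```scala
// Sketch: report which HWC-related properties are missing or blank in a config map.
// ASSUMPTION: the key list mirrors the HDP 3.1 "Configure a Spark-Hive connection" page.
object HwcConfCheck {
  val RequiredKeys: Seq[String] = Seq(
    "spark.sql.hive.hiveserver2.jdbc.url",
    "spark.datasource.hive.warehouse.metastoreUri",
    "spark.datasource.hive.warehouse.load.staging.dir",
    "spark.hadoop.hive.llap.daemon.service.hosts",
    "spark.hadoop.hive.zookeeper.quorum"
  )

  /** Keys from RequiredKeys that are absent from `conf` or set to a blank value. */
  def missingKeys(conf: Map[String, String]): Seq[String] =
    RequiredKeys.filter(k => conf.get(k).forall(_.trim.isEmpty))

  def main(args: Array[String]): Unit = {
    // Hypothetical example map; in a live session use spark.conf.getAll instead
    val example = Map(
      "spark.datasource.hive.warehouse.metastoreUri" -> "thrift://ms.example.com:9083"
    )
    missingKeys(example).foreach(k => println(s"missing: $k"))
  }
}
```

An empty result only means the properties are present; it does not validate their values, and on HDP 3 spark.sql("show databases") would still read the Spark catalog regardless.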

Created 08-13-2019 03:54 PM


Hey Shashank,

You're still skipping the API Operations link: https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/integrating-hive/content/hive_hivewarehouse...

Listing the Hive databases from Spark through the Spark SQL API will not work as long as metastore.catalog.default is set to "spark", which is the default value and is recommended to be left as is. To summarize: by default, the Spark SQL API (spark.sql("$query")) accesses the Spark catalog. Instead, you should use the HiveWarehouseSession API as explained in the link above, for example:

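```scala
import com.hortonworks.hwc.HiveWarehouseSession
import com.hortonworks.hwc.HiveWarehouseSession._

// Build an HWC session on top of the existing SparkSession
val hive = HiveWarehouseSession.session(spark).build()

// These calls are served by Hive through the connector, so they see the Hive catalog
hive.showDatabases().show()
hive.setDatabase("foodmart")
hive.showTables().show()

// "foodmartTable" stands in for one of your table names
hive.execute("describe formatted foodmartTable").show()
hive.executeQuery("select * from foodmartTable limit 5").show()
```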

Created on 08-13-2019 05:27 PM - edited 08-17-2019 03:35 PM


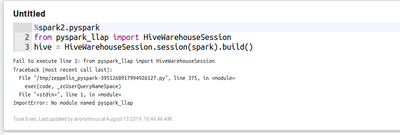

Hi @dbompart,

Thanks for your reply.

I've tried the following:

1) Changed the Zeppelin settings as shown below

2) Restarted the notebook

3) Ran the code below in the notebook and got the import error below.

Could you please help with this?

Thanks and Regards.

Created 08-13-2019 07:34 PM


Zeppelin and spark-shell are not the same client, and properties work differently in each. If you moved on to Zeppelin, can we assume it did work in spark-shell?

Regarding the Zeppelin issue, the problem is likely the path to the Hive Warehouse Connector files in either spark.jars or spark.submit.pyFiles. I believe the path must be whitelisted in Zeppelin, but it's clear that the Hive Warehouse Connector files are not being uploaded to the application classpath, so the pyspark_llap module cannot be imported. Hope it helps.
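A quick way to tell whether the connector jar made it onto the driver classpath of a given interpreter is to try loading one of its classes. The Scala helper below is a plain-JVM sketch; the class name com.hortonworks.hwc.HiveWarehouseSession is the one imported in the HWC example in this thread. It only covers the JVM side (spark.jars); the Python pyspark_llap module comes from spark.submit.pyFiles and is not checked here.

```scala
// Sketch: check whether a class can be loaded by the current driver's class loader.
object ClasspathCheck {
  def isOnClasspath(className: String): Boolean = {
    // Prefer the thread's context class loader (used by REPL-style shells),
    // falling back to the loader that loaded this object
    val loader = Option(Thread.currentThread().getContextClassLoader)
      .getOrElse(getClass.getClassLoader)
    try {
      Class.forName(className, false, loader) // false: do not run static initializers
      true
    } catch {
      case _: ClassNotFoundException => false
    }
  }

  def main(args: Array[String]): Unit = {
    val hwcClass = "com.hortonworks.hwc.HiveWarehouseSession"
    println(s"$hwcClass on classpath: ${isOnClasspath(hwcClass)}")
  }
}
```

Run in a Zeppelin Spark (Scala) paragraph, a false result would be consistent with the HWC jar not being uploaded; a true result there while the Python import still fails would point at the spark.submit.pyFiles path instead.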

BR,

David