Support Questions


HiveServer2 not starting

Labels: Apache Hive

Created on 02-22-2024 05:24 AM - edited 02-22-2024 05:32 AM


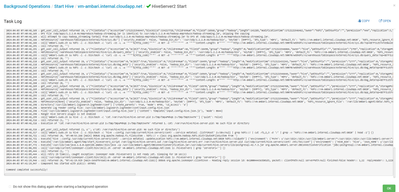

I need your help figuring out why HiveServer2 is not starting in Ambari. Please find the log output below.

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/ODP/1.0/services/HIVE/package/scripts/hive_server.py", line 143, in <module>
    HiveServer().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/ODP/1.0/services/HIVE/package/scripts/hive_server.py", line 53, in start
    hive_service('hiveserver2', action = 'start', upgrade_type=upgrade_type)
  File "/var/lib/ambari-agent/cache/stacks/ODP/1.0/services/HIVE/package/scripts/hive_service.py", line 64, in hive_service
    check_fs_root(params.hive_server_conf_dir, params.execute_path)
  File "/var/lib/ambari-agent/cache/stacks/ODP/1.0/services/HIVE/package/scripts/hive_service.py", line 173, in check_fs_root
    environment = {'PATH': execution_path}
  File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__
    self.env.run()
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 263, in action_run
    returns=self.resource.returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 72, in inner
    result = function(command, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 102, in checked_call
    tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy, returns=returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 150, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 314, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of 'hive --config /usr/odp/current/hive-server2/conf/ --service metatool -updateLocation hdfs://master1.com:8020 12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from' returned 1. SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hive/lib/logback-classic-1.2.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/tez/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hadoop-mapreduce/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [ch.qos.logback.classic.util.ContextSelectorStaticBinder]
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hive/lib/logback-classic-1.2.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/tez/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/odp/1.2.2.0-46/hadoop-mapreduce/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [ch.qos.logback.classic.util.ContextSelectorStaticBinder]
12:33:57.225 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0
Initializing HiveMetaTool..
Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: 12:33:52.326
	at org.apache.hadoop.fs.Path.initialize(Path.java:263)
	at org.apache.hadoop.fs.Path.<init>(Path.java:221)
	at org.apache.hadoop.hive.metastore.tools.HiveMetaTool.main(HiveMetaTool.java:441)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:328)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:241)
Caused by: java.net.URISyntaxException: Relative path in absolute URI: 12:33:52.326
	at java.net.URI.checkPath(URI.java:1823)
	at java.net.URI.<init>(URI.java:745)
	at org.apache.hadoop.fs.Path.initialize(Path.java:260)
	... 8 more
12:33:57.252 [Thread-0] DEBUG org.apache.hadoop.util.ShutdownHookManager - Completed shutdown in 0.007 seconds; Timeouts: 0
12:33:57.260 [Thread-0] DEBUG org.apache.hadoop.util.ShutdownHookManager - ShutdownHookManager completed shutdown.
```
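The old-location argument passed to `-updateLocation` in the failed command looks like a log line rather than a filesystem root. Below is a minimal Python sketch of how that could happen. It assumes (this is not shown in the log) that the `check_fs_root` step in `hive_service.py` chooses the old location by filtering `metatool -listFSRoot` stdout roughly as `grep hdfs:// | cut -f1,2,3 -d '/' | grep -v <fs_root> | head -1`. The sample stdout, the placeholder jar path, and the helpers `pick_old_location` and `split_like_hadoop_path` are hypothetical; only the DEBUG text and `hdfs://master1.com:8020` come from the log.

```python
import shlex

# default_fs value reported in the Ambari log.
FS_ROOT = "hdfs://master1.com:8020"


def pick_old_location(stdout: str, fs_root: str) -> str:
    """Mimic an ASSUMED filter: grep hdfs:// | cut -f1,2,3 -d '/' | grep -v fs_root | head -1."""
    for line in stdout.splitlines():
        if "hdfs://" not in line:                # grep hdfs://
            continue
        cut = "/".join(line.split("/")[:3])      # cut -f1,2,3 -d '/'
        if fs_root in cut:                        # grep -v <fs_root> (substring match, not regex)
            continue
        return cut.strip()                        # head -1, then strip surrounding whitespace
    return ""


def split_like_hadoop_path(s: str):
    """Rough mimic of Hadoop Path scheme detection: a ':' before any '/' ends the scheme."""
    colon, slash = s.find(":"), s.find("/")
    if colon != -1 and (slash == -1 or colon < slash):
        return s[:colon], s[colon + 1:]
    return None, s


# HYPOTHETICAL -listFSRoot stdout: a DEBUG line printed before a real FS root.
# The jar path after "from" is a placeholder, not taken from the log.
sample_stdout = (
    "12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = "
    "class org.apache.hadoop.hdfs.DistributedFileSystem from /path/to/hdfs-client.jar\n"
    "hdfs://master1.com:8020/warehouse/tablespace/managed/hive\n"
)

old = pick_old_location(sample_stdout, FS_ROOT)
cmd = f"hive --config /usr/odp/current/hive-server2/conf/ --service metatool -updateLocation {FS_ROOT} {old}"
args = shlex.split(cmd)
old_arg = args[args.index("-updateLocation") + 2]  # first token after the new location
scheme, path = split_like_hadoop_path(old_arg)

print(old)
print(old_arg)       # 12:33:52.326
print(scheme, path)  # 12 33:52.326
```

Under that assumption, the first `hdfs://` match is a DEBUG line on stdout (SLF4J reports logback as the actual binding), and its first token `12:33:52.326` splits into scheme `12` plus the relative path `33:52.326`, which is consistent with `Relative path in absolute URI: 12:33:52.326`. This only illustrates the mechanism; it is not a confirmed diagnosis.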

2024-02-22 12:33:35,620 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:35,627 - Using hadoop conf dir: /usr/odp/1.2.2.0-46/hadoop/conf

2024-02-22 12:33:35,679 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:35,681 - Using hadoop conf dir: /usr/odp/1.2.2.0-46/hadoop/conf

2024-02-22 12:33:35,682 - Group['ubuntu'] {}

2024-02-22 12:33:35,682 - Group['hdfs'] {}

2024-02-22 12:33:35,682 - User['hive'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,683 - User['yarn-ats'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,684 - User['zookeeper'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,684 - User['ubuntu'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,684 - User['tez'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,685 - User['yarn'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,685 - User['mapred'] {'gid': 'ubuntu', 'fetch_nonlocal_groups': True, 'groups': ['ubuntu'], 'uid': None}

2024-02-22 12:33:35,686 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2024-02-22 12:33:35,686 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ubuntu /tmp/hadoop-ubuntu,/tmp/hsperfdata_ubuntu,/home/ubuntu,/tmp/ubuntu,/tmp/sqoop-ubuntu 0'] {'not_if': '(test $(id -u ubuntu) -gt 1000) || (false)'}

2024-02-22 12:33:35,696 - Group['ubuntu'] {}

2024-02-22 12:33:35,696 - User['ubuntu'] {'fetch_nonlocal_groups': True, 'groups': ['ubuntu', u'ubuntu']}

2024-02-22 12:33:35,696 - FS Type: HDFS

2024-02-22 12:33:35,696 - Directory['/etc/hadoop'] {'mode': 0755}

2024-02-22 12:33:35,703 - File['/usr/odp/1.2.2.0-46/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'ubuntu', 'group': 'ubuntu'}

2024-02-22 12:33:35,703 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'ubuntu', 'group': 'ubuntu', 'mode': 01777}

2024-02-22 12:33:35,711 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2024-02-22 12:33:35,713 - Skipping Execute[('setenforce', '0')] due to not_if

2024-02-22 12:33:35,713 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'ubuntu', 'mode': 0775, 'cd_access': 'a'}

2024-02-22 12:33:35,714 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2024-02-22 12:33:35,714 - Directory['/var/run/hadoop/ubuntu'] {'owner': 'ubuntu', 'cd_access': 'a'}

2024-02-22 12:33:35,714 - Directory['/tmp/hadoop-ubuntu'] {'owner': 'ubuntu', 'create_parents': True, 'cd_access': 'a'}

2024-02-22 12:33:35,716 - File['/usr/odp/1.2.2.0-46/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'ubuntu'}

2024-02-22 12:33:35,717 - File['/usr/odp/1.2.2.0-46/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'ubuntu'}

2024-02-22 12:33:35,719 - File['/usr/odp/1.2.2.0-46/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'ubuntu', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:35,723 - File['/usr/odp/1.2.2.0-46/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'ubuntu', 'group': 'ubuntu'}

2024-02-22 12:33:35,724 - File['/usr/odp/1.2.2.0-46/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2024-02-22 12:33:35,725 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'ubuntu', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:35,727 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2024-02-22 12:33:35,728 - Skipping unlimited key JCE policy check and setup since the Java VM is not managed by Ambari

2024-02-22 12:33:35,834 - Using hadoop conf dir: /usr/odp/1.2.2.0-46/hadoop/conf

2024-02-22 12:33:35,838 - call['ambari-python-wrap /usr/bin/odp-select status hive-server2'] {'timeout': 20}

2024-02-22 12:33:35,849 - call returned (0, 'hive-server2 - 1.2.2.0-46')

2024-02-22 12:33:35,849 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:35,857 - File['/var/lib/ambari-agent/cred/lib/CredentialUtil.jar'] {'content': DownloadSource('http://ambari.com:9090/resources/CredentialUtil.jar'), 'mode': 0755}

2024-02-22 12:33:35,858 - Not downloading the file from http://ambari.com:9090/resources/CredentialUtil.jar, because /var/lib/ambari-agent/tmp/CredentialUtil.jar already exists

2024-02-22 12:33:36,262 - Directories to fill with configs: [u'/usr/odp/current/hive-server2/conf', u'/usr/odp/current/hive-server2/conf/']

2024-02-22 12:33:36,262 - Directory['/etc/hive/1.2.2.0-46/0'] {'owner': 'hive', 'group': 'ubuntu', 'create_parents': True, 'mode': 0755}

2024-02-22 12:33:36,262 - XmlConfig['mapred-site.xml'] {'group': 'ubuntu', 'conf_dir': '/etc/hive/1.2.2.0-46/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2024-02-22 12:33:36,270 - Generating config: /etc/hive/1.2.2.0-46/0/mapred-site.xml

2024-02-22 12:33:36,270 - File['/etc/hive/1.2.2.0-46/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,289 - File['/etc/hive/1.2.2.0-46/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,290 - File['/etc/hive/1.2.2.0-46/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0755}

2024-02-22 12:33:36,291 - File['/etc/hive/1.2.2.0-46/0/llap-daemon-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,292 - File['/etc/hive/1.2.2.0-46/0/llap-cli-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,293 - File['/etc/hive/1.2.2.0-46/0/hive-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,294 - File['/etc/hive/1.2.2.0-46/0/hive-exec-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,295 - File['/etc/hive/1.2.2.0-46/0/beeline-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,296 - XmlConfig['beeline-site.xml'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0644, 'conf_dir': '/etc/hive/1.2.2.0-46/0', 'configurations': {'beeline.hs2.jdbc.url.container': u'jdbc:hive2://master1.com:2181,master2.com:2181,slave1.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2', 'beeline.hs2.jdbc.url.default': 'container'}}

2024-02-22 12:33:36,299 - Generating config: /etc/hive/1.2.2.0-46/0/beeline-site.xml

2024-02-22 12:33:36,299 - File['/etc/hive/1.2.2.0-46/0/beeline-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,300 - File['/etc/hive/1.2.2.0-46/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,300 - Directory['/etc/hive/1.2.2.0-46/0'] {'owner': 'hive', 'group': 'ubuntu', 'create_parents': True, 'mode': 0755}

2024-02-22 12:33:36,300 - XmlConfig['mapred-site.xml'] {'group': 'ubuntu', 'conf_dir': '/etc/hive/1.2.2.0-46/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2024-02-22 12:33:36,303 - Generating config: /etc/hive/1.2.2.0-46/0/mapred-site.xml

2024-02-22 12:33:36,304 - File['/etc/hive/1.2.2.0-46/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,326 - File['/etc/hive/1.2.2.0-46/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,327 - File['/etc/hive/1.2.2.0-46/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0755}

2024-02-22 12:33:36,329 - File['/etc/hive/1.2.2.0-46/0/llap-daemon-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,331 - File['/etc/hive/1.2.2.0-46/0/llap-cli-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,333 - File['/etc/hive/1.2.2.0-46/0/hive-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,334 - File['/etc/hive/1.2.2.0-46/0/hive-exec-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,336 - File['/etc/hive/1.2.2.0-46/0/beeline-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,336 - XmlConfig['beeline-site.xml'] {'owner': 'hive', 'group': 'ubuntu', 'mode': 0644, 'conf_dir': '/etc/hive/1.2.2.0-46/0', 'configurations': {'beeline.hs2.jdbc.url.container': u'jdbc:hive2://master1.com:2181,master2.com:2181,slave1.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2', 'beeline.hs2.jdbc.url.default': 'container'}}

2024-02-22 12:33:36,342 - Generating config: /etc/hive/1.2.2.0-46/0/beeline-site.xml

2024-02-22 12:33:36,342 - File['/etc/hive/1.2.2.0-46/0/beeline-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,344 - File['/etc/hive/1.2.2.0-46/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'ubuntu', 'mode': 0644}

2024-02-22 12:33:36,344 - File['/usr/odp/current/hive-server2/conf/hive-site.jceks'] {'content': StaticFile('/var/lib/ambari-agent/cred/conf/hive_server/hive-site.jceks'), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0640}

2024-02-22 12:33:36,344 - Writing File['/usr/odp/current/hive-server2/conf/hive-site.jceks'] because contents don't match

2024-02-22 12:33:36,344 - XmlConfig['core-site.xml'] {'group': 'ubuntu', 'conf_dir': '/usr/odp/current/hive-server2/conf/', 'mode': 0644, 'configuration_attributes': {u'final': {u'fs.defaultFS': u'true'}}, 'owner': 'hive', 'configurations': ...}

2024-02-22 12:33:36,350 - Generating config: /usr/odp/current/hive-server2/conf/core-site.xml

2024-02-22 12:33:36,350 - File['/usr/odp/current/hive-server2/conf/core-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,364 - XmlConfig['hive-site.xml'] {'group': 'ubuntu', 'conf_dir': '/usr/odp/current/hive-server2/conf/', 'mode': 0644, 'configuration_attributes': {u'hidden': {u'javax.jdo.option.ConnectionPassword': u'HIVE_CLIENT,CONFIG_DOWNLOAD'}}, 'owner': 'hive', 'configurations': ...}

2024-02-22 12:33:36,367 - Generating config: /usr/odp/current/hive-server2/conf/hive-site.xml

2024-02-22 12:33:36,367 - File['/usr/odp/current/hive-server2/conf/hive-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0644, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,427 - Writing File['/usr/odp/current/hive-server2/conf/hive-site.xml'] because contents don't match

2024-02-22 12:33:36,429 - File['/usr/odp/current/hive-server2/conf//hive-env.sh'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0755}

2024-02-22 12:33:36,429 - Writing File['/usr/odp/current/hive-server2/conf//hive-env.sh'] because contents don't match

2024-02-22 12:33:36,429 - Directory['/etc/security/limits.d'] {'owner': 'root', 'create_parents': True, 'group': 'root'}

2024-02-22 12:33:36,431 - File['/etc/security/limits.d/hive.conf'] {'content': Template('hive.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2024-02-22 12:33:36,431 - File['/usr/lib/ambari-agent/DBConnectionVerification.jar'] {'content': DownloadSource('http://ambari.com:9090/resources/DBConnectionVerification.jar'), 'mode': 0644}

2024-02-22 12:33:36,431 - Not downloading the file from http://ambari.com:9090/resources/DBConnectionVerification.jar, because /var/lib/ambari-agent/tmp/DBConnectionVerification.jar already exists

2024-02-22 12:33:36,431 - Directory['/var/run/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'ubuntu', 'mode': 0755, 'cd_access': 'a'}

2024-02-22 12:33:36,431 - Directory['/var/log/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'ubuntu', 'mode': 0755, 'cd_access': 'a'}

2024-02-22 12:33:36,431 - Directory['/var/lib/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'ubuntu', 'mode': 0755, 'cd_access': 'a'}

2024-02-22 12:33:36,432 - File['/var/lib/ambari-agent/tmp/start_hiveserver2_script'] {'content': Template('startHiveserver2.sh.j2'), 'mode': 0755}

2024-02-22 12:33:36,434 - File['/usr/odp/current/hive-server2/conf/hadoop-metrics2-hiveserver2.properties'] {'content': Template('hadoop-metrics2-hiveserver2.properties.j2'), 'owner': 'hive', 'group': 'ubuntu', 'mode': 0600}

2024-02-22 12:33:36,434 - XmlConfig['hiveserver2-site.xml'] {'group': 'ubuntu', 'conf_dir': '/usr/odp/current/hive-server2/conf/', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': {u'hive.metastore.metrics.enabled': u'true', u'hive.security.authorization.enabled': u'false', u'hive.service.metrics.reporter': u'HADOOP2', u'hive.service.metrics.hadoop2.component': u'hiveserver2', u'hive.server2.metrics.enabled': u'true'}}

2024-02-22 12:33:36,438 - Generating config: /usr/odp/current/hive-server2/conf/hiveserver2-site.xml

2024-02-22 12:33:36,438 - File['/usr/odp/current/hive-server2/conf/hiveserver2-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'ubuntu', 'mode': 0600, 'encoding': 'UTF-8'}

2024-02-22 12:33:36,440 - Called copy_to_hdfs tarball: mapreduce

2024-02-22 12:33:36,440 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:36,441 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:36,441 - Source file: /usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz , Dest file in HDFS: /odp/apps/1.2.2.0-46/mapreduce/mapreduce.tar.gz

2024-02-22 12:33:36,441 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:36,441 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:36,441 - HdfsResource['/odp/apps/1.2.2.0-46/mapreduce'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2024-02-22 12:33:36,442 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/mapreduce?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpmafiEp 2>/tmp/tmpJY3zLf''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:36,458 - call returned (0, '')

2024-02-22 12:33:36,458 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16423,"group":"ubuntu","length":0,"modificationTime":1708598632002,"owner":"ubuntu","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:36,459 - HdfsResource['/odp/apps/1.2.2.0-46/mapreduce/mapreduce.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'group': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-02-22 12:33:36,459 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/mapreduce/mapreduce.tar.gz?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpVAiYlM 2>/tmp/tmpuX9xr6''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:36,474 - call returned (0, '')

2024-02-22 12:33:36,474 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1708593881581,"blockSize":134217728,"childrenNum":0,"fileId":16424,"group":"ubuntu","length":800695408,"modificationTime":1708593888563,"owner":"ubuntu","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2024-02-22 12:33:36,474 - DFS file /odp/apps/1.2.2.0-46/mapreduce/mapreduce.tar.gz is identical to /usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz, skipping the copying

2024-02-22 12:33:36,474 - Will attempt to copy mapreduce tarball from /usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz to DFS at /odp/apps/1.2.2.0-46/mapreduce/mapreduce.tar.gz.

2024-02-22 12:33:36,474 - Called copy_to_hdfs tarball: tez

2024-02-22 12:33:36,474 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:36,474 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:36,474 - Source file: /usr/odp/1.2.2.0-46/tez/lib/tez.tar.gz , Dest file in HDFS: /odp/apps/1.2.2.0-46/tez/tez.tar.gz

2024-02-22 12:33:36,475 - Preparing the Tez tarball...

2024-02-22 12:33:36,475 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:36,475 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:36,475 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:36,475 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:36,475 - Extracting /usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz to /var/lib/ambari-agent/tmp/mapreduce-tarball-Nmp_Dz

2024-02-22 12:33:36,475 - Execute[('tar', '-xf', u'/usr/odp/1.2.2.0-46/hadoop/mapreduce.tar.gz', '-C', '/var/lib/ambari-agent/tmp/mapreduce-tarball-Nmp_Dz/')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2024-02-22 12:33:42,186 - Extracting /usr/odp/1.2.2.0-46/tez/lib/tez.tar.gz to /var/lib/ambari-agent/tmp/tez-tarball-33Ib99

2024-02-22 12:33:42,186 - Execute[('tar', '-xf', u'/usr/odp/1.2.2.0-46/tez/lib/tez.tar.gz', '-C', '/var/lib/ambari-agent/tmp/tez-tarball-33Ib99/')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2024-02-22 12:33:42,643 - Execute[('cp', '-a', '/var/lib/ambari-agent/tmp/mapreduce-tarball-Nmp_Dz/hadoop/lib/native', '/var/lib/ambari-agent/tmp/tez-tarball-33Ib99/lib')] {'sudo': True}

2024-02-22 12:33:42,726 - Directory['/var/lib/ambari-agent/tmp/tez-tarball-33Ib99/lib'] {'recursive_ownership': True, 'mode': 0755, 'cd_access': 'a'}

2024-02-22 12:33:42,727 - Creating a new Tez tarball at /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz

2024-02-22 12:33:42,727 - Execute[('tar', '-zchf', '/tmp/tmpwfXAfX', '-C', '/var/lib/ambari-agent/tmp/tez-tarball-33Ib99', '.')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2024-02-22 12:33:49,968 - Execute[('mv', '/tmp/tmpwfXAfX', '/var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz')] {}

2024-02-22 12:33:50,182 - HdfsResource['/odp/apps/1.2.2.0-46/tez'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2024-02-22 12:33:50,183 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/tez?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpJlnT_v 2>/tmp/tmpsBGxqY''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,198 - call returned (0, '')

2024-02-22 12:33:50,198 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16431,"group":"ubuntu","length":0,"modificationTime":1708596909969,"owner":"ubuntu","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,198 - HdfsResource['/odp/apps/1.2.2.0-46/tez/tez.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'source': '/var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'group': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-02-22 12:33:50,199 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/tez/tez.tar.gz?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpsDJS5i 2>/tmp/tmprmv1Tk''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,212 - call returned (0, '')

2024-02-22 12:33:50,212 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1708596909969,"blockSize":134217728,"childrenNum":0,"fileId":16432,"group":"ubuntu","length":147278725,"modificationTime":1708596911282,"owner":"ubuntu","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2024-02-22 12:33:50,213 - Not replacing existing DFS file /odp/apps/1.2.2.0-46/tez/tez.tar.gz which is different from /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz, due to replace_existing_files=False

2024-02-22 12:33:50,213 - Will attempt to copy tez tarball from /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz to DFS at /odp/apps/1.2.2.0-46/tez/tez.tar.gz.

2024-02-22 12:33:50,213 - Called copy_to_hdfs tarball: hive

2024-02-22 12:33:50,213 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:50,213 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:50,213 - Source file: /usr/odp/1.2.2.0-46/hive/hive.tar.gz , Dest file in HDFS: /odp/apps/1.2.2.0-46/hive/hive.tar.gz

2024-02-22 12:33:50,213 - HdfsResource['/odp/apps/1.2.2.0-46/hive'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2024-02-22 12:33:50,213 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/hive?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpy05Qzz 2>/tmp/tmpRo5_jV''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,227 - call returned (0, '')

2024-02-22 12:33:50,228 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16433,"group":"ubuntu","length":0,"modificationTime":1708598628859,"owner":"ubuntu","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,228 - HdfsResource['/odp/apps/1.2.2.0-46/hive/hive.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/odp/1.2.2.0-46/hive/hive.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'group': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-02-22 12:33:50,228 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/hive/hive.tar.gz?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpvvyprQ 2>/tmp/tmpKWOUOv''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,242 - call returned (0, '')

2024-02-22 12:33:50,242 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1708598628859,"blockSize":134217728,"childrenNum":0,"fileId":16434,"group":"ubuntu","length":334198509,"modificationTime":1708598631887,"owner":"ubuntu","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2024-02-22 12:33:50,242 - DFS file /odp/apps/1.2.2.0-46/hive/hive.tar.gz is identical to /usr/odp/1.2.2.0-46/hive/hive.tar.gz, skipping the copying

2024-02-22 12:33:50,242 - Will attempt to copy hive tarball from /usr/odp/1.2.2.0-46/hive/hive.tar.gz to DFS at /odp/apps/1.2.2.0-46/hive/hive.tar.gz.

2024-02-22 12:33:50,242 - Called copy_to_hdfs tarball: sqoop

2024-02-22 12:33:50,242 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:50,242 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:50,242 - sqoop-env is not present on the cluster. Skip copying /usr/odp/1.2.2.0-46/sqoop/sqoop.tar.gz

2024-02-22 12:33:50,242 - Called copy_to_hdfs tarball: hadoop_streaming

2024-02-22 12:33:50,242 - Stack Feature Version Info: Cluster Stack=1.2, Command Stack=None, Command Version=1.2.2.0-46 -> 1.2.2.0-46

2024-02-22 12:33:50,242 - Tarball version was calcuated as 1.2.2.0-46. Use Command Version: True

2024-02-22 12:33:50,243 - Source file: /usr/odp/1.2.2.0-46/hadoop-mapreduce/hadoop-streaming.jar , Dest file in HDFS: /odp/apps/1.2.2.0-46/mapreduce/hadoop-streaming.jar

2024-02-22 12:33:50,243 - HdfsResource['/odp/apps/1.2.2.0-46/mapreduce'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2024-02-22 12:33:50,243 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/mapreduce?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmppOXVoW 2>/tmp/tmpzjAesB''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,256 - call returned (0, '')

2024-02-22 12:33:50,256 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16423,"group":"ubuntu","length":0,"modificationTime":1708598632002,"owner":"ubuntu","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,257 - HdfsResource['/odp/apps/1.2.2.0-46/mapreduce/hadoop-streaming.jar'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/odp/1.2.2.0-46/hadoop-mapreduce/hadoop-streaming.jar', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'ubuntu', 'group': 'ubuntu', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2024-02-22 12:33:50,257 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/odp/apps/1.2.2.0-46/mapreduce/hadoop-streaming.jar?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmp8kAIp7 2>/tmp/tmpU119aX''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,270 - call returned (0, '')

2024-02-22 12:33:50,270 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1708598632002,"blockSize":134217728,"childrenNum":0,"fileId":16435,"group":"ubuntu","length":141285,"modificationTime":1708598632022,"owner":"ubuntu","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2024-02-22 12:33:50,270 - DFS file /odp/apps/1.2.2.0-46/mapreduce/hadoop-streaming.jar is identical to /usr/odp/1.2.2.0-46/hadoop-mapreduce/hadoop-streaming.jar, skipping the copying

2024-02-22 12:33:50,270 - Will attempt to copy hadoop_streaming tarball from /usr/odp/1.2.2.0-46/hadoop-mapreduce/hadoop-streaming.jar to DFS at /odp/apps/1.2.2.0-46/mapreduce/hadoop-streaming.jar.

2024-02-22 12:33:50,271 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'hive', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01755}

2024-02-22 12:33:50,271 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmplKlPGH 2>/tmp/tmpaApxLp''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,285 - call returned (0, '')

2024-02-22 12:33:50,285 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":46,"fileId":16440,"group":"hdfs","length":0,"modificationTime":1708600623687,"owner":"hive","pathSuffix":"","permission":"1755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,285 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/query_data/'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'hive', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2024-02-22 12:33:50,286 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/query_data/?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpP0_KOv 2>/tmp/tmp4KDxWm''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,299 - call returned (0, '')

2024-02-22 12:33:50,299 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16441,"group":"hdfs","length":0,"modificationTime":1708600602424,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,299 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/dag_meta'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'hive', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2024-02-22 12:33:50,300 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/dag_meta?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpRuatMU 2>/tmp/tmp7Vt95c''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,313 - call returned (0, '')

2024-02-22 12:33:50,313 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16442,"group":"hdfs","length":0,"modificationTime":1708598632220,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,313 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/dag_data'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'hive', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2024-02-22 12:33:50,314 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/dag_data?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpwXr1OS 2>/tmp/tmpdlnY39''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,327 - call returned (0, '')

2024-02-22 12:33:50,327 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16443,"group":"hdfs","length":0,"modificationTime":1708598632285,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,328 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/app_data'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'owner': 'hive', 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2024-02-22 12:33:50,328 - call['ambari-sudo.sh su ubuntu -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://master1.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/app_data?op=GETFILESTATUS&user.name=ubuntu'"'"' 1>/tmp/tmpwWnbzu 2>/tmp/tmpdqG2A1''] {'logoutput': None, 'quiet': False}

2024-02-22 12:33:50,342 - call returned (0, '')

2024-02-22 12:33:50,342 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16444,"group":"hdfs","length":0,"modificationTime":1708598632346,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2024-02-22 12:33:50,342 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/odp/1.2.2.0-46/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://master1.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'ubuntu', 'action': ['execute'], 'hadoop_conf_dir': '/usr/odp/1.2.2.0-46/hadoop/conf', 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp']}

2024-02-22 12:33:50,344 - Directory['/usr/lib/ambari-logsearch-logfeeder/conf'] {'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2024-02-22 12:33:50,344 - Generate Log Feeder config file: /usr/lib/ambari-logsearch-logfeeder/conf/input.config-hive.json

2024-02-22 12:33:50,344 - File['/usr/lib/ambari-logsearch-logfeeder/conf/input.config-hive.json'] {'content': Template('input.config-hive.json.j2'), 'mode': 0644}

2024-02-22 12:33:50,344 - Ranger Hive Server2 plugin is not enabled

2024-02-22 12:33:50,344 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpn67x1F 2>/tmp/tmpUDp6ht''] {'quiet': False}

2024-02-22 12:33:50,353 - call returned (1, '')

2024-02-22 12:33:50,353 - Execution of 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpn67x1F 2>/tmp/tmpUDp6ht' returned 1. cat: /var/run/hive/hive-server.pid: No such file or directory

2024-02-22 12:33:50,353 - get_user_call_output returned (1, u'', u'cat: /var/run/hive/hive-server.pid: No such file or directory')

2024-02-22 12:33:50,354 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'hive --config /usr/odp/current/hive-server2/conf/ --service metatool -listFSRoot' 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v 'hdfs://master1.com:8020' | head -1'] {}

2024-02-22 12:33:55,773 - call returned (0, '12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from ')

2024-02-22 12:33:55,774 - Execute['hive --config /usr/odp/current/hive-server2/conf/ --service metatool -updateLocation hdfs://master1.com:8020 12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from'] {'environment': {'PATH': u'/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin:/var/lib/ambari-agent:/usr/odp/current/hive-server2/bin:/usr/odp/1.2.2.0-46/hadoop/bin'}, 'user': 'hive'}

Command failed after 1 tries

Created 02-23-2024 01:55 AM


Hi @rizalt,

From the shared stack trace, it looks like you are using ODP. If that is the case, note that ODP is not a Cloudera product; I suggest asking in the ODP community for help with this issue.

Created 02-22-2024 07:42 AM


@rizalt Welcome to the Cloudera Community!

To help you get the best possible solution, I have tagged our Hive experts @Shmoo @mszurap who may be able to assist you further.

Please keep us updated on your post, and we hope you find a satisfactory solution to your query.

Regards,

Diana Torres, Senior Community Moderator

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.


Created 02-22-2024 03:18 PM


Thank you, Diana.

Created 02-22-2024 11:39 PM


Can someone take a look at this issue and advise please? Thank you very much.

Created 04-03-2024 01:09 AM


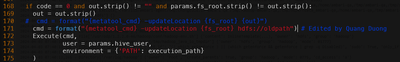

@rizalt I hit the same bug when deploying the ODP stack with Ambari.

After debugging, I found a bug in the ambari-agent script code that runs when Ambari tries to start HiveServer2.

Problem: The code below stores the command output in the {out} variable, which is then used as the old HDFS location, but the command returns a string that is not a valid HDFS URI:

```python
# hive_service.py, check_fs_root(), lines 163-166
metatool_cmd = format("hive --config {conf_dir} --service metatool")
cmd = as_user(format("{metatool_cmd} -listFSRoot", env={'PATH': execution_path}), params.hive_user) \
      + format(" 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v '{fs_root}' | head -1")
code, out = shell.call(cmd)
```
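The pipeline above only discards stderr (`2>/dev/null`), so a Hadoop log line that is written to stdout and happens to contain `hdfs://` can survive every filter. The minimal Python sketch below emulates each stage of the pipeline to show how that happens. It assumes metatool's stdout looked roughly like the sample lines: the DEBUG text up to `from ` is copied from the agent log, while the jar path after it and the warehouse location are made-up placeholders. `grep -v` is approximated as a plain substring test.

```python
# Emulates the check_fs_root() shell pipeline in pure Python:
#   ... -listFSRoot 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' \
#       | grep -v '{fs_root}' | head -1


def emulate_list_fs_root_filter(lines, fs_root):
    """Return what the pipeline would hand back as `out` ("" if nothing)."""
    for line in lines:
        if "hdfs://" not in line:            # grep hdfs://
            continue
        cut = "/".join(line.split("/")[:3])  # cut -f1,2,3 -d '/'
        if fs_root in cut:                   # grep -v '{fs_root}' (substring approximation)
            continue
        return cut                           # head -1: first surviving line wins
    return ""


FS_ROOT = "hdfs://master1.com:8020"

# Assumed metatool stdout. The jar path and warehouse path are hypothetical.
sample_stdout = [
    "12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = "
    "class org.apache.hadoop.hdfs.DistributedFileSystem from "
    "/usr/odp/1.2.2.0-46/hadoop-hdfs/hadoop-hdfs-client.jar",
    "hdfs://master1.com:8020/warehouse/tablespace/managed/hive",
]

if __name__ == "__main__":
    out = emulate_list_fs_root_filter(sample_stdout, FS_ROOT)
    print(repr(out.strip()))
```

With these inputs the legitimate location is dropped by the `grep -v` stage (it contains the current root), while the DEBUG line is cut after its third `/`-separated field and returned, yielding the same `... DistributedFileSystem from ` fragment that ends up in the failing `-updateLocation` command.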

2024-04-03 07:40:48,317 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'hive --config /usr/odp/current/hive-server2/conf/ --service metatool -listFSRoot' 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v 'hdfs://vm-ambari.internal.cloudapp.net:8020' | head -1'] {}

2024-04-03 07:40:58,721 - call returned (0, '07:40:53.268 [main] DEBUG org.apache.hadoop.fs.FileSystem - hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from ')

To fix:

Step 1: Edit line 170 of "/var/lib/ambari-agent/cache/stacks/ODP/1.0/services/HIVE/package/scripts/hive_service.py" as shown below. I hard-coded the old location to a valid placeholder URI so that -updateLocation no longer receives the invalid value, which effectively bypasses the location update:

```python
# Original line 170, commented out:
# cmd = format("{metatool_cmd} -updateLocation {fs_root} {out}")
cmd = format("{metatool_cmd} -updateLocation {fs_root} hdfs://oldpath")
```

See the attached screenshot of the edited file.

Step 2: Restart the Ambari agent:

```shell
sudo ambari-agent restart
```

Step 3: Restart HiveServer2 from Ambari.

The service then started successfully.
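Hard-coding `hdfs://oldpath` gets HiveServer2 running, but while that edit stays in place the metastore locations are never updated, even on a cluster whose NameNode address really did change. A hedged alternative, assuming you are willing to patch `check_fs_root()` yourself, is to accept `out` only when it looks like a bare HDFS root. The helper below is a sketch; `pick_old_fs_root` is a hypothetical name, not part of Ambari or ODP. It uses `re.match` with `\Z` instead of `re.fullmatch` so it should also run on Python 2, which the `u''` strings in the agent log suggest the agent uses.

```python
import re

# Hypothetical helper (not part of Ambari/ODP): accept only a bare HDFS root
# such as "hdfs://host:8020" or an HA nameservice like "hdfs://mycluster".
_HDFS_ROOT_RE = re.compile(r"hdfs://[A-Za-z0-9._-]+(:[0-9]+)?\Z")


def pick_old_fs_root(out, fs_root):
    """Return the old FS root to pass to -updateLocation, or None to skip it."""
    candidate = out.strip()
    if not _HDFS_ROOT_RE.match(candidate):
        return None  # empty output or noise such as a DEBUG log line
    if candidate == fs_root.strip():
        return None  # already the current root; nothing to update
    return candidate


if __name__ == "__main__":
    fs_root = "hdfs://master1.com:8020"
    debug_noise = ("12:33:52.326 [main] DEBUG org.apache.hadoop.fs.FileSystem - "
                   "hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from ")
    # "old-nn.example.com" is a made-up previous NameNode address.
    for out in (debug_noise, "hdfs://old-nn.example.com:8020\n", fs_root, ""):
        print(repr(pick_old_fs_root(out, fs_root)))
```

In `check_fs_root()` you would then run the original `-updateLocation {fs_root} {out}` command only when the helper returns a value, passing that value in place of `out`, and skip the `Execute` otherwise. Treat this as an untested sketch against the ODP stack scripts.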
