How Clean HDFS in ambari?

- Labels: Apache Ambari

Created on 06-01-2016 10:04 AM - edited 08-19-2019 04:07 AM

Hi, I've been getting started with Ambari and the Hortonworks Sandbox, but I have a problem.

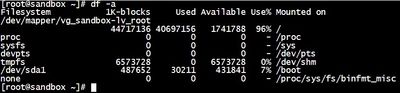

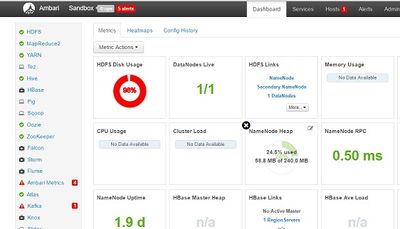

I've been developing some MapReduce jobs and Pig scripts to test the framework, and I always run rm on the result output so I don't waste disk space. Over time (1 month), though, the HDFS disk has become full according to the Ambari metrics, and I don't recover any space no matter how many files I remove.

Created 06-01-2016 10:33 AM

If you just run rm, you are actually moving your data to the Trash. To remove the data from HDFS and free the space, pass the -skipTrash flag to rm.

To delete the data that is already in the trash, you can run:

hdfs dfs -expunge
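For reference, here is a minimal sketch of those commands wrapped in small shell functions. The function names are just illustrative, and the path in the usage note is a hypothetical placeholder, not something from this thread. As far as I know, -expunge only removes trash checkpoints older than the configured retention interval, so space may not come back immediately.

```shell
#!/usr/bin/env bash
# Sketch: free HDFS space after deleting job output.
# Function names are illustrative; they only wrap standard "hdfs dfs" commands.

# Delete a directory permanently, bypassing the trash.
remove_permanently() {
  hdfs dfs -rm -r -skipTrash "$1"
}

# Ask HDFS to clean up the trash (removes checkpoints older than the
# retention interval and creates a new checkpoint).
empty_trash() {
  hdfs dfs -expunge
}

# Show overall HDFS capacity and usage in human-readable units.
show_hdfs_usage() {
  hdfs dfs -df -h /
}
```

Usage, assuming a hypothetical output directory: `remove_permanently /user/myuser/pig_output`, then `empty_trash`, then `show_hdfs_usage` to check whether the used space went down.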

Created on 06-03-2016 08:17 AM - edited 08-19-2019 04:07 AM

Hi, thanks for the response. I've tried it, but it doesn't work. The problem is that the disk keeps filling up while the machine is on, even though I'm not storing any results. I think it may be related to where logs are stored: every day the metrics show about 1% more space used even though I don't use the machine. It is now at 99%; yesterday it was at 98%, as the picture shows.

Created 06-03-2016 06:59 PM

@BRivas garriv

It takes some manual effort, but first change the working directory to / (cd /).

Then run du -h on every folder to find its disk usage (for example, "du -h var" or "du -h usr").

Locate the folder that is taking up all the disk space and try to delete irrelevant files from it.
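The steps above can be sketched as a small helper that lists the immediate subfolders of a directory, largest first, so you can drill down one level at a time. This assumes GNU du (as on the sandbox's Linux); the function name and the default of 10 rows are my own choices, not anything from the thread.

```shell
#!/usr/bin/env bash
# Sketch: list a directory and its immediate subfolders by size, largest first.
# $1 = directory to inspect (defaults to /), $2 = number of rows (defaults to 10).
largest_dirs() {
  local dir="${1:-/}"
  local rows="${2:-10}"
  # -x stays on one filesystem, -k reports sizes in kilobytes,
  # --max-depth=1 prints totals for the directory and its direct children only.
  du -xk --max-depth=1 "$dir" 2>/dev/null | sort -rn | head -n "$rows"
}
```

Usage: run `largest_dirs /` first, then repeat on whichever folder is biggest, for example `largest_dirs /var` and then `largest_dirs /var/log`. The first row is the total for the directory itself.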

Created 06-03-2016 08:03 PM

Thank you so much, it works. The problem was that Atlas was consuming 25 GB of disk space in logs. Do you have any idea why this can happen? I never use the Atlas app.

Created 06-03-2016 08:42 PM
