Cloudera Community > Support > Support Questions

How to Set Up HiveServer2 Authentication with LDAP SSL (No Knox)

Labels: Apache Hive

Created 10-15-2015 04:18 PM

Created on 10-21-2015 04:10 PM - edited 08-19-2019 05:57 AM

Here is how I got it to work.

For tools such as Hive and Beeline to use LDAPS, you need to make a global change to HADOOP_OPTS for the CA certs so that they are loaded by Hadoop in general. This assumes you have imported the (self-signed) certificate into the cacerts truststore located at /etc/pki/java/cacerts.

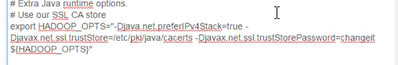

In Ambari, go to HDFS -> Configs -> Hadoop Env Template and add the following:

export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts -Djavax.net.ssl.trustStorePassword=changeit ${HADOOP_OPTS}"
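As a quick sanity check that the edited line really hands the truststore to the JVM, the bash sketch below pulls a `-D` property out of an options string such as `HADOOP_OPTS`. A typo in the `-D` prefix leaves the property silently unset, which this catches. The function name `get_sysprop` is hypothetical, and the parsing assumes single-line options with no quoted values containing spaces.

```shell
#!/usr/bin/env bash
# get_sysprop OPTS NAME (hypothetical helper)
# Print the value of the JVM system property -DNAME=VALUE found in OPTS,
# e.g. $HADOOP_OPTS. Prints nothing and returns 1 if the property is absent.
get_sysprop() {
  local opts="$1" name="$2" tok value="" found=1
  local -a toks
  # Split on whitespace without glob expansion.
  # Assumption: single-line options, no quoted values containing spaces.
  read -r -a toks <<< "$opts"
  for tok in "${toks[@]}"; do
    if [[ "$tok" == "-D${name}="* ]]; then
      value="${tok#"-D${name}="}"
      found=0  # keep scanning: if the property repeats, report the last one
    fi
  done
  if [[ $found -eq 0 ]]; then
    printf '%s\n' "$value"
  fi
  return "$found"
}

# Example: the truststore flags from the hadoop-env line.
opts='-Djava.net.preferIPv4Stack=true -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts -Djavax.net.ssl.trustStorePassword=changeit'
get_sysprop "$opts" javax.net.ssl.trustStore || echo "javax.net.ssl.trustStore is not set"
```

After sourcing your hadoop-env script, `get_sysprop "$HADOOP_OPTS" javax.net.ssl.trustStore` should print the truststore path; no output means HADOOP_OPTS does not carry the flag.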

Note: Components such as Knox and Ranger do not use hadoop-env; each needs its own configuration for LDAP SSL, plus a manual restart.

Why a manual restart? When these services are started from Ambari, there appears to be no way to set user options that Ambari will pass to the Ranger and Knox Java processes at startup. The certificates are only picked up when Ranger and Knox are restarted manually.

Note also that Hive View does not work with LDAP or LDAP SSL.

Created 10-15-2015 04:25 PM

I haven't set up LDAP SSL myself, but here are the properties you can set in hive-site.xml:

- `hive.server2.authentication = LDAP`
- `hive.server2.authentication.ldap.url = <LDAP URL>`
- `hive.server2.authentication.ldap.baseDN = <LDAP Base DN>`
- `hive.server2.use.SSL = true`
- `hive.server2.keystore.path = <KEYSTORE FILE PATH>`
- `hive.server2.keystore.password = <KEYSTORE PASSWORD>`
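For reference, this is roughly how those properties look as `<property>` entries in hive-site.xml. The bash sketch below just prints them; the helper `hive_prop` and every value (LDAP host, base DN, keystore path and password) are hypothetical placeholders. On an Ambari-managed cluster you would normally enter these under the Hive configs in Ambari rather than edit the file by hand, since Ambari regenerates it.

```shell
#!/usr/bin/env bash
# hive_prop NAME VALUE (hypothetical helper)
# Print one hive-site.xml <property> element. Values are inserted verbatim,
# so escape XML special characters (&, <, >) yourself if a value contains them.
hive_prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical values: replace host, base DN and keystore details with your own.
hive_prop hive.server2.authentication LDAP
hive_prop hive.server2.authentication.ldap.url "ldaps://ldap.example.com:636"
hive_prop hive.server2.authentication.ldap.baseDN "ou=people,dc=example,dc=com"
hive_prop hive.server2.use.SSL true
hive_prop hive.server2.keystore.path /etc/hive/conf/hiveserver2.jks
hive_prop hive.server2.keystore.password changeit
```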

Created 10-21-2015 04:00 PM

The keystore.path and keystore.password properties are ONLY for SSL encryption of connections to HiveServer2. They have nothing to do with LDAP SSL.
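To make the distinction concrete: with `hive.server2.use.SSL=true`, clients need a truststore that trusts HiveServer2's own certificate, passed through the Hive JDBC URL parameters `ssl`, `sslTrustStore` and `trustStorePassword`. The LDAP server's certificate is a separate matter, handled on the HiveServer2 side. The bash helper `hs2_ssl_url`, the host name and the truststore path below are hypothetical.

```shell
#!/usr/bin/env bash
# hs2_ssl_url HOST [PORT] [DB] TRUSTSTORE TRUSTSTORE_PASSWORD (hypothetical helper)
# Build a Hive JDBC URL for an HS2 instance running with hive.server2.use.SSL=true.
# The truststore must trust HiveServer2's certificate (the one in its keystore),
# not the LDAP server's certificate.
hs2_ssl_url() {
  local host="$1" port="${2:-10000}" db="${3:-default}" truststore="$4" tspass="$5"
  printf 'jdbc:hive2://%s:%s/%s;ssl=true;sslTrustStore=%s;trustStorePassword=%s\n' \
    "$host" "$port" "$db" "$truststore" "$tspass"
}

# Hypothetical host and truststore path.
url="$(hs2_ssl_url hs2.example.com 10000 default /etc/hive/conf/truststore.jks changeit)"
echo "$url"
# beeline -u "$url" -n <ldap user> -p <ldap password>
```

The `-n`/`-p` credentials given to Beeline are what HiveServer2 then checks against LDAP.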

Created 10-15-2015 04:29 PM

Both LDAP and SSL are covered in the Apache Hive docs:

Created 10-15-2015 05:02 PM

Isn't SSL encryption different from LDAPS for authentication? The key path is different.

Created 10-15-2015 05:52 PM

You're right. For LDAPS, you just need to make sure the LDAP server's SSL certificate is trusted by the JVM that runs HiveServer2 (HS2). If the server uses a self-signed (or otherwise untrusted) certificate, import it into that JVM's cacerts truststore, usually $JAVA_HOME/jre/lib/security/cacerts.
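If you are unsure which cacerts file the HS2 JVM actually reads, the JSSE lookup order is: the `javax.net.ssl.trustStore` system property if set, otherwise `<java.home>/lib/security/jssecacerts` if it exists, otherwise `<java.home>/lib/security/cacerts`. The bash sketch below mirrors that order. The function name is hypothetical, and it assumes `java.home` is `$JAVA_HOME/jre` on a JDK 8 layout and `$JAVA_HOME` otherwise.

```shell
#!/usr/bin/env bash
# effective_truststore JAVA_HOME EXPLICIT_TRUSTSTORE (hypothetical helper)
# EXPLICIT_TRUSTSTORE is the -Djavax.net.ssl.trustStore value, or "" if unset.
# Prints the truststore file a JVM would use, following the JSSE lookup order.
effective_truststore() {
  local java_home="$1" explicit="$2" jre
  if [[ -n "$explicit" ]]; then
    printf '%s\n' "$explicit"
    return
  fi
  # Assumption: JDK 8 keeps the runtime under $JAVA_HOME/jre; JDK 9+ does not.
  if [[ -d "$java_home/jre/lib/security" ]]; then
    jre="$java_home/jre"
  else
    jre="$java_home"
  fi
  # jssecacerts, if present, takes precedence over cacerts.
  if [[ -f "$jre/lib/security/jssecacerts" ]]; then
    printf '%s\n' "$jre/lib/security/jssecacerts"
  else
    printf '%s\n' "$jre/lib/security/cacerts"
  fi
}

# /usr/lib/jvm/java is only a hypothetical fallback when JAVA_HOME is unset.
effective_truststore "${JAVA_HOME:-/usr/lib/jvm/java}" ""
```

One consequence worth checking: if a `jssecacerts` file exists, a certificate imported only into `cacerts` will not be seen by a JVM running without `-Djavax.net.ssl.trustStore`.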

Created 10-27-2015 04:52 PM

@amcbarnett@hortonworks.com Can you confirm that you really needed the -D settings after importing your cert into the truststore? The arguments you added are the defaults.

Created 10-27-2015 05:41 PM

@carter@hortonworks.com Yes, the only way it worked was with the -D settings.

However, I have since been told that for Hadoop to use the cert, we should import it into $JAVA_HOME/jre/lib/security/cacerts instead of /etc/pki/java/cacerts, which we had assumed was the default.

So apparently, if you use any truststore other than $JAVA_HOME/jre/lib/security/cacerts, you need the -D settings.

I haven't had a chance to test this, because the folks I am working with got it working with the -D settings using /etc/pki/java/cacerts and do not want to make any further changes.
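One way to settle whether the `-D` settings are needed is to check whether the two paths point at the same file. With distribution-packaged OpenJDK on RHEL/CentOS, `$JAVA_HOME/jre/lib/security/cacerts` is commonly a symlink to `/etc/pki/java/cacerts`, in which case importing into either path updates the same store. The bash sketch below uses the `-ef` file test (same device and inode, following symlinks); the helper name and the fallback JAVA_HOME are hypothetical.

```shell
#!/usr/bin/env bash
# same_truststore PATH_A PATH_B (hypothetical helper)
# Succeeds when both paths exist and refer to the same file; -ef compares
# device and inode after following symlinks.
same_truststore() {
  [[ "$1" -ef "$2" ]]
}

# Assumes a JDK 8 layout; /usr/lib/jvm/java is a hypothetical fallback.
jdk_cacerts="${JAVA_HOME:-/usr/lib/jvm/java}/jre/lib/security/cacerts"
if same_truststore /etc/pki/java/cacerts "$jdk_cacerts"; then
  echo "same file: the -D trustStore flag is likely redundant (unless a jssecacerts exists)"
else
  echo "different files (or one is missing): keep the -D flags, or import into $jdk_cacerts"
fi
```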