How to do row count using Nifi in source table and target table while ingestion

- Labels: Apache NiFi

Created 09-27-2017 11:38 AM

I am ingesting data from Oracle into Hive using Sqoop. Can I use NiFi to check that the number of rows in the Oracle source table matches the number of rows in the Hive target table after ingestion?

Created on 09-27-2017 12:01 PM - edited 08-17-2019 11:52 PM

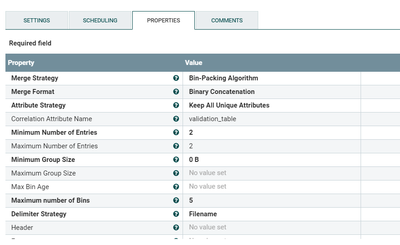

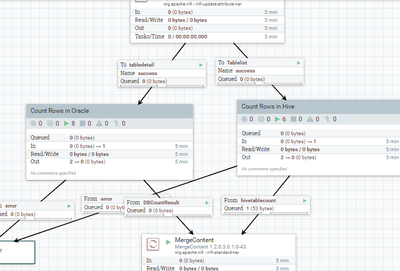

Here I used a validation_table attribute to carry the table name in the flow file.

Create your own logic to count the rows in Oracle and in Hive, then merge the two flows using the MergeContent processor.

I created one process group to count the Oracle table and another to count the Hive table; they add an oracle_cnt attribute and a hive_cnt attribute, respectively, holding the result.

The results are merged into a single flow file by correlating on the attribute set in the Correlation Attribute Name property. Also set the Attribute Strategy to "Keep All Unique Attributes".
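The merge-and-compare idea can be sketched outside NiFi to make the logic concrete. The Python below is only an illustration, not the flow from the screenshots: flow files are modeled as plain attribute dictionaries, the function names are hypothetical, and the sample table names and counts are made up. It assumes validation_table is the correlation attribute, that each bin holds exactly two entries, and that "Keep All Unique Attributes" keeps every attribute unless two flow files disagree on its value.

```python
from collections import defaultdict


def merge_keep_all_unique(flowfiles):
    """Merge attribute dicts; drop any attribute whose values conflict."""
    merged = {}
    conflicted = set()
    for attrs in flowfiles:
        for key, value in attrs.items():
            if key in conflicted:
                continue
            if key in merged and merged[key] != value:
                # Two flow files disagree on this attribute, so it is dropped.
                del merged[key]
                conflicted.add(key)
            else:
                merged[key] = value
    return merged


def merge_by_correlation(flowfiles, correlation_attr, entries_per_bin=2):
    """Group flow files by the correlation attribute and merge full bins."""
    bins = defaultdict(list)
    for attrs in flowfiles:
        bins[attrs[correlation_attr]].append(attrs)
    return [
        merge_keep_all_unique(group)
        for group in bins.values()
        if len(group) == entries_per_bin
    ]


def counts_match(merged):
    """Compare the two counts carried as string attributes."""
    return int(merged["oracle_cnt"]) == int(merged["hive_cnt"])


# Hypothetical sample data: one flow file per side, per table.
flowfiles = [
    {"validation_table": "customers", "oracle_cnt": "1000"},
    {"validation_table": "customers", "hive_cnt": "1000"},
    {"validation_table": "orders", "oracle_cnt": "250"},
    {"validation_table": "orders", "hive_cnt": "249"},
]

results = {
    merged["validation_table"]: counts_match(merged)
    for merged in merge_by_correlation(flowfiles, "validation_table")
}
print(results)
```

In an actual flow, the final comparison would more likely be a RouteOnAttribute processor after MergeContent, for example with an expression such as `${oracle_cnt:equals(${hive_cnt})}`; that step is an assumption and is not shown in the original answer.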

Created 09-27-2017 11:42 AM

Sure, you can do that with the MergeContent processor. If you are comparing only a source and a target, set the processor properties Minimum Number of Entries and Maximum Number of Entries to 2, and set a Correlation Attribute Name to drive the merge.

Created 09-27-2017 11:46 AM

Can you help me with an example of how to do it?

Created 03-03-2020 11:39 PM

Can you please post the template? I am trying to solve the same problem, and it would be a great help.

Created 09-27-2017 04:34 PM

@Aneena Paul How much data is being moved by the Sqoop job? If the volume is not too high, why not simply use NiFi to move the data from Oracle to Hive? NiFi can easily handle volumes in the GB range for daily or hourly jobs. A simple flow would be GenerateTableFetch -> RPG (Remote Process Group) -> ExecuteSQL -> PutHDFS.
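To illustrate why a paged fetch like this should still account for every row, here is a minimal Python sketch of the page arithmetic only. It is not what GenerateTableFetch emits: the real processor generates database-specific SQL, and the function name, row count, and partition size below are hypothetical.

```python
def page_ranges(total_rows, partition_size):
    """Return (offset, row_limit) pairs that cover total_rows exactly once."""
    return [
        (offset, min(partition_size, total_rows - offset))
        for offset in range(0, total_rows, partition_size)
    ]


# Hypothetical table of 25 rows fetched in pages of 10.
pages = page_ranges(25, 10)
print(pages)

# The page sizes add up to the source row count, which is the figure
# a source-versus-target validation would compare against.
print(sum(limit for _, limit in pages))
```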

Created 09-27-2017 04:35 PM

This will give you provenance in NiFi, which confirms how much data (in bytes) was extracted and sent to HDFS, so there is no need for this additional check.