How to fix an error in spark submit ?

Labels: Apache Ambari, Apache Spark

Created 09-29-2017 08:33 AM

Hello,

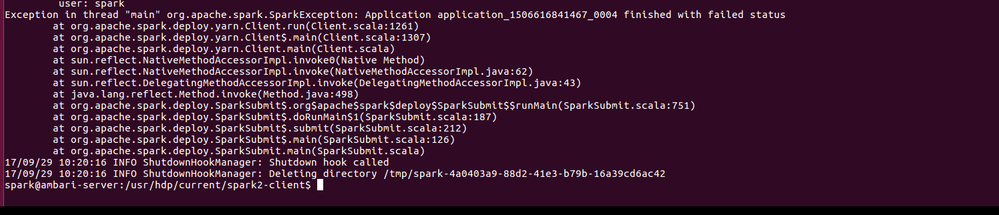

I'm trying to run the following command as the spark user, in the directory /usr/hdp/current/spark2-client:

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn-cluster --deploy-mode cluster --driver-memory 512m --executor-memory 1g --executor-cores 4 --queue default examples/jars/spark-examples*.jar 10

I got the error log mentioned in "log.png".

How can I fix this issue?

P.S.:

1. My cluster is composed of an ambari-server and 2 ambari-agents.

2. When I ran spark-submit before adding the second node, there were no issues.

Created 10-02-2017 02:04 AM

Hi @raouia,

Can you please look at the YARN application log (application_XXX_XXX)? It will have detailed information about the root cause of the failure.

On the other hand, judging from the symptom, this looks like a library configuration problem. Can you please make sure that all the Spark libraries are also available on the node you added?

Hope this helps!

Created 10-03-2017 08:29 AM

I've explained the logs I got in the following link.

So, how can I fix this problem?

Created 10-03-2017 08:36 AM

Hi @raouia,

The error is an unknown host exception (ambari-agent1/2), which means the nodes can't be resolved by that name.

If you don't have fully qualified domain names set up in DNS, you can update your /etc/hosts file with the respective IP addresses so that the hosts can be resolved.

That is, append the following to /etc/hosts:

<ip of ambari-agent1> ambari-agent1

<ip of ambari-agent2> ambari-agent2

Do this on all the hosts, so that every host can discover the others.
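
To confirm that the /etc/hosts change took effect, you could run a quick resolution check on each node. This is only a minimal sketch: the function name unresolved_hosts is made up for illustration, and it only asks the local resolver (DNS or /etc/hosts) whether each name maps to an IP address. It does not test that YARN or Spark can actually reach the host.

```python
import socket


def unresolved_hosts(hostnames):
    """Return the hostnames that this machine cannot resolve to an IP address.

    Hypothetical helper for illustration; it is not part of Spark or Ambari.
    """
    missing = []
    for name in hostnames:
        try:
            # Uses the system resolver, so /etc/hosts entries are honoured.
            socket.gethostbyname(name)
        except OSError:
            # socket.gaierror (a subclass of OSError) is raised when the
            # name cannot be resolved.
            missing.append(name)
    return missing
```

For example, calling unresolved_hosts(["ambari-agent1", "ambari-agent2"]) on every node should return an empty list once the entries are in place. Any name it returns is still unknown on that node.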