Support Questions

- Cloudera Community

How to keep data locality after a HBase RegionServers rolling restart?

Labels: Apache Ambari, Apache HBase

Created 02-14-2016 12:32 PM

Hi,

I noticed that after a rolling restart, data locality for the entire cluster drops to 20%, which is bad; for real-time applications this can be a nightmare.

I've read here that we should switch off the balancer before performing a manual rolling restart of HBase. However, I used the Ambari rolling restart, and I didn't see any reference to the balancer in the documentation. Maybe the balancer is not the issue. What is the safest way to perform a rolling restart of all RegionServers while keeping data locality at least above 75%? Is there any option in Ambari that takes care of this before a RegionServer rolling restart?

Another issue I noticed is that some regions split during the rolling restart, even though they are far from full.

Any insights?

Thank you,

Cheers

Pedro

Created 02-14-2016 01:16 PM

@Pedro Gandola Splitting occurs when your regions grow to the maximum size (hbase.hregion.max.filesize) defined in your hbase-site.xml.

http://hbase.apache.org/book.html#disable.splitting

Running a major compaction restores data locality. On a busy system, run major compactions during off-peak hours.

The balancer distributes regions across the cluster and runs every 5 minutes by default; do not turn it off. You can implement your own balancer to replace the default StochasticLoadBalancer class, but that is not recommended unless you know what you're doing.

Another option is to enable read replicas, which essentially duplicate data on a different region server. The secondary replicas are read-only and improve your data availability.

All in all, it's more art than science, and you need to experiment with many HBase properties to get the best result.
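
To make the locality point concrete, here is a minimal sketch of how a locality figure can be computed and why a major compaction brings it back. It is not HBase code: the function name `locality_index`, the host names `rs1` to `rs5`, and the block sizes are all made up for illustration. The one fact it relies on is that HDFS places the first replica of a newly written block on the writer's own DataNode when the writer runs on one.

```python
from typing import List, Tuple

# One HDFS block of a region's store files: (size in bytes, hosts holding a replica).
Block = Tuple[int, List[str]]


def locality_index(server: str, blocks: List[Block]) -> float:
    """Fraction of the region's bytes that have a replica on `server`."""
    total = sum(size for size, _ in blocks)
    if total == 0:
        return 1.0  # nothing to read remotely
    local = sum(size for size, hosts in blocks if server in hosts)
    return local / total


# Hypothetical region written while it was hosted on rs1: every block has a replica there.
blocks_before = [
    (128, ["rs1", "rs2", "rs3"]),
    (128, ["rs1", "rs3", "rs4"]),
    (128, ["rs1", "rs2", "rs5"]),
    (128, ["rs1", "rs5", "rs3"]),
]

# After the restart the region is reopened on rs4; the files do not move with it.
locality_on_rs1 = locality_index("rs1", blocks_before)
locality_on_rs4 = locality_index("rs4", blocks_before)

# A major compaction on rs4 rewrites the store files, so the new blocks
# get their first replica on rs4.
blocks_after_compaction = [
    (256, ["rs4", "rs1", "rs2"]),
    (256, ["rs4", "rs3", "rs5"]),
]
locality_after = locality_index("rs4", blocks_after_compaction)

if __name__ == "__main__":
    print(locality_on_rs1, locality_on_rs4, locality_after)
```

In this toy data the region is fully local on rs1, mostly remote once it reopens on rs4, and fully local again after the rewrite. That matches the drop and recovery described in this thread.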

Created 02-14-2016 01:52 PM

@Pedro Gandola tagging @Enis and @Josh Elser

Created on 02-14-2016 07:30 PM - edited 08-19-2019 01:18 AM

Hi @Artem Ervits,

Thanks for the reply.

I ran a major compaction after the restart and yes, data locality came back to normal, but I'm wondering whether I'm doing something wrong and whether there is a way to keep data locality after restarting a RegionServer. For clusters running real-time load, this makes updating configurations a big deal.

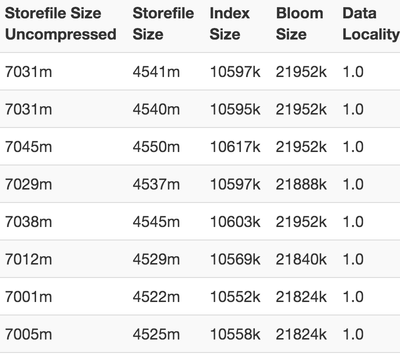

Regarding the region splits, I'm pretty sure that the regions are far from the maximum allowed (hbase.hregion.max.filesize). My max region size is 30 GB, and the regions hold ~4.5 GB compressed (7 GB uncompressed) right now.

This is my current hbase.hregion.max.filesize property:

<property> <name>hbase.hregion.max.filesize</name> <value>32212254720</value> <source>hbase-site.xml</source> </property>

This is a snapshot of my region sizes:

We use a uniform distribution for our rowkeys, so if my region size were larger than hbase.hregion.max.filesize, I would expect to see all or almost all regions splitting, but only 3 of the 150 regions split. I believe I'm doing something wrong during the rolling restart of all region servers, because there might be other conditions that trigger a region split.

Cheers

Thank you

Pedro

Created 02-15-2016 12:06 AM

HDP ships with a 10 GB region size by default. Having more regions, on the order of 100-200 per RS, is recommended. If your max size is 30 GB but you have fewer regions, consider reducing it. How many nodes do you have? The balancer will handle data locality until major compaction happens; I wouldn't mess with that. How often do you expect to apply config changes and do rolling restarts? You can increase the time between RS restarts to minimize impact; you can increase the replication factor, but that may be overkill; or you can enable read replicas and have read-only replicas available for more data availability.

Created 02-15-2016 12:41 AM

Hi @Artem Ervits, I have 15 RS (increasing to 20 soon), 150 regions (pre-split), and a 30 GB max size per region. I have read many different opinions regarding the number of regions and region sizes; in general I've read "fewer regions" and "smaller regions", but the two are incompatible. In this case, I preferred to have somewhat larger regions, even though I know I'm going to pay for that during major compactions. Do you see other issues with exceeding the 10 GB recommendation?

Updating configs does not happen very often, but before I switch off the balancer and perform a rolling restart again, I would like to know the community's opinion, because I don't want to mess up the cluster. Regarding data locality, I agree with you that increasing replication is overkill. I'm going to do a slower rolling restart (e.g. 1 machine per hour); this might give each restarted RS some time to regain data locality. Cheers, thanks
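
For the manual route mentioned above (balancer off, then restart one RegionServer at a time), a sketch of the command sequence might look like the following. It only builds the command strings and does not run anything. The `balance_switch` shell command and the `--restart --reload --debug` options of `bin/graceful_stop.sh` come from the HBase reference guide's rolling restart section; the helper name `rolling_restart_plan`, the install path, and the host names are assumptions for illustration. My understanding is that `--reload` moves the regions back to the server they were on before the restart, which is what helps locality here, and that `graceful_stop.sh` also switches the balancer off on its own; whether this fits an Ambari-managed cluster is something to verify first.

```python
from typing import List


def rolling_restart_plan(hosts: List[str], hbase_home: str = "/path/to/hbase") -> List[str]:
    """Build (not execute) the shell commands for a manual rolling restart.

    `hbase_home` is a placeholder; point it at the real HBase install directory.
    """
    script = f"{hbase_home}/bin/graceful_stop.sh"
    # Stop the balancer so it does not move regions while servers are cycling.
    plan = ['echo "balance_switch false" | hbase shell']
    for host in sorted(hosts):
        # --restart brings the RegionServer back up; --reload moves its regions back to it.
        plan.append(f"{script} --restart --reload --debug {host}")
    # Turn the balancer back on once every server has been restarted.
    plan.append('echo "balance_switch true" | hbase shell')
    return plan


if __name__ == "__main__":
    # Hypothetical host names.
    for command in rolling_restart_plan(["rs2.example.com", "rs1.example.com"]):
        print(command)
```

Printing the plan first and running the commands by hand, with a pause between hosts, keeps the "1 machine per hour" pacing under your control.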

Created 02-15-2016 01:32 AM

@Pedro Gandola I would say if it works for you, then go ahead. It's more trial and error, and tuning usually takes a few tries to get optimal performance. I think you have a great foundation and a good handle on the situation. Keep those questions coming!

Created 02-15-2016 09:09 AM

Hi @Artem Ervits, thank you. Yes, this really is a trial-and-error job :). Cheers

Created 02-15-2016 07:55 PM

Hi Guys,

Regarding the region split issue: it happened because I was using the default policy, IncreasingToUpperBoundRegionSplitPolicy, instead of ConstantSizeRegionSplitPolicy. I believe that because my RSs had a few more regions than they were supposed to have, this caused the unexpected splits.

Cheers

Pedro
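
To illustrate why the split policy matters here, below is a rough sketch of the size check as I recall it from the HBase 1.x source for IncreasingToUpperBoundRegionSplitPolicy: the threshold is the smaller of hbase.hregion.max.filesize and (2 x memstore flush size) x R^3, where R is the number of regions of the same table on that RegionServer. Treat the formula as an assumption and check it against your HBase version. The function name `split_threshold` is made up, and the 128 MiB flush size is the stock default for hbase.hregion.memstore.flush.size, not a value from this cluster.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

# hbase.hregion.max.filesize from the hbase-site.xml quoted in this thread (30 GiB).
MAX_FILE_SIZE = 32212254720

# Assumed default for hbase.hregion.memstore.flush.size.
FLUSH_SIZE = 128 * MIB


def split_threshold(regions_of_table_on_server: int,
                    flush_size: int = FLUSH_SIZE,
                    max_file_size: int = MAX_FILE_SIZE) -> int:
    """Store size (bytes) above which a region becomes a split candidate."""
    r = regions_of_table_on_server
    if r == 0 or r > 100:
        return max_file_size
    initial_size = 2 * flush_size
    return min(max_file_size, initial_size * r ** 3)


if __name__ == "__main__":
    for count in range(1, 6):
        print(count, split_threshold(count) / GIB, "GiB")
```

Under these assumptions, a RegionServer holding only one or two regions of the table has a threshold of 256 MiB or 2 GiB, well below the ~4.5 GB regions described earlier. A server that has just come back from a restart holds few regions for a while, which would be consistent with a handful of regions splitting during the rolling restart. ConstantSizeRegionSplitPolicy skips the R^3 term and only compares against hbase.hregion.max.filesize.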

Created 02-15-2016 08:42 PM

@Pedro Gandola good find, glad you were able to identify this.