How to limit Kudu's memrowset

Labels: Apache Impala, Apache Kudu

Created on 07-10-2019 03:07 PM - edited 09-16-2022 07:30 AM

Hi,

We are testing heavy writes on Kudu 1.8 as shipped with CDH 6.1.1. We currently have around 350 "hot replicas" on one t-server. While we are ingesting data, the memory consumed by each tablet keeps increasing, and eventually the total memory used exceeds the memory limit we give to Kudu.

From the mem-tracker, some replicas' memrowsets use as much as 500 MB. Does that mean we need to provide up to 350 * 500 MB = 175 GB for each t-server? Is there any way or config to limit or throttle the memory consumed by a memrowset? (The limit shown in the mem-tracker UI is "none"; see the screenshot below.) Any suggestions will be appreciated, thanks!
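
The worst-case estimate in the question can be written out as a small calculation. This is only a sketch of the arithmetic, assuming every hot replica's memrowset peaks at 500 MB at the same moment and using decimal units (1 GB = 1000 MB); the function name is hypothetical and not part of Kudu.

```python
def worst_case_memrowset_mb(hot_replicas: int, peak_mb_per_replica: int) -> int:
    """Upper bound on memrowset memory if every replica peaks simultaneously."""
    return hot_replicas * peak_mb_per_replica


# Figures taken from the question: 350 hot replicas, up to 500 MB each.
total_mb = worst_case_memrowset_mb(350, 500)
print(f"{total_mb} MB = {total_mb / 1000:.0f} GB")
```

This is an upper bound rather than a prediction: it only holds if all replicas reach their peak together.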

Created 07-10-2019 03:20 PM

As a tserver approaches the memory limit, it'll apply backpressure on incoming writes, forcing them to slow down. This backpressure (and the limiting mechanism in general) is process-wide; there's no way to customize it for a particular tablet or memrowset.

If you let your write job run for a while, the memory usage should eventually stabilize. If it doesn't, please share a heap sample as per the instructions found here.

Created 07-10-2019 03:32 PM

Thanks for the reply, Adar! It seems the backpressure you mentioned does not take place before the t-server hits the memory limit (Service unavailable: Soft memory limit exceeded). It then starts to reject incoming writes, which brings down the ingestion rate a lot. That is not the backpressure you mentioned, right?

I will try to paste a heap sample here.

Created 07-10-2019 03:43 PM

Besides the limit itself, there are two additional knobs you can experiment with: --memory_limit_soft_percentage (defaults to 80%) and --memory_pressure_percentage (defaults to 60%).

Created 07-10-2019 05:00 PM

Hi Adar,

I have made another test with following setting:

--memory_limit_hard_bytes=80530636800 (75G) --memory_limit_soft_percentage=85 --memory_limit_warn_threshold_percentage=90 --memory_pressure_percentage=70

One of the t-server starts to return:

Service unavailable: Soft memory limit exceeded (at 89.17% of capacity)

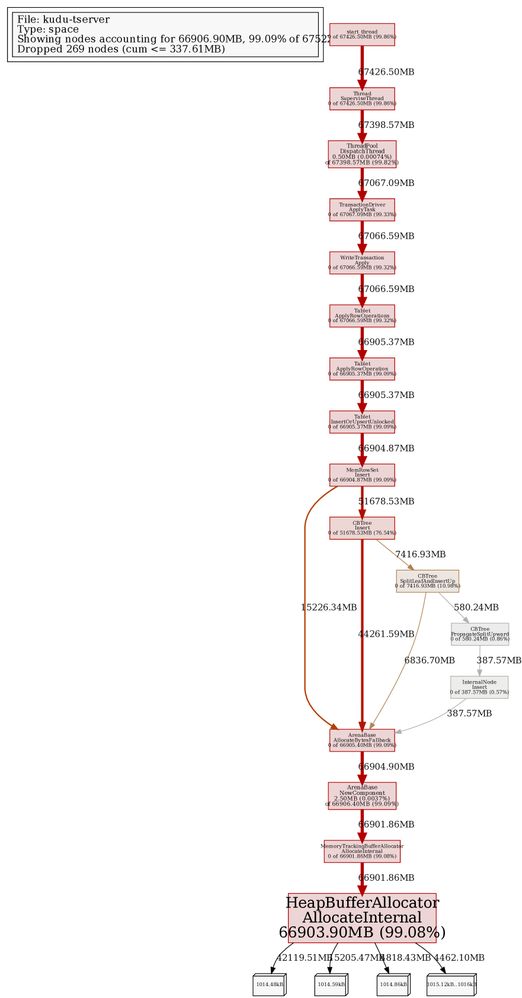

after the ingestion job running for around 20 mins and the ingestion rate drops. Below is the heap sample of that t-server:

Let me know if you have any thoughts
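
To put the settings and the 89.17% figure above in context, the percentages can be converted into absolute thresholds. This is a minimal sketch, assuming each percentage flag is applied to --memory_limit_hard_bytes; the helper name and dictionary keys are hypothetical and only mirror the flags quoted in this post.

```python
GIB = 1024 ** 3


def memory_thresholds(hard_limit_bytes, pressure_pct, soft_pct, warn_pct):
    """Convert percentage flags into byte thresholds relative to the hard limit."""
    return {
        "memory_pressure": hard_limit_bytes * pressure_pct // 100,
        "soft_limit": hard_limit_bytes * soft_pct // 100,
        "warn_threshold": hard_limit_bytes * warn_pct // 100,
    }


# Values from the test above: 75 GiB hard limit, 70 / 85 / 90 percent.
thresholds = memory_thresholds(80530636800, pressure_pct=70, soft_pct=85, warn_pct=90)
for name, value in thresholds.items():
    print(f"{name}: {value / GIB:.2f} GiB")

# The error was reported at 89.17% of capacity, which is above the 85% soft limit.
observed_pct = 89.17
above_soft_limit = observed_pct > 85
print(f"above soft limit: {above_soft_limit}")
```

With these numbers the soft limit sits at 63.75 GiB, so a server at 89.17% of capacity is already past it, which matches the "Soft memory limit exceeded" message.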

Created 07-10-2019 05:07 PM

Isn't this the behavior you expected?

Created 07-10-2019 05:13 PM

The ingestion rate drops by about 60-70 percent. Is this write rejection the backpressure you mentioned? Does this mean I need to provide more memory in order to achieve the ingestion rate without rejection?

Created 07-10-2019 05:27 PM

Is your --fs_data_dirs configured to take advantage of all of the disks on each machine? Is your --maintenance_manager_num_threads configured appropriately? (We recommend 1 thread for every 3 data directories when they're backed by spinning disks.)
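
The rule of thumb above (1 thread for every 3 data directories on spinning disks) can be expressed as a quick calculation. This is only a sketch: the function name is hypothetical, and rounding down with a floor of one thread is an assumption, since the recommendation does not say how to round.

```python
def suggested_maintenance_threads(num_data_dirs: int, dirs_per_thread: int = 3) -> int:
    """Apply the 1-thread-per-3-data-directories rule of thumb.

    Assumption: round down, but never go below one thread.
    """
    return max(1, num_data_dirs // dirs_per_thread)


for dirs in (2, 6, 12):
    print(dirs, "data dirs ->", suggested_maintenance_threads(dirs), "thread(s)")
```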

Created on 07-10-2019 05:39 PM - edited 07-10-2019 05:57 PM

We only have two disks (data directories) per t-server in the current setup, so maintenance_manager_num_threads is only 1. Will this be the bottleneck for writes? Do you have a recommended number of data directories? (I can't find one in the documentation yet.)

We also observe that with fewer tablets, memory usage is lower, so the ingestion rate can be sustained. Do you think reducing the number of tablets (partitions) can also benefit the ingestion rate?

Created 07-17-2019 09:28 AM

If you reduce the number of partitions, you'll generally be reducing the overall ingest speed because you're reducing write parallelism. If your goal is to reduce ingest speed, then by all means explore reducing the number of partitions.