Support Questions (Cloudera Community)

How to make more containers in parallel RUNNING?

Labels: Apache Hive, Apache YARN

Created 02-07-2017 07:30 AM

I have two clusters, UAT and PROD. UAT has fewer resources than PROD.

However, when running HiveQL I notice that there are almost no PENDING tasks on UAT, whereas on PROD tasks stay PENDING for quite a long time, as shown below:

```
hive> select count(1) from humep.ems_barcode_material_ption_h;
Query ID = root_20170111172857_3f3057c0-a819-4b2d-9881-9915f2e80216
Total jobs = 1
Launching Job 1 out of 1
Status: Running (Executing on YARN cluster with App id application_1483672680049_59226)
--------------------------------------------------------------------------------
VERTICES       STATUS   TOTAL  COMPLETED  RUNNING  PENDING  FAILED  KILLED
--------------------------------------------------------------------------------
Map 1 ...      RUNNING   1405        494      108      803       0       0
Reducer 2      INITED       1          0        0        1       0       0
--------------------------------------------------------------------------------
VERTICES: 00/02 [=========>>-----------------] 35% ELAPSED TIME: 36.68 s
--------------------------------------------------------------------------------
```

Is there any way to get more containers RUNNING in parallel? I tried:

- `set hive.exec.parallel=true;`

- `set hive.exec.parallel.thread.number=8;`

but it had no effect.

Thanks for your great help and support

Created 02-07-2017 02:15 PM

A task is PENDING because no container can be allocated to it at that moment. Go to the ResourceManager UI on each cluster and check how many containers can be launched, and how many are actually running while the query executes. From there you can decide whether the constraint comes from resources or from configuration settings.
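If you prefer checking this from a script rather than the ResourceManager UI, here is a minimal sketch that reads the ResourceManager's cluster metrics REST endpoint (`/ws/v1/cluster/metrics`) and makes a rough guess at whether pending containers are held back by resources or by settings. The RM address and the container size you pass in (for example your `hive.tez.container.size`) are assumptions. The heuristic only looks at cluster-wide free memory, so it cannot see per-node fragmentation, and a "settings" result still has to be confirmed against the queue configuration.

```python
import json
import urllib.request


def fetch_cluster_metrics(rm_url: str) -> dict:
    """Return the "clusterMetrics" object from the ResourceManager REST API.

    rm_url is an assumption, e.g. "http://<rm-host>:8088" (8088 is the usual RM web port).
    """
    with urllib.request.urlopen(f"{rm_url}/ws/v1/cluster/metrics", timeout=10) as resp:
        return json.load(resp)["clusterMetrics"]


def likely_constraint(metrics: dict, container_mb: int) -> str:
    """Rough heuristic classifying why containers are pending.

    - "none": nothing is waiting for a container
    - "resources": the cluster has less free memory than one container needs
    - "settings": memory is free but containers still wait, which points at
      queue limits or other scheduler settings (or per-node fragmentation,
      which cluster-wide totals cannot reveal)
    """
    if metrics["containersPending"] == 0:
        return "none"
    if metrics["availableMB"] < container_mb:
        return "resources"
    return "settings"
```

For example, call `likely_constraint(fetch_cluster_metrics("http://rm-host:8088"), 4096)` while the query is running, where `rm-host` and `4096` are placeholders. The metrics cover the whole cluster, so other applications' containers are included in the counts.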

Created 02-08-2017 08:44 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Parallelism is determined by the available cluster capacity: the number of nodes, the amount of memory and CPUs on each node relative to the container size, and possibly the limits set on a queue, if the cluster is divided into multiple queues.
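As a back-of-the-envelope check of these factors, the sketch below estimates how many containers can run at once. It assumes identical nodes with nothing else running, that memory is the binding limit (the CapacityScheduler's default resource calculator schedules on memory only; vCores only matter if `DominantResourceCalculator` is configured), that memory requests are rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`, and that the queue can use `queue_fraction` of the cluster. The function and its parameter names are illustrative, not part of any Hadoop API.

```python
import math


def max_concurrent_containers(
    num_nodes: int,
    node_mem_mb: int,          # yarn.nodemanager.resource.memory-mb on each node
    node_vcores: int,          # yarn.nodemanager.resource.cpu-vcores on each node
    container_mb: int,         # e.g. hive.tez.container.size
    container_vcores: int = 1,
    min_alloc_mb: int = 1024,  # yarn.scheduler.minimum-allocation-mb
    queue_fraction: float = 1.0,
    count_vcores: bool = False,
) -> int:
    """Estimate how many equal-sized containers fit on the cluster at once."""
    # Assumption: each request is rounded up to a multiple of the minimum allocation.
    alloc_mb = math.ceil(container_mb / min_alloc_mb) * min_alloc_mb
    per_node = node_mem_mb // alloc_mb
    if count_vcores:
        # Only relevant when the scheduler also accounts for CPU.
        per_node = min(per_node, node_vcores // container_vcores)
    cluster_total = num_nodes * per_node
    # Assumption: the queue can use at most queue_fraction of the cluster's memory.
    queue_limit = math.floor(num_nodes * node_mem_mb * queue_fraction / alloc_mb)
    return min(cluster_total, queue_limit)
```

Running it with each cluster's actual values, and again with a larger `node_mem_mb`, a smaller `container_mb` or a larger `queue_fraction`, gives a rough idea of how much each option could help. Keep in mind that the Tez ApplicationMaster also occupies one container.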

You can increase the memory available to YARN (if the nodes have room for it), reduce the container size (usually not recommended unless it was previously set higher than the default, or you know the containers will always need less than the current setting), or make sure the queue has more capacity (if applicable).

Compare these settings between the two clusters to see which one might be the culprit.
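A small sketch for that comparison, assuming you have copies of each cluster's Hadoop-style `*-site.xml` files (`<configuration>` / `<property>` / `<name>` / `<value>`): it parses them and lists the capacity-related keys whose values differ. The key list, including the `default` queue name, is an assumption to adapt to your own queues. Values set with `set` inside a Hive session override what is in the files.

```python
import xml.etree.ElementTree as ET

# Illustrative list of capacity-related settings; extend or rename to match your setup.
KEYS = [
    "yarn.nodemanager.resource.memory-mb",
    "yarn.nodemanager.resource.cpu-vcores",
    "yarn.scheduler.minimum-allocation-mb",
    "yarn.scheduler.maximum-allocation-mb",
    "yarn.scheduler.capacity.root.default.capacity",
    "yarn.scheduler.capacity.root.default.maximum-capacity",
    "hive.tez.container.size",
]


def parse_hadoop_conf(xml_text: str) -> dict:
    """Parse a Hadoop-style *-site.xml document into {name: value}."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            conf[name.strip()] = (value or "").strip()
    return conf


def diff_settings(uat: dict, prod: dict, keys=KEYS) -> dict:
    """Return {key: (uat_value, prod_value)} for keys that differ; a missing key shows as None."""
    return {k: (uat.get(k), prod.get(k)) for k in keys if uat.get(k) != prod.get(k)}
```

Because `hive.tez.container.size` lives in `hive-site.xml` and queue capacities in `capacity-scheduler.xml`, merge each cluster's parsed files first, e.g. `{**parse_hadoop_conf(yarn_xml), **parse_hadoop_conf(hive_xml)}`.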

Parallel workloads on the PROD cluster may also reduce the available resources, especially if they run in the same YARN queue.

Created on 02-09-2017 01:37 AM - edited 08-18-2019 04:34 AM

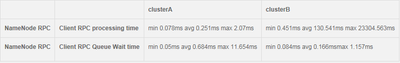

Could anyone take a look at this post? Actually, the problem comes from the following post.

Created 02-09-2017 02:10 PM

I only looked over your original post briefly, but it seems that in cluster B you separate the DataNodes from the NodeManagers. That can increase the cost of transferring data between nodes, because the computation and the data are not on the same node. In general, DataNodes and NodeManagers are colocated to preserve data locality as much as possible. I suggest setting up the cluster that way and checking whether performance recovers.

Created 02-10-2017 03:37 AM

Currently, I have already extended the DataNodes onto the NodeManager hosts by installing disks, so there are now 40 DataNodes and 19 NodeManagers. But I still have the same issue.

Is one DataNode per NodeManager the best practice?

Created 02-10-2017 04:53 AM

Yes. DataNodes and NodeManagers are usually colocated. So if you have 40 DataNodes, deploy 40 NodeManagers on those same 40 hosts. Any data that sits on a node without a NodeManager has to be transferred over the network, which increases the running time.
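To put rough numbers on the 40 DataNode / 19 NodeManager layout, the sketch below lists the DataNode hosts that still lack a NodeManager and estimates the share of blocks with at least one replica on a NodeManager host. It assumes the 19 NodeManagers run on DataNode hosts, replication factor 3, and replicas spread uniformly at random over distinct DataNodes. Real HDFS placement is rack-aware and puts the first replica on the writer's node when possible, so treat the result as an approximation. Host names are hypothetical.

```python
from math import comb


def hosts_missing_nodemanager(datanodes, nodemanagers):
    """DataNode hosts without a NodeManager, i.e. hosts whose data is never processed locally."""
    return sorted(set(datanodes) - set(nodemanagers))


def share_of_blocks_with_local_replica(
    num_datanodes: int, num_colocated: int, replication: int = 3
) -> float:
    """Probability that at least one replica of a block is on a host that also runs a NodeManager.

    Assumes the replicas go to `replication` distinct DataNodes chosen uniformly at random.
    """
    no_local = comb(num_datanodes - num_colocated, replication) / comb(num_datanodes, replication)
    return 1.0 - no_local
```

Under these assumptions `share_of_blocks_with_local_replica(40, 19)` is about 0.87, so roughly 13% of blocks have no replica on any NodeManager host. Running NodeManagers on all 40 hosts brings that to 1.0 and, if those hosts have spare memory and CPU, also adds their capacity to YARN, which raises the number of containers that can run in parallel.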