How to repeat a JSON to maintain uniqueness for the PutHBaseJSON processor

- Labels: Apache Hadoop, Apache HBase

Created 05-10-2018 11:32 PM

I have flattened a JSON document using JOLT. Because the input contains a JSON array, each flattened key now holds a list of values. To use the PutHBaseJSON processor, however, I need scalar values to define the rowkey. Is there a way to repeat the whole JSON once per array element, keeping one value at a time, so that uniqueness is maintained?

Below are my input, transformation and output

Input

{
  "resource": {
    "id": "1234",
    "name": "Resourse_Name"
  },
  "data": [
    {
      "measurement": {
        "key": "5678",
        "value": "status"
      },
      "timestamp": 1517784040000,
      "value": 1
    },
    {
      "measurement": {
        "key": "91011",
        "value": "location"
      },
      "timestamp": 1519984070000,
      "value": 0
    }
  ]
}

Transformation

[
  {
    "operation": "shift",
    "spec": {
      "resource": {
        "id": "resource_id",
        "name": "resource_name"
      },
      //
      // Turn every element of "data" into prefixed top-level keys,
      // like "measurement_key" : "5678"
      "data": {
        "*": {
          // the "&" in "measurement_&" means go up the tree 0 levels,
          // grab what is there and substitute it in
          "measurement": {
            "*": "measurement_&"
          },
          "timestamp": "measurement_timestamp",
          "value": "value"
        }
      }
    }
  }
]

Output

{
  "resource_id" : "1234",
  "resource_name" : "Resourse_Name",
  "measurement_key" : [ "5678", "91011" ],
  "measurement_value" : [ "status", "location" ],
  "measurement_timestamp" : [ 1517784040000, 1519984070000 ],
  "value" : [ 1, 0 ]
}

Expected output

{
  "resource_id" : "1234",
  "resource_name" : "Resourse_Name",
  "measurement_key" : "5678",
  "measurement_value" : "status",
  "measurement_timestamp" : 1517784040000,
  "value" : 1
},
{
  "resource_id" : "1234",
  "resource_name" : "Resourse_Name",
  "measurement_key" : "91011",
  "measurement_value" : "location",
  "measurement_timestamp" : 1519984070000,
  "value" : 0
}
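To make the gap between the current output and the expected output concrete, here is a minimal Python sketch (not NiFi or JOLT code) that unrolls the parallel lists into one record per index while repeating the scalar fields. The function name `unroll` is hypothetical, and the sketch assumes all lists have the same length.

```python
import json

# Output of the JOLT shift from the question: one list per key.
flattened = {
    "resource_id": "1234",
    "resource_name": "Resourse_Name",
    "measurement_key": ["5678", "91011"],
    "measurement_value": ["status", "location"],
    "measurement_timestamp": [1517784040000, 1519984070000],
    "value": [1, 0],
}


def unroll(doc):
    """Turn parallel lists into one dict per index, repeating scalar fields.

    Hypothetical helper; assumes every list in `doc` has the same length.
    """
    list_keys = [k for k, v in doc.items() if isinstance(v, list)]
    scalars = {k: v for k, v in doc.items() if not isinstance(v, list)}
    columns = [doc[k] for k in list_keys]
    # zip(*columns) walks the lists in lockstep, one row per array element.
    return [{**scalars, **dict(zip(list_keys, row))} for row in zip(*columns)]


records = unroll(flattened)
for record in records:
    print(json.dumps(record))
```

Each printed line corresponds to one of the two objects in the expected output, which is the shape PutHBaseJSON needs: one flat JSON document per row.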

Created on 05-11-2018 12:43 AM - edited 08-18-2019 12:40 AM

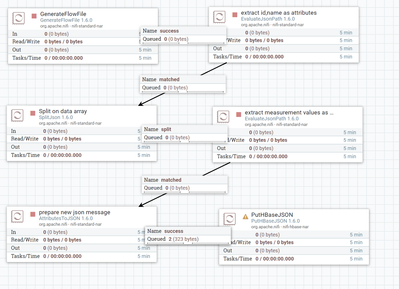

Without using the JOLT transform, you can achieve the expected output with the EvaluateJsonPath and SplitJson processors.

Example:

I used your input JSON in a GenerateFlowFile processor to test this flow.

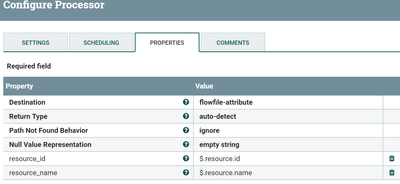

EvaluateJsonPath configs:

Change the following property value:

Destination: flowfile-attribute

Add new properties to the processor:

resource_id: $.resource.id

resource_name: $.resource.name

This extracts the id and name values and assigns them to the resource_id and resource_name attributes.

SplitJson configs:

Because data is an array, split it with the SplitJson processor.

Configure the processor as follows:

JsonPath Expression: $.data

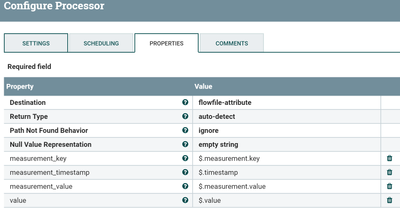

EvaluateJsonPath configs:

Once the data array has been split, there are 2 flowfiles, each carrying the same resource_id and resource_name attributes.

Change the following property value:

Destination: flowfile-attribute

Add new properties:

measurement_key: $.measurement.key

measurement_timestamp: $.timestamp

measurement_value: $.measurement.value

value: $.value

This extracts all the values and keeps them as attributes on the flowfile.

AttributesToJSON processor:

Use this processor to prepare the required message.

Attributes List: resource_id,resource_name,measurement_key,measurement_value,measurement_timestamp,value
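The four processor steps above can be mimicked in plain Python to see what each flowfile ends up containing. This is only an illustration of the data flow, not NiFi code. It assumes that flowfile attributes are strings, so the sketch stringifies every value before building the JSON; check the actual output of AttributesToJSON in your own flow.

```python
import json

# Input JSON from the question.
source = {
    "resource": {"id": "1234", "name": "Resourse_Name"},
    "data": [
        {"measurement": {"key": "5678", "value": "status"},
         "timestamp": 1517784040000, "value": 1},
        {"measurement": {"key": "91011", "value": "location"},
         "timestamp": 1519984070000, "value": 0},
    ],
}

# Same order as the Attributes List configured on AttributesToJSON.
ATTRIBUTES_LIST = [
    "resource_id", "resource_name", "measurement_key",
    "measurement_value", "measurement_timestamp", "value",
]

# First EvaluateJsonPath: $.resource.id and $.resource.name become attributes.
parent_attributes = {
    "resource_id": source["resource"]["id"],
    "resource_name": source["resource"]["name"],
}

messages = []
# SplitJson on $.data: one "flowfile" per array element,
# each inheriting the parent's attributes.
for element in source["data"]:
    attributes = dict(parent_attributes)
    # Second EvaluateJsonPath: per-element values become attributes.
    attributes["measurement_key"] = element["measurement"]["key"]
    attributes["measurement_value"] = element["measurement"]["value"]
    attributes["measurement_timestamp"] = element["timestamp"]
    attributes["value"] = element["value"]
    # AttributesToJSON: build one flat JSON message from the listed attributes.
    # Assumption: attributes are strings, so values are stringified here.
    message = {name: str(attributes[name]) for name in ATTRIBUTES_LIST}
    messages.append(json.dumps(message))

for message in messages:
    print(message)
```

The point of the design is that the resource attributes are captured before the split, so every split flowfile carries them without any extra work.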

This leaves 2 flowfiles that you can feed to the PutHBaseJSON processor, which expects one JSON message at a time. Use a unique value (a combination of attributes, ${UUID()}, etc.) as the rowkey so that you do not overwrite existing data in HBase.

If you want to merge them into one flowfile, use the MergeContent processor with Defragment as the merge strategy and build a valid JSON array containing both messages, so that you can use the PutHBaseRecord processor to process chunks of messages at a time.
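As a rough illustration of what "a valid JSON array of two messages" means, the Python sketch below joins individual JSON messages with a header, a demarcator and a footer. The parameter names are chosen to echo the Header, Demarcator and Footer properties of MergeContent as I understand them; treat that mapping, and the shortened sample messages, as assumptions and verify against your NiFi version.

```python
import json

# Two single-record messages, shortened here for readability (sample data).
messages = [
    '{"resource_id":"1234","measurement_key":"5678"}',
    '{"resource_id":"1234","measurement_key":"91011"}',
]


def merge_as_json_array(fragments, header="[", demarcator=",", footer="]"):
    """Wrap the fragments so that the merged content is one JSON array."""
    return header + demarcator.join(fragments) + footer


merged = merge_as_json_array(messages)
# Parsing the result confirms the merged content is valid JSON.
records = json.loads(merged)
print(len(records))
```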

I have attached my sample flow.xml; save and upload it, then make changes as per your needs.

If the answer addressed your question, click the Accept button below to accept it. That would be a great help to community users looking for a quick solution to this kind of issue.

Created 05-11-2018 11:32 AM

@Shu you are awesome. Thanks for the help; it resolves my problem. I wanted to build the rowkey from a combination of JSON attributes. To achieve that, I used an UpdateAttribute processor and declared the key there as a combination of resource id, metric id and timestamp. I then included this rowkey in AttributesToJSON.
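For readers who want to see the idea outside NiFi, here is a small Python sketch of composing such a rowkey. It assumes "metric id" refers to the measurement_key attribute and that the parts are joined with an underscore; neither detail is stated in the thread, and `make_rowkey` is a hypothetical helper.

```python
def make_rowkey(record,
                fields=("resource_id", "measurement_key", "measurement_timestamp"),
                separator="_"):
    """Join the chosen fields into one rowkey string.

    The field choice and the separator are assumptions for illustration.
    """
    return separator.join(str(record[field]) for field in fields)


record = {
    "resource_id": "1234",
    "measurement_key": "5678",
    "measurement_timestamp": 1517784040000,
    "value": 1,
}

rowkey = make_rowkey(record)
print(rowkey)  # prints 1234_5678_1517784040000
```

Because the timestamp is part of the key, two measurements for the same resource and metric map to different rows as long as their timestamps differ.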