Support Questions

How to replicate a file in NiFi

Labels: Apache NiFi, HDFS

Created 06-06-2022 07:53 AM

I have an issue: I receive a file into NiFi on a single node, but I want to copy it onto multiple nodes.

Has anyone done this before, and what would the process be?

Created 06-13-2022 02:12 AM

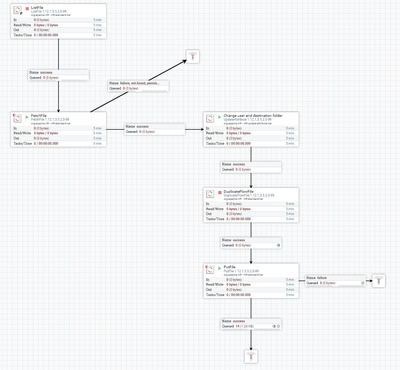

My current setup is shown in the image above.

To reiterate the concept: we receive a single file uploaded to a single data node (1 of 7) and want to process it through NiFi so that an individual copy of that file ends up on every data node. Currently the DuplicateFlowFile processor creates the copies, but they are not being placed on the individual nodes.

Created 06-13-2022 06:38 AM

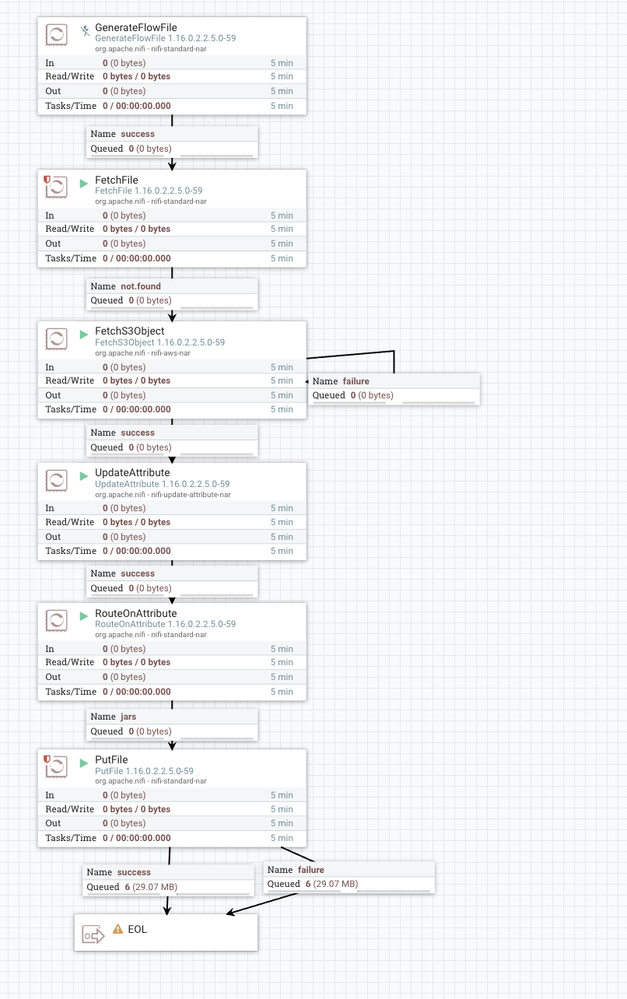

I have done something similar when I needed to deliver jar files to all nodes. It's really a case of "this is not how things are done", but I had no access to the nodes' file systems other than through a flow. That said, it works great! The first processor creates a FlowFile on every node (even when I don't know how many nodes there are); each node then checks for the file and, if it is not found, fetches it and writes it to the file system.
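The per-node logic described above (check whether the file is already present; if not, fetch it and write it) can be sketched outside NiFi in a few lines of Python. This is only an illustration of the idea, not the flow from the screenshot: the function name `ensure_local_copy` and the source/target paths are hypothetical, and it assumes each node can read the source file from some path. Running it on every node makes delivery idempotent, because a node that already has the file does nothing.

```python
import shutil
from pathlib import Path


def ensure_local_copy(source: Path, target: Path) -> bool:
    """Hypothetical helper: copy `source` to `target` only if `target` is missing.

    Returns True if a copy was made, False if the file was already present.
    Meant to run once per node; each node checks its own file system.
    """
    if target.exists():
        # Already delivered on this node; nothing to do.
        return False
    target.parent.mkdir(parents=True, exist_ok=True)
    # Write under a temporary name first, then rename, so a half-written
    # file is never mistaken for a complete one on the next check.
    tmp = target.with_name(target.name + ".partial")
    shutil.copyfile(source, tmp)
    tmp.replace(target)
    return True
```

The write-then-rename step is a design choice of the sketch, not something the original flow is known to do.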

Created 06-14-2022 06:11 AM

@Tryfan

I think the concept of sending a file to one node is what needs to change here. By sending to a single node in the NiFi cluster, you create a single point of failure. What happens if that one node in your 7-node cluster goes down? None of the nodes get that file, and your dataflow suffers an outage.

A better design is to place this file somewhere that all nodes can pull it from.

Maybe a file system mounted on all 7 nodes (GetFile processor)?

Maybe an external SFTP server (GetSFTP processor)?

etc...

Then you build a dataflow in which every node retrieves the file independently as needed.

Thanks,

Matt

Created 06-29-2022 03:51 AM

@MattWho The file comes into the system via a load balancer which, due to other intricacies, is configured to ingest on only one data node. We don't have the option of an SFTP server, so I have to solve this on the canvas.

Created on 06-13-2022 02:49 AM - edited 06-13-2022 02:50 AM

OK, so you pick up the file in NiFi using ListFile/FetchFile, then use UpdateAttribute to change the directory where it will be written, and then use DuplicateFlowFile to create the duplicates.

So far, because ListFile is running on the primary node only (and it should be), the entire downstream flow is also processed on the primary node. That is why you see DuplicateFlowFile creating the duplicates on whichever node is primary in the NiFi cluster. I do not see an issue here.

If you want the FlowFiles redistributed across all NiFi nodes after DuplicateFlowFile, set the load-balance strategy on the queue connection between DuplicateFlowFile and PutFile to "Round Robin". The duplicate FlowFiles will then be distributed among the NiFi nodes one by one, in round-robin fashion, and PutFile will write each one on the node it was distributed to.

One question: you mention 7 HDFS data nodes. Does that mean NiFi is also running on those same 7 data nodes, as a 7-node NiFi cluster? Only in that case will all 7 nodes receive a copy of each file.
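Round robin spreads FlowFiles across nodes; it is not a broadcast that guarantees exactly one copy per node. The toy model below is plain Python, not NiFi's implementation. It assumes only that a round-robin distributor may skip a node that cannot accept data at that moment (for example because of back pressure) and hand the FlowFile to the next node. The function `round_robin` and the node names are hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable, List


def round_robin(flowfiles: Iterable[str], nodes: List[str],
                can_accept: Callable[[str], bool]) -> Counter:
    """Toy round-robin distributor: count how many FlowFiles each node gets.

    A node for which can_accept(node) is False is skipped, and the FlowFile
    goes to the next node in the rotation (an assumption of this model).
    """
    received: Counter = Counter({n: 0 for n in nodes})
    cursor = 0
    for _ in flowfiles:
        # Try each node at most once per FlowFile, starting at the cursor.
        for _attempt in range(len(nodes)):
            node = nodes[cursor % len(nodes)]
            cursor += 1
            if can_accept(node):
                received[node] += 1
                break
        else:
            raise RuntimeError("no node can accept data")
    return received
```

With 7 copies and all 7 nodes accepting, each node receives one copy. If `node3` is skipped (`can_accept=lambda n: n != "node3"`), `node3` receives none and `node1` receives two, which is one way 7 duplicates can end up unevenly placed.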

Created 06-29-2022 03:15 AM

I have a single file arriving on a single data node (one of 7 in a cluster). I need to fetch this file into NiFi and process it through the flow so that a copy is placed on every available data node.

I update the attributes to change the file's location on the destination and use a PutFile to place it there. I have since added a DuplicateFlowFile processor to copy the file 6 times (7 in total, including the original), but even with round robin on the connection, it isn't distributing the copies across the data nodes correctly.

I am now looking at adding a DistributeLoad processor after DuplicateFlowFile and configuring it to route to 7 individual PutFile processors. However, I am unsure how to configure these so that each one writes to a specific data node.

Can I include the hostname in the Directory field?

Created 06-29-2022 06:20 AM

@Tryfan

You mention this file comes in daily. You also mention that this file arrives through a load balancer, so you don't know which node will receive it. This means you can't configure your source processor for "Primary node" only execution, as you have done with the ListFile in your shared sample flow. With Primary node only execution, the elected primary node is the only node that executes that processor, so if the source file lands on any other node, it will not get listed.

You could handle this flow in the following manner:

GetFile ---> (7 success relationships) PostHTTP or InvokeHTTP (7 of these, one configured for each node in your cluster)

ListenHTTP --> UpdateAttribute --> PutFile

In this flow, no matter which node receives your source file, GetFile will consume it. It is then cloned 6 times (so 7 copies exist), with one copy of the FlowFile routed to each of the 7 PostHTTP processors. Each of these targets the ListenHTTP processor listening on one node in your cluster, so the ListenHTTP processors together receive all 7 copies of the original source file (one copy per node). Then use UpdateAttribute to set your username and location info before PutFile places each copy in the desired location on its node.

If you add nodes to or remove nodes from your cluster, you would need to modify this flow accordingly, which is a major downside of such a design. The best solution is therefore still one where the source file is placed somewhere all nodes can retrieve it from, so that it scales automatically.
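For testing outside NiFi, the fan-out step of this design (one POST of the same content to the ListenHTTP processor on every node) can be sketched in Python with the standard library. The host names and port are hypothetical placeholders, and `contentListener` is assumed to be ListenHTTP's default Base Path, so check it against your processor's configuration. Inside NiFi, the 7 PostHTTP/InvokeHTTP processors play the role of the loop.

```python
import urllib.request
from typing import Callable, Dict, List, Union

# Hypothetical placeholders: use your real node host names and the
# Listening Port / Base Path configured on each node's ListenHTTP processor.
NODES = [f"nifi-node{k}.example.com" for k in range(1, 8)]
PORT = 9999
BASE_PATH = "contentListener"  # assumed ListenHTTP default Base Path


def post(url: str, payload: bytes) -> int:
    """POST the payload to url and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={"Content-Type": "application/octet-stream"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status


def fan_out(
    payload: bytes,
    nodes: List[str],
    port: int,
    base_path: str,
    send: Callable[[str, bytes], int] = post,
) -> Dict[str, Union[int, OSError]]:
    """Send one copy of payload to every node, one request per node.

    A failure on one node (urllib's URLError is a subclass of OSError) is
    recorded instead of raised, so the remaining nodes still get their copy.
    """
    results: Dict[str, Union[int, OSError]] = {}
    for node in nodes:
        url = f"http://{node}:{port}/{base_path}"
        try:
            results[node] = send(url, payload)
        except OSError as exc:
            results[node] = exc
    return results
```

A call such as `fan_out(Path("daily_file").read_bytes(), NODES, PORT, BASE_PATH)` (file name hypothetical) sends one copy per node. The node list is hard-coded, which carries the same maintenance burden as the 7 PostHTTP processors: adding or removing a node means editing it.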

Thank you,

Matt
