How to speed up Nifi FlowFile transfer from Process group internal output port to external output port?

Labels: Apache NiFi

Created on 05-02-2017 06:44 AM - edited 08-17-2019 08:02 PM

Hi,

I have a Process Group with an internal output port that has a large queue. When I exit the Process Group and view the queue on the connection from that Process Group's output to another group, it is very small. It seems as though there is a count or time limit on the FlowFiles being transferred from one port to another, even though both ports are within NiFi with no processor in between.

Example:

Process Group A (ExampleA) has an internal output port, ExampleOutputPortA, with a queue of XX,XXX FlowFiles. When you exit ExampleA and view the queue into port ExampleInputPortB, the queue is XXX, which is consistently lower than the queue within ExampleA.

Created 05-02-2017 02:48 PM

A few questions come to mind...

1. What kind of processor is feeding the connection with the large queue inside ExampleA?

2. How large is that queue?

I ask because NiFi uses swapping to limit the JVM heap used by queued FlowFiles.

How swapping is handled is configured in the nifi.properties file:

nifi.queue.swap.threshold=20000
nifi.swap.in.period=5 sec
nifi.swap.in.threads=1
nifi.swap.out.period=5 sec
nifi.swap.out.threads=4

The above shows NiFi defaults.

A few options you can try to improve performance:

1. Set backpressure thresholds on your connections to limit the number of FlowFiles that will queue at any time. Setting the value lower than the swapping threshold will prevent swapping from occurring on the connection. Newer versions of NiFi by default set the FlowFile object threshold on newly created connections to 10,000. Swapping is per connection, not per NiFi instance.

2. Adjust the swap.threshold value to a larger value to prevent swapping. Keep in mind that any FlowFiles not being swapped are held in JVM heap memory. Setting this value too high may result in Out Of Memory (OOM) errors. Make sure you adjust the heap settings for your NiFi in the bootstrap.conf file.

3. Adjust the number of swap-in and swap-out threads.
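
To make option 1 concrete, here is a minimal sketch that reads the swap threshold from a nifi.properties-style text and checks whether a connection's backpressure object threshold sits below it. The helper names `parse_properties` and `avoids_swapping` are hypothetical, and the check is a simplified model of the rule described above (backpressure is a soft limit, so a queue can briefly exceed it), not NiFi's own logic.

```python
# Default swap settings as quoted in this reply.
NIFI_DEFAULTS = """\
nifi.queue.swap.threshold=20000
nifi.swap.in.period=5 sec
nifi.swap.in.threads=1
nifi.swap.out.period=5 sec
nifi.swap.out.threads=4
"""


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def avoids_swapping(backpressure_object_threshold, props):
    """Simplified model (assumption): a connection whose backpressure object
    threshold is below nifi.queue.swap.threshold should not reach swapping."""
    swap_threshold = int(props["nifi.queue.swap.threshold"])
    return backpressure_object_threshold < swap_threshold


props = parse_properties(NIFI_DEFAULTS)
print(avoids_swapping(10000, props))  # default connection threshold
print(avoids_swapping(50000, props))  # threshold raised above the swap threshold
```

With the defaults above, a 10,000-object backpressure threshold stays under the 20,000 swap threshold, while a 50,000-object threshold does not.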

Thanks,

Matt

Created on 05-03-2017 01:20 AM - edited 08-17-2019 08:01 PM

Hi @Matt Clarke,

I don't believe swapping is the issue. My backpressure threshold values are all set to 10,000. Even when my queue is below the swapping threshold, the rate at which FlowFiles are transferred from one Process Group to another is abysmal.

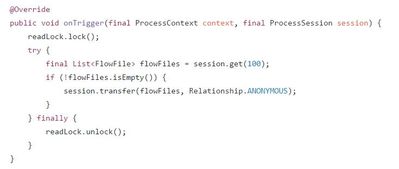

I've been doing some digging and found that FlowFiles from a Process Group are transferred in batches of 100. This appears to come from the source file LocalPort.java. I'm not sure what would be limiting how often these batches run, though.

I think that if I disband the Process Group and remove the NiFi internal port-to-port layer, the latency may go away. But that is a hassle and makes Process Groups redundant.

Created on 05-03-2017 12:44 PM - edited 08-17-2019 08:01 PM

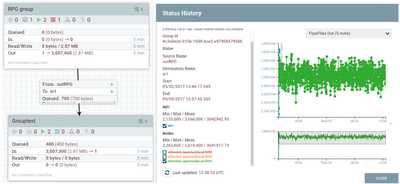

FlowFiles are transferred in batches between process groups, but each transfer only amounts to updating FlowFile records. A transfer should take a fraction of a millisecond to complete, so many transfers should execute per second.

So this raises the question of whether your flow is thread starved, concurrent tasks have been over-allocated across your processors, your NiFi max timer driven thread count is too low, or your disk I/O is very high.

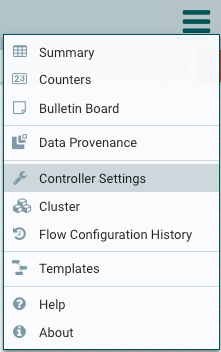

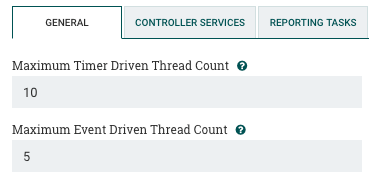

I would start by looking at your "Max Timer Driven Thread Count" setting. The default is only 10. By default, every component you add to the NiFi canvas uses timer driven threads. This count restricts how many system threads can be allocated to components at any one time.
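
As a rough analogy for that limit, the sketch below runs many short tasks on a bounded thread pool and records the highest number that ever ran at once. It is a generic Python illustration of a capped pool, not NiFi's scheduler; `peak_concurrency`, `pool_size`, and `component_count` are hypothetical names, with `pool_size` standing in for the thread count setting.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def peak_concurrency(pool_size, component_count, work_seconds=0.01):
    """Run component_count short tasks on a pool of pool_size threads and
    return the highest number of tasks observed running simultaneously."""
    lock = threading.Lock()
    running = 0
    peak = 0

    def component_task():
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        time.sleep(work_seconds)  # stand-in for a component doing its work
        with lock:
            running -= 1

    # Leaving the with-block waits for every submitted task to finish.
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        for _ in range(component_count):
            pool.submit(component_task)
    return peak


# 50 components competing for 10 threads: the peak never exceeds the pool size.
print(peak_concurrency(pool_size=10, component_count=50))
```

However many components want to run, the observed peak cannot exceed the pool size, so the rest wait their turn. That waiting is what thread starvation looks like from the outside.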

I set up a simple 4-CPU VM running a default configuration.

The number of FlowFiles passed through the connection between process group 1 and process group 2 ranged from 7,084/second to 12,200/second.
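
For a sense of scale, the arithmetic below converts those rates into batch transfers per second. It assumes the batch size of 100 reported earlier in this thread and that every transfer moves a full batch; both are assumptions, and `transfers_per_second` is a hypothetical helper.

```python
BATCH_SIZE = 100  # assumption: batch size reported earlier in the thread


def transfers_per_second(flowfiles_per_second, batch_size=BATCH_SIZE):
    """Batch transfers per second needed to sustain a given FlowFile rate,
    assuming every transfer moves a full batch."""
    return flowfiles_per_second / batch_size


for rate in (7084, 12200):
    print(f"{rate} FlowFiles/s -> {transfers_per_second(rate):.2f} transfers/s")
```

Under those assumptions the observed range works out to roughly 71 to 122 port transfers per second.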

Thanks,

Matt

Created on 05-09-2017 02:53 AM - edited 08-17-2019 08:01 PM

Hi @Matt Clarke,

Sorry, I'm new to NiFi and wasn't aware of the controller settings. It seems that increasing the "Maximum Timer Driven Thread Count" increased the transfer rate between Process Groups. This is confusing, since I thought the FlowFile transfer was event driven.

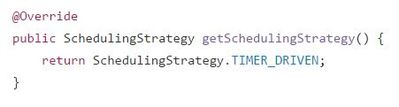

Update: From the source code, it seems that the FlowFile transfer is timer driven.

Thanks a lot for the information by the way.

Created 05-09-2017 12:45 PM

Glad you were able to get the performance improvement you were looking for by allowing your NiFi instance access to additional system threads.

If this answer helped you get to your solution, please mark it as accepted.

Thank you,

Matt