Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: How to split the output flow file from Execute...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to split the output flow file from ExecuteSQL (query perform joins)?

- Labels:

-

Apache NiFi

Created 09-03-2020 07:31 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello.

I'm using an ExecuteSQL Processor to extract data from Oracle DB. The query has multiple joins and returns a large number of fields.

The problem is that ExecuteSQL Processor returns a single flow file (avro format) that is huge.

I want to split the flow file (based in a number of rows, for example) and then merge them at the proper moment.

I have read about QueryDatabaseTable and GenerateFetchTable which are processors that can split the output flow files but looks like these processors aren't able to perform joins..

Does anyone knows a workaround?

Created 09-10-2020 12:54 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe I have found a solution..

I'm gonna use the ExecuteSQL to do a "select insert" query.. The query will perform the joins and load the data into a table. Then the QueryDatabaseTable will read from the new table.. That way I'll be able to use the "Max Rows Per Floe File" property.

Created 09-03-2020 12:10 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

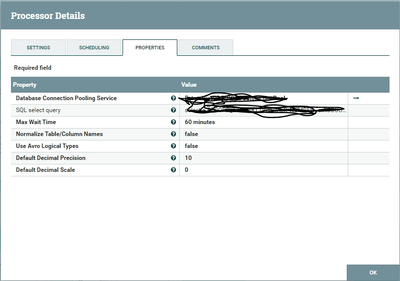

At ExecuteSQL Processor is a property named by "Max Rows Per Flow File".

There you can set how much rows each Flow File should be contain and later you can merge them like you wanted cause the flow files get an fragment attribute.

Created 09-03-2020 01:21 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no such a option at ExecuteSQL..

Perhaps you're using a newer version of NiFi: Mine is 1.5.0.3.1.2.0-7

Created 09-04-2020 12:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should use ListDatabaseTable and generatetablefetch to perform an incremental load. If you are joining the tables, you can do a replacetext after generatetablefetch to add the join query and then feed the flowfile to execute sql. You can split the amount of data in generatetablefetch.

OR

You can use splitrecord / splitcontent to split the single avro to multiple smaller files and then use mergecontent to merge them back if required.

Hope this helps. If the comment helps you to find a solution or move forward, please accept it as a solution for other community members

Created 09-04-2020 06:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know if these solutions works for me.

What I really wanna do is make ExecuteSQL work as (for example) SelectHiveQL, which means, ExecuteSQL get only one incoming flow file and sends forward multiple flows file that can be merged.

My real problem is that the ExecuteSQL query sometimes needs to produce a flowfile whose size is too large for the edge machine to process, what ends in error.. so, I need to split the flow file to decrease the pressure on the edge machine...

I've been through the same situation in Hive queries, but I solved using the "Max Rows Per Flow File" property and then merging the flowfiles.

Created 09-07-2020 04:33 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why not use the 2nd option i said above....Use splitcontent or splitrecord and then merge it later whenever you want it.

Created 09-08-2020 07:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My point is, the error occurs when the ExecuteSQL is running. The cause is: ExecuteSQL needs to create a huge flow file that the edge machine doest not have enough processing power to create.. Your solutions looks good, but it would split the flow file after it was produced by the ExecuteSQL, your solution takes action in a moment when the error has already occurred.

Created 09-08-2020 07:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Then im afraid its difficult to do so. I dont understand how you are feeding the queries to execute sql. Maybe its good to feed executesql with manageable queries. If you are using GenerateTableFetch then it allows you to break a big query into smaller queries like you want and feed it to ExecuteSQL. Hope this helps. Please do post back on how to managed to move forward.

Created 09-08-2020 07:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The process is triggered by a GetSFTP that retrieves a date file.. Then the query uses the date for filtering..

Thanks for your contribution.. As soon as I make progress I'll update you guys.

Created 09-10-2020 12:54 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe I have found a solution..

I'm gonna use the ExecuteSQL to do a "select insert" query.. The query will perform the joins and load the data into a table. Then the QueryDatabaseTable will read from the new table.. That way I'll be able to use the "Max Rows Per Floe File" property.