How to use queues or node labels dynamically based on the application / jobs running?

- Labels: Apache YARN

Created 05-06-2016 07:17 PM


I am working with a customer who uses node labels and YARN queues extensively, allocating resources and granting users access to the queues for the applications they work on.

For example:

Let us say we have 2 users (User1 and User2). User1 works on applications related to Hive and User2 works on applications related to Spark.

The customer assigned 10 nodes labelled HiveNodeLabel and another 10 nodes labelled SparkNodeLabel.

The customer then assigned the Hive and Spark node labels to the corresponding HiveQueue and SparkQueue, so that each queue can use the nodes optimized for it.

User1 is granted access to run Hive applications through HiveQueue, which uses resources from the nodes labelled HiveNodeLabel. Similarly, User2 runs through SparkQueue, which uses SparkNodeLabel.

The question: suppose User1 needs to run both Hive and Spark applications and has been assigned to both queues. How will the cluster know which application the user is currently running (Hive or Spark), and how will it decide whether to run it on the Spark queue and node label or on the Hive queue and node label? Is there anything like a project or application type that we can use to determine which one to use?

Has anyone worked on such a scenario? It would be great if someone could shed some light and suggest a couple of options for handling this.

Created on 05-07-2016 04:56 PM - edited 08-19-2019 01:55 AM


Hi @Veera B. Budhi,

- Job by job approach: One solution is to specify the queue when you submit your Spark job or when you connect to Hive.

When submitting your Spark job, you can specify the queue with --queue, as in this example:

$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster --driver-memory 4g --executor-memory 2g --executor-cores 1 --queue SparkQueue lib/spark-examples*.jar 10

To specify the queue when connecting to HiveServer2 (HS2):

beeline -u "jdbc:hive2://sandbox.hortonworks.com:10000/default?tez.queue.name=HiveQueue" -n it1 -p it1 -d org.apache.hive.jdbc.HiveDriver

Or you can set the queue after you are connected, using set tez.queue.name=HiveQueue;

beeline -u "jdbc:hive2://sandbox.hortonworks.com:10000/default" -n it1 -p it1 -d org.apache.hive.jdbc.HiveDriver

> set tez.queue.name=HiveQueue;
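If the same user submits both kinds of jobs, one way to keep the queue choice consistent is a small wrapper script. The following is only a sketch: the function names (queue_for_app, spark_submit_cmd, hive_jdbc_url) are hypothetical helpers I made up for illustration, and the queue names are the ones from this thread. It only builds the command line and the JDBC URL; it does not submit anything.

```shell
#!/bin/sh
# Hypothetical helper: map an application type to the queue names used in this thread.
queue_for_app() {
  case "$1" in
    spark) echo "SparkQueue" ;;
    hive)  echo "HiveQueue" ;;
    *)     echo "unknown application type: $1" >&2; return 1 ;;
  esac
}

# Build (but do not run) a spark-submit command line for the given jar.
spark_submit_cmd() {
  echo "spark-submit --master yarn --deploy-mode cluster --queue $(queue_for_app spark) $1"
}

# Build a HiveServer2 JDBC URL that sets the Tez queue for the session.
# $1 is host:port, for example sandbox.hortonworks.com:10000
hive_jdbc_url() {
  echo "jdbc:hive2://$1/default?tez.queue.name=$(queue_for_app hive)"
}
```

For example, beeline -u "$(hive_jdbc_url sandbox.hortonworks.com:10000)" -n it1 -p it1 would connect with the Hive queue already selected.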

- Change default queue: The second approach is to specify a default queue for Hive or Spark to use.

To do this for Spark, set spark.yarn.queue to SparkQueue instead of default in Ambari.

To do this for Hive, add tez.queue.name to the custom hiveserver2-site configuration in Ambari.
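After changing the Spark default, you may want to confirm which queue a client will pick up. This is a rough sketch, assuming the setting ends up in a spark-defaults.conf style file with one "key value" or "key=value" pair per line and no leading whitespace; the function name spark_default_queue is hypothetical, and the file location depends on your installation.

```shell
#!/bin/sh
# Print the queue configured by spark.yarn.queue in the given properties file.
# Falls back to "default" when the property is not set in that file.
# Assumes keys start at the beginning of the line and are separated from the
# value by spaces, tabs, or "=".
spark_default_queue() {
  awk -F'[ \t=]+' '
    $1 == "spark.yarn.queue" { q = $2 }
    END { print (q == "" ? "default" : q) }
  ' "$1"
}
```

Call it with the path to the Spark client configuration on your node, for example spark_default_queue /path/to/spark-defaults.conf.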

Hope this helps

Created on 05-07-2016 04:56 PM - edited 08-19-2019 01:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Veera B. Budhi,

- Job by job approach: One solution to your problem is to specify the queue to use when submitting your Spark job or when you connect to hive.

When submitting your Spark job you can specify the queue by --queue like in this example

$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster --driver-memory 4g --executor-memory 2g --executor-cores 1 --queue SparkQueue lib/spark-examples*.jar 10

To specify the queue at connection time to HS2:

beeline -u "jdbc:hive2://sandbox.hortonworks.com:10000/default?tez.queue.name=HiveQueue" -n it1 -p it1-d org.apache.hive.jdbc.HiveDriver

Or you can set the queue after you are connected using set tez.queue.name=HiveQueue;

beeline -u "jdbc:hive2://sandbox.hortonworks.com:10000/default" -n it1 -p it1-d org.apache.hive.jdbc.HiveDriver >set tez.queue.name=HiveQueue;

- Change default queue: The second approach would be to specify a default queue for Hive or Spark to use.

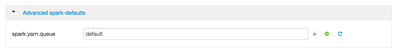

To do it for Spark set spark.yarn.queue to SparkQueue instead of default in Ambari

To do this for Hive, you can add tez.queue.name to custom hiverserver2-site configuration in Ambari

Hope this helps

Created 05-09-2016 05:53 PM


Awesome. Thanks Abdelkrim for the great info, this helps.