Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: I used GetFTP processor to get a CSV file from...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

I used GetFTP processor to get a CSV file from filezilla and transferred it to a PUTFILE. it worked but when I open the directory where I wanted the file to save in laptop. It's not there.

- Labels:

-

Apache NiFi

Created on 09-21-2017 12:33 AM - edited 09-16-2022 05:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

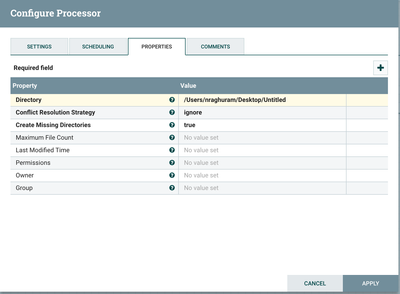

As you can see the 3 mb got transferred in screenshot - 1. And in the screenshot -2 I am specifying my Path where I want it stored. I basically want the CSV file written in the untitled text file. Am I doing it right.? I very new to NIFI. Please Help..:

Created on 09-21-2017 10:03 PM - edited 08-18-2019 12:22 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shailesh Nookala

Sure, if you are thinking to insert the data to Sql Server we

can do that by using NiFi, You don’t have to download the file at all.

You can do that couple of ways by using ExtractText Processor and extract all the contents of csv file into attributes and prepare insert statement by using ReplaceText Processor and push the data to Sql server.

Another method is preparing json document for input CSV file

and extracting all the attributes of flow file and prepare record to insert

data to SQL server.

I’m using Json method which is easy to extract attributes of flowfiles.

Example:-

Let’s consider you

are having the csv file having just 2 records in it.

1,test,1000

2,test1,2000

As I’m using NiFi 1.1 there is no direct processor which can convert the incoming csv file to json.

we need to follow

InferAvroSchema--> ConvertCSVToAvro --> ConvertAvroToJSON

the output would be in Json format with the same contents of our input

CSV file.

But in new versions of NiFi(I think from 1.3) there are processors to convert

CSV to Json directly using ConvertRecord Processor.

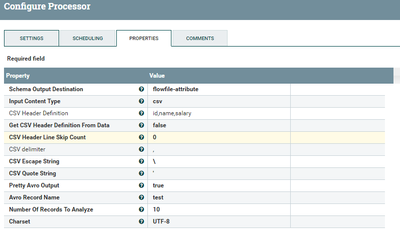

InferAvroSchema:-

In this processor I’m giving the incoming csv file

definition (or) you can read header definition from incoming flowfile by

changing Get CSV Header Definition From Data property to true NiFi can

read definition from 1 line of file.

as I know my incoming file having id,name,salary I have given this definition

and keep Schema Output Destination to flowfile-attribute we can make use

of this schema in ConvertCSVToAvro

processor.

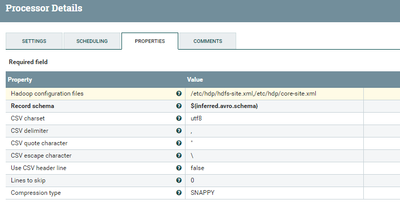

ConvertCSVToAvro:-

change the Record Schema property to

${inferred.avro.schema}Which can infer the schema from flowfile attribute.

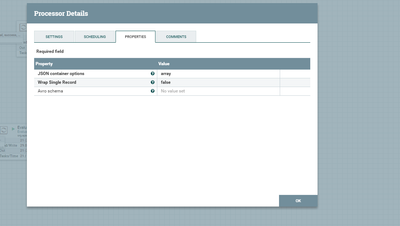

ConvertAvroToJSON:-

drag and drop ConvertAvroToJSON processor and leave the properties

to default.

Output:-

[{"id": 1, "name":

"test", "salary": 1000},{"id": 2,

"name": "test1", "salary": 2000}]

SplitJson:-

If you are having more than 1 record in your csv file use

splitjson processor because if ConvertAvroToJSON

processors find more than one record in the flowfile it will make an array and

keep all the records inside the array.

As we need only 1 record in the flowfile before inserting data to Sqlserver.

If your flowfile having array then json path expression property should be

$.*

as it will dynamically splits the array of records to one record for one flowfile.

Input:-

[{"id": 1, "name": "test","salary": 1000},{"id": 2, "name":"test1", "salary": 2000}]Output:-

flowfile1:-

{"id": 1, "name": "test", "salary":

1000}flowfile2:-

{"id": 2, "name": "test1", "salary":

2000}As Splijson splits the array into individual records.

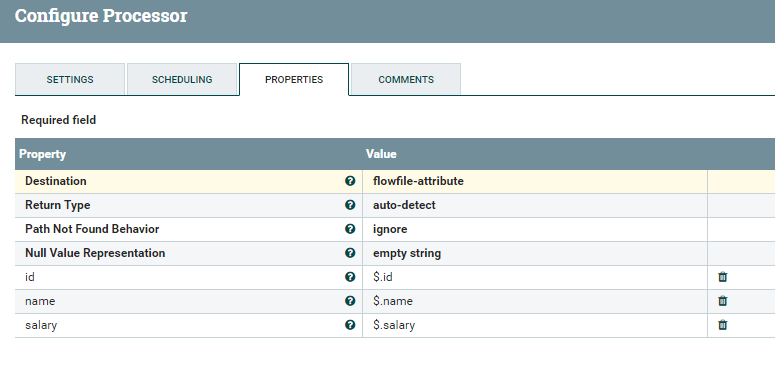

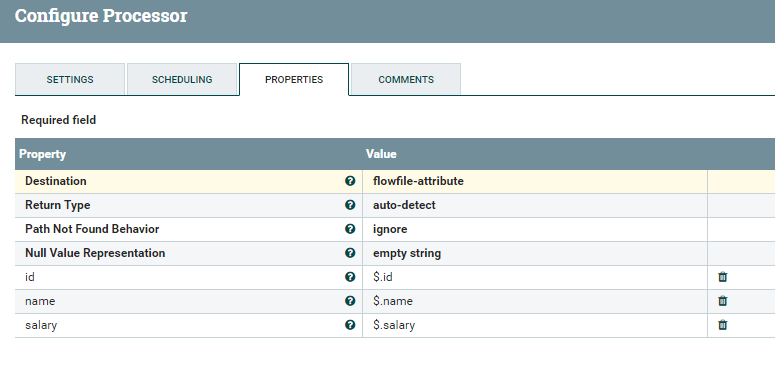

EvaluateJsonPath configs:-

in this processor extracting all the contents of flowfile to attributes

by changing the list of properties

Destination as flowfile-attribute

extracting contents of json file by adding new properties by clicking + symbol on right corner.

id as $.id name as $.name salary as $.salary

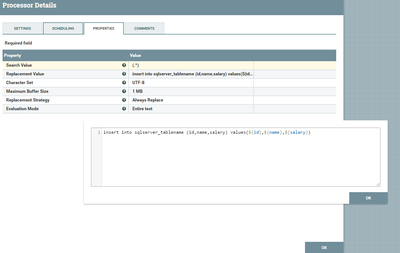

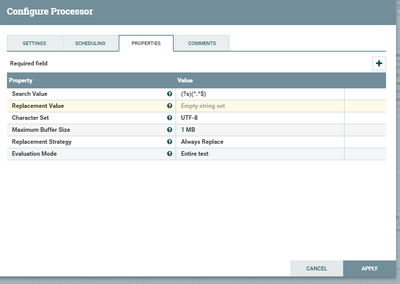

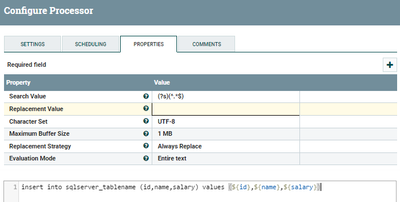

ReplaceText Configs:-

We are preparing insert statement in this processor and change

Replacement Strategy property as AlwaysReplace

Use insert statement and give destination table name and use the extracted attributes to replace the contents of values dynamically.

insert into sqlserver_tablename (id,name,salary) values (${id},${name},${salary})output:-

flowfile1 content:- insert into sqlserver_tablename (id,name,salary) values(1,test,1000) flowfile2 content:- insert into sqlserver_tablename (id,name,salary) values(2,test1,2000)

use putsql processor and make a connection pool to sql server enable and use the connection pool.

so Putsql processor will executes all the insert statements that we are having contents of the flowfile and all the data will get inserted to sql server.

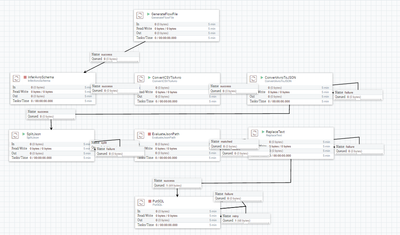

FlowScreenshot:-

Created on 09-21-2017 04:52 AM - edited 08-18-2019 12:22 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shailesh Nookala, PutFile processor Writes the contents of a FlowFile to the local file system.

1.You can find the file in NiFi running nodes only as the property create missing directories is true, NiFi will creates those directories for you and store the csv file into your directory(i.e /Users/nraghuram/...).

Example:-

1.Lets take you are having 2 NiFi nodes(E01,E02) and you are running GetFile processor on only primary node(consider E02 is primary), so when you run on primary node then the File got stored in the specified directory of your processor on primary node(in our case on E02 because it is primary node).

2.In this example im having E02 is primary node and running PutFile processor to store File in local directory.

3.As Highlighted below the File is on E02 node

4.When i store the file it is inside /d1 directory on E02 node only because i'm running GetFile only on Primary Node.

Created 09-21-2017 08:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Yash. Thank you so much for taking your time to reply to my Question.

I am sorry I do not understand your answer. I do not know what nodes are.

What I was trying to do is download a CSV file from filezilla and insert it into a table in sql server. I used FetchFTP to get a CSV file from Filezilla. Before inserting I though I would try writing the file into my local machine. That's where i got struck.

Can you teach me how to insert it into a table in sql server.

If you know any good tutorials. Please suggest me.

Thanks

Created on 09-21-2017 10:03 PM - edited 08-18-2019 12:22 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shailesh Nookala

Sure, if you are thinking to insert the data to Sql Server we

can do that by using NiFi, You don’t have to download the file at all.

You can do that couple of ways by using ExtractText Processor and extract all the contents of csv file into attributes and prepare insert statement by using ReplaceText Processor and push the data to Sql server.

Another method is preparing json document for input CSV file

and extracting all the attributes of flow file and prepare record to insert

data to SQL server.

I’m using Json method which is easy to extract attributes of flowfiles.

Example:-

Let’s consider you

are having the csv file having just 2 records in it.

1,test,1000

2,test1,2000

As I’m using NiFi 1.1 there is no direct processor which can convert the incoming csv file to json.

we need to follow

InferAvroSchema--> ConvertCSVToAvro --> ConvertAvroToJSON

the output would be in Json format with the same contents of our input

CSV file.

But in new versions of NiFi(I think from 1.3) there are processors to convert

CSV to Json directly using ConvertRecord Processor.

InferAvroSchema:-

In this processor I’m giving the incoming csv file

definition (or) you can read header definition from incoming flowfile by

changing Get CSV Header Definition From Data property to true NiFi can

read definition from 1 line of file.

as I know my incoming file having id,name,salary I have given this definition

and keep Schema Output Destination to flowfile-attribute we can make use

of this schema in ConvertCSVToAvro

processor.

ConvertCSVToAvro:-

change the Record Schema property to

${inferred.avro.schema}Which can infer the schema from flowfile attribute.

ConvertAvroToJSON:-

drag and drop ConvertAvroToJSON processor and leave the properties

to default.

Output:-

[{"id": 1, "name":

"test", "salary": 1000},{"id": 2,

"name": "test1", "salary": 2000}]

SplitJson:-

If you are having more than 1 record in your csv file use

splitjson processor because if ConvertAvroToJSON

processors find more than one record in the flowfile it will make an array and

keep all the records inside the array.

As we need only 1 record in the flowfile before inserting data to Sqlserver.

If your flowfile having array then json path expression property should be

$.*

as it will dynamically splits the array of records to one record for one flowfile.

Input:-

[{"id": 1, "name": "test","salary": 1000},{"id": 2, "name":"test1", "salary": 2000}]Output:-

flowfile1:-

{"id": 1, "name": "test", "salary":

1000}flowfile2:-

{"id": 2, "name": "test1", "salary":

2000}As Splijson splits the array into individual records.

EvaluateJsonPath configs:-

in this processor extracting all the contents of flowfile to attributes

by changing the list of properties

Destination as flowfile-attribute

extracting contents of json file by adding new properties by clicking + symbol on right corner.

id as $.id name as $.name salary as $.salary

ReplaceText Configs:-

We are preparing insert statement in this processor and change

Replacement Strategy property as AlwaysReplace

Use insert statement and give destination table name and use the extracted attributes to replace the contents of values dynamically.

insert into sqlserver_tablename (id,name,salary) values (${id},${name},${salary})output:-

flowfile1 content:- insert into sqlserver_tablename (id,name,salary) values(1,test,1000) flowfile2 content:- insert into sqlserver_tablename (id,name,salary) values(2,test1,2000)

use putsql processor and make a connection pool to sql server enable and use the connection pool.

so Putsql processor will executes all the insert statements that we are having contents of the flowfile and all the data will get inserted to sql server.

FlowScreenshot:-

Created 09-22-2017 12:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks you so much man @Yash. I'll try this out.:)

Created on 09-23-2017 10:05 PM - edited 08-18-2019 12:22 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

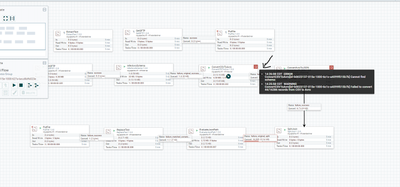

Hi man. I was following your answer step by step. I am getting error. Can you please help me fix it.

I am getting error "Convert CSV to AVRO" and "Convert "AVRO to JSON "processors.(Ignore the 3 processors in the 1st row)

Screenshot - 1 shows the errors.

At Convert CSV to AVRO it says cannot find Schema.

My Configuration of "CSV to AVRO" processor.

At "AVRO to JSON "processor.

"AVRO to JSON " configuration

I was trying to get a text file which has CSV's from filezilla. That part worked. and then the second step infer Avro schema also worked.

Is it because my CSV's are in text file, these errors occur.?

The only difference between your input and mine is. You hard coded the headers($is,$ name..) and I set take header from the first line of the file. Is it because of that.?

Please have a look man. Thanks

Created 09-24-2017 02:36 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

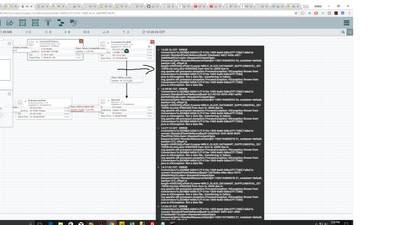

i don't think header is causing these issues as i tried the same way as you and its working as expected.

But as i have observed in your flow you have connected all the available relations on each processor to the next processor, that's causing issues for you and i have seen PutFile processor instead of PutSQL in your flow.

Are you storing the prepared records to file? i thought you are pushing the prepared records to SQL server, is my assumption is wrong?. Connect only the following Relationships to the Next Processors:-

GetFile(Sucputsql.xmlcess)-->InferAvroSchema(Success)-->ConvertCSVToAvro(Success)-->ConvertAvroToJSON(Success)-->SplitJson(Split)-->EvaluateJsonPath(Matched)-->ReplaceText(Success)-->PutSQL(Autoterminate Success).

I have attached my .xml file use this make changes to that as per you needs.

putsql.xml

input :-

name,adresse,zip 1,hi,8000InferAvroSchema configs:-

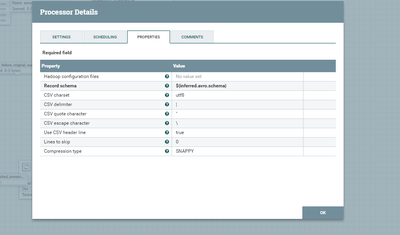

Convertcsvtoavro configs:-

csvtoavro.png

Output:-

{

"name" : 1,

"adresse" : "hi",

"zip" : 8000

}

Created on 10-04-2017 02:25 PM - edited 08-18-2019 12:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've been hard-coding the replacement value property in REPLACE TEXT processor ( eg - "insert into table_name values (..)" ).

Is there a way to do it dynamically.? Can I use reg-ex.? I tried a couple if things. It did not work. Can you please tell me what Search Value is.?

Created on 10-04-2017 10:52 PM - edited 08-18-2019 12:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shailesh Nookala, Keep Search Value config as is (?s)(^.*$)

ReplaceText Configs:-

We are preparing insert statement in this processor and change

Change ReplacementStrategy property to

AlwaysReplace

Use insert statement and give destination table name and use the extracted attributes to replace the contents of values dynamically.

change Replacement Value property to

insert into sqlserver_tablename (id,name,salary) values (${id},${name},${salary})above statement will work dynamically with the attributes of the ff if we are having

id attribute value as 1 and

name as abc

salary as 1000

then this insert statement will be

insert into sqlserver_tablename (id,name,salary) values (1,abc,1000)

the same way this replace text processor prepares insert statements according to the attributes associated with the ff.

ReplaceText Configs:-

let me know if you still facing issues and please share more screenshots with configs and flow...!!!

Created 09-24-2017 04:16 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi.. Your understanding is right..I am trying to insert it into the table only. I just wanted to see what the output is at the end of the flow..That's why I added PUTFILE. I'll change it. Again thank you so much for replying. I'll make the changes and try again.