ImportError: No module named numpy

Labels: Apache Spark, Apache YARN, Cloudera Hue

Created on 05-13-2019 02:51 AM - edited 09-16-2022 07:23 AM

Before posting this issue, we read through all the solutions to the same problem that we could find.

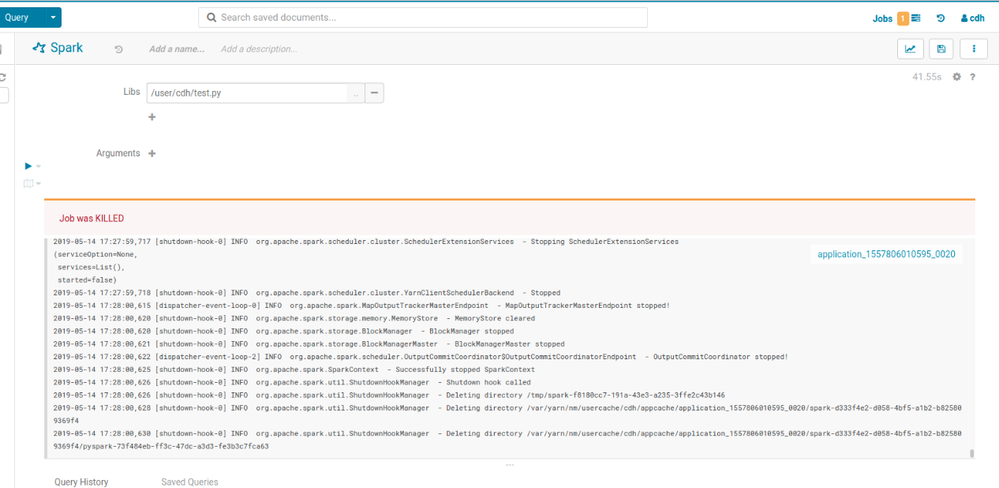

Our cluster is installed with CDH 6.2. After installation we use Hue to work with the cluster, and jobs are submitted via Hue.

When Spark code needs to import numpy, we get the error below:

Traceback (most recent call last):

File "/var/yarn/nm/usercache/admin/appcache/application_1557739482535_0001/container_1557739482535_0001_01_000001/test.py", line 79, in <module>

from pyspark.ml.linalg import Vectors

File "/var/yarn/nm/usercache/admin/appcache/application_1557739482535_0001/container_1557739482535_0001_01_000001/python/lib/pyspark.zip/pyspark/ml/__init__.py", line 22, in <module>

File "/var/yarn/nm/usercache/admin/appcache/application_1557739482535_0001/container_1557739482535_0001_01_000001/python/lib/pyspark.zip/pyspark/ml/base.py", line 24, in <module>

File "/var/yarn/nm/usercache/admin/appcache/application_1557739482535_0001/container_1557739482535_0001_01_000001/python/lib/pyspark.zip/pyspark/ml/param/__init__.py", line 26, in <module>

ImportError: No module named numpy

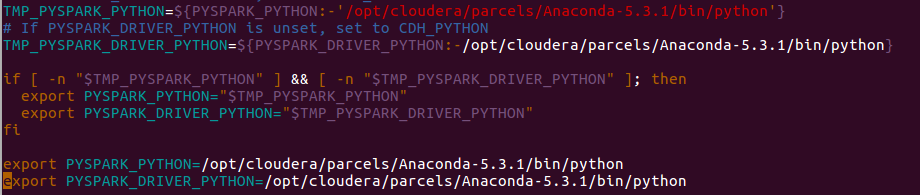

We followed the official guide to install the Anaconda parcel, and set up the Spark Service Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-env.sh:

export PYSPARK_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

export PYSPARK_DRIVER_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

We set up the Spark Client Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-defaults.conf:

spark.yarn.appMasterEnv.PYSPARK_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

spark.yarn.appMasterEnv.PYSPARK_DRIVER_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

We also set up the YARN (MR2 Included) Service Environment Advanced Configuration Snippet (Safety Valve):

PYSPARK_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

PYSPARK_DRIVER_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python

But none of these helped to solve the import issue.

Thanks for any help.

Created 05-14-2019 02:42 AM

Please check whether numpy is actually installed on all of the NodeManager hosts. If not, install it using the command below (for Python 2.x):

pip install numpy

If it is already installed, let us know the following:

1) Can you execute the same job outside of Hue, i.e. using spark2-submit? Please post the full command here.

2) What Spark command do you use in Hue?
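To make the check above concrete, here is a minimal sketch of a probe that reports which interpreter is actually running and whether numpy can be imported from it. The function name `probe_python_env` is hypothetical, not part of Spark or CDH. It only reports on the process it runs in, so it would need to be run on each host (or inside the job itself) to be useful.

```python
import socket
import sys


def probe_python_env(module_name="numpy"):
    """Report the running interpreter and whether `module_name` is importable."""
    try:
        module = __import__(module_name)
        found = True
        location = getattr(module, "__file__", None)
    except ImportError:
        found = False
        location = None
    return {
        "host": socket.gethostname(),
        "python": sys.executable,           # path of the interpreter in use
        "version": "%d.%d.%d" % sys.version_info[:3],
        "module": module_name,
        "found": found,                     # True if the import succeeded
        "location": location,               # where the module was loaded from
    }


if __name__ == "__main__":
    # Print the result for the interpreter that runs this script.
    print(probe_python_env())
```

If the `python` path printed from inside the job is /usr/bin/python rather than the Anaconda parcel path, the PYSPARK_PYTHON setting is probably not reaching that process. Assuming a working SparkContext `sc`, something like `sc.parallelize(range(8), 8).map(lambda _: probe_python_env()).collect()` may give a rough view of the executors, although it is not guaranteed to touch every node.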

Created 05-14-2019 03:18 AM

Using the commands below, the job executes successfully:

export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

export HADOOP_CONF_DIR=/etc/alternatives/hadoop-conf

PYSPARK_PYTHON=/opt/cloudera/parcels/Anaconda/bin/python spark-submit --master yarn --deploy-mode cluster test.py

In Hue, we open a Spark snippet, select the .py file, then run it. The same code can also be executed in Hue's notebook in YARN mode.
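The command above passes the interpreter through the shell environment, which Hue does not necessarily inherit. As a sketch only, the same interpreter path could instead be passed as Spark properties on the command line. `spark.yarn.appMasterEnv.PYSPARK_PYTHON` is the property already quoted in this thread; `spark.executorEnv.PYSPARK_PYTHON` is an addition here, based on Spark's standard `spark.executorEnv.[EnvironmentVariableName]` property. The helper `build_spark_submit` is hypothetical, and whether this resolves the Hue case is not confirmed in this thread.

```python
def build_spark_submit(script, python_path, master="yarn", deploy_mode="cluster"):
    """Return a spark-submit argv list that pins the Python interpreter via --conf."""
    conf = {
        # Environment of the YARN application master (the driver in cluster mode).
        "spark.yarn.appMasterEnv.PYSPARK_PYTHON": python_path,
        # Environment of the executors (assumption: not set in the original post).
        "spark.executorEnv.PYSPARK_PYTHON": python_path,
    }
    argv = ["spark-submit", "--master", master, "--deploy-mode", deploy_mode]
    for key in sorted(conf):
        argv += ["--conf", "%s=%s" % (key, conf[key])]
    argv.append(script)
    return argv


if __name__ == "__main__":
    cmd = build_spark_submit("test.py", "/opt/cloudera/parcels/Anaconda/bin/python")
    # Print the command instead of running it, so it can be reviewed first.
    print(" ".join(cmd))
```

The function only builds the argument list; it does not launch anything. The two `--conf` values are the kind of options one would also try in the Spark options field of a Hue or Oozie Spark action.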

Created 05-14-2019 03:31 AM

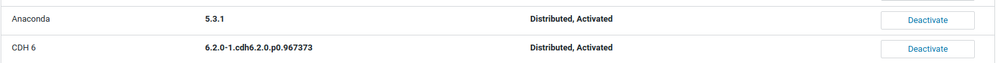

We installed Anaconda via CDH, and the parcel is already activated.

In the file below:

/run/cloudera-scm-agent/process/895-spark_on_yarn-SPARK_YARN_HISTORY_SERVER/spark-conf/spark-env.sh

we can see:

Created on 07-31-2020 05:54 AM - edited 07-31-2020 07:03 AM

In a CDH 6.3.2 cluster I have an Anaconda parcel distributed and activated, which of course has the numpy module installed. However, the Spark nodes seem to ignore the CDH configuration and keep using the system-wide Python from /usr/bin/python.

I have nevertheless installed numpy in the system-wide Python across all cluster nodes, but I still get "ImportError: No module named numpy". I would appreciate any further advice on how to solve the problem.

I am not sure how to implement the solution referred to in https://stackoverflow.com/questions/46857090/adding-pyspark-python-path-in-oozie.

Created 05-14-2019 08:28 PM

Found the solution here: https://stackoverflow.com/questions/46857090/adding-pyspark-python-path-in-oozie.

Created 07-31-2020 05:48 AM

@kernel8liang Could you please explain how to implement the solution?