Increasing the HDFS Disk size in HDP 2.3 3 node cluster

- Labels: Apache Hadoop

Created on 02-25-2016 11:45 AM - edited 08-18-2019 05:06 AM

In my 3-node cluster installation for a POC, the 3rd node is the DataNode, and it has about 200 GB of disk space.

As per the widget, my current HDFS Usage is as follows:

DFS Used: 512.8 MB (1.02%); Non DFS Used: 8.1 GB (16.52%); Remaining: 40.4 GB (82.46%)
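As a rough sanity check on these figures, the three values can be summed to estimate the capacity HDFS currently sees on this node. This is only a sketch: it assumes the widget uses 1024 MB per GB, and the function name `summarize` is hypothetical. The percentages it prints can differ from the widget in the last digit because the inputs are already rounded.

```python
MB_PER_GB = 1024  # assumption: the widget uses binary units


def summarize(dfs_used_gb, non_dfs_used_gb, remaining_gb):
    """Return the total capacity and each share as a percentage."""
    total = dfs_used_gb + non_dfs_used_gb + remaining_gb
    return {
        "total_gb": total,
        "dfs_used_pct": 100 * dfs_used_gb / total,
        "non_dfs_used_pct": 100 * non_dfs_used_gb / total,
        "remaining_pct": 100 * remaining_gb / total,
    }


# Values taken from the widget in the question.
summary = summarize(512.8 / MB_PER_GB, 8.1, 40.4)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

The total comes out at roughly 49 GB, far below the 200 GB disk, which suggests that the DataNode directory sits on a filesystem much smaller than the whole disk.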

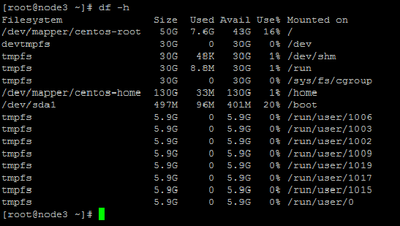

When I run df -h to check the disk size, I can see that a lot of space is taken by tmpfs, as shown in the following screenshot:

How can I increase my HDFS disk size?

Created 02-25-2016 11:49 AM

tmpfs is out of the picture here.

You need to increase the space on /home. Is it a VM? If yes, you can ask your system admin to allocate more space to /home.

If it's bare metal, attach another disk and make it part of your cluster.
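To see which filesystem actually backs the DataNode directory, and how much room it has, something like the following can be run on the node. It is a minimal sketch: the path `/hadoop/hdfs/data` is only an assumed example of a DataNode directory, so substitute the value configured in your cluster, and the helper names `mount_point` and `describe` are hypothetical.

```python
import os
import shutil


def mount_point(path):
    """Walk up from path until a mount point is reached."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):
        path = os.path.dirname(path)
    return path


def describe(path):
    """Report the mount point behind path and its total and free space."""
    mp = mount_point(path)
    usage = shutil.disk_usage(mp)
    gib = 1024 ** 3
    return {
        "path": path,
        "mount_point": mp,
        "total_gb": usage.total / gib,
        "free_gb": usage.free / gib,
    }


if __name__ == "__main__":
    # Assumed DataNode directory; replace with the one from your configuration.
    print(describe("/hadoop/hdfs/data"))
```

If the reported mount point is / rather than /home, the free space on /home is not available to HDFS, whatever size that partition has.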

Created 02-25-2016 11:53 AM

Created 02-25-2016 11:55 AM

Yes, the cluster is on vSphere VMs.

Created 02-25-2016 12:06 PM

@Kunal Gaikwad That's easy then. See the link that I shared above.

Created 02-25-2016 12:22 PM

My /home partition is already 130 GB, but I am not able to use it for HDFS, as the widget figures mentioned above show. My concern is that the change should not break the HDP installation I have already done on it.

Created 02-25-2016 12:42 PM

@Kunal Gaikwad I believe you are using / for Hadoop. Is that correct?

Generally, extending / is more complicated than extending a non-root partition.

The install will be fine as long as there is no human error.

If possible, you can add a 4th node to the cluster and decommission the 3rd one.

Created 02-25-2016 12:48 PM

Yes, I am using / for Hadoop. These partitions were set up automatically by Ambari, so I want to increase the HDFS size. For the POC I wanted to import a 70 GB table, but with the current HDFS size I can import only a little over 30 GB before the job hangs, with alerts all over Ambari about disk usage.

Created 02-25-2016 12:55 PM

@Kunal Gaikwad That's why you have to be careful about specifying the mounts during the install. If possible, add new nodes and make sure to add the new disk mounts in the configs.

Or extend the existing LUNs.
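For the "add new disk mounts in the configs" part: the HDFS property `dfs.datanode.data.dir` takes a comma-separated list of directories, so a new mount is added by appending its directory to that list. The sketch below only illustrates how the value is built; both paths are hypothetical, the helper `add_data_dir` is not part of any Hadoop or Ambari API, and the actual change would still be made through the HDFS configuration followed by a DataNode restart.

```python
def add_data_dir(current_value, new_dir):
    """Append new_dir to a comma-separated directory list, skipping duplicates."""
    dirs = [d.strip() for d in current_value.split(",") if d.strip()]
    if new_dir not in dirs:
        dirs.append(new_dir)
    return ",".join(dirs)


# Hypothetical example: existing directory on /, new directory on an added disk.
existing = "/hadoop/hdfs/data"
updated = add_data_dir(existing, "/grid/1/hadoop/hdfs/data")
print(updated)
```

Calling the helper again with the same directory leaves the value unchanged, which keeps the list free of duplicate entries.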

Created 02-26-2016 09:45 AM

I am trying to upgrade the storage, and I have taken a snapshot of the cluster. Just to be sure: I need to increase the size of /dev/mapper/centos-root, right?