Hello all,

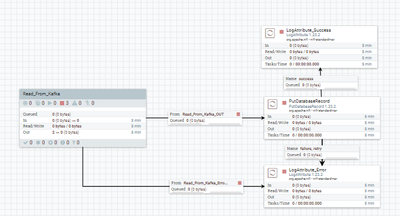

I am creating a processor group that reads from a Kafka topic and writes the messages to a ClickHouse database. I am using the stateless mechanism to ensure that when there is a problem during execution (NiFi crashes, NiFi is restarted, or the ClickHouse database returns an error), the Kafka offset is not committed and the processing is retried.

Unfortunately, ClickHouse creates a new row for a duplicated message. To avoid duplicates, I would like to check the database first and see whether the message already exists before processing it. Has anyone built a similar use case?
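
To make the check concrete, here is a minimal sketch of the idea in Python. It assumes each message can be identified by its Kafka topic, partition, and offset. `MessageKey`, `SeenStore`, and `filter_new` are hypothetical names for illustration only; `SeenStore` is an in-memory stand-in for a lookup against ClickHouse, not a real NiFi or ClickHouse API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MessageKey:
    """Assumed identity of a Kafka message: topic, partition, offset."""
    topic: str
    partition: int
    offset: int


@dataclass
class SeenStore:
    """Hypothetical stand-in for the target table (in-memory, not ClickHouse)."""
    rows: dict = field(default_factory=dict)

    def contains(self, key):
        return key in self.rows

    def insert(self, key, payload):
        self.rows[key] = payload


def filter_new(messages, store):
    """Insert only messages whose key is not stored yet; return what was inserted."""
    inserted = []
    for key, payload in messages:
        if store.contains(key):
            continue  # already written by an earlier (retried) run
        store.insert(key, payload)
        inserted.append((key, payload))
    return inserted
```

With this, replaying the same batch after a retry would insert nothing the second time. In a real flow the lookup and the insert would both go to the database, so the check would not be atomic unless the database enforces it.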