Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: InvokeHTTP randomly hangs

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

InvokeHTTP randomly hangs

- Labels:

-

Apache NiFi

Created 05-19-2020 03:37 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

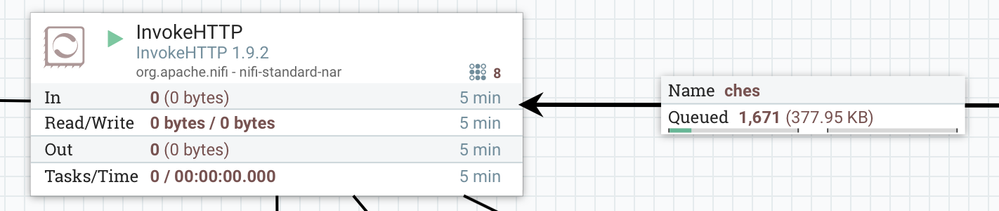

I have a NiFi flow [on a 2 processor machine using 8/5 thread counts] where the InvokeHTTP block is no longer processing files from the queue [shown below]:

As you can see there are 8 processes running but these seem to be stuck for hours and the only way out of it is manually stopping and terminating the block. This results in the following log message:

2020-05-18 08:31:06,228 ERROR [Timer-Driven Process Thread-12 <Terminated Task>] o.a.nifi.processors.standard.InvokeHTTP InvokeHTTP[id=f2f13d29-016b-1000-293d-c0cb04835881] Routing to Failure due to exception: java.net.SocketTimeoutException: timeout: java.net.SocketTimeoutException: timeout

java.net.SocketTimeoutException: timeout

at okhttp3.internal.http2.Http2Stream$StreamTimeout.newTimeoutException(Http2Stream.java:593)

at okhttp3.internal.http2.Http2Stream$StreamTimeout.exitAndThrowIfTimedOut(Http2Stream.java:601)

at okhttp3.internal.http2.Http2Stream.takeResponseHeaders(Http2Stream.java:146)

at okhttp3.internal.http2.Http2Codec.readResponseHeaders(Http2Codec.java:120)

at okhttp3.internal.http.CallServerInterceptor.intercept(CallServerInterceptor.java:75)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)

at okhttp3.internal.connection.ConnectInterceptor.intercept(ConnectInterceptor.java:45)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)

at okhttp3.internal.cache.CacheInterceptor.intercept(CacheInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)

at okhttp3.internal.http.BridgeInterceptor.intercept(BridgeInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)

at okhttp3.internal.http.RetryAndFollowUpInterceptor.intercept(RetryAndFollowUpInterceptor.java:120)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)

at okhttp3.RealCall.getResponseWithInterceptorChain(RealCall.java:185)

at okhttp3.RealCall.execute(RealCall.java:69)

at org.apache.nifi.processors.standard.InvokeHTTP.onTrigger(InvokeHTTP.java:791)

at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27)

at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1162)

at org.apache.nifi.controller.tasks.ConnectableTask.invoke(ConnectableTask.java:209)

at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:117)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Before stopping the process, I had a look into the nifi-bootstrap.log file and noticed a number of similar messages. I'm not sure whether these are related since no direct reference to InvokeHTTP is made.

"NiFi Web Server-556239" Id=556239 TIMED_WAITING on java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject@2f7a83f7

at sun.misc.Unsafe.park(Native Method)

at java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:215)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2078)

at org.eclipse.jetty.util.BlockingArrayQueue.poll(BlockingArrayQueue.java:392)

at org.eclipse.jetty.util.thread.QueuedThreadPool.idleJobPoll(QueuedThreadPool.java:653)

at org.eclipse.jetty.util.thread.QueuedThreadPool.access$800(QueuedThreadPool.java:48)

at org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:717)

at java.lang.Thread.run(Thread.java:748)

"OkHttp ConnectionPool" Id=213155 TIMED_WAITING on okhttp3.ConnectionPool@72d6189

at java.lang.Object.wait(Native Method)

at java.lang.Object.wait(Object.java:460)

at okhttp3.ConnectionPool$1.run(ConnectionPool.java:67)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Number of Locked Synchronizers: 1

- java.util.concurrent.ThreadPoolExecutor$Worker@7b6a1485

"Okio Watchdog" Id=556238 TIMED_WAITING on java.lang.Class@2b4512c0

at java.lang.Object.wait(Native Method)

at java.lang.Object.wait(Object.java:460)

at okio.AsyncTimeout.awaitTimeout(AsyncTimeout.java:361)

at okio.AsyncTimeout$Watchdog.run(AsyncTimeout.java:312)

Any help/insights on this would be greatly appreciated.

Created 10-13-2020 01:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To update - the combination of NiFi 1.12.1 and OpenJDK 1.8u242 works fine - left it running overnight with no hanging. I expect NiFi 1.11.4 to also work with this version, and avoid the bleeding edge!

Created 05-19-2020 04:52 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@keithg Can you update the post with the scheduling settings for InvokeHttp? Also would nice to see some specific details about the nifi environment (min/max memory settings, nifi node spec, disk config, etc) and setting for min/max threads?

Without taking those things in consideration, I would assume you are overloading capability resulting in instability you are experiencing. If you slow down the scheduling and consume the incoming queue at a slower rate, does the timeout persist?

Created on 05-19-2020 08:40 AM - edited 05-19-2020 10:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

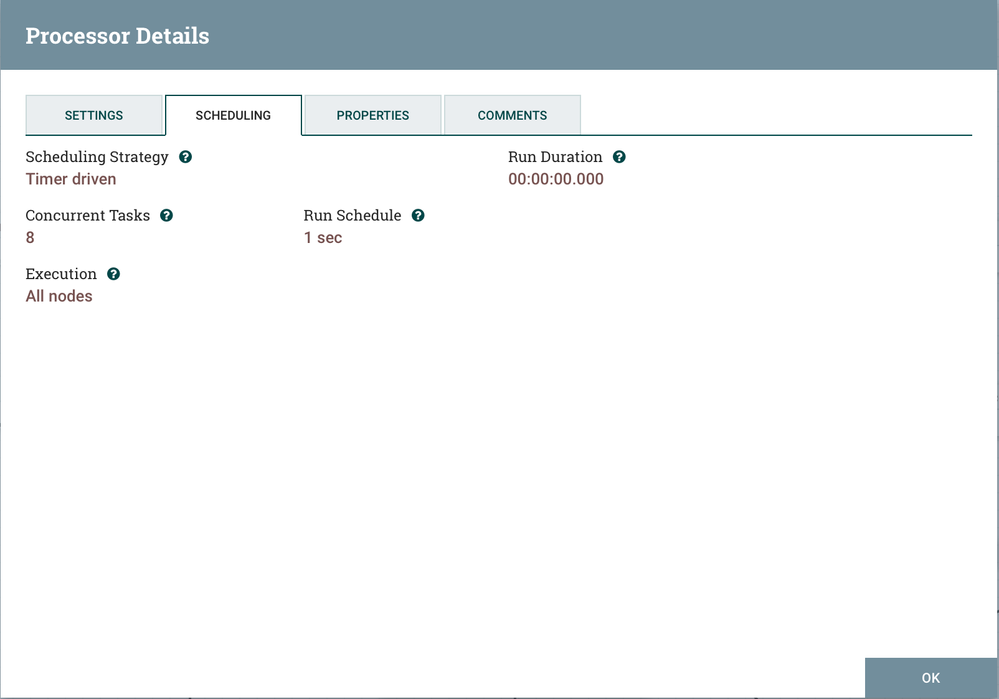

These are the scheduling settings:

NiFi 1.9.2

2 cores

4GB RAM [available to docker container running NiFi]

8 timer driver threads

5 event driven threads

Created 05-20-2020 07:02 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@keithg If you change the 1 sec to 15 sec or 30 sec does it become more stable?

With the schedule set to 1 sec, invokeHttp will execute 1600 connections as fast as possible. This opens up quite a bit of room for either exceeding the capabilities of your environment (not enough nodes, ram or cores) or the upstream service you are connecting too (actual network issues with the workload).

Since you only have 2 cores, you need to sensitive to tuning the flow, and most likely need to go slower (ie not 1 sec). You should also investigate documented NiFi Performance Tuning Steps, adding a lot more cores, adding more ram to each node including min ram: 2-6 gb max ram: 4-8-16 gb, and/or adjusting Garbage Collection.

Created on 06-03-2020 09:23 AM - edited 06-03-2020 09:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately the issue is still happening even after drastically reducing the scheduled execution time. What is strange is that replacing this block with an ExecuteStreamCommand block (which calls a Python function that does this same thing) does not seem to exhibit the same problem. Can you think of any reason as to why this is the case?

@stevenmatison

Created on 06-04-2020 05:40 AM - edited 06-04-2020 05:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you have the concurrency of the python set to 8 too?

With only 2 cores, and concurrency set to 8, you are still in realms of pushing the limits of stability with the number of active threads.

When I am building a flow, i never touch concurrency until I am ready to run very specific unit tests against each processor where I am adjusting scheduling, queue properties, and concurrency. This is done with nifi clusters with 10s,50s,100s of cores. With a dev system of 1,2, or 3 nodes and very small number of cores I do not do any tuning, just logical testing without time or speed expectations because it will break....

Created 06-06-2020 02:48 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It has the same amount of threads and it does not hang. I did notice however that there were a number of timeouts, is it possible that InvokeHTTP is not timing out as intended? (the InvokeHTTP block was left using the default timeouts).

There seems to be something more to this when a different process block with identical load and similar functionality does not exhibit this behaviour. Limiting the InvokeHTTP block to a single thread with a lower execution rate also exhibits the problem.

Created 06-19-2020 07:52 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We can reliably reproduce the exact same problem on InvokeHTTP 1.11.4 processor when performing a GET on an internal API. We run Nifi in a VM in GCloud.

We tried various number of threads, run schedule, etc.

Once in a while, it gets stuck forever and we need to terminate the thread or stop/start Nifi.

Nifi dumps shows the wait on some http2 streaming method.

2020-06-18 14:20:49,214 INFO [main] org.apache.nifi.bootstrap.RunNiFi "Timer-Driven Process Thread-5" Id=57 WAITING on okhttp3.internal.http2.Http

2Stream@740df261

It really feels like the timeout properties are not taken into account.

The solution described by @keithg is a reliable workaround. Using Python in an ExecuteScript processor is never hanging/freezing.

Created 10-07-2020 08:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've been able to reproduce this same issue in nifi versions 1.9.2, 1.11.4, and even 1.12.1.

In all failure scenarios that I've tested, I've been running nifi on Java version 1.8.0_265 (openjdk version "1.8.0_265").

Are you using the same Java build (or build 262)? If so, upgrading to Java 11 or downgrading to a Java build before 262 may fix this. I haven't been able to reproduce this issue on build 252.

I'm uncertain of the cause, but it may be related to this OpenJDK bug since build 262:

Created 10-07-2020 09:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

openjdk version "1.8.0_265", yes.

I'll try to upgrade Java, thanks!