InvokeHTTP randomly hangs

Labels: Apache NiFi

Created 05-19-2020 03:37 AM

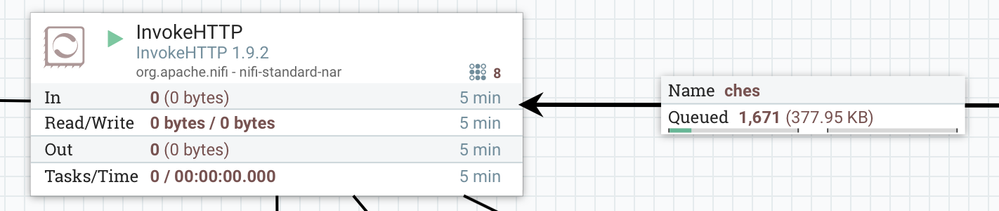

I have a NiFi flow (on a 2-processor machine using 8/5 thread counts) where the InvokeHTTP processor is no longer processing files from the queue [shown below]:

As you can see, there are 8 processes running, but they seem to have been stuck for hours, and the only way out is to manually stop and terminate the processor. Doing so produces the following log message:

2020-05-18 08:31:06,228 ERROR [Timer-Driven Process Thread-12 <Terminated Task>] o.a.nifi.processors.standard.InvokeHTTP InvokeHTTP[id=f2f13d29-016b-1000-293d-c0cb04835881] Routing to Failure due to exception: java.net.SocketTimeoutException: timeout: java.net.SocketTimeoutException: timeout
java.net.SocketTimeoutException: timeout
    at okhttp3.internal.http2.Http2Stream$StreamTimeout.newTimeoutException(Http2Stream.java:593)
    at okhttp3.internal.http2.Http2Stream$StreamTimeout.exitAndThrowIfTimedOut(Http2Stream.java:601)
    at okhttp3.internal.http2.Http2Stream.takeResponseHeaders(Http2Stream.java:146)
    at okhttp3.internal.http2.Http2Codec.readResponseHeaders(Http2Codec.java:120)
    at okhttp3.internal.http.CallServerInterceptor.intercept(CallServerInterceptor.java:75)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)
    at okhttp3.internal.connection.ConnectInterceptor.intercept(ConnectInterceptor.java:45)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)
    at okhttp3.internal.cache.CacheInterceptor.intercept(CacheInterceptor.java:93)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)
    at okhttp3.internal.http.BridgeInterceptor.intercept(BridgeInterceptor.java:93)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)
    at okhttp3.internal.http.RetryAndFollowUpInterceptor.intercept(RetryAndFollowUpInterceptor.java:120)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:92)
    at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:67)
    at okhttp3.RealCall.getResponseWithInterceptorChain(RealCall.java:185)
    at okhttp3.RealCall.execute(RealCall.java:69)
    at org.apache.nifi.processors.standard.InvokeHTTP.onTrigger(InvokeHTTP.java:791)
    at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27)
    at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1162)
    at org.apache.nifi.controller.tasks.ConnectableTask.invoke(ConnectableTask.java:209)
    at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:117)
    at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)

Before stopping the process, I had a look into the nifi-bootstrap.log file and noticed a number of similar messages. I'm not sure whether these are related since no direct reference to InvokeHTTP is made.

"NiFi Web Server-556239" Id=556239 TIMED_WAITING on java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject@2f7a83f7
    at sun.misc.Unsafe.park(Native Method)
    at java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:215)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2078)
    at org.eclipse.jetty.util.BlockingArrayQueue.poll(BlockingArrayQueue.java:392)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.idleJobPoll(QueuedThreadPool.java:653)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.access$800(QueuedThreadPool.java:48)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:717)
    at java.lang.Thread.run(Thread.java:748)

"OkHttp ConnectionPool" Id=213155 TIMED_WAITING on okhttp3.ConnectionPool@72d6189
    at java.lang.Object.wait(Native Method)
    at java.lang.Object.wait(Object.java:460)
    at okhttp3.ConnectionPool$1.run(ConnectionPool.java:67)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
    Number of Locked Synchronizers: 1
    - java.util.concurrent.ThreadPoolExecutor$Worker@7b6a1485

"Okio Watchdog" Id=556238 TIMED_WAITING on java.lang.Class@2b4512c0
    at java.lang.Object.wait(Native Method)
    at java.lang.Object.wait(Object.java:460)
    at okio.AsyncTimeout.awaitTimeout(AsyncTimeout.java:361)
    at okio.AsyncTimeout$Watchdog.run(AsyncTimeout.java:312)

Any help/insights on this would be greatly appreciated.
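For anyone checking their own dump for stuck InvokeHTTP threads, a rough Python sketch like the one below may save some scrolling. It assumes each thread entry starts with a header line shaped like the ones quoted above (`"name" Id=N STATE ...`) followed by `at ...` frames; real dumps may contain other line shapes that this ignores. The names `parse_thread_dump` and `threads_in` are made up for illustration, and `SAMPLE` is just two entries copied from the excerpt above.

```python
import re
from collections import Counter

# Header lines in the excerpt look like:
#   "Okio Watchdog" Id=556238 TIMED_WAITING on java.lang.Class@2b4512c0
HEADER = re.compile(r'^"(?P<name>[^"]+)"\s+Id=(?P<id>\d+)\s+(?P<state>[A-Z_]+)')


def parse_thread_dump(text):
    """Split a dump into a list of dicts with name, id, state and frames."""
    threads = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        match = HEADER.match(line)
        if match:
            current = {
                "name": match.group("name"),
                "id": int(match.group("id")),
                "state": match.group("state"),
                "frames": [],
            }
            threads.append(current)
        elif current is not None and line.startswith("at "):
            # Keep the frame text without the leading "at ".
            current["frames"].append(line[3:])
    return threads


def threads_in(threads, needle):
    """Return the threads that have `needle` somewhere in their stack frames."""
    return [t for t in threads if any(needle in f for f in t["frames"])]


# Two entries copied from the excerpt above (assumed representative).
SAMPLE = '''
"OkHttp ConnectionPool" Id=213155 TIMED_WAITING on okhttp3.ConnectionPool@72d6189
at java.lang.Object.wait(Native Method)
at java.lang.Object.wait(Object.java:460)
at okhttp3.ConnectionPool$1.run(ConnectionPool.java:67)

"Okio Watchdog" Id=556238 TIMED_WAITING on java.lang.Class@2b4512c0
at java.lang.Object.wait(Native Method)
at okio.AsyncTimeout.awaitTimeout(AsyncTimeout.java:361)
at okio.AsyncTimeout$Watchdog.run(AsyncTimeout.java:312)
'''

threads = parse_thread_dump(SAMPLE)
state_counts = Counter(t["state"] for t in threads)
okio_threads = [t["name"] for t in threads_in(threads, "okio.")]
stuck_in_invoke = threads_in(threads, "InvokeHTTP.onTrigger")
```

On a full dump, reading the file contents into `parse_thread_dump` and filtering with `threads_in(threads, "InvokeHTTP.onTrigger")` should list the threads currently inside the processor, if any; on the excerpt above it returns nothing, which matches the observation that the quoted entries do not mention InvokeHTTP.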

Created 10-07-2020 10:09 AM

@DavidNa I've also tested this on Java 11.0.8 (openjdk version "11.0.8" 2020-07-14 LTS) and found the same issue, but I'm having some success with earlier Java 8 builds (232, 252).

Created 10-12-2020 07:05 AM

We've also run into exactly this problem. So far we've tested a combination of NiFi and Java versions with mixed success: it seems the issue is a Java one and is present in a few different versions/distributions of Java.

Same symptoms: InvokeHTTP slowly locks up until all threads are locked and the queues back up.

We couldn't reproduce it on a simple AWS deployment involving one EC2 instance running an HTTP server that serves a small binary file, and another running a single-node, 2-core NiFi cluster. We haven't tested with a larger instance yet.

| | NiFi 1.9.2 | NiFi 1.11.4 | NiFi 1.12.1 |
|---|---|---|---|
| Oracle Java 1.8u144 | works | works | not tested |
| OpenJDK 1.8u242 | not tested | not tested | about to test |
| OpenJDK 1.8u252 | fails | fails | fails |
| OpenJDK 11.0.7 | not tested | not tested | fails |
| Amazon Corretto 11.0.8 | not tested | not tested | fails |

Created 10-13-2020 01:19 AM

To update: the combination of NiFi 1.12.1 and OpenJDK 1.8u242 works fine. I left it running overnight with no hanging. I expect NiFi 1.11.4 to also work with this Java version. Avoid the bleeding edge!

Created 11-12-2020 02:30 AM

I've had some success in stabilising InvokeHTTP by adding a "Connection" property and setting its value to "close". As I understand it, this will effectively disable HTTP keepalives in the underlying libraries.
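To illustrate what that header does at the HTTP level, here is a small Python sketch. It is not NiFi or OkHttp code, and it says nothing about how InvokeHTTP handles the property internally. It starts a throwaway local HTTP/1.1 server that echoes back the `Connection` header it received, then sends one request with `Connection: close`. The names `EchoConnectionHeader`, `fetch_with_connection_close` and `demo` are hypothetical, chosen only for this example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoConnectionHeader(BaseHTTPRequestHandler):
    """Throwaway test server: replies with the Connection header it received."""

    protocol_version = "HTTP/1.1"  # persistent connections are the HTTP/1.1 default

    def do_GET(self):
        body = (self.headers.get("Connection") or "").encode("ascii")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # silence per-request logging


def fetch_with_connection_close(host, port, path="/"):
    """Send one GET that asks the server not to keep the connection open."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path, headers={"Connection": "close"})
        resp = conn.getresponse()
        return resp.status, resp.read().decode("ascii")
    finally:
        conn.close()


def demo():
    """Run the server on a free local port and make a single request."""
    server = HTTPServer(("127.0.0.1", 0), EchoConnectionHeader)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        return fetch_with_connection_close("127.0.0.1", server.server_address[1])
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns the status code together with the header value the server saw. With `Connection: close`, an HTTP/1.1 server is expected to close the socket after responding rather than hold it for reuse, which is the keepalive behaviour the workaround is trying to avoid.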

Created 12-07-2020 12:21 PM

We've been running JDK 1.8.0_242 and NiFi 1.12.1 for almost a month now without incident.

Created on 12-08-2020 12:47 AM - edited 12-08-2020 12:48 AM

Same. Since reverting to 242 in October, we've been fine on both 1.11.4 and 1.12.1 (prod/test clusters).

EDIT: Not tried the "Connection: close" option mentioned above!

Created 04-14-2021 10:35 AM

We had the hanging concurrent tasks problem running NiFi 1.11.4. Upgrading to 1.13.2 resolved it for us.
