Is there an easy way to test Spark applications within Eclipse instead of running the jars from the terminal?

Labels: Apache Spark

Created 09-12-2016 06:38 PM

I am using Eclipse to build Spark applications, and every time I want to test one I have to export the jar and run it from the shell. I am running the CDH 5.5.2 QuickStart VM. Eclipse is installed on my Windows host, where I create the Spark applications; I export each one as a jar file from Eclipse, copy it over to the Linux guest, and then run it with spark-submit. This gets very annoying, because if I miss something in the program and the build still succeeds, the application fails at execution, and I have to fix the code, export the jar again, run it again, and so on. Is there a much simpler way to run the job right from Eclipse, with the input file in HDFS? (Please note that I don't want to run Spark in local mode.) Would that be a better way of working? What are the industry standards for developing, testing, and deploying Spark applications in production?

Created 09-12-2016 07:18 PM

Take a look at this article, which has very detailed instructions: http://www.coding-daddy.xyz/node/7

You can also run a local Spark server, which is easy to set up.

You could also use Zeppelin or the Spark REPL so you can test as you develop.

Or use the Spark testing framework: https://github.com/holdenk/spark-testing-base

Created on 09-13-2016 06:37 PM - edited 08-18-2019 06:17 AM

I have done the following:

In my main method:

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setAppName("Simple Application").setMaster("local");
        JavaSparkContext sc = new JavaSparkContext(conf);
        ...

My app needs a JSON file, so in my run configuration I just put the following on the Arguments > Program arguments tab:

/Users/bhagan/Documents/jsonfile.json

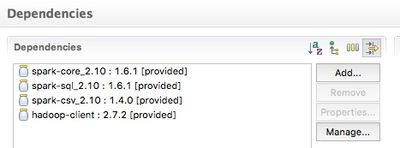

And make sure you have all the dependencies you need in your pom.xml:

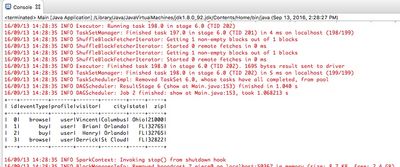

Run it and see the output:

Give it a shot and let us know if you get it working.
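One caveat with hardcoding `setMaster("local")`: according to the Spark configuration docs, properties set directly on the SparkConf take precedence over flags passed to spark-submit, so a hardcoded master would also win when the jar is later submitted to a cluster. A minimal sketch of one way around that is below. `MasterResolver` and its `local[*]` fallback are hypothetical names chosen for illustration, not part of Spark, and the sketch assumes the master reaches the driver JVM as the `spark.master` system property when launched through spark-submit.

```java
import java.util.Properties;

/**
 * Hypothetical helper (not part of Spark): picks a master URL so the same
 * main class can run from an Eclipse run configuration and from spark-submit.
 */
final class MasterResolver {

    /** Fallback used only when no master was supplied (an assumption). */
    static final String DEFAULT_MASTER = "local[*]";

    /**
     * Returns the "spark.master" property if it is set and non-blank,
     * otherwise DEFAULT_MASTER.
     */
    static String resolve(Properties props) {
        String master = props.getProperty("spark.master");
        if (master == null || master.trim().isEmpty()) {
            return DEFAULT_MASTER;
        }
        return master.trim();
    }

    public static void main(String[] args) {
        // In a Spark application this value would be passed on, e.g.
        // new SparkConf().setAppName("Simple Application").setMaster(master)
        String master = resolve(System.getProperties());
        System.out.println("Using master: " + master);
    }
}
```

Run from Eclipse with no extra settings, this falls back to local mode; adding `-Dspark.master=...` under VM arguments in the run configuration overrides it without touching the code.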

Created 09-13-2016 06:57 PM

Thanks for your reply; however, I wanted to run it directly on a cluster and not in local mode.

Created 09-15-2016 02:44 PM

Oh, sorry I missed that.

Created 09-15-2016 05:43 PM

Currently, Spark does not support deploying to YARN from a SparkContext. Use spark-submit instead. For unit testing, the local runner (master `local`) is recommended.

The problem is that you cannot set the Hadoop configuration from outside the SparkContext; it is read from the *-site.xml config files under HADOOP_HOME during spark-submit. So you cannot point to your remote cluster from Eclipse unless you set up the correct *-site.xml files on your laptop and use spark-submit.

SparkSubmit is available as a Java class, but I doubt that you will achieve what you are looking for with it. You would, however, be able to launch a Spark job from Eclipse on a remote cluster, if that is sufficient for you. Have a look at the Oozie Spark launcher as an example.
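As a rough illustration of launching a job from the IDE, here is a minimal sketch that shells out to spark-submit from plain Java instead of calling the SparkSubmit class directly. It assumes `spark-submit` is on the PATH of the machine running Eclipse and that the cluster's *-site.xml files have been copied to a local directory. `SparkSubmitRunner`, the main class name, the jar path, and the HDFS path are all hypothetical placeholders. Spark also ships `org.apache.spark.launcher.SparkLauncher` for this purpose, which may be a better fit if the launcher jar is already on your classpath.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: builds and runs a spark-submit command line. */
final class SparkSubmitRunner {

    /** Builds the argument list for a YARN cluster-mode submission. */
    static List<String> buildCommand(String mainClass, String jarPath, String... appArgs) {
        List<String> cmd = new ArrayList<>();
        cmd.add("spark-submit");            // assumed to be on the PATH
        cmd.add("--class");
        cmd.add(mainClass);
        cmd.add("--master");
        cmd.add("yarn");
        cmd.add("--deploy-mode");
        cmd.add("cluster");
        cmd.add(jarPath);
        cmd.addAll(Arrays.asList(appArgs)); // arguments passed to the application
        return cmd;
    }

    /** Runs the command with HADOOP_CONF_DIR set; returns the exit code. */
    static int run(List<String> command, String hadoopConfDir)
            throws IOException, InterruptedException {
        ProcessBuilder pb = new ProcessBuilder(command);
        // Directory holding the cluster's *-site.xml files (local copy).
        pb.environment().put("HADOOP_CONF_DIR", hadoopConfDir);
        pb.inheritIO(); // show spark-submit output in the Eclipse console
        return pb.start().waitFor();
    }

    public static void main(String[] args) {
        // Placeholder values; only prints the command, does not submit.
        List<String> cmd = buildCommand(
                "com.example.SimpleApp",
                "target/simple-app.jar",
                "hdfs:///user/cloudera/input.json");
        System.out.println(String.join(" ", cmd));
    }
}
```

Calling `run(cmd, "/path/to/hadoop-conf")` from a run configuration would then submit the freshly built jar in one step, though the jar still has to be rebuilt before each launch.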

SparkContext is changing dramatically in Spark 2, in favor, I think, of SparkClient, to support multiple SparkContexts. I am not sure what the situation is with that.