Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Issue with ConvertRecord or ConvertJSONToAvro ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Issue with ConvertRecord or ConvertJSONToAvro when an element is missing.

- Labels:

-

Apache NiFi

Created 03-20-2018 03:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I am reading a JSON file , and splitting in to rows by using SplitJSON processor and then i am trying to convert then to AVRO using ConvertRecord or ConvertJSONToAvro. I gave the JSON schema with all the possible columns. It is converting the records when it contains all the fields\elements. But if it misses an element its routing to failures.

how do you solve this.?? all the incoming records wont have all the fields in schema.

Created 03-20-2018 07:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's easy to use ConvertRecord processor because this processor even work with Array of Json objects, so you don't need to use split json processor.

I think the issue that you are facing is because of there are no default values specified for the json fields, if the default values are specified for all the fields then if the value is not present for the field/element then conversion to avro doesn't fail it will have the default value that was defined for the field.

Example:-

Case1:-

i'm having the below json array with 3 json objects in it

[{"year" : "2018","mon":"01","mday":"02"},

{"year" : "2018","mon":"01","mday":"02","hour":"01"},

{"year" : "2018","mon":"01","mday":"02","hour":"01"}]trying to convert json to avro using convert record processor and my avro schema looks like

{

"type": "record",

"name": "nifi_logs",

"fields": [

{ "name": "year", "type": ["null","string"] },

{ "name": "mon", "type": ["null","string"] },

{ "name": "mday", "type": ["null","string"] },

{ "name": "hour", "type": ["null","string"] }

]

}now i'm having all the default values for each field/element as null, as if you see our input json array i'm missing hour filed in first json object, even though we are missing the field with the above avro schema we are going to have null for hour field.

Case2:-

{

"type": "record",

"name": "nifi_logs",

"fields": [

{ "name": "year", "type": ["null","string"] },

{ "name": "mon", "type": ["null","string"] },

{ "name": "mday", "type": ["null","string"] },

{ "name": "hour", "type": "string" }

]if our avro schema like above then we don't have any default value for the hour field so if the incoming data doesn't having hour field then that record goes to failure because there is no default value defined for the hour field.

Define all the default values for the all fields/elements that you are having then run the processor again,

Let us know if you are having any issues..!!

Created 03-20-2018 07:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's easy to use ConvertRecord processor because this processor even work with Array of Json objects, so you don't need to use split json processor.

I think the issue that you are facing is because of there are no default values specified for the json fields, if the default values are specified for all the fields then if the value is not present for the field/element then conversion to avro doesn't fail it will have the default value that was defined for the field.

Example:-

Case1:-

i'm having the below json array with 3 json objects in it

[{"year" : "2018","mon":"01","mday":"02"},

{"year" : "2018","mon":"01","mday":"02","hour":"01"},

{"year" : "2018","mon":"01","mday":"02","hour":"01"}]trying to convert json to avro using convert record processor and my avro schema looks like

{

"type": "record",

"name": "nifi_logs",

"fields": [

{ "name": "year", "type": ["null","string"] },

{ "name": "mon", "type": ["null","string"] },

{ "name": "mday", "type": ["null","string"] },

{ "name": "hour", "type": ["null","string"] }

]

}now i'm having all the default values for each field/element as null, as if you see our input json array i'm missing hour filed in first json object, even though we are missing the field with the above avro schema we are going to have null for hour field.

Case2:-

{

"type": "record",

"name": "nifi_logs",

"fields": [

{ "name": "year", "type": ["null","string"] },

{ "name": "mon", "type": ["null","string"] },

{ "name": "mday", "type": ["null","string"] },

{ "name": "hour", "type": "string" }

]if our avro schema like above then we don't have any default value for the hour field so if the incoming data doesn't having hour field then that record goes to failure because there is no default value defined for the hour field.

Define all the default values for the all fields/elements that you are having then run the processor again,

Let us know if you are having any issues..!!

Created on 03-20-2018 08:57 PM - edited 08-18-2019 12:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shu ,

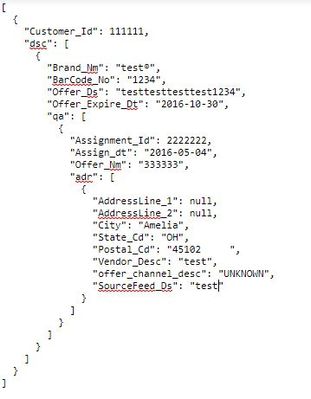

Thank you. It helped. i was able to supply nulls and it came out like below..

one another question along the same lines.

what is the best way to split the this JSON in to multiple files based on some columns so that i can create Hive tables on them.

for example files with

Customer_Id,Brand_Nm,BarCode_No,Offer_Ds,Offer_Expire_Dt

Customer_Id,Assignment_id,Assign_Dt,Offer_Nm

Customer_Id,Address_Line_1,Address_Line_2,City,State_Cd,Postal_Cd,Vendor_Desc,Offer,Source

Created on 03-20-2018 10:24 PM - edited 08-18-2019 12:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

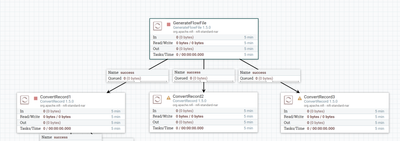

I think it's better to go with 3 convert record processors in parallel with same JsonReader(as the json content is same for all) and different JsonSetWriters(we need different elements in each file) to get 3 different files i.e

Customer_Id,Brand_Nm,BarCode_No,Offer_Ds,Offer_Expire_Dt(convertrecord1)

Customer_Id,Assignment_id,Assign_Dt,Offer_Nm(convertrecord2)

Customer_Id,Address_Line_1,Address_Line_2,City,State_Cd,Postal_Cd,Vendor_Desc,Offer,Source(convertrecord3)

Flow:-

As input json object/message having nested arrays i have used jsonpathreader controller service and added avro controller service and added all the user defined properties with matching json expressions. this reader will be same for all the three Convertrecord processors.

in JsonSetwriter controller service i have used schema text property to define our required schema.

i tried with one ConvertRecord processor and attached .xml for reference, you can save and add your required elements as per your requirements.

Let us know if you have any issues..!!

Created 03-21-2018 03:16 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shu,

I am not able to use your template as it gave me this error Invalid UUID string: 03701c5912a831d30000000000000000.

anyway will it work if the incoming file have many records (330K in this case) , at the end it will split in to 3 files.? That would be awesome.

also I can have multiple addresses for a customer , if we hard code with 0 will it get all the rows .?

Regards,

Sai

Created 03-21-2018 04:42 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried a load test of 500k records

input:-

{

"Customer_Id": 11111,

"dsc": [{

"Brand_Nm": "test",

"qa": [{

"Assignment_Id": 22,

"addr": [{

"AddressLine_1": null,

"City": "Amelia"

}]

}]

}]

}I repeated the above message for 500k records and used convert record processor it got completed in 5 sec.

In your case you will have record size is more but still if you are having enough heap space, convert record will work without any issues..!!

Could you please share 10 records that having multiple addresses for a customer and the expected output so that i can try on my end.

For reference i created new template please try to save this template.

Created on 03-21-2018 05:57 PM - edited 08-18-2019 12:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shu,

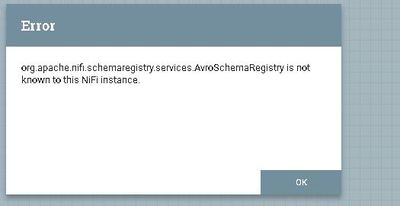

i am still not able to use the template now getting the error (screen shot attached).

but here is a test json..basically all the arrays can repeat multiple times..how to flatten those..??

{ "Customer_Id": 11111, "dsc": [ { "Brand_Nm": "test", "qa": [ { "Assignment_Id": 222, "addr": [ { "AddressLine_1": "stl", "City": "stl" } ] },{ "Assignment_Id": 223, "addr": [ { "AddressLine_1": "stc", "City": "stc" } ] } ] }, { "Brand_Nm": "test2", "qa": [ { "Assignment_Id": 333, "addr": [ { "AddressLine_1": "ofl", "City": "ofl" } ] },{ "Assignment_Id": 334, "addr": [ { "AddressLine_1": "estl", "City": "estl" }, { "AddressLine_1": "estl1", "City": "estl1" } ] } ] } ] }

i like it break it as 3 files like

Customer_Id,Brand_Nm

11111,test

11111,test2

Customer_Id,qa_Assignment_Id

11111,222

11111,223

11111,333

11111,334

Customer_Id,Assignment_Id,AddressLine_1,City

11111,222,stc,stc

11111,223,ofl,ofl

11111,333,estl,estl

11111,334,estl,estl

11111,334,estl1,estl1

and also is it possible to flatten the entire structure..

Customer_Id,Brand_Nm,assignment_id,addressline_1,city 1

1111,test,222,stl,sttl

11111,test,223,stc,stc

11111,test2,333,ofl,ofl

11111,test2,334,estl,estl

11111,test2,334,estl1,estl1

Created 04-18-2020 12:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can anyone please tell me why the value is returning null when I converting xml to json?

[ {

"trackTopology" : {

"trackBegin" : {

"connection" : null

},

"trackEnd" : {

"connection" : null

},

"connections" : {

"switch" : [ {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

}, {

"connection" : null

} ]

},

"vertexesWithKP" : null,

"trackTopologyInfos" : {

"trackTopologyInfo" : {

"UEVOL_Territory" : true,

"type" : "Mainline",

"Driverless_Horn_Activation" : false,

"Loop" : false

}

}

Created 04-19-2020 05:13 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @DarkStar

As this thread was marked 'Solved' in March of 2018 you would have a better chance of receiving a resolution by starting a new thread. This will also provide the opportunity to provide details specific to your XML source that could aid others in providing a more targeted answer to your question.

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.