Issue with Nifi 1.1.1 cluster and Process set to "Primary node"

Labels: Apache NiFi, Apache Zookeeper

Created on 10-02-2017 05:26 PM - edited 09-16-2022 05:19 AM

I am new to NiFi and trying to learn how to use it. I have set up two servers with NiFi and configured them as a cluster. I am currently having two issues that I think are related.

1 - If I take one server offline, I cannot access NiFi.

2 - If I set a work process to execute on "Primary node", it does not run. If I set it to "All nodes", then I get two copies of the work.

Any help would be appreciated. I have included what I think is the pertinent data from both servers for /conf/nifi.properties, /conf/zookeeper.properties, and /state/zookeeper/myid.

Thank you.

For Node1.com

My /conf/nifi.properties

####################

# State Management #

####################

nifi.state.management.configuration.file=./conf/state-management.xml

# The ID of the local state provider

nifi.state.management.provider.local=local-provider

# The ID of the cluster-wide state provider. This will be ignored if NiFi is not clustered but must be populated if running in a cluster.

nifi.state.management.provider.cluster=zk-provider

# Specifies whether or not this instance of NiFi should run an embedded ZooKeeper server

nifi.state.management.embedded.zookeeper.start=true

# Properties file that provides the ZooKeeper properties to use if <nifi.state.management.embedded.zookeeper.start> is set to true

nifi.state.management.embedded.zookeeper.properties=./conf/zookeeper.properties

# web properties #

nifi.web.war.directory=./lib

nifi.web.http.host=Node1.com

nifi.web.http.port=8181

nifi.web.https.host=

nifi.web.https.port=

nifi.web.jetty.working.directory=./work/jetty

nifi.web.jetty.threads=200

# cluster node properties (only configure for cluster nodes) #

nifi.cluster.is.node=true

nifi.cluster.node.address=Node1.com

nifi.cluster.node.protocol.port=12000

nifi.cluster.node.protocol.threads=10

nifi.cluster.node.event.history.size=25

nifi.cluster.node.connection.timeout=5 sec

nifi.cluster.node.read.timeout=5 sec

nifi.cluster.firewall.file=

nifi.cluster.flow.election.max.wait.time=5 mins

nifi.cluster.flow.election.max.candidates=2

# zookeeper properties, used for cluster management #

nifi.zookeeper.connect.string=Node1.com:2181,Node2.com:2182

nifi.zookeeper.connect.timeout=3 secs

nifi.zookeeper.session.timeout=3 secs

nifi.zookeeper.root.node=/nifi

My /conf/zookeeper.properties

clientPort=2181

initLimit=10

autopurge.purgeInterval=24

syncLimit=5

tickTime=2000

dataDir=./state/zookeeper

autopurge.snapRetainCount=30

server.1=Node1.com:2888:3888

server.2=Node2.com:2888:3888

My /state/zookeeper/myid

1

For Node2.com

My /conf/nifi.properties

####################

# State Management #

####################

nifi.state.management.configuration.file=./conf/state-management.xml

# The ID of the local state provider

nifi.state.management.provider.local=local-provider

# The ID of the cluster-wide state provider. This will be ignored if NiFi is not clustered but must be populated if running in a cluster.

nifi.state.management.provider.cluster=zk-provider

# Specifies whether or not this instance of NiFi should run an embedded ZooKeeper server

nifi.state.management.embedded.zookeeper.start=true

# Properties file that provides the ZooKeeper properties to use if <nifi.state.management.embedded.zookeeper.start> is set to true

nifi.state.management.embedded.zookeeper.properties=./conf/zookeeper.properties

# web properties #

nifi.web.war.directory=./lib

nifi.web.http.host=Node2.com

nifi.web.http.port=8181

nifi.web.https.host=

nifi.web.https.port=

nifi.web.jetty.working.directory=./work/jetty

nifi.web.jetty.threads=200

# cluster node properties (only configure for cluster nodes) #

nifi.cluster.is.node=true

nifi.cluster.node.address=Node2.com

nifi.cluster.node.protocol.port=12000

nifi.cluster.node.protocol.threads=10

nifi.cluster.node.event.history.size=25

nifi.cluster.node.connection.timeout=5 sec

nifi.cluster.node.read.timeout=5 sec

nifi.cluster.firewall.file=

nifi.cluster.flow.election.max.wait.time=5 mins

nifi.cluster.flow.election.max.candidates=2

# zookeeper properties, used for cluster management #

nifi.zookeeper.connect.string=Node1.com:2181,Node2.com:2182

nifi.zookeeper.connect.timeout=3 secs

nifi.zookeeper.session.timeout=3 secs

nifi.zookeeper.root.node=/nifi

My /conf/zookeeper.properties

clientPort=2181

initLimit=10

autopurge.purgeInterval=24

syncLimit=5

tickTime=2000

dataDir=./state/zookeeper

autopurge.snapRetainCount=30

server.1=Node1.com:2888:3888

server.2=Node2.com:2888:3888

My /state/zookeeper/myid

2

Created 10-02-2017 05:43 PM

1. NiFi is designed to prevent changes while a node is disconnected. Each node runs its own copy of the flow.xml.gz. When a node is disconnected, the cluster coordinator has no means to determine the state of components on that disconnected node. If changes were allowed, that node would be unable to automatically rejoin the cluster without manual intervention to bring it back in sync. To regain control of the canvas, you can access the cluster UI and drop the disconnected node from the cluster. Keep in mind that if you later want to rejoin that node, you will need to make sure the flow.xml.gz, users.xml (if used when NiFi is secured), and authorizations.xml (if used when NiFi is secured) are all in sync with what is currently being used in the cluster (you can copy these from another active cluster node). *** Be mindful that if a change you made removed a connection that contained data on your disconnected node, that data will be lost on startup once you replace the flow.xml.gz. If NiFi cannot find the connection in which to place the queued data, it is lost.

2. You said that if you set a Processor component to execute on "Primary node", it does not run. Which component, and how do you know it did not run? I have not seen this happen before. Processors that use non-cluster-friendly protocols should be run on the Primary Node only to prevent data duplication, as you noted above. If you are consuming a lot of data using one of these protocols, it is suggested you use the List/Fetch processors (example: ListSFTP/FetchSFTP) along with NiFi's Site-To-Site (S2S) capability to redistribute the listed FlowFiles to all nodes in your cluster before the Fetch.

Also note that you need an odd number of ZooKeeper nodes in order to have quorum (3 minimum). Using the embedded ZooKeeper means you lose that ZK node anytime you restart that NiFi instance. I don't recommend using embedded ZK in production.
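To make the quorum point concrete: ZooKeeper stays available only while a strict majority of its configured servers are up. The sketch below is plain arithmetic, not a ZooKeeper API, and the function names are made up for illustration. It shows why the two-server ensemble in the question cannot survive losing either node, and why an even number of servers tolerates no more failures than the odd number just below it.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be at least 1")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many servers can be down while a majority is still up."""
    return ensemble_size - quorum_size(ensemble_size)


if __name__ == "__main__":
    # Print the majority size and failure tolerance for small ensembles.
    for n in range(1, 6):
        print(f"{n} server(s): quorum={quorum_size(n)}, "
              f"can lose {tolerated_failures(n)}")
```

With 2 servers the quorum is 2, so stopping one node leaves no majority. With 3 servers the quorum is still 2, so the ensemble can lose one node and keep running.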

Thanks,

Matt

Created 10-03-2017 12:56 PM

Tip: try to avoid creating new answers for every correspondence. Add comments to existing answers to continue that discussion thread.

1. It is possible to have a one-node cluster, but it is not very efficient. By setting it up as a cluster you are adding the overhead of ZooKeeper, which is not needed with a standalone NiFi install. With regard to your "An unexpected error has occurred", this has nothing to do with there only being one node. Something else is going on that would need to be investigated via the NiFi logs (nifi-app.log, nifi-user.log, and/or nifi-bootstrap.log).

2. ExecuteScript->ExecuteStreamCommand->ExecuteScript->ExecuteScript->InvokeHTTP. (That is a lot of custom code in a dataflow.) Do you really need so many script/command based processors to satisfy your use case? You also must remember that each node runs its own copy of the dataflow and, with the exception of the cluster coordinator, has no notion of the existence of other nodes. A node simply runs the dataflow independently and works with its own set of files and NiFi repos. So make sure that your scripts exist on every node in your cluster in the same directory locations with proper permissions.
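Since each node executes the flow on its own, one quick check to run on every node is whether the script files referenced by the ExecuteScript and ExecuteStreamCommand processors are present and accessible. A minimal sketch follows. The helper name and the example paths are placeholders, not taken from this thread, and the check only reflects the account that runs it, so it would need to be run as the same user that runs NiFi.

```python
import os
from typing import Dict, Iterable


def check_scripts(paths: Iterable[str], need_exec: bool = False) -> Dict[str, str]:
    """Map each path to 'ok', 'missing', 'not readable', or 'not executable'."""
    results = {}
    for path in paths:
        if not os.path.isfile(path):
            results[path] = "missing"
        elif not os.access(path, os.R_OK):
            results[path] = "not readable"
        elif need_exec and not os.access(path, os.X_OK):
            results[path] = "not executable"
        else:
            results[path] = "ok"
    return results


if __name__ == "__main__":
    # Placeholder paths: replace with the files your processors point at.
    example_paths = ["/opt/nifi/scripts/step1.py", "/opt/nifi/scripts/fetch.sh"]
    for path, status in check_scripts(example_paths).items():
        print(f"{path}: {status}")
```

Running the same script on each node and comparing the output should show quickly whether one node is missing a file that the others have.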

There is nothing fancy about the ZooKeeper needed by NiFi. ZooKeeper is just another Apache project and has its own documentation ( https://zookeeper.apache.org/ ). You do not need a ZooKeeper node for every NiFi node. A 3 node ZK is perfect for most NiFi clusters.
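One thing that is easy to get wrong when pointing NiFi at ZooKeeper is the client port: each host:port entry in nifi.zookeeper.connect.string should match the clientPort that ZooKeeper server listens on. In the configuration posted at the top of this thread, the connect string lists Node2.com:2182 while both zookeeper.properties files show clientPort=2181, which may be worth double-checking. The sketch below is a hypothetical helper, not part of NiFi or ZooKeeper, that flags such mismatches. It assumes every server uses the same client port and that every entry is written as host:port with no chroot suffix.

```python
from typing import List, Tuple


def parse_connect_string(connect_string: str) -> List[Tuple[str, int]]:
    """Split 'host1:port1,host2:port2' into (host, port) pairs."""
    pairs = []
    for entry in connect_string.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host, int(port)))
    return pairs


def find_port_mismatches(connect_string: str, client_port: int) -> List[Tuple[str, int]]:
    """Return the entries whose port differs from the expected clientPort."""
    return [(host, port)
            for host, port in parse_connect_string(connect_string)
            if port != client_port]


if __name__ == "__main__":
    # Values copied from the nifi.properties and zookeeper.properties in the question.
    connect = "Node1.com:2181,Node2.com:2182"
    print(find_port_mismatches(connect, client_port=2181))
```

For the values in the question this reports the Node2.com:2182 entry. An empty list means every entry agrees with the given clientPort.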

If you found this answer addressed the question asked in this forum, please click "accept".

Thanks,

Matt

Created 02-11-2020 11:15 AM

Hi,

I am trying to run NiFi as a 3-node cluster on Windows 10, with the same properties set in all 3 conf files as shown below, but it still fails to start as a 3-node cluster. Please help with this cluster configuration.

Below are the settings done on my files

state-management.xml file

<cluster-provider>

<id>zk-provider</id>

<class>org.apache.nifi.controller.state.providers.zookeeper.ZooKeeperStateProvider</class>

<property name="Connect String">node1:2181,node2:2181,node3:2181</property>

<property name="Root Node">/nifi</property>

<property name="Session Timeout">10 seconds</property>

<property name="Access Control">Open</property>

</cluster-provider>

zookeeper.properties

dataDir=./state/zookeeper

autopurge.snapRetainCount=30

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

nifi properties:

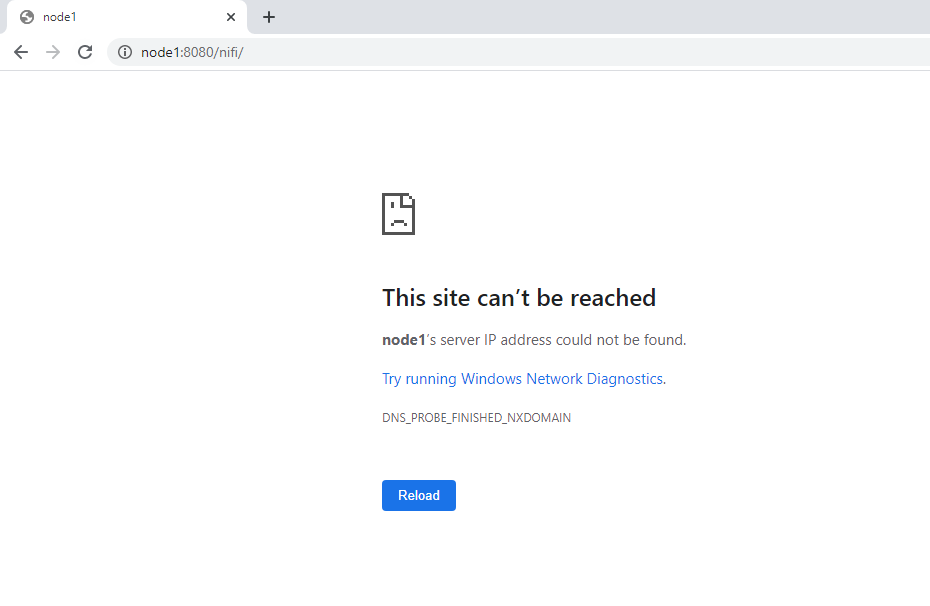

nifi.web.http.host=node1

nifi.web.http.port=8080

nifi.cluster.is.node=true

nifi.cluster.node.address=node1

nifi.cluster.node.protocol.port=11122

nifi.zookeeper.connect.string=node1:2181,node2:2181,node3:2181

nifi.zookeeper.root.node=/nifi

On the other two nodes, the host names are set to node2 and node3 respectively.

C:\nifi-1.11.0-bin\Node1\bin>run-nifi.bat

2020-02-11 20:29:20,237 INFO [main] org.apache.nifi.bootstrap.Command Starting Apache NiFi...

2020-02-11 20:29:20,238 INFO [main] org.apache.nifi.bootstrap.Command Working Directory: C:\NIFI-1~1.0-B\Node1

2020-02-11 20:29:20,239 INFO [main] org.apache.nifi.bootstrap.Command Command: C:\Program Files\Java\jdk1.8.0_241\bin\java.exe -classpath C:\NIFI-1~1.0-B\Node1\.\conf;C:\NIFI-1~1.0-B\Node1\.\lib\javax.servlet-api-3.1.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\jcl-over-slf4j-1.7.26.jar;C:\NIFI-1~1.0-B\Node1\.\lib\jetty-schemas-3.1.jar;C:\NIFI-1~1.0-B\Node1\.\lib\jul-to-slf4j-1.7.26.jar;C:\NIFI-1~1.0-B\Node1\.\lib\log4j-over-slf4j-1.7.26.jar;C:\NIFI-1~1.0-B\Node1\.\lib\logback-classic-1.2.3.jar;C:\NIFI-1~1.0-B\Node1\.\lib\logback-core-1.2.3.jar;C:\NIFI-1~1.0-B\Node1\.\lib\nifi-api-1.11.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\nifi-framework-api-1.11.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\nifi-nar-utils-1.11.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\nifi-properties-1.11.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\nifi-runtime-1.11.0.jar;C:\NIFI-1~1.0-B\Node1\.\lib\slf4j-api-1.7.26.jar -Dorg.apache.jasper.compiler.disablejsr199=true -Xmx512m -Xms512m -Djavax.security.auth.useSubjectCredsOnly=true -Djava.security.egd=file:/dev/urandom -Dsun.net.http.allowRestrictedHeaders=true -Djava.net.preferIPv4Stack=true -Djava.awt.headless=true -Djava.protocol.handler.pkgs=sun.net.www.protocol -Dzookeeper.admin.enableServer=false -Dnifi.properties.file.path=C:\NIFI-1~1.0-B\Node1\.\conf\nifi.properties -Dnifi.bootstrap.listen.port=53535 -Dapp=NiFi -Dorg.apache.nifi.bootstrap.config.log.dir=C:\NIFI-1~1.0-B\Node1\bin\..\\logs org.apache.nifi.NiFi

2020-02-11 20:29:20,554 WARN [main] org.apache.nifi.bootstrap.Command Failed to set permissions so that only the owner can read pid file C:\NIFI-1~1.0-B\Node1\bin\..\run\nifi.pid; this may allows others to have access to the key needed to communicate with NiFi. Permissions should be changed so that only the owner can read this file

2020-02-11 20:29:20,561 WARN [main] org.apache.nifi.bootstrap.Command Failed to set permissions so that only the owner can read status file C:\NIFI-1~1.0-B\Node1\bin\..\run\nifi.status; this may allows others to have access to the key needed to communicate with NiFi. Permissions should be changed so that only the owner can read this file

2020-02-11 20:29:20,573 INFO [main] org.apache.nifi.bootstrap.Command Launched Apache NiFi with Process ID 4284

______________________________

C:\nifi-1.11.0-bin\Node1\bin>status-nifi.bat

20:31:57.265 [main] DEBUG org.apache.nifi.bootstrap.NotificationServiceManager - Found 0 service elements

20:31:57.271 [main] INFO org.apache.nifi.bootstrap.NotificationServiceManager - Successfully loaded the following 0 services: []

20:31:57.272 [main] INFO org.apache.nifi.bootstrap.RunNiFi - Registered no Notification Services for Notification Type NIFI_STARTED

20:31:57.273 [main] INFO org.apache.nifi.bootstrap.RunNiFi - Registered no Notification Services for Notification Type NIFI_STOPPED

20:31:57.274 [main] INFO org.apache.nifi.bootstrap.RunNiFi - Registered no Notification Services for Notification Type NIFI_DIED

20:31:57.277 [main] DEBUG org.apache.nifi.bootstrap.Command - Status File: C:\NIFI-1~1.0-B\Node1\bin\..\run\nifi.status

20:31:57.278 [main] DEBUG org.apache.nifi.bootstrap.Command - Status File: C:\NIFI-1~1.0-B\Node1\bin\..\run\nifi.status

20:31:57.279 [main] DEBUG org.apache.nifi.bootstrap.Command - Properties: {pid=4284, port=53536}

20:31:57.280 [main] DEBUG org.apache.nifi.bootstrap.Command - Pinging 53536

20:31:58.343 [main] DEBUG org.apache.nifi.bootstrap.Command - Process with PID 4284 is not running

20:31:58.345 [main] INFO org.apache.nifi.bootstrap.Command - Apache NiFi is not running

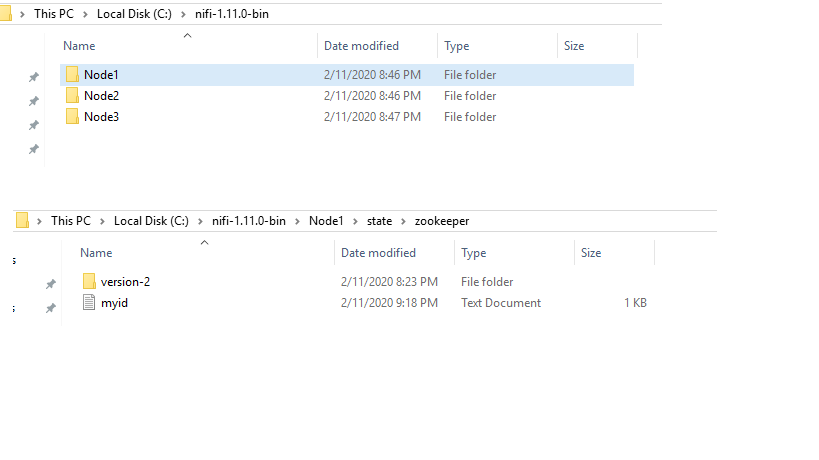

This is how my folder structure is:

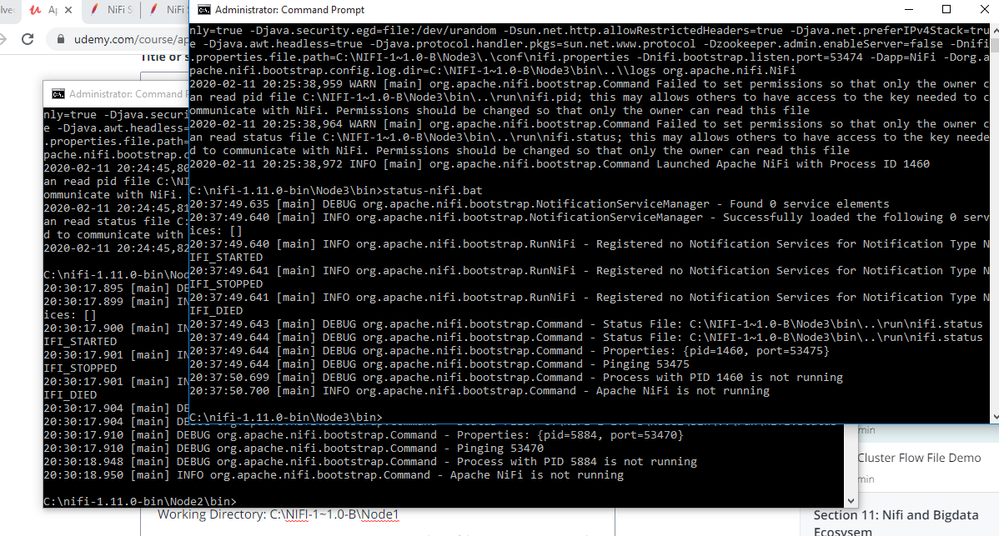

Below is the image of the Node 2 and Node 3 command prompts.

Created 02-11-2020 08:53 PM

Hi @Sush217, as this is an older thread which was marked 'Solved' in the fall of 2017, you would have a better chance of receiving a resolution by starting a new thread. This will also give you an opportunity to provide details specific to your environment that could help others give a useful answer, as the configuration at issue in the original question here is not the same as the one you are working with.

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 02-12-2020 01:24 PM

This thread/topic is very old and has an accepted answer. Please start a new question/topic with your query.

We'll be happy to assist you.

Matt

Created 10-03-2017 12:57 PM

Another tip: use the @<username> in your responses/comments so that the user is notified when you add a response/comment.

Created 10-04-2017 01:26 AM

Thank you, Matt. I will be making changes to my environment and will hopefully improve with every new challenge.

Created 10-03-2017 04:13 AM

Matt,

Thank you for your reply. It shows me that I still have a lot to learn about NiFi.

1. This explanation helped me. You mention the Cluster UI. Is that different than http://Node1:8181/nifi? I ask because when I have only one node running I only get a screen telling me "An unexpected error has occurred".

2. For my testing I have it set to run every 5 min. I have created a simple flow consisting of ExecuteScript->ExecuteStreamCommand->ExecuteScript->ExecuteScript->InvokeHTTP. I have tried setting just the first one to “Primary node only”, and I have also set them all to “Primary node only”. In both tests, after waiting 30 min. and not getting any data, I simply switched them to “All nodes” and started to get data within minutes. I think I should first get my cluster set up properly and then come back to this issue. As they say, baby steps.

I see that you are recommending against using the embedded ZooKeeper. Do you know of a good link explaining where to get ZooKeeper and how to configure it with NiFi? Do you need to have one NiFi for each ZooKeeper?

Thank you for all your help.

Andre