Issue with NiFi MergeContent: FlowFiles stay in the queue indefinitely!

Labels: Apache NiFi

Created on 03-10-2017 02:05 PM - edited 08-18-2019 05:51 AM

I have a flow where I am using the MergeContent processor. I recently noticed that some FlowFiles stay indefinitely in the queue just before MergeContent. I can't figure out the issue, so I am asking for your help!

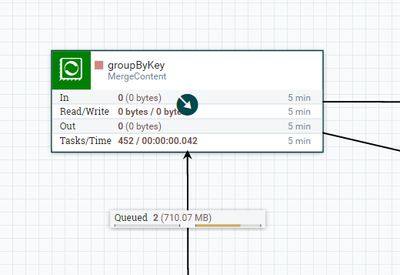

This is the part of the flow that I am talking about :

Here is the configuration of the MergeContent processor. It merges on the attribute called "cle", and that attribute has the same value for the two FlowFiles in the queue, but they still don't merge:

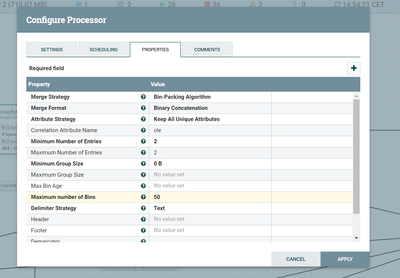

Finally here is the content of the queue :

Is this due to the size of the first FlowFile (710 MB)? Is there a maximum size for a bin? If so, why isn't it merged after reaching that size?

Thank you for your help!

Created on 03-14-2017 05:48 PM - edited 08-18-2019 05:50 AM

Each node in a NiFi cluster runs its own copy of the dataflow and works on its own set of FlowFiles.

Looking at the screenshot of your queue listing above, you can see that the two FlowFiles are not on the same node. Each node is running its own MergeContent processor, and each node is waiting for another FlowFile to complete its bin. You will need to look earlier in your dataflow at how your data is being ingested by your nodes, to make sure that matching sets of files end up on the same node for merging.
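To make the node-local behavior concrete, here is a minimal Python sketch that models it under simplifying assumptions. The function names, the `(flowfile_id, node, cle)` tuple shape, and `min_entries=2` (standing in for a MergeContent "Minimum Number of Entries" of 2 with "cle" as the correlation attribute) are all hypothetical. Real bins can also be flushed by other settings such as Max Bin Age, which this sketch ignores.

```python
from collections import defaultdict


def bin_per_node(flowfiles):
    """Model per-node binning: each node keeps its own bins keyed by 'cle'.

    flowfiles: iterable of (flowfile_id, node, cle) tuples (hypothetical shape).
    Returns {node: {cle: [flowfile_id, ...]}}.
    """
    bins = defaultdict(lambda: defaultdict(list))
    for ff_id, node, cle in flowfiles:
        # A FlowFile can only join a bin on the node that holds it.
        bins[node][cle].append(ff_id)
    return bins


def complete_bins(bins, min_entries=2):
    """Return (node, cle, members) for every bin with enough entries to merge."""
    ready = []
    for node, by_key in bins.items():
        for cle, members in by_key.items():
            if len(members) >= min_entries:
                ready.append((node, cle, members))
    return ready


# Same 'cle' value, but the two FlowFiles sit on different nodes:
split = bin_per_node([("ff-a", "node1", "key42"), ("ff-b", "node2", "key42")])
print(complete_bins(split))  # [] -> neither node's bin ever reaches two entries

# Same 'cle' value on the same node:
together = bin_per_node([("ff-a", "node1", "key42"), ("ff-b", "node1", "key42")])
print(complete_bins(together))  # [('node1', 'key42', ['ff-a', 'ff-b'])]
```

The key point the model captures is that the correlation key is only compared within one node's bins; two FlowFiles with identical "cle" values on different nodes are never considered together.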

Thanks,

Matt

Created 03-14-2017 06:25 PM

Thank you... Sometimes the most important piece of information is in the fine details. Another giveaway that the flow was clustered was that both FlowFiles in that queue had the same position, "1". Two FlowFiles in the same queue on the same node cannot occupy the same position.
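The position check described above can be expressed as a small Python sketch. The row format (a dict with a `"position"` key) is a hypothetical stand-in for a cluster-wide queue listing, not a NiFi API.

```python
from collections import Counter


def listing_spans_multiple_nodes(queue_listing):
    """Return True if any queue position appears more than once.

    Positions are unique within one node's queue, so a repeated position in a
    combined (cluster-wide) listing means the rows came from different nodes.
    A False result does not prove the flow is standalone.
    """
    counts = Counter(row["position"] for row in queue_listing)
    return any(n > 1 for n in counts.values())


# Hypothetical rows: two FlowFiles both reported at position 1.
rows = [{"position": 1}, {"position": 1}]
print(listing_spans_multiple_nodes(rows))  # True
```

The check is one-directional: a duplicate position is sufficient evidence of more than one node, but unique positions can still come from a cluster.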

Created on 03-15-2017 08:36 AM - edited 08-18-2019 05:50 AM

@Matt Clarke This is an excellent answer, thank you very much. I am indeed using a cluster of NiFi nodes, and my dataflow starts with the List/Fetch pattern described in @Pierre Villard's answer to this question: https://community.hortonworks.com/questions/52112/nifi-load-distribution-in-getfile-processor.html

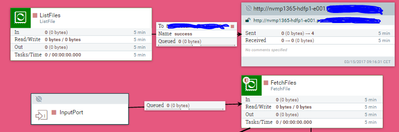

So the beginning of my dataflow looks like this :

I am using the List/Fetch pattern to take advantage of the cluster and improve ingestion performance.
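A simplified Python model of why this pattern can separate a pair: the listing step emits one small FlowFile per file, and distribution across the cluster pays no attention to "cle". The sketch assumes a strict round robin, which is not exactly how Site-to-Site balances load, and the file names and keys are hypothetical.

```python
from itertools import cycle


def round_robin(listings, nodes):
    """Assign each (filename, cle) listing entry to the next node in turn.

    Simplified stand-in for cluster distribution; note that 'cle' is never consulted.
    """
    return {name: (node, cle) for (name, cle), node in zip(listings, cycle(nodes))}


def split_keys(placement):
    """Return the sorted 'cle' values whose entries ended up on more than one node."""
    nodes_by_key = {}
    for node, cle in placement.values():
        nodes_by_key.setdefault(cle, set()).add(node)
    return sorted(k for k, nodes in nodes_by_key.items() if len(nodes) > 1)


listings = [("a1.csv", "k1"), ("a2.csv", "k1"), ("b1.csv", "k2"), ("b2.csv", "k2")]
placement = round_robin(listings, ["node1", "node2"])
print(split_keys(placement))  # ['k1', 'k2']: both pairs straddle two nodes
```

Whatever the exact balancing algorithm, the outcome is the same in kind: because the key is not part of the routing decision, nothing guarantees that the two halves of a pair land on the same node.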

This leads me to a follow-up question, which is probably beyond the scope of the original question and should perhaps be asked in a separate post, but I am putting it here so that anyone in the same situation can benefit from your great answers: does this mean that I can't use the MergeContent processor in this kind of dataflow (dataflows that run on all nodes), since I have no way to control which node ingests a pair of matching FlowFiles (FlowFiles with the same "cle" attribute)? Or can you think of a trick to handle this?

Thanks again for your help!

Created on 03-17-2017 03:03 PM - edited 08-18-2019 05:50 AM

@Mohammed El Moumni Here is one possible dataflow design that can be used to make sure both FlowFiles in a pair end up on the same node after being distributed via the Remote Process Group (RPG):

While it requires adding five additional processors to your flow, the overhead is relatively light, since you are dealing with very small FlowFiles all the way up to the FetchFile processor. You are still only fetching the ~700 MB content after cluster distribution.
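The processors in that design are not spelled out in the text, so what follows is a hypothetical Python sketch of one way to get the same guarantee, not necessarily the exact design. Because the listing FlowFiles are tiny, they can be grouped by "cle" before distribution so that each pair crosses the cluster as a single unit, then unpacked into individual listings on the receiving node ahead of FetchFile. It assumes, as in the List/Fetch pattern, that listing happens on one node so both entries of a pair are available together; all names and keys are illustrative.

```python
from collections import defaultdict
from itertools import cycle


def bundle_by_key(listings):
    """Group small (filename, cle) listing entries into one bundle per 'cle' value."""
    bundles = defaultdict(list)
    for name, cle in listings:
        bundles[cle].append(name)
    return dict(bundles)


def distribute_bundles(bundles, nodes):
    """Send each whole bundle to a single node, then unpack it there.

    Returns {filename: (node, cle)}; every file in a bundle shares one node.
    """
    placement = {}
    for (cle, names), node in zip(sorted(bundles.items()), cycle(nodes)):
        for name in names:  # split back into one entry per file on the receiving node
            placement[name] = (node, cle)
    return placement


def pairs_colocated(placement):
    """True if all entries sharing a 'cle' value landed on the same node."""
    nodes_by_key = defaultdict(set)
    for node, cle in placement.values():
        nodes_by_key[cle].add(node)
    return all(len(nodes) == 1 for nodes in nodes_by_key.values())


listings = [("a1.csv", "k1"), ("a2.csv", "k1"), ("b1.csv", "k2"), ("b2.csv", "k2")]
placement = distribute_bundles(bundle_by_key(listings), ["node1", "node2"])
print(pairs_colocated(placement))  # True
```

Routing the whole bundle rather than individual entries is what makes co-location hold regardless of how bundles are spread across nodes. Choosing the node deterministically from a hash of "cle" would give the same property without bundling.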

Thanks,

Matt

Created 03-17-2017 04:46 PM

Great answer as usual! I just tested your suggestion and it works perfectly. Thank you so much!
