Cloudera Community: Support Questions

KUDU Couldn't send request to peer Status: Remote error: Service unavailable: UpdateConsensus request on kudu.consensus.ConsensusService from IP dropped due to backpressure. The service queue is full

Labels: Apache Kudu

Created on 05-23-2021 08:08 PM - edited 05-23-2021 08:52 PM


Kudu version: 1.9.0+cdh6.2.0

1. memory_limit_hard_bytes: 100G
2. memory.soft_limit_in_bytes: -1
3. memory.limit_in_bytes: -1
4. maintenance_manager_num_threads: 4
5. block_cache_capacity_mb: 2G
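
For reference, the flag names encode their units: memory_limit_hard_bytes takes a byte count and block_cache_capacity_mb takes mebibytes. If they are passed directly as gflags (e.g. --memory_limit_hard_bytes=...), they need plain integers rather than "100G" or "2G". The sketch below is a hypothetical helper, not part of Kudu, and it assumes the suffixes mean binary units (GiB, MiB).

```python
# Binary multipliers (assumption: "G" = GiB, "M" = MiB).
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def to_bytes(size: str) -> int:
    """Parse sizes such as '100G', '2G' or '512MB' into bytes."""
    s = size.strip().upper()
    if s.endswith("B"):
        s = s[:-1]  # accept both '512M' and '512MB'
    if s and s[-1] in UNITS:
        return int(float(s[:-1]) * UNITS[s[-1]])
    return int(s)  # already a plain byte count


def kudu_flag_values(hard_limit: str, block_cache: str) -> dict:
    """Hypothetical helper: express sizes in the units the flag names imply."""
    return {
        "memory_limit_hard_bytes": to_bytes(hard_limit),
        "block_cache_capacity_mb": to_bytes(block_cache) // UNITS["M"],
    }


print(kudu_flag_values("100G", "2G"))
# → {'memory_limit_hard_bytes': 107374182400, 'block_cache_capacity_mb': 2048}
```

The same helper gives 64424509440 and 512 for the 60G and 512MB values tried later in the thread.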

The cluster has 4 tablet servers, and three YARN NodeManagers are co-located on the same nodes as tablet servers.

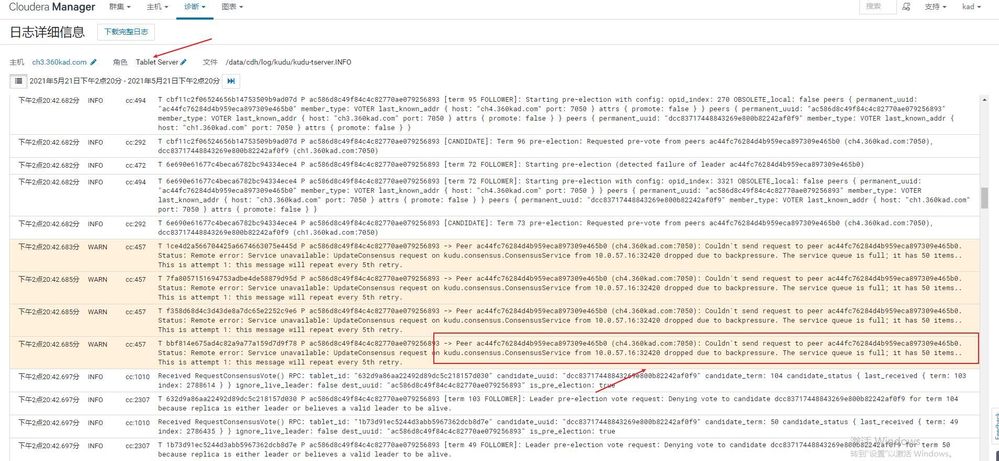

When I run an MR job on YARN (just a Hive SQL query), Kudu tablet servers exit at random with the following log:

T 5e5cdb8cf25d4c93aeaf013781419109 P ac586d8c49f84c4c82770ae079256893 -> Peer ac44fc76284d4b959eca897309e465b0 (ch4.360kad.com:7050): Couldn't send request to peer ac44fc76284d4b959eca897309e465b0. Status: Remote error: Service unavailable: UpdateConsensus request on kudu.consensus.ConsensusService from 10.0.57.16:26274 dropped due to backpressure. The service queue is full; it has 50 items.. This is attempt 1: this message will repeat every 5th retry.

I don't know how to solve this problem.
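
The warning is logged by the replica that is trying to send; the server under load is the peer named after "->" (ch4.360kad.com:7050 in the quoted line), which rejected the UpdateConsensus RPC because its service queue already held 50 items. To check whether one tablet server rejects far more often than the others, a minimal sketch like the one below could tally these warnings per destination peer. The regular expression is an assumption fitted to the single line quoted above; other Kudu versions may phrase the message differently.

```python
import re
from collections import Counter

# Assumed pattern, fitted to the one log line quoted in this thread.
BACKPRESSURE_RE = re.compile(
    r"-> Peer (?P<uuid>[0-9a-f]+) \((?P<host>[^)]+)\).*?"
    r"dropped due to backpressure\. The service queue is full; "
    r"it has (?P<items>\d+) items"
)


def tally_backpressure(lines):
    """Count backpressure rejections per destination peer (host:port)."""
    counts = Counter()
    for line in lines:
        match = BACKPRESSURE_RE.search(line)
        if match:
            counts[match.group("host")] += 1
    return counts
```

For example, tally_backpressure(open(path)).most_common(), where path points at a tablet server's WARNING log, lists peers by rejection count.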

Created 05-26-2021 07:36 PM


Hi!

Those warning messages about RPC requests dropped due to backpressure are a sign that the particular tablet server is likely overloaded. Consider the following remedies:

- Upgrade to a recent version of Kudu (1.14 as of now). Since Kudu 1.9.0 there have been many fixes that might help reduce memory pressure for write-intensive workloads (e.g. see KUDU-2727, KUDU-2929) and read-only workloads (KUDU-2836), along with a bunch of other improvements. BTW, if you are using CDH, upgrading to CDH 6.3.4 is a good first step in that direction: CDH 6.3.4 contains back-ported fixes for KUDU-2727, KUDU-2929, and KUDU-2836.

- Make sure the tablet replica distribution is even across tablet servers: run the 'kudu cluster rebalance' CLI tool.

- If you suspect replica hot-spotting, consider re-creating the table in question so that the write stream fans out across multiple tablets. The schema design guide might be useful here: https://kudu.apache.org/docs/schema_design.html

- If nothing from the above helps, consider adding a few more tablet server nodes to your cluster. Once the new nodes are added, don't forget to run the 'kudu cluster rebalance' CLI tool.
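
To illustrate why fanning the write stream out helps, here is a simplified model comparing range partitioning with hash partitioning for a table whose primary key grows monotonically. The workload, split points, and bucket count are hypothetical, and MD5 stands in for Kudu's own hash function, so this only shows the shape of the effect, not real tablet assignments.

```python
import bisect
import hashlib
from collections import Counter


def hash_bucket(key: int, num_buckets: int) -> int:
    # Stand-in hash (MD5 of the decimal key). Kudu uses its own internal
    # hash function, so real bucket assignments will differ.
    digest = hashlib.md5(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


def writes_per_tablet_hash(keys, num_buckets):
    """Count how many writes land in each hash bucket (tablet)."""
    return Counter(hash_bucket(k, num_buckets) for k in keys)


def writes_per_tablet_range(keys, split_points):
    """Count writes per range tablet: tablet i holds keys in
    [split_points[i-1], split_points[i]); the last tablet is unbounded."""
    return Counter(bisect.bisect_right(split_points, k) for k in keys)


# Hypothetical workload: the newest 10,000 rows of a table whose primary key
# grows monotonically (an auto-increment ID or a timestamp, for example).
recent_keys = range(1_000_000, 1_010_000)

print(writes_per_tablet_range(recent_keys, [250_000, 500_000, 750_000]))
# → Counter({3: 10000}): every write hits the same tablet
print(writes_per_tablet_hash(recent_keys, 4))
# roughly 2,500 writes per bucket
```

With range partitioning, all new keys fall past the last split point, so a single tablet (and the tablet servers hosting its replicas) takes every write; hashing the key spreads the same writes roughly evenly over the buckets.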

Kind regards,

Alexey

Created on 05-26-2021 10:58 PM - edited 05-26-2021 11:04 PM


Thanks for your reply. Unfortunately, upgrading is not an option at my company right now.

I have rebalanced my tablet servers and changed the configuration: maintenance_manager_num_threads to 8, block_cache_capacity_mb to 512MB, and memory_limit_hard_bytes to 60G.

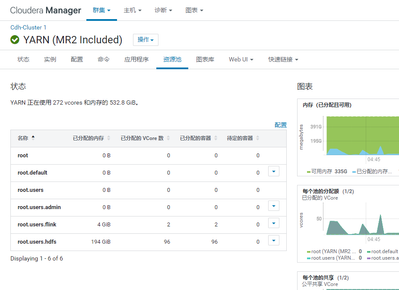

Then I ran an MR job on YARN with 96 map tasks using 194G of memory, and the Kudu servers stayed stable. I kept running a few more jobs on YARN to observe Kudu, and the Kudu servers were still stable, so I assumed the problem was fixed and set up scheduled jobs.
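
Since three NodeManagers share nodes with tablet servers, it may help to budget memory per co-located node: the tablet server's hard limit plus that node's share of YARN container memory plus OS overhead should fit in physical RAM. The sketch below is a back-of-the-envelope check under stated assumptions: containers are spread evenly across NodeManagers, and the tablet server is budgeted at its full hard limit. The 60G limit, 194G of YARN memory, and 3 NodeManagers come from this thread; the physical RAM and OS reserve are hypothetical placeholders.

```python
def per_node_headroom_gb(physical_gb: float, kudu_hard_limit_gb: float,
                         yarn_total_gb: float, num_nodemanagers: int,
                         os_reserve_gb: float) -> float:
    """Estimate memory left on a node running both a Kudu tablet server and a
    YARN NodeManager. Assumes YARN containers are spread evenly across
    NodeManagers and budgets the tablet server at its full hard limit."""
    yarn_per_node_gb = yarn_total_gb / num_nodemanagers
    return physical_gb - kudu_hard_limit_gb - yarn_per_node_gb - os_reserve_gb


# 60G hard limit, 194G of YARN memory and 3 NodeManagers come from this thread;
# the 128G of physical RAM and 8G OS reserve are hypothetical placeholders.
headroom = per_node_headroom_gb(128, 60, 194, 3, 8)
print(f"headroom per co-located node: {headroom:.1f} GB")
```

A negative result means that, under these assumptions, Kudu and the YARN containers together could request more memory than the node has. YARN's per-node allocation is capped by yarn.nodemanager.resource.memory-mb, so a job with more map tasks does not necessarily use more memory at once; substituting the real RAM size and that setting gives a more meaningful check.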

But today, when a job ran with 179 map tasks, the Kudu servers exited at random again...

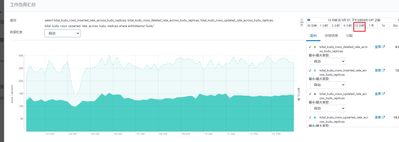

This is the memory detail of one of the tablet servers.