Cloudera Community > Support > Support Questions


Kerberos issue while setting up with Ambari 2.7.5

Created on 07-22-2021 07:23 AM - last edited on 07-22-2021 08:31 AM by ask_bill_brooks


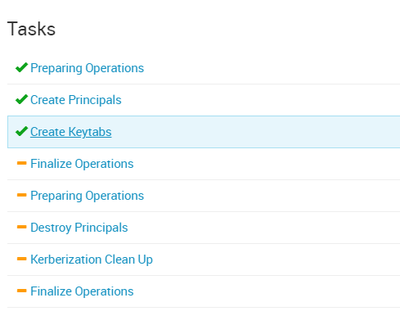

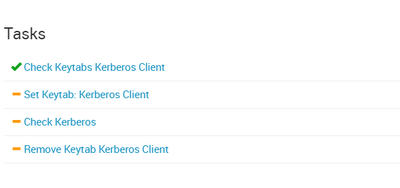

Failing at 38%

NAMENODE : AMBARI server


stderr: errors-440.txt

stdout: output-440.txt

2021-07-22 09:41:36,191 - Processing identities...

2021-07-22 09:41:36,222 - Creating keytab file for hdpcluster-072221@ on host myserver.com

2021-07-22 09:41:36,230 - Processing identities completed.

DATANODE : HDP CLUSTER


stdout: /var/lib/ambari-agent/data/output-438.txt

2021-07-22 09:41:34,474 - Missing keytabs:

Keytab: /etc/security/keytabs/kerberos.service_check.072221.keytab Principal: hdpcluster-072221

Command completed successfully!

This is the krb5.conf file placed on the Ambari server (NameNode) and on the HDP 3.1.5 cluster node (DataNode):

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

# output settings

[logging]

default = FILE:/tmp/krb5libs.log

kdc = FILE:/tmp/krb5kdc.log

admin_server = FILE:/tmp/kadmind.log

#Connection default configuration

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

default_realm = EXAMPLE.COM

default_ccache_name = KEYRING:persistent:%{uid}

udp_preference_limit= 1

[realms]

EXAMPLE.COM = {

kdc = myserver.com:88

admin_server = myserver.com

}

# domain to realm relationship (optional)

[domain_realm]

.example.com = EXAMPLE.COM

example.com = EXAMPLE.COM
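Before changing anything, it can help to print which realm and KDC clients will actually use with a given krb5.conf, so they can be compared against the real Kerberos server. A minimal shell sketch; the function name krb5_summary is hypothetical, and it assumes the simple one "key = value" per line layout shown above (includedir snippets and multiple kdc entries are not handled):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print default_realm plus the kdc/admin_server configured
# for that realm in a krb5.conf-style file. Assumes one "key = value" per line
# and ignores includedir snippets: a quick sanity check, not a full parser.
krb5_summary() {
  local conf="${1:-/etc/krb5.conf}"
  awk '
    function trim(x) { gsub(/^[ \t]+|[ \t]+$/, "", x); return x }
    # Section headers such as [libdefaults] or [realms]
    /^[ \t]*\[/ { s = trim($0); section = substr(s, 2, length(s) - 2); next }
    # Skip comments and lines without "=" (braces, includedir, blanks)
    /^[ \t]*#/ || index($0, "=") == 0 { next }
    {
      key = trim(substr($0, 1, index($0, "=") - 1))
      val = trim(substr($0, index($0, "=") + 1))
    }
    section == "libdefaults" && key == "default_realm" { realm = val }
    # "EXAMPLE.COM = {" opens a realm block
    section == "realms" && val == "{" { current = key; next }
    section == "realms" && (key == "kdc" || key == "admin_server") { cfg[current, key] = val }
    END {
      print "default_realm=" realm
      print "kdc=" cfg[realm, "kdc"]
      print "admin_server=" cfg[realm, "admin_server"]
    }' "$conf"
}
```

If the printed kdc does not match the output of hostname -f on the Kerberos server, the file still carries template values.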

Created 07-26-2021 01:24 AM


@ambari275

These are the steps to follow:

Assumptions:

- Logged in as root

- Cluster name: test

- Realm: DOMAIN.COM

- Hostname: host1

Switch to the hdfs user, the HDFS superuser:

[root@host1]# su - hdfs

Check the generated HDFS keytab:

[hdfs@host1 ~]$ cd /etc/security/keytabs/

[hdfs@host1 keytabs]$ ls

Sample output:

atlas.service.keytab hdfs.headless.keytab knox.service.keytab oozie.service.keytab

Now use hdfs.headless.keytab to get the associated principal:

[hdfs@host1 keytabs]$ klist -kt /etc/security/keytabs/hdfs.headless.keytab

Expected output:

Keytab name: FILE:/etc/security/keytabs/hdfs.headless.keytab

KVNO Timestamp Principal

---- ------------------- ------------------------------------------------------

1 07/26/2021 00:34:03 hdfs-test@DOMAIN.COM

1 07/26/2021 00:34:03 hdfs-test@DOMAIN.COM

1 07/26/2021 00:34:03 hdfs-test@DOMAIN.COM

1 07/26/2021 00:34:03 hdfs-test@DOMAIN.COM

1 07/26/2021 00:34:03 hdfs-test@DOMAIN.COM

Grab a Kerberos ticket by using the keytab and principal, like a username/password, to authenticate to the KDC:

[hdfs@host1 keytabs]$ kinit -kt /etc/security/keytabs/hdfs.headless.keytab hdfs-test@DOMAIN.COM

Check that you now have a valid Kerberos ticket:

[hdfs@host1 keytabs]$ klist

Sample output:

Ticket cache: FILE:/tmp/krb5cc_1013

Default principal: hdfs-test@DOMAIN.COM

Valid starting Expires Service principal

07/26/2021 10:03:17 07/27/2021 10:03:17 krbtgt/DOMAIN.COM@DOMAIN.COM

Now you can successfully list the HDFS directories. Remember the -ls; it seems you forgot it in your earlier command:

[hdfs@host1 keytabs]$ hdfs dfs -ls /

Found 9 items

drwxrwxrwx - yarn hadoop 0 2018-09-24 00:31 /app-logs

drwxr-xr-x - hdfs hdfs 0 2018-09-24 00:22 /apps

drwxr-xr-x - yarn hadoop 0 2018-09-24 00:12 /ats

drwxr-xr-x - hdfs hdfs 0 2018-09-24 00:12 /hdp

drwxr-xr-x - mapred hdfs 0 2018-09-24 00:12 /mapred

drwxrwxrwx - mapred hadoop 0 2018-09-24 00:12 /mr-history

drwxrwxrwx - spark hadoop 0 2021-07-26 10:04 /spark2-history

drwxrwxrwx - hdfs hdfs 0 2021-07-26 00:57 /tmp

drwxr-xr-x - hdfs hdfs 0 2018-09-24 00:23 /user

Voila! Happy hadooping, and remember to accept the best response so other users can reference it.
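The two checks in this walkthrough (a TGT in the ticket cache, then a working hdfs dfs -ls /) can be scripted. A minimal sketch that parses klist output; the function names ccache_principal and has_tgt are hypothetical, and the MIT Kerberos output format shown above is assumed:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for verifying a Kerberos login.
# Both read `klist` output on stdin (MIT Kerberos format assumed).

# Print the principal the ticket cache belongs to.
ccache_principal() {
  sed -n 's/^Default principal:[[:space:]]*//p'
}

# Succeed if the cache holds a TGT for the given realm (krbtgt/REALM@REALM).
has_tgt() {
  local realm="$1"
  grep -qF "krbtgt/${realm}@${realm}"
}

# Example on a cluster node (not run here):
#   klist | has_tgt DOMAIN.COM && hdfs dfs -ls /
```

klist -s also exits non-zero when there is no valid ticket cache. On a kerberized cluster, hdfs getconf -confKey hadoop.security.authentication is expected to print kerberos rather than simple, and hdfs dfs -ls / should fail without a ticket.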

Created 07-22-2021 12:38 PM


From the outset, I see you left the defaults (EXAMPLE.COM), and I doubt that they really map to your cluster.

Here is a list of outputs I need to validate:

- $ hostname -f (on the host where you installed the Kerberos server)

- /etc/hosts

- /var/kerberos/krb5kdc/kadm5.acl

- /var/kerberos/krb5kdc/kdc.conf

On the Kerberos server, can you run:

# kadmin.local

Then:

list_principals

and q to quit.
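For a large realm it may be easier to take the listing non-interactively and count principals per instance (the part after the slash, which for service principals is the host FQDN), so a host that is missing its service principals stands out. A minimal sketch, assuming the listing comes from kadmin.local -q "listprincs" (listprincs is an alias of list_principals); the function name principals_per_instance is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a principal listing on stdin and print
# "<instance> <count>" for every principal of the form primary/instance@REALM.
# Lines containing whitespace (e.g. the "Authenticating as principal ..."
# banner) are skipped; principals without a slash are ignored.
principals_per_instance() {
  awk -F'@' '!/[ \t]/ && index($1, "/") {
      split($1, part, "/")
      count[part[2]]++
    }
    END { for (inst in count) print inst, count[inst] }' | sort
}

# Example on the KDC host (not run here):
#   kadmin.local -q "listprincs" | principals_per_instance
```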

The output of hostname -f on the Kerberos server should be used for the kdc and admin_server values in krb5.conf.
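As an illustration of that substitution, a small shell function can print the [realms] and [domain_realm] stanzas for a given realm and KDC FQDN. This is a sketch: the function name render_realm_stanza is hypothetical, and it assumes the DNS domain is simply the lowercase form of the realm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print [realms] and [domain_realm] stanzas for krb5.conf,
# using the KDC host's FQDN (the output of `hostname -f` on the Kerberos server)
# for both kdc and admin_server. Assumes the DNS domain is the lowercase realm.
render_realm_stanza() {
  local realm="$1" kdc_fqdn="$2"
  local domain
  domain=$(printf '%s' "$realm" | tr '[:upper:]' '[:lower:]')
  cat <<EOF
[realms]
 ${realm} = {
  kdc = ${kdc_fqdn}
  admin_server = ${kdc_fqdn}
 }

[domain_realm]
 .${domain} = ${realm}
 ${domain} = ${realm}
EOF
}
```

On the KDC host one might run render_realm_stanza HOTEL.COM "$(hostname -f)" and copy the result into /etc/krb5.conf on every node, keeping the existing [libdefaults] section.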

Here is an example:

OS: CentOS 7

Cluster realm: HOTEL.COM

My hosts entry is for a Class C network, so yours could be different, but your hostname must be resolvable by DNS.

[root@test ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.153 test.hotel.com test

[root@test ~]# hostname -f

test.hotel.com

[root@test ~]# cat /var/kerberos/krb5kdc/kadm5.acl

*/admin@HOTEL.COM *

[root@test ~]# cat /etc/krb5.conf

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

default_realm = HOTEL.COM

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

HOTEL.COM = {

kdc = test.hotel.com

admin_server =test.hotel.com

}

[domain_realm]

.hotel.com = HOTEL.COM

hotel.com = HOTEL.COM

[root@test ~]# cat /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

HOTEL.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

[realms]

HOTEL.COM = {

master_key_type = des-cbc-crc

database_name = /var/kerberos/krb5kdc/principal

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = des-cbc-crc:normal des3-cbc-raw:normal des3-cbc-sha1:normal des-cbc-crc:v4 des-cbc-crc:afs3

kadmind_port = 749

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/dict/words

}

Once you share the above, I can figure out where the issue could be.

Happy hadooping

Created on 07-22-2021 11:27 PM - edited 07-23-2021 08:34 AM


(I set up Kerberos on a different server, not on the NameNode or DataNode.)

Is this good practice?

After changing krb5.conf and kdc.conf, I tried to enable Kerberos in the Ambari GUI by providing the admin credentials, and it got stuck at the same place.

Logs from ambari-server:

2021-07-23 02:15:18,218 WARN [ambari-action-scheduler] ActionScheduler:353 - Exception received

org.apache.ambari.server.AmbariException: Could not inject keytab into command

at org.apache.ambari.server.events.publishers.AgentCommandsPublisher.populateExecutionCommandsClusters(AgentCommandsPublisher.java:134)

at org.apache.ambari.server.events.publishers.AgentCommandsPublisher.sendAgentCommand(AgentCommandsPublisher.java:92)

at org.apache.ambari.server.actionmanager.ActionScheduler.doWork(ActionScheduler.java:557)

at org.apache.ambari.server.actionmanager.ActionScheduler.run(ActionScheduler.java:347)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.ambari.server.AmbariException: Could not inject keytabs to enable kerberos

at org.apache.ambari.server.events.publishers.AgentCommandsPublisher$KerberosCommandParameterProcessor.process(AgentCommandsPublisher.java:261)

at org.apache.ambari.server.events.publishers.AgentCommandsPublisher.injectKeytab(AgentCommandsPublisher.java:184)

at org.apache.ambari.server.events.publishers.AgentCommandsPublisher.populateExecutionCommandsClusters(AgentCommandsPublisher.java:132)

... 4 more

2021-07-23 02:15:31,409 WARN [ambari-client-thread-194] Errors:173 - The following warnings have been detected with resource and/or provider classes:

WARNING: A HTTP GET method, public javax.ws.rs.core.Response org.apache.ambari.server.api.services.TaskService.getComponents(java.lang.String,javax.ws.rs.core.HttpHeaders,javax.ws.rs.core.UriInfo), should not consume any entity.

WARNING: A HTTP GET method, public javax.ws.rs.core.Response org.apache.ambari.server.api.services.TaskService.getTask(java.lang.String,javax.ws.rs.core.HttpHeaders,javax.ws.rs.core.UriInfo,java.lang.String), should not consume any entity.

2021-07-23 02:15:31,409 WARN [ambari-client-thread-194] Errors:173 - The following warnings have been detected with resource and/or provider classes:

WARNING: A HTTP GET method, public javax.ws.rs.core.Response org.apache.ambari.server.api.services.TaskService.getComponents(java.lang.String,javax.ws.rs.core.HttpHeaders,javax.ws.rs.core.UriInfo), should not consume any entity.

WARNING: A HTTP GET method, public javax.ws.rs.core.Response org.apache.ambari.server.api.services.TaskService.getTask(java.lang.String,javax.ws.rs.core.HttpHeaders,javax.ws.rs.core.UriInfo,java.lang.String), should not consume any entity.

2021-07-23 02:15:34,388 INFO [Thread-20] AbstractPoolBackedDataSource:212 - Initializing c3p0 pool... com.mchange.v2.c3p0.ComboPooledDataSource [ acquireIncrement -> 3, acquireRetryAttempts -> 30, acquireRetryDelay -> 1000, autoCommitOnClose -> false, automaticTestTable -> null, breakAfterAcquireFailure -> false, checkoutTimeout -> 0, connectionCustomizerClassName -> null, connectionTesterClassName -> com.mchange.v2.c3p0.impl.DefaultConnectionTester, contextClassLoaderSource -> caller, dataSourceName -> 2wkjnfai1m9lw9qg7p0r4|1f15d346, debugUnreturnedConnectionStackTraces -> false, description -> null, driverClass -> org.postgresql.Driver, extensions -> {}, factoryClassLocation -> null, forceIgnoreUnresolvedTransactions -> false, forceSynchronousCheckins -> false, forceUseNamedDriverClass -> false, identityToken -> 2wkjnfai1m9lw9qg7p0r4|1f15d346, idleConnectionTestPeriod -> 50, initialPoolSize -> 3, jdbcUrl -> jdbc:postgresql://localhost/ambari, maxAdministrativeTaskTime -> 0, maxConnectionAge -> 0, maxIdleTime -> 0, maxIdleTimeExcessConnections -> 0, maxPoolSize -> 5, maxStatements -> 0, maxStatementsPerConnection -> 120, minPoolSize -> 1, numHelperThreads -> 3, preferredTestQuery -> select 0, privilegeSpawnedThreads -> false, properties -> {user=******, password=******}, propertyCycle -> 0, statementCacheNumDeferredCloseThreads -> 0, testConnectionOnCheckin -> true, testConnectionOnCheckout -> false, unreturnedConnectionTimeout -> 0, userOverrides -> {}, usesTraditionalReflectiveProxies -> false ]

2021-07-23 02:15:34,452 INFO [Thread-20] JobStoreTX:866 - Freed 0 triggers from 'acquired' / 'blocked' state.

2021-07-23 02:15:34,462 INFO [Thread-20] JobStoreTX:876 - Recovering 0 jobs that were in-progress at the time of the last shut-down.

2021-07-23 02:15:34,462 INFO [Thread-20] JobStoreTX:889 - Recovery complete.

2021-07-23 02:15:34,463 INFO [Thread-20] JobStoreTX:896 - Removed 0 'complete' triggers.

2021-07-23 02:15:34,464 INFO [Thread-20] JobStoreTX:901 - Removed 0 stale fired job entries.

2021-07-23 02:15:34,465 INFO [Thread-20] QuartzScheduler:547 - Scheduler ExecutionScheduler_$_NON_CLUSTERED started.

NAMENODE :

DATANODE:

kadmin.local: list_principals

HTTP/datanode.example.com@EXAMPLE.COM

HTTP/namenode.example.com@EXAMPLE.COM

K/M@EXAMPLE.COM

activity_analyzer/datanode.example.com@EXAMPLE.COM

activity_explorer/datanode.example.com@EXAMPLE.COM

admin/admin@EXAMPLE.COM

ambari-qa-hdpcluster@EXAMPLE.COM

ambari-server-hdpcluster@EXAMPLE.COM

amshbase/datanode.example.com@EXAMPLE.COM

amsmon/datanode.example.com@EXAMPLE.COM

amszk/datanode.example.com@EXAMPLE.COM

atlas/datanode.example.com@EXAMPLE.COM

dn/datanode.example.com@EXAMPLE.COM

hbase-hdpcluster@EXAMPLE.COM

hbase/datanode.example.com@EXAMPLE.COM

hdfs-hdpcluster@EXAMPLE.COM

hdpcluster-072221@EXAMPLE.COM

hdpcluster-072321@EXAMPLE.COM

hive/datanode.example.com@EXAMPLE.COM

infra-solr/datanode.example.com@EXAMPLE.COM

jhs/datanode.example.com@EXAMPLE.COM

kadmin/admin@EXAMPLE.COM

kadmin/changepw@EXAMPLE.COM

kadmin/np-devops-inventory.example.com@EXAMPLE.COM

kafka/datanode.example.com@EXAMPLE.COM

kiprop/np-devops-inventory.example.com@EXAMPLE.COM

krbtgt/EXAMPLE.COM@EXAMPLE.COM

nm/datanode.example.com@EXAMPLE.COM

nn/datanode.example.com@EXAMPLE.COM

rm/datanode.example.com@EXAMPLE.COM

root/admin@EXAMPLE.COM

spark-hdpcluster@EXAMPLE.COM

spark/datanode.example.com@EXAMPLE.COM

spark_atlas@EXAMPLE.COM

yarn-ats-hbase/datanode.example.com@EXAMPLE.COM

yarn-ats-hdpcluster@EXAMPLE.COM

yarn/datanode.example.com@EXAMPLE.COM

zookeeper/datanode.example.com@EXAMPLE.COM

Created 07-23-2021 10:57 AM


You can set up the Kerberos server anywhere on the network, provided it can be reached by the hosts in your cluster. I suspect something is wrong with your Ambari server. Can you share your /var/log/ambari-server/ambari-server.log?

I asked for a couple of files, but you only shared krb5.conf. I will need the rest of the files to understand and determine what the issue could be.

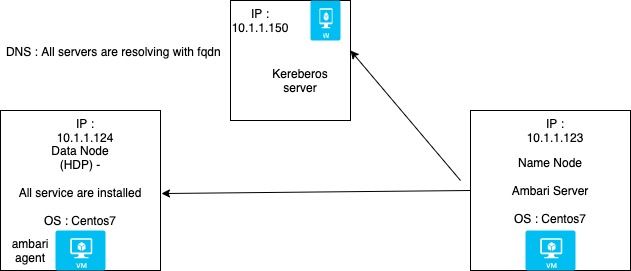

Can you describe your setup? Number of nodes, network, OS, etc.

Created on 07-23-2021 11:45 AM - edited 07-23-2021 11:47 AM


vim /etc/hosts

#

x.x.x.x localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

x.x.x.x FQDN server

x.x.x.x ESXI-host1

x.x.x.x ESXI-host2

x.x.x.x ESXI-host3

vim /var/kerberos/krb5kdc/kadm5.acl

*/admin@DOMAIN.COM *

vim /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

DOMAIN.COM= {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

[realms]

DOMAIN.COM= {

master_key_type = des-cbc-crc

database_name = /var/kerberos/krb5kdc/principal

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = des-cbc-crc:normal des3-cbc-raw:normal des3-cbc-sha1:normal des-cbc-crc:v4 des-cbc-crc:afs3

kadmind_port = 749

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/dict/words

list_principals:

[root@KERBEROSSERVER~]# kadmin.local

Authenticating as principal root/admin@DOMAIN.COM with password.

kadmin.local: list_principals

HTTP/datanodeFQDN@DOMAIN.COM

HTTP/np-dev1-hdp315-namenode-01.DOMAIN.COM@DOMAIN.COM

K/M@DOMAIN.COM

activity_analyzer/datanodeFQDN@DOMAIN.COM

activity_explorer/datanodeFQDN@DOMAIN.COM

admin/admin@DOMAIN.COM

ambari-qa-hdpcluster@DOMAIN.COM

ambari-server-hdpcluster@DOMAIN.COM

amshbase/datanodeFQDN@DOMAIN.COM

amsmon/datanodeFQDN@DOMAIN.COM

amszk/datanodeFQDN@DOMAIN.COM

atlas/datanodeFQDN@DOMAIN.COM

dn/datanodeFQDN@DOMAIN.COM

hbase-hdpcluster@DOMAIN.COM

hbase/datanodeFQDN@DOMAIN.COM

hdfs-hdpcluster@DOMAIN.COM

hdpcluster-072221@DOMAIN.COM

hdpcluster-072321@DOMAIN.COM

hive/datanodeFQDN@DOMAIN.COM

infra-solr/datanodeFQDN@DOMAIN.COM

jhs/datanodeFQDN@DOMAIN.COM

kadmin/admin@DOMAIN.COM

kadmin/changepw@DOMAIN.COM

kadmin/KERBEROSSERVERDOMAIN.COM@DOMAIN.COM

kafka/datanodeFQDN@DOMAIN.COM

kiprop/KERBEROSSERVERDOMAIN.COM@DOMAIN.COM

krbtgt/DOMAIN.COM@DOMAIN.COM

nm/datanodeFQDN@DOMAIN.COM

nn/datanodeFQDN@DOMAIN.COM

rm/datanodeFQDN@DOMAIN.COM

root/admin@DOMAIN.COM

spark-hdpcluster@DOMAIN.COM

spark/datanodeFQDN@DOMAIN.COM

spark_atlas@DOMAIN.COM

yarn-ats-hbase/datanodeFQDN@DOMAIN.COM

yarn-ats-hdpcluster@DOMAIN.COM

yarn/datanodeFQDN@DOMAIN.COM

zeppelin-hdpcluster@DOMAIN.COM

zookeeper/datanodeFQDN@DOMAIN.COM

kadmin.local:

AMBARI LOGS:

2021-07-23 12:43:42,666 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Pastebin.com

2021-07-23 12:43:50,595 WARN [main] Errors:173 - The following warnings hav - Pastebin.com

Created 07-25-2021 02:15 PM


@ambari275

I have gone through the logs, and here are my observations:

Error:

WARNING: A HTTP GET method, public javax.ws.rs.core.Response org.apache.ambari.server.api.services.ExtensionsService.getExtensionVersions(java.lang.String,javax.ws.rs.core.HttpHeaders,javax.ws.rs.core.UriInfo,java.lang.String), should not consume any entity.

Solution:

To fix the issue:

# cat /etc/ambari-server/conf/ambari.properties | grep client.threadpool.size.max

client.threadpool.size.max=25

The client.threadpool.size.max property sets the number of parallel threads servicing client requests. To find the number of cores on the server, run the Linux command nproc:

# nproc

25

1) Edit the /etc/ambari-server/conf/ambari.properties file and change the default value of client.threadpool.size.max to the number of cores on your machine.

client.threadpool.size.max=25

2) Restart ambari-server

# ambari-server restart

Error:

2021-07-23 12:43:42,673 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-3.0 identified by https://archive.cloudera.com/p/HDP/centos7/3.x/3.0.1.0/HDP-3.0.1.0-187.xml.

Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/centos7/3.x/3.0.1.0/HDP-3.0.1.0-187.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/centos7/3.x/3.0.1.0/HDP-3.0.1.0-187.xml

Reason:

401 means "Unauthorized", so something is wrong with your credentials; this is purely an authorization issue. It seems your access to the HDP repos is the problem.
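To confirm the 401 independently of Ambari, one can request the same version definition URL with curl and look only at the status code. A sketch; the function names repo_http_status and status_hint are hypothetical, and the user:password argument stands for whatever repository credentials you were issued:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch only the HTTP status code for a repo URL,
# optionally with credentials, so a 401 can be reproduced outside Ambari.
repo_http_status() {
  local url="$1" creds="$2"   # creds as "user:password"; may be empty
  if [ -n "$creds" ]; then
    curl -s -o /dev/null -w '%{http_code}' -u "$creds" "$url"
  else
    curl -s -o /dev/null -w '%{http_code}' "$url"
  fi
}

# Hypothetical helper: map a status code to a short hint.
status_hint() {
  case "$1" in
    200) echo "OK: URL reachable with these credentials" ;;
    401) echo "Unauthorized: missing or wrong repository credentials" ;;
    403) echo "Forbidden: server refused access to this path" ;;
    404) echo "Not found: check the URL/version" ;;
    *)   echo "Unexpected status: $1" ;;
  esac
}
```

For example: status_hint "$(repo_http_status https://archive.cloudera.com/p/HDP/centos7/3.x/3.0.1.0/HDP-3.0.1.0-187.xml "user:password")". A 401 even with credentials supplied points at the credentials themselves; curl reports 000 when the host cannot be reached at all.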

Your krb5.conf should look something like this:

# cat /etc/krb5.conf

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

default_realm = DOMAIN.COM

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

DOMAIN.COM = {

kdc = [FQDN 10.1.1.150]

admin_server =[FQDN 10.1.1.150]

}

[domain_realm]

.domain.com = DOMAIN.COM

domain.com = DOMAIN.COM

Your /etc/hosts: I think I remember once having issues with hostnames containing "-", so try using host1 for ESXI-host1, and so on. Also, please don't comment out the IPv6 entry, as that can cause network connectivity issues; remove the # from the ::1 line:

x.x.x.x localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

x.x.x.x FQDN server

x.x.x.x host1

x.x.x.x host2

x.x.x.x host3
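To check that each node's own FQDN is actually listed, a small awk-based check can scan an /etc/hosts-style file. A sketch; the function name hosts_has_name is hypothetical, and because it only reads the file, getent hosts "$(hostname -f)" remains the better test of what the resolver (files plus DNS) really returns:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if an /etc/hosts-style file maps the given name
# (as the canonical hostname or an alias) on a non-comment line.
hosts_has_name() {
  local file="$1" name="$2"
  awk -v n="$name" '
    /^[ \t]*#/ { next }                 # skip commented-out entries
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break            # rest of the line is a comment
        if ($i == n) found = 1
      }
    }
    END { exit (found ? 0 : 1) }' "$file"
}

# Example (not run here):
#   hosts_has_name /etc/hosts "$(hostname -f)" || echo "FQDN missing from /etc/hosts"
```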

Kerberos service uses DNS to resolve hostnames. Therefore, DNS must be enabled on all hosts. With DNS, the principal must contain the fully qualified domain name (FQDN) of each host. For example, if the hostname is host1, the DNS domain name is domain.com, and the realm name is DOMAIN.COM, then the principal name for the host would be host/host1.domain.com@DOMAIN.COM. The examples in this guide require that DNS is configured and that the FQDN is used for each host.
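The naming rule in the paragraph above can be expressed as a one-line function. A sketch with a hypothetical name (service_principal); it simply joins the pieces, so the host and domain must already be correct:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build service/FQDN@REALM from a service name,
# short hostname, DNS domain, and realm, following the rule described above.
service_principal() {
  local service="$1" host="$2" domain="$3" realm="$4"
  printf '%s/%s.%s@%s\n' "$service" "$host" "$domain" "$realm"
}

# service_principal host host1 domain.com DOMAIN.COM
#   -> host/host1.domain.com@DOMAIN.COM
```

On a real node the FQDN part can come straight from hostname -f, for example printf 'host/%s@%s\n' "$(hostname -f)" DOMAIN.COM.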

Also, ensure the Ambari agent is installed on all hosts, including the Ambari server host. On every host, make sure the hostname in /etc/ambari-agent/conf/ambari-agent.ini points to the Ambari server:

[server]

hostname=<FQDN_of_Ambari_server>

url_port=8440

secured_url_port=8441

connect_retry_delay=10

max_reconnect_retry_delay=30

Please report back.
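Editing ambari-agent.ini by hand on many hosts is error-prone, so the [server] hostname change can be scripted. A sketch; the function name set_agent_server_hostname is hypothetical, and /etc/ambari-agent/conf/ambari-agent.ini is the usual location on Ambari-managed hosts:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set hostname= in the [server] section of an
# ambari-agent.ini-style file. Writes to a temp file, then copies it back over
# the original (keeping its permissions); keys in other sections are untouched.
set_agent_server_hostname() {
  local ini="$1" fqdn="$2" tmp
  tmp=$(mktemp) || return 1
  awk -v fqdn="$fqdn" '
    # Track whether we are inside the [server] section
    /^[ \t]*\[/ { in_server = ($0 ~ /^[ \t]*\[server\]/) }
    in_server && /^[ \t]*hostname[ \t]*=/ { print "hostname=" fqdn; next }
    { print }' "$ini" > "$tmp" && cat "$tmp" > "$ini"
  rm -f "$tmp"
}

# Example (not run here):
#   set_agent_server_hostname /etc/ambari-agent/conf/ambari-agent.ini ambari.domain.com
```

After updating a host, restart its agent with ambari-agent restart so it reconnects to the server named there.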

Created 07-26-2021 12:34 AM


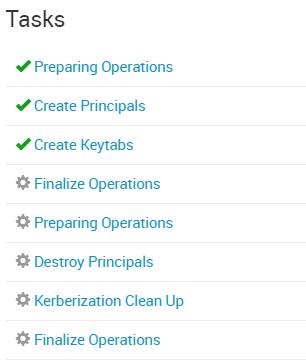

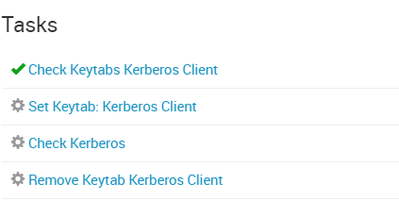

Hello Shelton,

Thanks a lot, I followed all the steps and was able to enable Kerberos in the Ambari GUI, but I want to know how to check that it's enabled. I tried to run a few commands on the DataNode (where the Hadoop services are installed), and this is the error I got:

hdfs dfs ls /

Please help me verify that the cluster is kerberized, and show me how to generate tickets and see the authentication. Thanks a lot, Shelton. @Shelton

Created 03-24-2023 06:53 AM


@ambari275

These are the steps to follow, assuming you are logged in as root:

# su - hdfs

$ klist -kt /etc/security/keytabs/hdfs.headless.keytab

The output gives you the principal to use:

$ kinit -kt /etc/security/keytabs/hdfs.headless.keytab <principal>
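Since the keytab lists the same principal once per encryption type, the principal can be extracted from klist -kt instead of copied by hand. A sketch; the function name keytab_principals is hypothetical, and the usual MIT klist -kt column layout (KVNO, date, time, principal) is assumed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `klist -kt` output on stdin and print each distinct
# principal once. Assumes the MIT layout "KVNO DATE TIME PRINCIPAL"; the keytab
# holds one entry per encryption type, hence the repeated lines.
keytab_principals() {
  awk '$1 ~ /^[0-9]+$/ && NF >= 4 && !seen[$4]++ { print $4 }'
}

# Example, as the hdfs user (not run here):
#   keytab=/etc/security/keytabs/hdfs.headless.keytab
#   principal=$(klist -kt "$keytab" | keytab_principals | head -n 1)
#   kinit -kt "$keytab" "$principal"
```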


Created 07-26-2021 01:34 AM


Thanks a lot @Shelton, it worked and I'm able to see the expected results.