Looking for feedback on NiFi Cluster setup

- Labels: Apache NiFi

Created on 02-27-2017 09:33 PM - edited 08-19-2019 02:45 AM

Hi All,

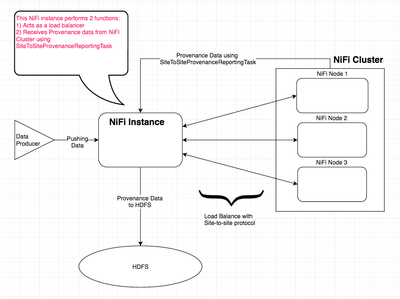

We're setting up a NiFi cluster with two additional requirements: 1) capture provenance data, and 2) load balance the incoming data that is pushed to NiFi. We're envisioning the architecture shown in the diagram, in which a separate NiFi instance receives the data pushed by the data producer and load balances it across the cluster. The same instance would also archive provenance data from the cluster to HDFS.

I would appreciate your feedback on our design. Do you see any flaws? Would this work? Any suggestions for better ways of achieving this are welcome.

Thanks.

Created 02-28-2017 03:03 PM

What protocol is the data producer using to push data to the first NiFi instance?

Created 02-28-2017 03:21 PM

It's TCP/IP, and the data is in HL7 format (a format used in the healthcare industry).

Created 02-28-2017 03:35 PM

OK, I'm going to assume ListenTCP is the entry point then; let me know if that is not true.

My thought is to reverse this a little bit, because right now if your first NiFi instance goes down then your data producer has nowhere to send the data.

Data Producer -> Load Balancer (nginx supports TCP) -> NiFi Cluster with each node having ListenTCP.

Then have this cluster push the provenance data to a standalone NiFi instance that just puts it into HDFS. This way the second NiFi instance is not in the critical path of the real data and is only responsible for the provenance data. Depending on how important the provenance data is to you, you could make this a two-node cluster to ensure at least a minimum amount of failover.
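
To illustrate why putting a load balancer in front of the cluster removes the single point of failure, here is a minimal Python sketch of round-robin selection that skips nodes marked as down. It is not how nginx or any real load balancer is implemented; the class name `RoundRobinBalancer`, the node addresses, and port 9000 are all hypothetical and only stand in for cluster nodes running ListenTCP.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy round-robin selector over a fixed list of backends (hypothetical)."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = cycle(self.backends)

    def mark_down(self, backend):
        # A real load balancer would decide this via health checks.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Look at each backend at most once per call, skipping unhealthy ones.
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy NiFi nodes available")


# Hypothetical node addresses; each would run a ListenTCP processor.
balancer = RoundRobinBalancer(
    ["nifi-node-1:9000", "nifi-node-2:9000", "nifi-node-3:9000"]
)
balancer.mark_down("nifi-node-2:9000")
picks = [balancer.next_backend() for _ in range(4)]
print(picks)
```

With one node down, traffic keeps flowing to the remaining nodes, whereas in the original design a failure of the single front NiFi instance would leave the producer with nowhere to send.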

Created 02-28-2017 08:02 PM

Yes, we're using ListenTCP.

I agree with your recommendation.

Our data producer cannot send data to multiple IPs; it can send to only one. So we're exploring an external load balancer appliance that sits in front of the NiFi cluster, and I'm keeping this Site-to-Site design as a backup option. In our case some custom coding is needed on the data producer side to make load balancing work, so Site-to-Site is the fallback in case we have trouble making that work.

Created 02-28-2017 08:12 PM

Makes sense. I think HAProxy (http://www.haproxy.org/) is a free load balancer that supports TCP; your data producer can then just send to the HAProxy address.