Cloudera Community > Support > Support Questions

LookupRecord queue processor suddenly turns full

Labels: Apache NiFi

Created 03-01-2023 01:27 AM

Hello,

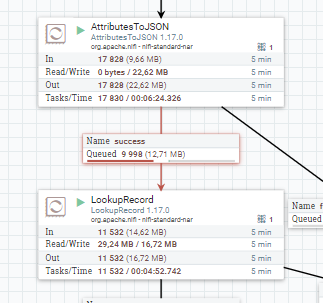

I have a NiFi flow that ingests log messages and sends them to Elasticsearch. Everything worked fine for a month, but then some issues suddenly appeared, even though neither the size nor the volume of the incoming log messages changed:

- I have a lookupRecord processor to enrich data and the queue become full for it so i guess this processor is the problem but it never had this behavior before

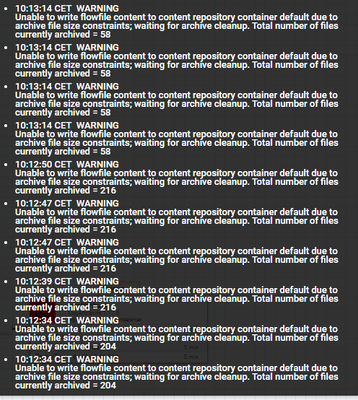

- Nifi tell me it's unable to write flowfile content to content repo due to archive file size constraint

- The input processor of the flow is a listenUDP and warn me that the internal queue is full
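The three symptoms are plausibly one chain: when a downstream queue stops draining, ListenUDP can no longer hand off what it receives, and its bounded internal message queue (sized, as far as we know, by its "Max Size of Message Queue" property) fills up. The toy model below is plain Python, not NiFi code; the queue size and messages are made up. It only shows why an undrained bounded buffer means UDP datagrams are lost rather than retried.

```python
import queue

def receive(messages, internal_queue):
    """Offer each datagram to a bounded queue; count the ones dropped because it is full."""
    dropped = 0
    for msg in messages:
        try:
            # UDP has no way to ask the sender to slow down, so a full buffer means loss.
            internal_queue.put_nowait(msg)
        except queue.Full:
            dropped += 1
    return dropped

# Nothing drains the queue here, simulating a blocked downstream connection.
q = queue.Queue(maxsize=5)
dropped = receive([f"log line {i}" for i in range(8)], q)
print(q.qsize(), dropped)  # 5 3
```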

I tried restarting everything, obviously, and also increased the JVM heap to -Xmx12g to rule out a lack of memory.

I read that the archive cleanup issue could be a NiFi bug, but I'm on 1.17, and I even tried upgrading to 1.20 with the same result.
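As we understand NiFi's content archiving, when `nifi.content.repository.archive.enabled` is true and the content repository's disk is above `nifi.content.repository.archive.max.usage.percentage` (50% by default), new content writes wait for archive cleanup, which matches the "archive file size constraint" message above. If live data or unrelated files alone keep the disk over that limit, cleanup cannot bring it back down. The sketch below approximates that check with the standard library; NiFi's own calculation may differ slightly, and the path is an assumption.

```python
import shutil
from typing import Tuple

def partition_usage(path: str, max_usage_pct: float = 50.0) -> Tuple[float, bool]:
    """Return (percent of the file system in use, whether it is at or over max_usage_pct).

    shutil.disk_usage reports the whole file system that holds `path`, so space used
    by anything else on that disk counts toward the percentage as well.
    """
    usage = shutil.disk_usage(path)
    used_pct = usage.used / usage.total * 100
    return used_pct, used_pct >= max_usage_pct

# "." stands in for the content repository directory (e.g. ./content_repository; path assumed).
used_pct, over_limit = partition_usage(".")
print(f"{used_pct:.1f}% used, at or over 50% limit: {over_limit}")
```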

Any idea what could cause these problems?

Thanks

Created 03-01-2023 04:13 AM

The first thing you should do is increase the size of the message queue; the default is quite low (10,000 records and 1 GB). You can also see this error if FlowFiles have been sitting in the queue for too long, or if the file system is also used by things outside NiFi. For best performance, NiFi's backing repositories (content and FlowFile) should live on dedicated disks that are larger than the flow's demand, especially during heavy, unexpected volume.
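To make the defaults above concrete: a NiFi connection applies back pressure as soon as either its object threshold or its size threshold is reached, so a queue can be "full" with far fewer than 10,000 items. The class below is a hypothetical illustration, not a NiFi API; it treats "1 GB" as 1024³ bytes, and the queue contents are invented.

```python
from dataclasses import dataclass

GIB = 1024 ** 3

@dataclass
class ConnectionState:
    """Hypothetical snapshot of one connection's queue (names are illustrative)."""
    queued_count: int
    queued_bytes: int
    object_threshold: int = 10_000  # default object threshold mentioned above
    size_threshold: int = 1 * GIB   # default size threshold mentioned above ("1 GB")

    def back_pressure_engaged(self) -> bool:
        # Either threshold on its own is enough to stop scheduling the upstream processor.
        return (self.queued_count >= self.object_threshold
                or self.queued_bytes >= self.size_threshold)

# 500 FlowFiles of 3 MiB each: far below 10,000 objects, but about 1.46 GiB of data.
big_files = ConnectionState(queued_count=500, queued_bytes=500 * 3 * 1024 ** 2)
many_small = ConnectionState(queued_count=10_000, queued_bytes=10_000 * 512)
quiet = ConnectionState(queued_count=9_999, queued_bytes=0)
print(big_files.back_pressure_engaged(), many_small.back_pressure_engaged(),
      quiet.back_pressure_engaged())  # True True False
```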

You can find more about this in these posts:

Created 03-01-2023 06:33 AM

I increased the message queue size of the ListenUDP processor and set the content repository archive property (`nifi.content.repository.archive.enabled`) to false, and everything works again.
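For anyone applying the same archive change, it is a one-line edit in `nifi.properties`, and NiFi has to be restarted to pick it up. The helper below is a hypothetical sketch (not a NiFi tool) that sets a key in a Java-style properties file; it is demonstrated on a throwaway copy, and the real file location (typically under NiFi's `conf/` directory) is an assumption about your install.

```python
import tempfile
from pathlib import Path

def set_property(path: Path, key: str, value: str) -> None:
    """Set key=value in a .properties file, appending the key if it is absent."""
    lines = path.read_text().splitlines()
    found = False
    for i, line in enumerate(lines):
        # Only rewrite uncommented lines whose key part matches exactly.
        if not line.lstrip().startswith("#") and line.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            found = True
    if not found:
        lines.append(f"{key}={value}")
    path.write_text("\n".join(lines) + "\n")

# Demo on a temporary file rather than a live install.
with tempfile.TemporaryDirectory() as tmp:
    props = Path(tmp) / "nifi.properties"
    props.write_text(
        "nifi.content.repository.archive.max.usage.percentage=50%\n"
        "nifi.content.repository.archive.enabled=true\n"
    )
    set_property(props, "nifi.content.repository.archive.enabled", "false")
    result = props.read_text()

print(result)
```

Editing the existing line in place, rather than appending a second copy of the key, avoids leaving two conflicting values in the file.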

Thanks for your reply