Cloudera Community: Support Questions


MR2 Service Check failing after a new installation of HDP 2.4: "Container killed on request. Exit code is 143" on VM

Labels: Apache Hadoop, Apache YARN

Created 01-31-2018 10:54 AM


18/01/30 05:36:48 INFO impl.YarnClientImpl: Submitted application application_1517270734071_0001

18/01/30 05:36:49 INFO mapreduce.Job: The url to track the job: http://centos1.test.com:8088/proxy/application_1517270734071_0001/

18/01/30 05:36:49 INFO mapreduce.Job: Running job: job_1517270734071_0001

18/01/30 05:37:28 INFO mapreduce.Job: Job job_1517270734071_0001 running in uber mode : false

18/01/30 05:37:28 INFO mapreduce.Job: map 0% reduce 0%

18/01/30 05:37:28 INFO mapreduce.Job: Job job_1517270734071_0001 failed with state FAILED due to: Application application_1517270734071_0001 failed 2 times due to AM Container for appattempt_1517270734071_0001_000002 exited with exitCode: -104

For more detailed output, check application tracking page: http://centos1.test.com:8088/cluster/app/application_1517270734071_0001 Then, click on links to logs of each attempt.

Diagnostics: Container [pid=49027,containerID=container_e03_1517270734071_0001_02_000001] is running beyond physical memory limits. Current usage: 173 MB of 170 MB physical memory used; 1.9 GB of 680 MB virtual memory used. Killing container.

Dump of the process-tree for container_e03_1517270734071_0001_02_000001 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 49027 49025 49027 49027 (bash) 0 0 108630016 187 /bin/bash -c /usr/jdk64/jdk1.8.0_60/bin/java -Djava.io.tmpdir=/hadoop/yarn/local/usercache/ambari-qa/appcache/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/hadoop/yarn/log/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dhdp.version=2.4.3.0-227 -Xmx136m -Dhdp.version=2.4.3.0-227 org.apache.hadoop.mapreduce.v2.app.MRAppMaster 1>/hadoop/yarn/log/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001/stdout 2>/hadoop/yarn/log/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001/stderr

|- 49041 49027 49027 49027 (java) 1111 341 1950732288 44101 /usr/jdk64/jdk1.8.0_60/bin/java -Djava.io.tmpdir=/hadoop/yarn/local/usercache/ambari-qa/appcache/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/hadoop/yarn/log/application_1517270734071_0001/container_e03_1517270734071_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dhdp.version=2.4.3.0-227 -Xmx136m -Dhdp.version=2.4.3.0-227 org.apache.hadoop.mapreduce.v2.app.MRAppMaster

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

Failing this attempt. Failing the application.

18/01/30 05:37:28 INFO mapreduce.Job: Counters: 0
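As a quick cross-check of the diagnostic above, the RSS column of the process-tree dump can be summed by hand. This is a minimal sketch that assumes 4096-byte memory pages (the usual size on x86_64 Linux; the dump itself does not state the page size) and hard-codes the two rows copied from the dump; tree_usage_mib is a hypothetical helper.

```python
# Recompute the container's memory usage from the process-tree dump.
# Assumption: 4096-byte pages. The dump reports VMEM_USAGE in bytes and
# RSSMEM_USAGE in pages.
PAGE_SIZE = 4096
MIB = 1024 * 1024

# (pid, name, vmem_bytes, rss_pages) copied from the dump
process_tree = [
    (49027, "bash", 108630016, 187),
    (49041, "java", 1950732288, 44101),
]


def tree_usage_mib(rows, page_size=PAGE_SIZE):
    """Return (physical MiB, virtual MiB) summed over every process in the tree."""
    rss_bytes = sum(pages * page_size for _, _, _, pages in rows)
    vmem_bytes = sum(vmem for _, _, vmem, _ in rows)
    return rss_bytes / MIB, vmem_bytes / MIB


physical, virtual = tree_usage_mib(process_tree)
print(f"physical: {physical:.1f} MiB (limit 170)")
print(f"virtual:  {virtual:.1f} MiB (limit 680)")
```

Under that assumption the bash wrapper (187 pages) plus the JVM (44101 pages) comes to 44288 pages, exactly 173 MiB, matching "173 MB of 170 MB". The JVM uses more than its -Xmx136m heap because RSS also counts non-heap memory such as metaspace, thread stacks and the JIT code cache. The virtual total is about 1964 MiB (the "1.9 GB" reported), against a 680 MB virtual limit, which is 4 × 170 MB.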

Created on 02-13-2018 02:00 AM - edited 08-17-2019 10:55 PM


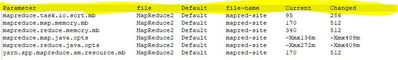

After further analysis, I found and changed the following, after which the MR2 service check passed and the "Container killed on request. Exit code is 143" error no longer occurred.

1) yarn-site.xml:

=> The initial container could not be allocated enough memory: yarn.scheduler.minimum-allocation-mb was only 178 MB and yarn.scheduler.maximum-allocation-mb only 512 MB.

=> The HDFS block size is 128 MB, so, because the initial container could not be allocated, I increased the minimum and maximum to multiples of the 128 MB block size.

=> In yarn-site.xml, I changed yarn.scheduler.minimum-allocation-mb from 178 to 512 MB and yarn.scheduler.maximum-allocation-mb from 512 to 1024 MB.
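The effect of the yarn-site.xml change can be illustrated with a simplified model of how the Capacity Scheduler normalizes memory requests: the ask is raised to at least yarn.scheduler.minimum-allocation-mb and rounded up to a multiple of it, and asks above yarn.scheduler.maximum-allocation-mb are rejected. This is a sketch, not the scheduler's code: vcores, queue limits and node capacity are ignored, and normalize_request is a hypothetical helper.

```python
# Simplified model (assumption) of YARN Capacity Scheduler memory normalization.


def normalize_request(ask_mb: int, min_mb: int, max_mb: int) -> int:
    """Return the container size (MB) granted for an ask, under this simplified model."""
    if ask_mb > max_mb:
        # The ResourceManager rejects asks above maximum-allocation-mb.
        raise ValueError(f"ask of {ask_mb} MB exceeds maximum-allocation-mb={max_mb}")
    ask_mb = max(ask_mb, min_mb)
    # Ceiling division, then scale back up to a multiple of min_mb.
    return -(-ask_mb // min_mb) * min_mb


# Values after the yarn-site.xml change described above
MIN_MB, MAX_MB = 512, 1024
for ask in (170, 512, 513, 1024):
    print(ask, "->", normalize_request(ask, MIN_MB, MAX_MB))
```

With the new minimum of 512 MB, even a small request such as the 170 MB AM container from the log would be granted 512 MB under this model, which is consistent with the error going away after the change.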

2) mapred-site.xml:

After changing the above parameters in yarn-site.xml, the following parameters also had to be changed in mapred-site.xml:

=> mapreduce.task.io.sort.mb from 95 to 286 MB, and mapreduce.map.memory.mb / mapreduce.reduce.memory.mb to 512 MB.

=> yarn.app.mapreduce.am.resource.mb from 170 to 512 MB. Increase these values in multiples of the 128 MB block size to get past the container-killed error.
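The mapred-site.xml numbers in this thread appear to be related by fixed ratios: the AM in the log ran with -Xmx136m in a 170 MB container (136 = 0.8 × 170), and mapreduce.task.io.sort.mb went from 95 to 286, which is roughly 0.7 of an 80% heap for 170 MB and 512 MB containers respectively. The sketch below reproduces those numbers. The 0.8 and 0.7 ratios are inferred from this thread, not taken from Ambari's documentation or source, and derive and check are hypothetical helpers.

```python
# Assumption: heap = 80% of container memory, io.sort.mb = 70% of heap.
# Both ratios are inferred from the values in this thread.
HEAP_RATIO_TENTHS = 8
SORT_RATIO_TENTHS = 7


def derive(container_mb: int) -> dict:
    """Derive heap and sort-buffer sizes (MB) from a container size, rounding down."""
    heap_mb = container_mb * HEAP_RATIO_TENTHS // 10
    sort_mb = heap_mb * SORT_RATIO_TENTHS // 10
    return {"container_mb": container_mb, "heap_mb": heap_mb, "io_sort_mb": sort_mb}


def check(container_mb: int, sort_mb: int, min_mb: int, max_mb: int) -> list:
    """Rough sanity checks; returns a list of problem descriptions (empty if none)."""
    problems = []
    if not min_mb <= container_mb <= max_mb:
        problems.append(f"{container_mb} MB is outside [{min_mb}, {max_mb}]")
    heap_mb = derive(container_mb)["heap_mb"]
    if sort_mb >= heap_mb:
        problems.append(f"io.sort.mb {sort_mb} MB does not fit in a {heap_mb} MB heap")
    return problems


print(derive(170))  # before the change
print(derive(512))  # after the change
print(check(512, 286, min_mb=512, max_mb=1024))
```

The check only flags two obvious problems: a container size outside the scheduler's [minimum, maximum] range, and a sort buffer that would not fit in the heap (the buffer is allocated inside the map task's JVM heap).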

In short, because of resource constraints on the existing setup, we had to keep adjusting these parameters in yarn-site.xml and mapred-site.xml through Ambari until the "Container killed on request. Exit code is 143" error went away.

The same approach can be applied to get past the following error:

Container killed on request. Exit code is 143 Container exited with a non-zero exit code 143 Failing this attempt. Failing the application. INFO mapreduce.Job: Counters: 0

Created 01-31-2018 12:14 PM


We see a message like the following:

Container ..... is running beyond physical memory limits. Current usage: 173 MB of 170 MB physical memory used

So it looks like the MapReduce memory tuning has not been done properly. Please check the values of the following parameters; they appear to be set too small:

- mapreduce.reduce.memory.mb
- mapreduce.map.memory.mb
- mapreduce.reduce.java.opts
- mapreduce.map.java.opts

Created 01-31-2018 02:04 PM


Thanks, Jay, for the reply.

The parameters are currently set to the following values:

- mapreduce.reduce.memory.mb: 400 MB
- mapreduce.map.memory.mb: 350 MB
- mapreduce.reduce.java.opts: 240 MB
- mapreduce.map.java.opts: 250 MB
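One detail worth checking against these values: the process killed in the log is org.apache.hadoop.mapreduce.v2.app.MRAppMaster, the MapReduce ApplicationMaster. Its container is sized by yarn.app.mapreduce.am.resource.mb (170 MB in the log, with -Xmx136m), not by mapreduce.map.memory.mb or mapreduce.reduce.memory.mb, so these four settings do not change the limit the log reports being exceeded. The sketch below extracts the -Xmx heap from a JVM options string and compares it with the container size. It assumes the java.opts values above are -Xmx settings (the post gives only "250 MB" and "240 MB"), and xmx_mb is a hypothetical helper that handles only k/m/g suffixes and bare byte counts.

```python
import re

# Heap-size suffixes understood here (a subset of what the JVM accepts), in MB.
_UNIT_MB = {"k": 1 / 1024, "m": 1, "g": 1024}


def xmx_mb(java_opts: str) -> float:
    """Return the -Xmx heap size in MB; when -Xmx is repeated, the JVM uses the last one."""
    matches = re.findall(r"-Xmx(\d+)([kKmMgG]?)", java_opts)
    if not matches:
        raise ValueError(f"no -Xmx found in {java_opts!r}")
    value, unit = matches[-1]
    if not unit:  # a bare number is a size in bytes
        return int(value) / (1024 * 1024)
    return int(value) * _UNIT_MB[unit.lower()]


# (JVM options, container size in MB); map/reduce opts are assumed to be -Xmx strings
settings = {
    "map": ("-Xmx250m", 350),
    "reduce": ("-Xmx240m", 400),
    "MRAppMaster (from the log)": ("-Xmx136m", 170),
}

for role, (opts, container_mb) in settings.items():
    heap = xmx_mb(opts)
    print(f"{role}: {heap:.0f} MB heap / {container_mb} MB container = {heap / container_mb:.2f}")
```

For these values it prints ratios of 0.71 (map), 0.60 (reduce) and 0.80 (AM): the map and reduce heaps leave headroom inside their containers, but those are not the container the log shows being killed.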

Created 01-31-2018 11:33 PM


Yes, at first glance those values look OK. However, because they are tuning parameters, the right values depend on how intensive your jobs are, and you may need to increase them accordingly.

Have you seen any improvement after making those changes? If not, we may need somewhat larger values.

Created 02-01-2018 02:03 AM


I forgot to mention: even with the above parameters set, the service check still fails with the same error.

Please advise.

Created 02-06-2018 01:39 AM


The service check is still failing.
