Support Questions

- Cloudera Community

- Support

- Support Questions

- Multiple ReplaceText Processors

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Multiple ReplaceText Processors

- Labels:

-

Apache NiFi

Created 08-27-2023 02:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Cloudera,

I am working on a NiFi flow in which incoming dates are treated as follows in my input schema:

{ "name": "Launch_Date", "type": ["null",{ "type" : "int", "logicalType" : "date"}] },

And my CSVReader uses the following date format: MM/dd/yy

When records are being ingested, I am getting Java errors on the month and day portion of dates that are single digit, such as 6/23/2019 and 11/3/2019, so I used the ReplaceText processor to add leading zeroes (06./23/2019, 11/03/2019). What I was not able to figure out was if/how I can do three date conversions in one ReplaceText processor ( I also have to add leading zeroes to dates like 6/3/2019 so they become dates like 06/03/2019).

So, I have three ReplaceText processors, that do Regex replacements on the following cases:

- dates w/ single month digit

- dates w/ single day digit

- dates w/ single month and single day digits

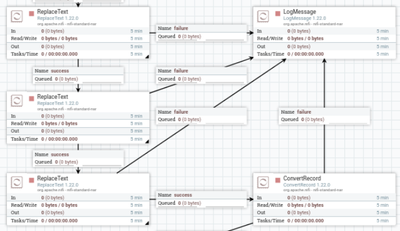

In all three cases, zeroes are prepended to the single digits. The portion of my flow with the three ReplaceText processors is shown below.

Is there a way to do all three cases in one ReplaceText processor? Or should I be handling the dates a different way so that I do not get Java errors on dates with single MM/dd digits? What I have done works fine, it just seems like I should be able to use one processor instead of three.

Thanks,

JohnnyRocks

Created 08-27-2023 03:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JohnnyRocks ,

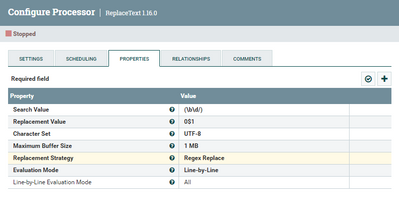

Its hard for me to suggest a solution without seeing how the input looks like, however based on the schema you provided and assuming that you are dealing with dates only values, I tested the following config in the ReplaceText Processor and it appears to work on all cases:

Search Value: (\b\d/)

Replacement Value: 0$1

If that doesnt help can you provide sample input and the different cases for search and replace values in the three replaceText processors.

If that helps please accept solution.

Thanks

Created on 08-28-2023 05:29 AM - edited 08-28-2023 05:31 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@JohnnyRocks ReplaceText more than once is something you want to avoid entirely. You need to look at how to solve the schema concerns within the record based processors. It should be possible to avoid ReplaceText all together. If your upstream data is that different (3 different formats) within the same pipeline, consider how to address that upstream or in separate nifi flows. Alternatively multiple pipelines can be built with separate top branch that pipes into the same record based processor. This would be something like 3 single routes through a ReplaceText then all going to ConvertRecord. However i would still try to optimize without ReplaceText in the manner described here.

Created 08-28-2023 08:05 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@JohnnyRocks, as @steven-matison said, you should avoid linking so many ReplaceText.

I am not quite sure I understood your flow exactly, but something tells me that before reaching ReplaceText, something is not properly configured in your NiFi Flow.

First of all, when using the classic Java Data Format, MM will always transpose in a two digit month, meaning that month from 1 to 9 will be automatically appended with a leading zero. "dd" will do the same trick but for days. As I see in your post, you said that your CSV reader is configured to read the data as MM/dd/yy, which should be fine, but somehow something is missing here ---> How do you reach the format of dd/MM/yyyy?

What I would personally try to do is to convert all those date values in the same format. So instead of all those ReplaceText, I would try to insert an UpdateRecord Processor, where I would define my RecordReader and my RecordWritter with the desired schemas (make sure that your column is type int with logicaly type date). Next, in that processor, I would change the Replacement Value Strategy into "Record Path Value" and I would press on + and add a new property. I would call it "/Launch_Date" (pay attention to the leading slash) and I would assign it the value " format( /Launch_Date, "dd/MM/yyyy", "Europe/Bucharest") " (or any other timezone you require -- if you require your data in UTC, just remove the coma and the timezone).