Cloudera Community: Support Questions


NIFI 1.0 can't empty queues

Labels: Apache NiFi

Created on 09-30-2016 02:22 PM - edited 08-19-2019 04:44 AM


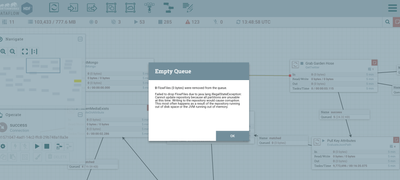

I had a lot of queued-up messages in a few places on a single 8 GB node of HDF 2.0 and couldn't empty the queues.

Errors

    java.net.SocketException: Too many open files
        at java.net.PlainSocketImpl.socketAccept(Native Method) ~[na:1.8.0_101]
        at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:409) ~[na:1.8.0_101]
        at java.net.ServerSocket.implAccept(ServerSocket.java:545) ~[na:1.8.0_101]
        at java.net.ServerSocket.accept(ServerSocket.java:513) ~[na:1.8.0_101]
        at org.apache.nifi.BootstrapListener$Listener.run(BootstrapListener.java:158) ~[nifi-runtime-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
        at java.lang.Thread.run(Thread.java:745) [na:1.8.0_101]
    2016-09-30 13:52:42,479 ERROR [Listen to Bootstrap] org.apache.nifi.BootstrapListener Failed to process request from Bootstrap due to java.net.SocketException: Too many open files
    java.net.SocketException: Too many open files
        at java.net.PlainSocketImpl.socketAccept(Native Method) ~[na:1.8.0_101]
        at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:409) ~[na:1.8.0_101]
        at java.net.ServerSocket.implAccept(ServerSocket.java:545) ~[na:1.8.0_101]
        at java.net.ServerSocket.accept(ServerSocket.java:513) ~[na:1.8.0_101]
        at org.apache.nifi.BootstrapListener$Listener.run(BootstrapListener.java:158) ~[nifi-runtime-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
        at java.lang.Thread.run(Thread.java:745) [na:1.8.0_101]

bootstrap.conf

    # JVM memory settings
    java.arg.2=-Xms4096m
    java.arg.3=-Xmx8092m

    # Enable Remote Debugging
    #java.arg.debug=-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=8000

    java.arg.4=-Djava.net.preferIPv4Stack=true

    # allowRestrictedHeaders is required for Cluster/Node communications to work properly
    java.arg.5=-Dsun.net.http.allowRestrictedHeaders=true
    java.arg.6=-Djava.protocol.handler.pkgs=sun.net.www.protocol

    # The G1GC is still considered experimental but has proven to be very advantageous in providing great
    # performance without significant "stop-the-world" delays.
    java.arg.13=-XX:+UseG1GC
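For a sense of the sizing: the config above allows a maximum heap of 8092 MiB (`-Xmx8092m`) on what the post describes as an 8G node. The sketch below is a hypothetical helper class (not part of NiFi) that pulls the active `-Xmx` value out of bootstrap.conf-style lines and subtracts it from the node's RAM. It assumes "8G" means 8 GiB (8192 MiB) and only understands `k`/`m`/`g` size suffixes. Under that assumption, the heap alone could grow to leave only about 100 MiB for the OS, thread stacks, and other off-heap memory.

```java
import java.util.List;
import java.util.Locale;

public class HeapHeadroom {

    // Converts a JVM size such as "8092m" or "4g" into MiB.
    // Only k/m/g suffixes are handled in this sketch; anything else is rejected.
    static long toMiB(String size) {
        String s = size.trim().toLowerCase(Locale.ROOT);
        char unit = s.charAt(s.length() - 1);
        long n = Long.parseLong(s.substring(0, s.length() - 1));
        switch (unit) {
            case 'k': return n / 1024;
            case 'm': return n;
            case 'g': return n * 1024;
            default: throw new IllegalArgumentException("unsupported size: " + size);
        }
    }

    // Scans bootstrap.conf-style lines ("java.arg.N=-XmxNNNm") and returns the -Xmx value in MiB,
    // or -1 if no uncommented -Xmx argument is present.
    static long maxHeapMiB(List<String> lines) {
        for (String line : lines) {
            String t = line.trim();
            if (t.startsWith("#")) continue;      // skip commented-out settings
            int eq = t.indexOf('=');
            if (eq < 0) continue;                 // not a key=value line
            String value = t.substring(eq + 1).trim();
            if (value.startsWith("-Xmx")) {
                return toMiB(value.substring(4));
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> conf = List.of(
                "# JVM memory settings",
                "java.arg.2=-Xms4096m",
                "java.arg.3=-Xmx8092m");
        long heap = maxHeapMiB(conf);
        long nodeMiB = 8 * 1024; // assumption: "8G node" = 8 GiB of physical RAM
        System.out.println("max heap = " + heap + " MiB, left for everything else = "
                + (nodeMiB - heap) + " MiB");
        // prints: max heap = 8092 MiB, left for everything else = 100 MiB
    }
}
```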

Created 09-30-2016 03:18 PM


I shut down the server and made sure the memory was clear. I increased the memory in bootstrap.conf and made sure all the heavy-duty processors (Twitter) were stopped. After the restart, I went to the queues I wanted to empty and was able to do so. Once all that junk was out of memory, everything worked flawlessly. I think my server was a bit undersized for what I was doing: I was running all the tweets from Strata through TensorFlow and Python VADER and saving images, with maybe 50 processors plus a few demos running simultaneously.

Created 09-30-2016 02:39 PM


It looks like you do not have enough file handles.

The following command will show your current open file limits:

    # ulimit -a

This should be at least 10000, but it may need to be even higher depending on the dataflow.
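To see the limit the running NiFi JVM actually received (rather than the limit of your login shell, which can differ if NiFi was started by a service script or as another user), Linux exposes per-process limits in `/proc/<pid>/limits` and the currently open descriptors under `/proc/<pid>/fd`. The sketch below is Linux-only and uses a hypothetical class name; pass NiFi's PID as the argument and run it as the same user as NiFi (or root) so `/proc/<pid>/fd` is readable. Also note that `ulimit -a` prints a separate "file size" row that is often `unlimited`, so make sure you are reading the "open files (-n)" row.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Stream;

public class OpenFileLimit {

    private static final String ROW = "Max open files";

    // Returns {soft, hard} from the "Max open files" row of /proc/<pid>/limits.
    // Values stay as strings because either one may read "unlimited".
    static String[] maxOpenFiles(List<String> limitsLines) {
        for (String line : limitsLines) {
            if (line.startsWith(ROW)) {
                String[] cols = line.substring(ROW.length()).trim().split("\\s+");
                return new String[] { cols[0], cols[1] };
            }
        }
        throw new IllegalArgumentException("no '" + ROW + "' row found");
    }

    // Counts the descriptors the process currently holds (one entry per fd in /proc/<pid>/fd).
    static long openDescriptors(String pid) throws IOException {
        try (Stream<Path> fds = Files.list(Path.of("/proc", pid, "fd"))) {
            return fds.count();
        }
    }

    public static void main(String[] args) throws IOException {
        // Pass the NiFi JVM's PID; defaults to this JVM ("self") as a quick demo.
        String pid = args.length > 0 ? args[0] : "self";
        String[] softHard = maxOpenFiles(Files.readAllLines(Path.of("/proc", pid, "limits")));
        System.out.printf("open descriptors: %d, soft limit: %s, hard limit: %s%n",
                openDescriptors(pid), softHard[0], softHard[1]);
    }
}
```

If the descriptor count is close to the soft limit while NiFi is under load, that matches the "Too many open files" error above.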

Matt

Created 09-30-2016 02:54 PM


It was unlimited!? I restarted NiFi and was able to empty the queues. Perhaps there was too much in JVM memory and the error message was misleading?


Created 03-02-2017 03:38 PM


Hi

I have 5 separate queues for 5 different processors. Every time, I go to each processor and clear each queue one by one, which takes a lot of time. Is there any way to clear all the queues at the same time?

Please help me with this.

Thanks,

Ravi
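Ravi, one way to avoid clicking through each queue is NiFi's REST API, which the UI itself uses: a connection's queue can be emptied by creating a drop request with `POST /nifi-api/flowfile-queues/{connection-id}/drop-requests`. The sketch below loops over a list of connection IDs and issues one drop request per queue. It assumes an unsecured NiFi at `http://localhost:8080/nifi-api`, Java 11+ for `java.net.http`, and uses hypothetical placeholder IDs (copy the real ones from each connection's settings). A secured instance would also need a token or client certificate, which is not shown, and I have not verified it against 1.0 specifically.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.List;

public class EmptyQueues {

    // Builds the drop-request URI for one connection (queue), tolerating a trailing slash.
    static URI dropRequestUri(String baseUrl, String connectionId) {
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return URI.create(base + "/flowfile-queues/" + connectionId + "/drop-requests");
    }

    public static void main(String[] args) throws Exception {
        String baseUrl = "http://localhost:8080/nifi-api"; // assumption: unsecured NiFi, default HTTP port
        // Hypothetical connection IDs; replace with the IDs of the queues you want to empty.
        List<String> connectionIds = List.of("conn-id-1", "conn-id-2", "conn-id-3");

        HttpClient client = HttpClient.newHttpClient();
        for (String id : connectionIds) {
            HttpRequest request = HttpRequest.newBuilder(dropRequestUri(baseUrl, id))
                    .POST(HttpRequest.BodyPublishers.noBody())
                    .build();
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            // A 2xx status means the drop request was accepted; the JSON body describes its progress.
            System.out.println(id + " -> HTTP " + response.statusCode());
        }
    }
}
```

Drop requests run asynchronously. The REST API also exposes `GET` and `DELETE` on `.../drop-requests/{drop-request-id}` for polling and cleaning up a request, which this sketch leaves out.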