Support Questions


NIFI Cluster Node Restarts

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created 05-14-2021 09:41 AM


We have a new NiFi 1.13.2 cluster. Nodes in the cluster are randomly restarting and we are unable to determine the cause. We intermittently see 'connection refused' errors in the logs, without explanation. We don't know what additional steps to take at this point. Attached are our nifi.properties and the latest nifi-app.log.

Cluster Details

12 x 8 cores, 32 GB of memory

These servers use a 5-node ZooKeeper quorum; we have not seen latency or file-handle issues there.

NIFI Properties File (redacted to protect the innocent)

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Core Properties #

nifi.flow.configuration.file=./conf/flow.xml.gz

nifi.flow.configuration.archive.enabled=true

nifi.flow.configuration.archive.dir=./conf/archive/

nifi.flow.configuration.archive.max.time=30 days

nifi.flow.configuration.archive.max.storage=500 MB

nifi.flow.configuration.archive.max.count=

nifi.flowcontroller.autoResumeState=true

nifi.flowcontroller.graceful.shutdown.period=10 sec

nifi.flowservice.writedelay.interval=500 ms

nifi.administrative.yield.duration=30 sec

# If a component has no work to do (is "bored"), how long should we wait before checking again for work?

nifi.bored.yield.duration=5 millis

nifi.queue.backpressure.count=20000

nifi.queue.backpressure.size=1 GB

nifi.authorizer.configuration.file=./conf/authorizers.xml

nifi.login.identity.provider.configuration.file=./conf/login-identity-providers.xml

nifi.templates.directory=./conf/templates

nifi.ui.banner.text=

nifi.ui.autorefresh.interval=30 sec

nifi.nar.library.directory=./lib

nifi.nar.library.autoload.directory=./extensions

nifi.nar.working.directory=./work/nar/

nifi.documentation.working.directory=./work/docs/components

####################

# State Management #

####################

nifi.state.management.configuration.file=./conf/state-management.xml

# The ID of the local state provider

nifi.state.management.provider.local=local-provider

# The ID of the cluster-wide state provider. This will be ignored if NiFi is not clustered but must be populated if running in a cluster.

nifi.state.management.provider.cluster=zk-provider

# Specifies whether or not this instance of NiFi should run an embedded ZooKeeper server

nifi.state.management.embedded.zookeeper.start=false

# Properties file that provides the ZooKeeper properties to use if <nifi.state.management.embedded.zookeeper.start> is set to true

nifi.state.management.embedded.zookeeper.properties=./conf/zookeeper.properties

# H2 Settings

nifi.database.directory=./database_repository

nifi.h2.url.append=;LOCK_TIMEOUT=25000;WRITE_DELAY=0;AUTO_SERVER=FALSE

# FlowFile Repository

nifi.flowfile.repository.implementation=org.apache.nifi.controller.repository.WriteAheadFlowFileRepository

nifi.flowfile.repository.wal.implementation=org.apache.nifi.wali.SequentialAccessWriteAheadLog

nifi.flowfile.repository.directory=/opt/nifi/data/flow

nifi.flowfile.repository.checkpoint.interval=20 secs

nifi.flowfile.repository.always.sync=false

nifi.flowfile.repository.encryption.key.provider.implementation=

nifi.flowfile.repository.encryption.key.provider.location=

nifi.flowfile.repository.encryption.key.id=

nifi.flowfile.repository.encryption.key=

nifi.flowfile.repository.retain.orphaned.flowfiles=true

nifi.swap.manager.implementation=org.apache.nifi.controller.FileSystemSwapManager

nifi.queue.swap.threshold=50000

# Content Repository

nifi.content.repository.implementation=org.apache.nifi.controller.repository.FileSystemRepository

nifi.content.claim.max.appendable.size=20 MB

nifi.content.repository.directory.default=/opt/nifi/data/content

nifi.content.repository.archive.max.retention.period=9 hours

nifi.content.repository.archive.max.usage.percentage=95%

nifi.content.repository.archive.enabled=true

nifi.content.repository.always.sync=false

nifi.content.viewer.url=../nifi-content-viewer/

nifi.content.repository.encryption.key.provider.implementation=

nifi.content.repository.encryption.key.provider.location=

nifi.content.repository.encryption.key.id=

nifi.content.repository.encryption.key=

# Provenance Repository Properties

nifi.provenance.repository.implementation=org.apache.nifi.provenance.WriteAheadProvenanceRepository

nifi.provenance.repository.encryption.key.provider.implementation=

nifi.provenance.repository.encryption.key.provider.location=

nifi.provenance.repository.encryption.key.id=

nifi.provenance.repository.encryption.key=

# Persistent Provenance Repository Properties

nifi.provenance.repository.directory.default=/opt/nifi/data/provenance

nifi.provenance.repository.max.storage.time=6 hours

nifi.provenance.repository.max.storage.size=595 GB

nifi.provenance.repository.rollover.time=1 mins

nifi.provenance.repository.rollover.size=1 GB

nifi.provenance.repository.query.threads=2

nifi.provenance.repository.index.threads=1

nifi.provenance.repository.compress.on.rollover=true

nifi.provenance.repository.always.sync=false

nifi.provenance.repository.journal.count=16

# Comma-separated list of fields. Fields that are not indexed will not be searchable. Valid fields are:

# EventType, FlowFileUUID, Filename, TransitURI, ProcessorID, AlternateIdentifierURI, Relationship, Details

nifi.provenance.repository.indexed.fields=EventType, FlowFileUUID, Filename, ProcessorID, Relationship

# FlowFile Attributes that should be indexed and made searchable. Some examples to consider are filename, uuid, mime.type

nifi.provenance.repository.indexed.attributes=

# Large values for the shard size will result in more Java heap usage when searching the Provenance Repository

# but should provide better performance

nifi.provenance.repository.index.shard.size=8 GB

# Indicates the maximum length that a FlowFile attribute can be when retrieving a Provenance Event from

# the repository. If the length of any attribute exceeds this value, it will be truncated when the event is retrieved.

nifi.provenance.repository.max.attribute.length=65536

nifi.provenance.repository.concurrent.merge.threads=2

# Volatile Provenance Repository Properties

nifi.provenance.repository.buffer.size=100000

# Component Status Repository

nifi.components.status.repository.implementation=org.apache.nifi.controller.status.history.VolatileComponentStatusRepository

nifi.components.status.repository.buffer.size=1440

nifi.components.status.snapshot.frequency=1 min

# Site to Site properties

nifi.remote.input.host=ip-xx-xxx-xxx-xxx.us-gov-west-1.compute.internal

nifi.remote.input.secure=true

nifi.remote.input.socket.port=10443

nifi.remote.input.http.enabled=true

nifi.remote.input.http.transaction.ttl=30 sec

nifi.remote.contents.cache.expiration=30 secs

# web properties #

nifi.web.http.host=

nifi.web.http.port=

nifi.web.http.network.interface.default=

nifi.web.https.host=nifi-tf-01.bogus-dns.pvt

nifi.web.https.port=9443

nifi.web.https.network.interface.default=

nifi.web.jetty.working.directory=./work/jetty

nifi.web.jetty.threads=500

nifi.web.max.header.size=16 KB

nifi.web.proxy.context.path=

nifi.web.proxy.host=

nifi.web.max.content.size=

nifi.web.max.requests.per.second=30000

nifi.web.should.send.server.version=true

# security properties #

nifi.sensitive.props.key=

nifi.sensitive.props.key.protected=

nifi.sensitive.props.algorithm=PBEWITHMD5AND256BITAES-CBC-OPENSSL

nifi.sensitive.props.provider=BC

nifi.sensitive.props.additional.keys=

nifi.security.keystore=/etc/nifi/keystore.jks

nifi.security.keystoreType=jks

nifi.security.keystorePasswd=pIAF20A4YrqZSkU+BOGUS

nifi.security.keyPasswd=pIAF20A4YrqZSkU+BOGUS

nifi.security.truststore=/etc/nifi/truststore.jks

nifi.security.truststoreType=jks

nifi.security.truststorePasswd=notreal+8Q

nifi.security.user.authorizer=file-provider

nifi.security.allow.anonymous.authentication=false

nifi.security.user.login.identity.provider=

nifi.security.ocsp.responder.url=

nifi.security.ocsp.responder.certificate=

# OpenId Connect SSO Properties #

nifi.security.user.oidc.discovery.url=

nifi.security.user.oidc.connect.timeout=5 secs

nifi.security.user.oidc.read.timeout=5 secs

nifi.security.user.oidc.client.id=

nifi.security.user.oidc.client.secret=

nifi.security.user.oidc.preferred.jwsalgorithm=

nifi.security.user.oidc.additional.scopes=

nifi.security.user.oidc.claim.identifying.user=

# Apache Knox SSO Properties #

nifi.security.user.knox.url=

nifi.security.user.knox.publicKey=

nifi.security.user.knox.cookieName=hadoop-jwt

nifi.security.user.knox.audiences=

# Identity Mapping Properties #

# These properties allow normalizing user identities such that identities coming from different identity providers

# (certificates, LDAP, Kerberos) can be treated the same internally in NiFi. The following example demonstrates normalizing

# DNs from certificates and principals from Kerberos into a common identity string:

#

# nifi.security.identity.mapping.pattern.dn=^CN=(.*?), OU=(.*?), O=(.*?), L=(.*?), ST=(.*?), C=(.*?)$

# nifi.security.identity.mapping.value.dn=$1@$2

# nifi.security.identity.mapping.transform.dn=NONE

# nifi.security.identity.mapping.pattern.kerb=^(.*?)/instance@(.*?)$

# nifi.security.identity.mapping.value.kerb=$1@$2

# nifi.security.identity.mapping.transform.kerb=UPPER

# Group Mapping Properties #

# These properties allow normalizing group names coming from external sources like LDAP. The following example

# lowercases any group name.

#

# nifi.security.group.mapping.pattern.anygroup=^(.*)$

# nifi.security.group.mapping.value.anygroup=$1

# nifi.security.group.mapping.transform.anygroup=LOWER

# cluster common properties (all nodes must have same values) #

nifi.cluster.protocol.heartbeat.interval=15 sec

nifi.cluster.protocol.heartbeat.missable.max=20

nifi.cluster.protocol.is.secure=true

# cluster node properties (only configure for cluster nodes) #

nifi.cluster.is.node=true

#nifi.cluster.node.address=ip-xx-xxx-xxx-xxx.us-gov-west-1.compute.internal

nifi.cluster.node.address=192.170.108.140

nifi.cluster.node.protocol.port=11443

nifi.cluster.node.protocol.threads=100

nifi.cluster.node.protocol.max.threads=800

nifi.cluster.node.event.history.size=25

nifi.cluster.node.connection.timeout=60 sec

nifi.cluster.node.read.timeout=60 sec

nifi.cluster.node.max.concurrent.requests=800

nifi.cluster.firewall.file=

nifi.cluster.flow.election.max.wait.time=5 mins

nifi.cluster.flow.election.max.candidates=7

# cluster load balancing properties #

nifi.cluster.load.balance.host=192.170.108.140

nifi.cluster.load.balance.port=6342

nifi.cluster.load.balance.connections.per.node=50

nifi.cluster.load.balance.max.thread.count=600

nifi.cluster.load.balance.comms.timeout=45 sec

# zookeeper properties, used for cluster management #

nifi.zookeeper.connect.string=192.170.108.37:2181,192.170.108.67:2181,192.170.108.120:2181,192.170.108.104:2181,192.170.108.106:2181

nifi.zookeeper.connect.timeout=30 secs

nifi.zookeeper.session.timeout=30 secs

nifi.zookeeper.root.node=/nifi_tf

# Zookeeper properties for the authentication scheme used when creating acls on znodes used for cluster management

# Values supported for nifi.zookeeper.auth.type are "default", which will apply world/anyone rights on znodes

# and "sasl" which will give rights to the sasl/kerberos identity used to authenticate the nifi node

# The identity is determined using the value in nifi.kerberos.service.principal and the removeHostFromPrincipal

# and removeRealmFromPrincipal values (which should align with the kerberos.removeHostFromPrincipal and kerberos.removeRealmFromPrincipal

# values configured on the zookeeper server).

nifi.zookeeper.auth.type=

nifi.zookeeper.kerberos.removeHostFromPrincipal=

nifi.zookeeper.kerberos.removeRealmFromPrincipal=

# kerberos #

nifi.kerberos.krb5.file=

# kerberos service principal #

nifi.kerberos.service.principal=

nifi.kerberos.service.keytab.location=

# kerberos spnego principal #

nifi.kerberos.spnego.principal=

nifi.kerberos.spnego.keytab.location=

nifi.kerberos.spnego.authentication.expiration=12 hours

# external properties files for variable registry

# supports a comma delimited list of file locations

nifi.variable.registry.properties=

# analytics properties #

nifi.analytics.predict.enabled=false

nifi.analytics.predict.interval=3 mins

nifi.analytics.query.interval=5 mins

nifi.analytics.connection.model.implementation=org.apache.nifi.controller.status.analytics.models.OrdinaryLeastSquares

nifi.analytics.connection.model.score.name=rSquared

nifi.analytics.connection.model.score.threshold=.90

nifi-app.log (excluding INFO entries)

2021-05-14 13:00:11,927 ERROR [Load-Balanced Client Thread-356] o.a.n.c.q.c.c.a.n.NioAsyncLoadBalanceClient Unable to connect to nifi-tf-11.bogus-dns.pvt:9443 for load balancing

java.net.ConnectException: Connection refused

at sun.nio.ch.Net.connect0(Native Method)

at sun.nio.ch.Net.connect(Net.java:482)

at sun.nio.ch.Net.connect(Net.java:474)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:647)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:107)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:92)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.createChannel(NioAsyncLoadBalanceClient.java:456)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.establishConnection(NioAsyncLoadBalanceClient.java:399)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.communicate(NioAsyncLoadBalanceClient.java:211)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClientTask.run(NioAsyncLoadBalanceClientTask.java:81)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2021-05-14 13:00:15,592 ERROR [Load-Balanced Client Thread-160] o.a.n.c.q.c.c.a.n.NioAsyncLoadBalanceClient Unable to connect to nifi-tf-11.bogus-dns.pvt:9443 for load balancing

java.net.ConnectException: Connection refused

at sun.nio.ch.Net.connect0(Native Method)

at sun.nio.ch.Net.connect(Net.java:482)

at sun.nio.ch.Net.connect(Net.java:474)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:647)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:107)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:92)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.createChannel(NioAsyncLoadBalanceClient.java:456)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.establishConnection(NioAsyncLoadBalanceClient.java:399)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.communicate(NioAsyncLoadBalanceClient.java:211)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClientTask.run(NioAsyncLoadBalanceClientTask.java:81)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2021-05-14 13:00:15,619 ERROR [Load-Balanced Client Thread-498] o.a.n.c.q.c.c.a.n.NioAsyncLoadBalanceClient Unable to connect to nifi-tf-11.bogus-dns.pvt:9443 for load balancing

java.net.ConnectException: Connection refused

at sun.nio.ch.Net.connect0(Native Method)

at sun.nio.ch.Net.connect(Net.java:482)

at sun.nio.ch.Net.connect(Net.java:474)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:647)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:107)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:92)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.createChannel(NioAsyncLoadBalanceClient.java:456)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.establishConnection(NioAsyncLoadBalanceClient.java:399)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.communicate(NioAsyncLoadBalanceClient.java:211)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClientTask.run(NioAsyncLoadBalanceClientTask.java:81)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2021-05-14 13:00:16,217 ERROR [Load-Balanced Client Thread-207] o.a.n.c.q.c.c.a.n.NioAsyncLoadBalanceClient Unable to connect to nifi-tf-11.bogus-dns.pvt:9443 for load balancing

java.net.ConnectException: Connection refused

at sun.nio.ch.Net.connect0(Native Method)

at sun.nio.ch.Net.connect(Net.java:482)

at sun.nio.ch.Net.connect(Net.java:474)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:647)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:107)

at sun.nio.ch.SocketAdaptor.connect(SocketAdaptor.java:92)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.createChannel(NioAsyncLoadBalanceClient.java:456)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.establishConnection(NioAsyncLoadBalanceClient.java:399)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.communicate(NioAsyncLoadBalanceClient.java:211)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClientTask.run(NioAsyncLoadBalanceClientTask.java:81)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2021-05-14 13:00:16,357 ERROR [Load-Balanced Client Thread-238] o.a.n.c.q.c.c.a.n.NioAsyncLoadBalanceClient Failed to communicate with Peer nifi-tf-11.bogus-dns.pvt:9443

java.io.IOException: Failed to decrypt data from Peer nifi-tf-11.bogus-dns.pvt:9443 because Peer unexpectedly closed connection

at org.apache.nifi.controller.queue.clustered.client.async.nio.PeerChannel.decrypt(PeerChannel.java:269)

at org.apache.nifi.controller.queue.clustered.client.async.nio.PeerChannel.read(PeerChannel.java:159)

at org.apache.nifi.controller.queue.clustered.client.async.nio.PeerChannel.read(PeerChannel.java:80)

at org.apache.nifi.controller.queue.clustered.client.async.nio.LoadBalanceSession.confirmTransactionComplete(LoadBalanceSession.java:177)

at org.apache.nifi.controller.queue.clustered.client.async.nio.LoadBalanceSession.communicate(LoadBalanceSession.java:154)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClient.communicate(NioAsyncLoadBalanceClient.java:242)

at org.apache.nifi.controller.queue.clustered.client.async.nio.NioAsyncLoadBalanceClientTask.run(NioAsyncLoadBalanceClientTask.java:81)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)
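A `Connection refused` means the target host answered but nothing was accepting connections on that port, which is what you would expect while a node's NiFi process is down or restarting. A quick way to check a peer from another node is a plain TCP connect. The sketch below uses only Python's standard `socket` module; `probe` is a hypothetical helper name, not part of NiFi. Port 9443 is the one in the log above, and 6342 is the posted `nifi.cluster.load.balance.port`.

```python
import socket


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a single TCP connect attempt as 'open', 'refused' or 'timeout'.

    Other failures (DNS errors, "no route to host", ...) are raised unchanged.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"      # something completed the TCP handshake
    except ConnectionRefusedError:
        return "refused"       # host replied with a reset: no listener on that port
    except socket.timeout:
        return "timeout"       # no reply at all (firewall drop, host down, ...)
```

For example, calling `probe("nifi-tf-11.bogus-dns.pvt", 6342)` in a loop from another node while a restart happens should flip from `open` to `refused` and back if the process is going down and coming back up.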

Created 01-14-2022 08:09 AM


@Kilynn

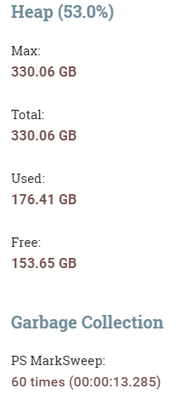

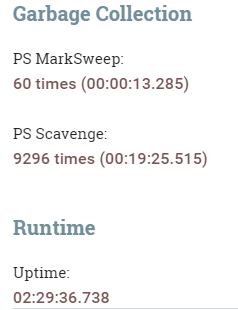

I see that you have set some very high values (330 GB) for your NiFi heap space. This is really not recommended. I can also see from what you have shared that this NiFi instance has been up for ~2.5 hours, and within that 2.5-hour timeframe it has spent ~20 minutes in a stop-the-world state due to Java garbage collection (GC). This means your NiFi is spending ~13% of its uptime doing nothing but stop-the-world garbage collection. Is there a reason you set your heap this high? Setting a large heap simply because your server has the memory available is not a good reason. Java GC kicks in when heap usage reaches around 80%. When an object in heap is no longer being used, it is not cleaned out and its space reclaimed at that time; space is only reclaimed via GC. The larger the heap, the longer the GC events take. Seeing as your current heap usage is ~53%, I am guessing that a considerable amount of heap space is released with each GC event. Long stop-the-world GC pauses can result in node disconnection because NiFi node heartbeats are not sent during the pause.
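To put those figures in numbers, the sketch below is plain arithmetic (no NiFi API). It computes the share of uptime spent in GC from the ~20 minutes in ~2.5 hours quoted above, and roughly how long a node can stay silent before it is disconnected, assuming the cluster coordinator marks a node Disconnected after `nifi.cluster.protocol.heartbeat.missable.max` missed heartbeats sent every `nifi.cluster.protocol.heartbeat.interval` (the posted values are 20 and 15 sec). A cumulative GC total does not say how long any single pause was, so the second number is only a threshold to compare individual pauses against.

```python
def gc_overhead(gc_seconds: float, uptime_seconds: float) -> float:
    """Fraction of wall-clock uptime spent paused in stop-the-world GC."""
    return gc_seconds / uptime_seconds


def disconnect_window_seconds(heartbeat_interval_s: float, missable_max: int) -> float:
    """Approximate silence a node can have before the coordinator marks it
    Disconnected: heartbeat interval x number of heartbeats it may miss."""
    return heartbeat_interval_s * missable_max


# ~20 minutes of GC in ~2.5 hours of uptime
print(f"{gc_overhead(20 * 60, 150 * 60):.1%}")   # → 13.3%
# nifi.cluster.protocol.heartbeat.interval=15 sec, heartbeat.missable.max=20
print(disconnect_window_seconds(15, 20))          # → 300
```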

When NiFi seems to be restarting with no indication at all in the nifi-app.log, you may want to look at your system's /var/log/messages file for any indication that the Out Of Memory (OOM) killer was executed. With your NiFi instance being the largest consumer of memory on the host, it would be the OOM killer's top pick when available OS memory gets too low.
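A minimal sketch of that check, assuming the kernel logs OOM kills in one of the two common forms shown in the comments (older kernels write `Kill process`, newer ones `Killed process`). The exact wording varies by kernel and distribution, and on some systems these messages go to the systemd journal instead of `/var/log/messages`. `find_oom_kills` is a hypothetical helper name.

```python
import re

# Assumed kernel message shapes, e.g.:
#   "Out of memory: Kill process 4242 (java) score 912 or sacrifice child"   (older)
#   "Out of memory: Killed process 4242 (java) total-vm:..., anon-rss:..."    (newer)
_OOM_KILL = re.compile(r"Out of memory: Kill(?:ed)? process (\d+) \(([^)]+)\)")


def find_oom_kills(log_lines):
    """Return (pid, process_name) for every OOM-killer kill found in the lines."""
    kills = []
    for line in log_lines:
        match = _OOM_KILL.search(line)
        if match:
            kills.append((int(match.group(1)), match.group(2)))
    return kills
```

For example, `with open("/var/log/messages") as f: print(find_oom_kills(f))` (reading that file usually needs root). A `java` entry whose PID matches the NiFi process at the time of a restart is the signature to look for.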

You should not need more than 16 GB of heap to run most dataflows. If you are building dataflows that use a lot of heap, I'd recommend taking a close look at your dataflow designs to see if there are better design choices. One thing that can lead to large heap usage is creating very large FlowFile attributes (for example, extracting a large amount of a FlowFile's content into a FlowFile attribute). FlowFile attributes all live inside the JVM heap. Some other dataflow design elements that can lead to high heap usage include:

- Merging a very large number of FlowFiles in a single Merge processor. It is better to use multiple merge processors in series: the first merges up to 10,000 to 20,000 FlowFiles, and the second merges those bundles into even larger files.

- Splitting a very large file in a single split processor, resulting in a lot of FlowFiles produced in a single transaction. It is better to use multiple split processors in series, or even to look at ways of processing the data without splitting the file into multiple files (look at the available "record" based processors).

- Reading the entire content of a large FlowFile into memory to perform an action on it, such as an ExtractText processor configured for entire text instead of line-by-line mode.
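To see why large attributes hurt, a back-of-the-envelope estimate helps. The sketch below is plain arithmetic under stated assumptions: Java 8 strings (the stack traces in the question appear to come from a Java 8 runtime) store 2 bytes per character, and object headers, attribute keys and map overhead are ignored, so the real figure is higher. The example uses 20,000 FlowFiles, the posted `nifi.queue.backpressure.count` for a single connection, which is below the posted `nifi.queue.swap.threshold` of 50000, so those FlowFiles would stay in heap rather than being swapped out. The 100,000-character attribute is a hypothetical value.

```python
def attribute_heap_bytes(flowfile_count: int, avg_attribute_chars: int,
                         bytes_per_char: int = 2) -> int:
    """Lower-bound estimate of heap held by FlowFile attribute values.

    bytes_per_char=2 assumes Java 8 (UTF-16) Strings; headers, keys and
    map overhead are deliberately ignored.
    """
    return flowfile_count * avg_attribute_chars * bytes_per_char


# One connection's worth of FlowFiles, each carrying a large extracted attribute
est = attribute_heap_bytes(20_000, 100_000)
print(f"{est / 1024**3:.2f} GiB")   # → 3.73 GiB
```

That is for a single queue; several such connections in a flow multiply the figure.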

I also 100% agree that you should be looking into the thread allocation choices in your nifi.properties file; many of them seem unnecessarily high for a 7-node cluster. Keep in mind that each node in your cluster executes independently of the others, so these settings apply to each node individually. Following the guidance in the NiFi Admin Guide (found in the NiFi UI under Help, or on the Apache NiFi site) is strongly advised.
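Because each node applies these values to itself, it can help to list the thread-related properties next to what they add up to across the cluster. The sketch below is a minimal, hypothetical checker: it reads only simple `key=value` lines (no `:` separators, escapes or continuation lines, unlike a full Java `.properties` parser). The property values are the ones posted in the question, and the 7-node count comes from this reply.

```python
THREAD_KEYS = (
    "nifi.web.jetty.threads",
    "nifi.cluster.node.protocol.threads",
    "nifi.cluster.node.protocol.max.threads",
    "nifi.cluster.load.balance.max.thread.count",
)


def parse_properties(text: str) -> dict:
    """Minimal reader for simple key=value lines; '#' comment lines are skipped."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def thread_budget(props: dict, node_count: int) -> dict:
    """Map each thread property that is set to (per-node value, cluster-wide total)."""
    budget = {}
    for key in THREAD_KEYS:
        if props.get(key):  # skips missing keys and empty values
            per_node = int(props[key])
            budget[key] = (per_node, per_node * node_count)
    return budget


posted = """
nifi.web.jetty.threads=500
nifi.cluster.node.protocol.threads=100
nifi.cluster.node.protocol.max.threads=800
nifi.cluster.load.balance.max.thread.count=600
"""
for key, (per_node, total) in thread_budget(parse_properties(posted), 7).items():
    print(f"{key}: {per_node} per node, {total} across 7 nodes")
```

The per-node number is what competes for each node's 8 cores; the cluster-wide column only makes the per-node multiplication explicit.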

If you found that this response helped with your query, please take a moment to log in and click "Accept as Solution" below this post.

Thank you,

Matt

Created 01-16-2022 08:51 PM


In the end, we had allocated more memory than was available on the server when it was under stress. I recommend reducing the memory allocation and restarting the cluster.
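One way to sanity-check this before a restart is to compare the `-Xmx` setting against the host's physical memory. The sketch below assumes the heap is set through `java.arg.N=-Xmx<size>` lines in NiFi's `conf/bootstrap.conf` (the usual layout). The 25% headroom left for the OS, off-heap JVM memory and page cache is an arbitrary, hypothetical threshold to tune, and `max_heap_bytes` and `heap_fits` are illustrative names.

```python
import re

# -Xmx<number>[k|m|g|t], suffix case-insensitive; no suffix means bytes.
_XMX = re.compile(r"^-Xmx(\d+)([kmgt]?)$", re.IGNORECASE)
_MULTIPLIER = {"": 1, "k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def max_heap_bytes(bootstrap_text: str):
    """Return the last -Xmx value (in bytes) set on a java.arg.* line, or None."""
    heap = None
    for raw in bootstrap_text.splitlines():
        line = raw.strip()
        if not line.startswith("java.arg.") or "=" not in line:
            continue
        match = _XMX.match(line.split("=", 1)[1].strip())
        if match:
            heap = int(match.group(1)) * _MULTIPLIER[match.group(2).lower()]
    return heap


def heap_fits(heap_bytes: int, physical_bytes: int, headroom: float = 0.25) -> bool:
    """True if the heap leaves at least `headroom` of physical RAM for everything else."""
    return heap_bytes <= physical_bytes * (1 - headroom)
```

On Linux, total physical memory can be read with `os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")`. Under these assumptions a 16g heap on a 32 GB host passes, while `-Xmx330g` fails on any host with less than 440 GB of RAM.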

Created 05-16-2021 07:27 PM


Hello

Since the errors are related to load balancing, you can take a look at your configuration:

# cluster load balancing properties #

nifi.cluster.load.balance.host=192.170.108.140

nifi.cluster.load.balance.port=6342

nifi.cluster.load.balance.connections.per.node=50

nifi.cluster.load.balance.max.thread.count=600

nifi.cluster.load.balance.comms.timeout=45 sec

For example

nifi.cluster.load.balance.connections.per.node=50

The maximum number of connections to create between this node and each other node in the cluster. For example, if there are 5 nodes in the cluster and this value is set to 4, there will be up to 20 socket connections established for load-balancing purposes (5 x 4 = 20). The default value is 4.

You have set it to 50. I'm not sure how many nodes you have, but you can do the math.
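Following the Admin Guide arithmetic quoted above (nodes x `connections.per.node`), here is that calculation for the node counts mentioned in this thread (12 in the original post, 7 in another reply); `load_balance_connections` is just an illustrative name.

```python
def load_balance_connections(node_count: int, connections_per_node: int) -> int:
    """Load-balancing sockets per the Admin Guide example: nodes x connections.per.node."""
    return node_count * connections_per_node


print(load_balance_connections(5, 4))   # Admin Guide example → 20
for nodes in (7, 12):
    # posted nifi.cluster.load.balance.connections.per.node=50
    print(nodes, "nodes:", load_balance_connections(nodes, 50))   # → 350, then 600
```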

For the rest of the configuration details, you can refer here:

Created 01-14-2022 06:03 AM


Did you find a fix for this issue? What was the cause? I'm experiencing the same issue.


Created 01-18-2022 08:34 AM


@Kilynn

So, as I mentioned in my last response, once memory usage got too high, the OS-level OOM killer was most likely killing the NiFi service to protect the OS. The NiFi bootstrap process would have detected that the main process died and started it again, assuming the OOM killer did not also kill the parent (bootstrap) process.
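The restart behaviour described here is the classic parent/child watchdog pattern. The sketch below is a hypothetical illustration of that pattern in Python, not NiFi's actual bootstrap code: a parent starts a child and, if the child dies abnormally, starts it again. An OOM-killed process receives SIGKILL, which Python reports as return code -9 on POSIX. If the parent itself is killed, nothing restarts the child, which matches the caveat about the parent process.

```python
import subprocess
import sys
import time


def supervise(cmd, max_restarts=3, backoff_seconds=1.0):
    """Start cmd and restart it whenever it exits abnormally.

    Returns the exit codes seen, in order. On POSIX a return code of -N
    means the child was killed by signal N (SIGKILL from the OOM killer -> -9).
    """
    codes = []
    for _ in range(max_restarts + 1):
        code = subprocess.run(cmd).returncode
        codes.append(code)
        if code == 0:
            break                      # clean exit: nothing to recover from
        time.sleep(backoff_seconds)    # brief pause before restarting
    return codes


def python_cmd(code):
    """Hypothetical helper: command line for a throwaway Python child running `code`."""
    return [sys.executable, "-c", code]
```

For example, `supervise(python_cmd("import os, signal; os.kill(os.getpid(), signal.SIGKILL)"), max_restarts=2, backoff_seconds=0)` returns `[-9, -9, -9]` on Linux: the child is "killed" three times and restarted twice.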
