Cloudera Community: Support Questions

NiFi FlowFiles stopped moving although the processors are running

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created 05-06-2018 09:03 AM

Dear all,

I'm using HDF 3.1.0.

I ran into a situation in which the processors are running but no FlowFiles are being processed between them.

Created 05-06-2018 02:18 PM

Please add more details, such as a screenshot of your flow, the processors' scheduling information, JVM memory, and any logs showing issues, so that we can better understand the problem.

Created on 05-06-2018 02:49 PM - edited 08-18-2019 01:31 AM

Sorry about that; I thought it was a common issue, as I'm a newbie to HDF. I appreciate your response.

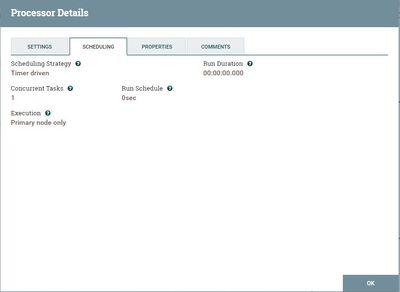

- Screenshot: done

- Processor scheduling information: done

- JVM memory:

- Logs: attached

Created 05-06-2018 11:59 PM

A couple of questions:

- Is your InvokeHTTP processor doing a POST?

- In the top right corner you will see the hamburger (global) menu. Click the "Cluster" option and share the details. Are all your nodes up?

Created 05-07-2018 11:32 AM

Hello @Rahul Soni,

Thanks for your reply. Regarding your questions:

1. It is not about a specific processor. I have multiple processors, such as GetTwitter, PutHDFS, GetFile and others, and the problem persists on all of them.

2. It is a cluster with one node.

Created 05-06-2018 03:23 PM

I think the processor is stuck running one thread, as you can see from the active-thread count in its top right corner.

Can you make sure you are able to access, and get a response from, the URL configured in the InvokeHTTP processor?
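One way to check the endpoint from the NiFi host itself is a short, time-limited request. The sketch below uses only Python's standard library; the helper name `probe_endpoint`, the default `GET` method and the 5-second timeout are illustrative assumptions, not InvokeHTTP settings.

```python
import urllib.error
import urllib.request
from typing import Tuple, Union


def probe_endpoint(url: str, method: str = "GET",
                   timeout: float = 5.0) -> Tuple[bool, Union[int, str]]:
    """Hypothetical helper: report whether `url` answers within `timeout` seconds.

    Returns (True, status_code) when any HTTP response arrives (including
    4xx/5xx), or (False, reason) when nothing comes back at all.
    """
    request = urllib.request.Request(url, method=method)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return True, response.status
    except urllib.error.HTTPError as err:
        # The server replied, but with an error status code.
        return True, err.code
    except (urllib.error.URLError, OSError) as err:
        # DNS failure, refused connection or timeout: no response at all.
        return False, str(err)
```

Call it with the same method and URL as the processor, for example `probe_endpoint(url, method="POST", timeout=3.0)`. A `(False, ...)` result, or a call that takes the full timeout, suggests the endpoint is where an InvokeHTTP thread would be stuck waiting.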

Since the InvokeHTTP processor is scheduled to run on the Primary Node only, make sure the queued FlowFiles are also on the current Primary Node.

If the FlowFiles are on another node (not the primary node), stop the InvokeHTTP processor and change its scheduling to All Nodes. If the connection/request timeout values are set high, reduce them to a few seconds, then start the processor again.

You can run the InvokeHTTP processor on all nodes, because it is triggered by FlowFiles coming from the GetFaceBook pages process group.

I don't see any log entries for the InvokeHTTP processor (there are some 420 status codes for the GetTwitter[id=11b02d87-0163-1000-0000-00007f5a45a6] processor).

Created 05-07-2018 12:17 PM

I noticed that you have this processor configured to run on "Primary node" only. You should never schedule processors that operate on incoming FlowFiles from a source connection for "Primary node" only. In a NiFi cluster, the ZooKeeper-elected primary node can change at any time, so the data queued in the connection feeding your InvokeHTTP processor may be sitting on what was previously the primary node. Since your InvokeHTTP processor is configured for "Primary node" only, it will no longer run on the old primary node to work on those queued FlowFiles.

Suggestion:

1. From the global menu in the upper right corner of the NiFi UI, click "Cluster". In the UI that appears, note which node is currently the Primary node in your cluster, then exit that UI.

2. From the global menu in the upper right corner of the NiFi UI, click "Summary". With the "CONNECTIONS" tab selected across the top, locate the connection with the queued data (you can click the "Queued" column name to sort the rows). At the far right of that row you will see a three-stacked-boxes icon. Clicking it opens a new UI showing exactly which node(s) hold these queued FlowFiles. If it is not the current primary node, the InvokeHTTP processor will not be running there to consume them.
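The same check can be scripted against NiFi's REST API. The sketch below is a minimal version under stated assumptions: it takes already-parsed JSON from `GET /nifi-api/controller/cluster` and from `GET /nifi-api/flow/connections/{id}/status?nodewise=true`, and the field names it reads (`roles`, `nodeId`, `nodeSnapshots`, `statusSnapshot`, `flowFilesQueued`) reflect our understanding of those responses, so verify them against your NiFi version. The helper names are hypothetical.

```python
from typing import List

# Hypothetical helpers. The JSON field names used here are assumptions based
# on the NiFi REST API and should be checked against your NiFi version.


def primary_node_id(cluster: dict) -> str:
    """Return the nodeId whose roles include 'Primary Node'."""
    for node in cluster["cluster"]["nodes"]:
        if "Primary Node" in node.get("roles", []):
            return node["nodeId"]
    raise ValueError("no node reports the 'Primary Node' role")


def stranded_nodes(cluster: dict, connection_status: dict) -> List[str]:
    """Addresses of non-primary nodes that still hold queued FlowFiles.

    A processor scheduled for "Primary node" only does not run on these
    nodes, so the FlowFiles queued there are never consumed.
    """
    primary = primary_node_id(cluster)
    snapshots = connection_status["connectionStatus"].get("nodeSnapshots", [])
    return sorted(
        snap["address"]
        for snap in snapshots
        if snap["nodeId"] != primary
        and snap["statusSnapshot"]["flowFilesQueued"] > 0
    )
```

Fetch the two JSON documents with any HTTP client (authentication depends on your setup) and call `stranded_nodes(cluster_json, status_json)`. A non-empty list names the nodes where FlowFiles are waiting for a primary-node-only processor that will not run there.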

Only processors that ingest new FlowFiles, do not take an incoming connection from another processor, and use non-cluster-friendly protocols should be scheduled for "Primary node" only. All other processors should be scheduled for all nodes.
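The scheduling rule above can be restated as a small decision function. This only encodes the rule as written in this post; the names `ExecutionNode` and `recommended_execution` are hypothetical, and judging whether a protocol is cluster friendly is left to you.

```python
from enum import Enum


class ExecutionNode(Enum):
    """Mirrors the two execution choices on a processor's Scheduling tab."""
    ALL_NODES = "All nodes"
    PRIMARY_NODE = "Primary node"


def recommended_execution(has_incoming_connection: bool,
                          cluster_friendly_protocol: bool) -> ExecutionNode:
    """Primary-node-only is reserved for sources that take no incoming
    connection AND use a protocol that is not cluster friendly."""
    if not has_incoming_connection and not cluster_friendly_protocol:
        return ExecutionNode.PRIMARY_NODE
    # Anything fed by a connection runs on every node, so FlowFiles
    # queued on any node can still be processed.
    return ExecutionNode.ALL_NODES
```

For the InvokeHTTP processor in this thread, which is fed by an incoming connection, `recommended_execution(True, False)` returns `ExecutionNode.ALL_NODES`.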

If the queued data is actually on the primary node, you will want to get a thread dump to determine what the active InvokeHTTP processor thread is waiting on (`./nifi.sh dump > <name of your dump file>`). In this case it is likely waiting on a response from your HTTP endpoint.

Thank you,

Matt

Created 05-07-2018 01:35 PM

Thanks a lot. I can see from the dump data (attached below) that a lot of tasks are waiting, but I can't understand what they are waiting on. How should I handle this?

- "java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject@5bb7c15d"

Created 05-07-2018 01:37 PM

Looping in @Mohamed Emad

Created 05-07-2018 01:42 PM

Having a lot of "WAITING" threads in a NiFi thread dump is normal and does not by itself indicate an issue.

I only see a couple of "BLOCKED" threads and do not see a single InvokeHTTP thread.

When there is no obvious issue in a thread dump, it becomes necessary to take several thread dumps (5 minutes or more apart) and note which threads persist across all of them. Some of these are expected and others may not be; for example, web and socket listener threads are expected to be waiting all the time.
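Comparing dumps by hand is tedious, so here is a minimal sketch that counts thread states per dump and lists the threads found in the same BLOCKED, WAITING or TIMED_WAITING state in every dump. It assumes each thread begins with a header line like `"Timer-Driven Process Thread-7" Id=93 WAITING  on <lock>`; the helper names and the `ignore` parameter (name fragments of threads you expect to wait, such as listeners) are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

# Assumed thread header format: "thread name" Id=<n> STATE [on <lock>]
HEADER = re.compile(r'^"(?P<name>[^"]+)"\s+Id=\d+\s+(?P<state>[A-Z_]+)')

IDLE_STATES = ("BLOCKED", "WAITING", "TIMED_WAITING")


def thread_states(dump_text: str) -> List[Tuple[str, str]]:
    """(thread name, state) for every thread header found in one dump."""
    pairs = []
    for line in dump_text.splitlines():
        match = HEADER.match(line)
        if match:
            pairs.append((match["name"], match["state"]))
    return pairs


def state_counts(dump_text: str) -> Counter:
    """Number of threads per state; a large WAITING count alone is normal."""
    return Counter(state for _, state in thread_states(dump_text))


def persistent_threads(dumps: Sequence[str],
                       states: Iterable[str] = IDLE_STATES,
                       ignore: Iterable[str] = ()) -> List[Tuple[str, str]]:
    """Threads that appear in the same (filtered) state in every dump."""
    wanted = set(states)
    ignore = tuple(ignore)
    per_dump = [
        {pair for pair in thread_states(d) if pair[1] in wanted}
        for d in dumps
    ]
    if not per_dump:
        return []
    common = set.intersection(*per_dump)
    # Drop threads whose names contain any of the expected-to-wait fragments.
    return sorted(p for p in common if not any(s in p[0] for s in ignore))
```

Feed it the text of three or more dumps taken minutes apart; whatever `persistent_threads` returns after ignoring known listeners is the short list worth reading in full.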

Did you try my suggestion? Was the queued data actually on the node from which you provided the thread dump?

How many nodes are in your cluster?

I do see WAITING threads on provenance, which can contribute to a slowdown of your entire flow. Based on the thread dump, you are using the default provenance implementation, and your HDF release includes a much faster one:

- Currently you are using the default "org.apache.nifi.provenance.PersistentProvenanceRepository".

- Switch to using "org.apache.nifi.provenance.WriteAheadProvenanceRepository"

- You can switch from persistent to writeAhead without needing to delete your existing provenance repository. NiFi will handle the transition. However, you will not be able to switch back without deleting the provenance repository.

- You will need to restart NiFi after making this configuration change.
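The switch is a single property, `nifi.provenance.repository.implementation`, in `nifi.properties`. The helper below is a minimal sketch that rewrites that property in the file's text; `set_property` is a hypothetical name. If your HDF cluster is managed by Ambari, make the change in Ambari's NiFi configuration instead, since Ambari may overwrite a hand-edited file.

```python
PROPERTY = "nifi.provenance.repository.implementation"
WRITE_AHEAD = "org.apache.nifi.provenance.WriteAheadProvenanceRepository"


def set_property(properties_text: str, key: str, value: str) -> str:
    """Hypothetical helper: set `key=value` in Java-properties text.

    Replaces every uncommented line for `key`; commented lines are kept
    unchanged. Appends the property if it is missing.
    """
    out = []
    found = False
    for line in properties_text.splitlines():
        stripped = line.lstrip()
        if not stripped.startswith("#") and stripped.split("=", 1)[0].strip() == key:
            out.append(f"{key}={value}")
            found = True
        else:
            out.append(line)
    if not found:
        out.append(f"{key}={value}")
    return "\n".join(out) + "\n"
```

Read `conf/nifi.properties`, write back `set_property(text, PROPERTY, WRITE_AHEAD)`, keep a copy of the original, and restart NiFi.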

Another thing to consider is the value configured for "Maximum Timer Driven Thread Count" (found in the global menu under "Controller Settings"). This setting controls the maximum number of CPU threads that can be used to service all the processors' concurrent task requests.
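As a rough starting point, a commonly cited rule of thumb (an assumption, not something stated in this thread) is 2 to 4 times the number of CPU cores on each node, adjusted after watching CPU load. The sketch below only computes that range; `timer_driven_thread_range` and its factors are hypothetical and meant to be tuned.

```python
import os
from typing import Optional, Tuple


def timer_driven_thread_range(cores: Optional[int] = None,
                              low_factor: int = 2,
                              high_factor: int = 4) -> Tuple[int, int]:
    """Suggested (low, high) thread counts from a cores-times-factor rule of thumb.

    Defaults to this machine's CPU count when `cores` is not given.
    """
    cores = cores or os.cpu_count() or 1
    return cores * low_factor, cores * high_factor
```

For an 8-core node, `timer_driven_thread_range(8)` gives `(16, 32)`. Raising the value past what the CPUs can serve tends to add contention rather than throughput.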

Thanks,

Matt