Hi All,

I am a newbie to NiFi!

Today I ran into a problem with duplicate records. The scenario is as follows:

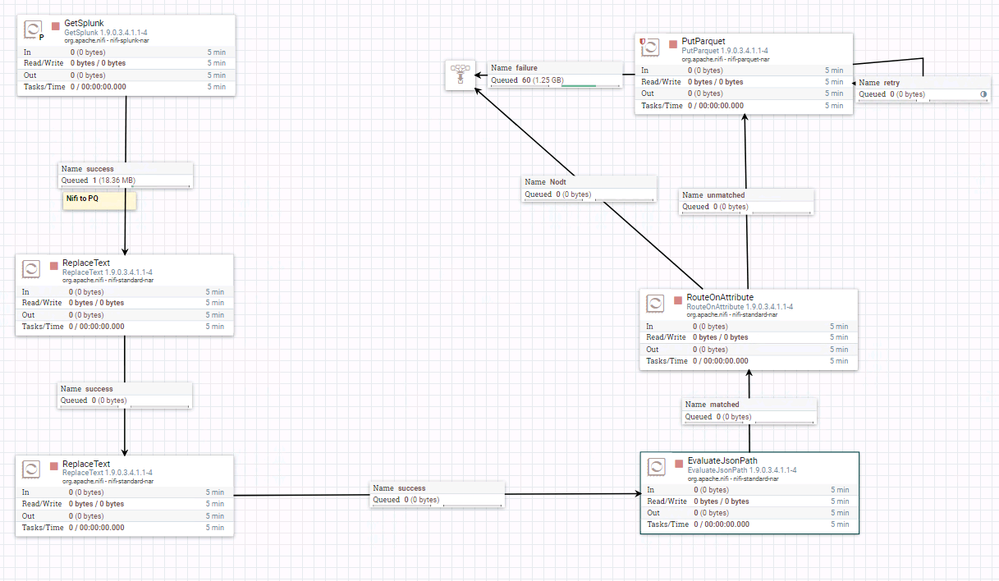

We need to get data from Splunk into HDFS in Parquet format, so we created a data flow with NiFi.

- I am getting data from Splunk in JSON format using GetSplunk, and then writing it to HDFS using the PutParquet processor with a JsonTreeReader and an Avro schema.

- This works, but in the output I see that each record is duplicated. I am looking for help to fix this. A sample JSON record is below, and a screenshot of the NiFi data flow is attached.

Thanks in advance.

Example Data Record:

    [ {
      "preview" : true,
      "offset" : 0,
      "result" : {
        "action" : "allowed",
        "app" : "",
        "dest" : "xxxx.xx.xxx.xxx",
        "dest_bunit" : "",
        "dest_category" : "",
        "dest_ip" : "xxx.xx.xxx.xxx",
        "dest_port" : "xxx",
        "dest_priority" : "",
        "direction" : "N/A",
        "duration" : "",
        "dvc" : "xxx.xx.xxx.xxx",
        "dvc_ip" : "xxx.xx.xxx.xxx",
        "protocol" : "HTTPS",
        "response_time" : "",
        "rule" : "/Common/ds_policy_2",
        "session_id" : "ad240f0634150d02",
        "src" : "xx.xxx.xxx.xx",
        "src_bunit" : "",
        "src_category" : "",
        "src_ip" : "xx.xxx.xxx.xx",
        "src_port" : "62858",
        "src_priority" : "",
        "tag" : "proxy,web",
        "usr" : "N/A",
        "user_bunit" : "",
        "user_category" : "",
        "user_priority" : "",
        "vendor_product" : "ASM",
        "vendor_product_uuid" : "",
        "ts" : "",
        "description" : "",
        "action_reason" : "",
        "severity" : "Informational",
        "user_type" : "",
        "service_type" : "",
        "dt" : "20200331",
        "hr" : "15"
      },
      "lastrow" : null
    } ]
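
To narrow down where the duplicates come from, a minimal sketch like the one below could be run on a JSON array saved from the GetSplunk output, before it reaches PutParquet. It is only an illustration: the function name `count_duplicates` and the shortened sample records are made up here, and comparing only the `result` object (ignoring `preview`, `offset`, and `lastrow`) is an assumption about what counts as "the same record".

```python
import json
from collections import Counter


def count_duplicates(records):
    """Return {canonical result JSON: count} for results seen more than once."""
    # Serialize each "result" object with sorted keys so that key order
    # does not affect the comparison.
    keys = [json.dumps(r.get("result"), sort_keys=True) for r in records]
    return {key: n for key, n in Counter(keys).items() if n > 1}


# Shortened, hypothetical records in the same envelope shape as the sample above.
sample = [
    {"preview": True, "offset": 0,
     "result": {"session_id": "ad240f0634150d02", "src_port": "62858"},
     "lastrow": None},
    {"preview": False, "offset": 0,
     "result": {"session_id": "ad240f0634150d02", "src_port": "62858"},
     "lastrow": None},
    {"preview": False, "offset": 1,
     "result": {"session_id": "hypothetical-other", "src_port": "1"},
     "lastrow": None},
]

dupes = count_duplicates(sample)
for key, n in dupes.items():
    print(n, "copies of", key)
```

If this reports duplicates on the raw GetSplunk output, the repetition is already present before the Parquet conversion; if not, the later part of the flow would be the place to look.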