NiFi RouteText processor taking too long

- Labels: Apache NiFi

Created on 09-06-2016 01:36 PM - edited 08-19-2019 01:41 AM

Is this the best performance we can expect, or is there any way to improve it further on this standalone server, given that the server has 4 cores and 16 GB of RAM?

Also, as far as I can observe, most of the processing time is consumed by the RouteText processor. Is this design suitable for this kind of use case, sending the records of a pipe-delimited file to different outputs based on some conditions, given that RouteText processes records line by line? We are using NiFi 0.6.1.

Created 09-06-2016 01:45 PM

Hello

This is a perfectly fine use case, but I'd recommend breaking the input data up a bit so you can take advantage of parallelism. Given that you have a 50M-line input, I'd recommend running it through SplitText first to break it into files of, say, 10,000 lines each. That would yield about 5,000 splits, each with around 10,000 lines. Then feed those into the RouteText processor. This way the data can be processed in a far better divide-and-conquer manner. You should see rates pretty close to the ideal rate of your underlying storage system. In very conservative terms, assume that is about 50MB/s, so it should take about 5 minutes at most (and that can certainly be improved).
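To make the split-then-route idea concrete, here is a minimal Python sketch of the same shape outside NiFi. It is only an illustration of the structure, not how NiFi implements SplitText or RouteText, and it says nothing about real throughput. The function names, the output names, and the two routing conditions are all hypothetical, since the thread does not state the actual conditions.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def split_lines(lines, lines_per_split=10_000):
    """Yield lists of at most lines_per_split lines (the role SplitText plays)."""
    it = iter(lines)
    while True:
        chunk = list(islice(it, lines_per_split))
        if not chunk:
            return
        yield chunk


def route_chunk(chunk):
    """Route each pipe-delimited line of one split to a named output.

    Both conditions are hypothetical placeholders for the real routing rules.
    """
    routed = {"matched_a": [], "matched_b": [], "unmatched": []}
    for line in chunk:
        fields = line.split("|")
        if fields[0] == "A":        # hypothetical condition 1
            routed["matched_a"].append(line)
        elif fields[0] == "B":      # hypothetical condition 2
            routed["matched_b"].append(line)
        else:
            routed["unmatched"].append(line)
    return routed


def route_in_parallel(lines, lines_per_split=10_000, workers=4):
    """Split the input, then route the splits concurrently, one split per task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(route_chunk, split_lines(lines, lines_per_split)))


# Split count for the input discussed here: 50M lines at 10,000 lines per split.
expected_splits = 50_000_000 // 10_000
```

The point of the sketch is that each worker only ever handles a whole split, so a single huge input gives the workers nothing to share until it has been cut into many pieces.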

Thanks

Joe

Created 09-06-2016 04:14 PM

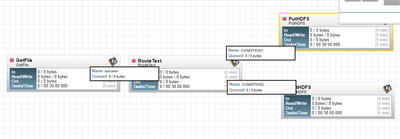

According to you, the flow should look like this:

GetFile->SplitText->RouteText->PutHDFS

Since we are using only a standalone server, if we split the file into 5,000 splits, do we need an UpdateAttribute/MergeContent step after the RouteText processor, or is the flow shown above fine as it is?

Also, do we need to set "Concurrent Tasks" on all the processors (GetFile, PutHDFS, SplitText) or only on the RouteText processor?

Regards,

Indranil Roy

Created 09-08-2016 03:59 AM

Yep, what you describe with UpdateAttribute/MergeContent sounds perfectly fine. Exactly what you'll want there will depend on how many relationships you have coming out of RouteText. As for concurrent tasks, I'd say it would be:

1 for GetFile

1 for SplitText

2 to 5 or so for RouteText. No need to go too high generally.

1 for MergeContent

1 to 2 for PutHDFS

You don't have to stress too much about those numbers out of the gate. You can run it with minimal threads first, find any bottlenecks, and increase if necessary.
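As a rough illustration of the "start minimal, then raise the bottleneck" approach, the Python sketch below records the starting values suggested above and bumps whichever processor has the deepest input queue. The helper name, the single cap of 5, and the queue depths in the usage note are assumptions made up for the example; in practice you would read queue sizes off the NiFi canvas and adjust by hand.

```python
# Starting concurrent-task counts, taking the low end of each suggested range.
concurrent_tasks = {
    "GetFile": 1,
    "SplitText": 1,
    "RouteText": 2,
    "MergeContent": 1,
    "PutHDFS": 1,
}


def bump_bottleneck(tasks, queued, cap=5):
    """Return the processor with the deepest input queue and an updated copy
    of the task counts with that processor raised by one (never above cap).

    `queued` maps processor name to the number of flow files waiting on its
    input; the cap is a hypothetical ceiling, not a NiFi limit.
    """
    bottleneck = max(queued, key=queued.get)
    updated = dict(tasks)
    if queued[bottleneck] > 0:
        updated[bottleneck] = min(updated[bottleneck] + 1, cap)
    return bottleneck, updated
```

For example, with made-up queue depths of 1,200 flow files in front of RouteText and almost nothing elsewhere, the helper would pick RouteText and raise it from 2 to 3 concurrent tasks.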

Created on 09-06-2016 02:03 PM - edited 08-19-2019 01:41 AM

You have a couple of things going on here that are affecting your performance. Based on previous HCC discussions, you have a single 50,000,000-line file that you are splitting into 10 files (each 5,000,000 lines) and then distributing those splits to your NiFi cluster via an RPG (Site-to-Site). You are then using the RouteText processor to read every line of these 5,000,000-line files and route the lines based on two conditions.

1. Most NiFi processors (including RouteText) are multi-thread capable through additional concurrent tasks. A single concurrent task can work on a single file or batch of files. Multiple threads will not work on the same file. So by setting your concurrent tasks to 10 on RouteText, you may not actually be using 10 threads. The NiFi controller also has a max number of threads configuration that limits the number of threads available across all components. The max thread setting can be found by clicking on this icon

2. In order to get better multi-thread throughput on your RouteText processor, try splitting your incoming file into many smaller files. Try splitting your 50,000,000-line file into files with no more than 10,000 lines each. The resulting 5,000 files will be better distributed across your NiFi cluster nodes and allow the multiple threads to be utilized.
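The two points above can be summarized as a simple upper bound, sketched here in Python. This is a simplified model rather than NiFi's scheduler: it assumes one node, treats the controller-wide thread limit as fully available to this one processor (in reality it is shared across all components), and the function name and the numbers in the usage note are hypothetical.

```python
def effective_threads(queued_files, concurrent_tasks, max_controller_threads):
    """Upper bound on threads one processor can use at once on a single node.

    Threads never share a file, so the count is limited by how many files are
    queued, by the processor's concurrent tasks, and by the controller limit.
    """
    return min(queued_files, concurrent_tasks, max_controller_threads)
```

Under this model, a node holding a single 5,000,000-line file can keep only one RouteText thread busy no matter how many concurrent tasks are configured, for instance `effective_threads(1, 10, 10)` gives 1, while a node holding thousands of small splits can use all of them, up to whichever limit is smaller.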

Thanks,

Matt

Created 09-06-2016 02:10 PM

"A single concurrent task can work on a single file."

That is worth clarifying. It is actually that a single concurrent task can work on a single process session. When RouteText creates a process session, it pulls in a single flow file. Other processors can pull in many more. It just depends on the use case and design, but fundamentally a single concurrent task can work on far more than a single file. For "this" use case and "this" processor, the recommendation is to split the input up so that parallelism can be taken advantage of.
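A toy Python model may help picture the distinction. It is not NiFi's API: the `Session` class, its `get` method, and the flow file names are hypothetical stand-ins that only show one task's session pulling either a single flow file or a batch from the same queue.

```python
from collections import deque


class Session:
    """Toy stand-in for a process session: the unit of work one task handles."""

    def __init__(self, queue):
        self._queue = queue

    def get(self, max_results=1):
        """Pull up to max_results flow files from the front of the queue."""
        pulled = []
        while self._queue and len(pulled) < max_results:
            pulled.append(self._queue.popleft())
        return pulled


queue = deque(f"split-{i}" for i in range(5))

# RouteText-style: each session pulls exactly one flow file.
single = Session(queue).get()

# Batch-style processor: one session pulls several flow files at once.
batch = Session(queue).get(max_results=3)
```

In the single-file case, the only way to keep several tasks busy is to have several flow files queued, which is why splitting the input matters for this processor.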

Created 09-06-2016 02:19 PM

Thank you for the clarification on my post.