NameNode keeps going down

Labels: Apache Ambari, Apache Hadoop

Created 06-01-2017 05:08 PM

Hi all,

I am having a problem with the NameNode status that Ambari shows. The following points can be verified on the system:

- The NameNode goes down a few seconds after I start it through Ambari (it looks like it never really comes up, although the start operation completes successfully);

- Despite being DOWN according to Ambari, if I run jps on the server hosting the NameNode, it shows that the service is running:

[hdfs@RHTPINEC008 ~]$ jps

39395 NameNode

4463 Jps

and I can access the NameNode UI properly;

- I already restarted both the NameNode and the ambari-agent manually, but the behavior stays the same;

- This problem started after some heavy HBase/Phoenix queries that caused the NameNode to go down (I am not sure whether this is actually related, but the exact same configuration was working well before this episode);

- I have been digging for some hours and I cannot find error details in the NameNode logs or in the ambari-agent logs that would let me understand the problem.

I am using HDP 2.4.0 with no HA options.

Can someone help with this?

Thanks in advance

Created 06-02-2017 09:45 PM

Can you please run

ps -ef | grep namenode

on the cluster and see which processes come back? It looks like a NameNode process is already running, so when you try to start it again, the second instance fails to start (which is the correct behavior).

I recommend stopping all the processes returned by the above command and then starting the NameNode again.
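If you want to script that check, here is a minimal Python sketch that picks the NameNode JVM out of `ps -ef` output. It assumes the standard eight-column `ps -ef` layout and that the NameNode JVM carries the `-Dproc_namenode` flag on its command line; the function name and the shortened sample command line are made up for illustration.

```python
from typing import List


def namenode_pids(ps_output: str) -> List[int]:
    """Return the PIDs of NameNode JVMs found in `ps -ef` text."""
    pids = []
    for line in ps_output.splitlines():
        # ps -ef columns: UID PID PPID C STIME TTY TIME CMD
        fields = line.split(None, 7)
        if len(fields) < 8 or not fields[1].isdigit():
            continue  # header line or something we cannot parse
        command = fields[7]
        # The JVM flag is more specific than the word "namenode",
        # so the grep process itself is not picked up.
        if "-Dproc_namenode" in command:
            pids.append(int(fields[1]))
    return pids


# Abbreviated sample; a real NameNode command line is much longer.
sample = """\
UID        PID  PPID  C STIME TTY          TIME CMD
nosuser   7201  6867  0 16:01 pts/0    00:00:00 grep --color=auto namenode
hdfs     39395     1  5 May31 ?        04:01:49 /usr/jdk64/jdk1.8.0_60/bin/java -Dproc_namenode -Xmx1024m org.apache.hadoop.hdfs.server.namenode.NameNode
"""
print(namenode_pids(sample))
```

More than one PID in the result would point to a leftover NameNode process that should be stopped before restarting.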

Created on 06-03-2017 04:29 PM - edited 08-17-2019 11:42 PM

Hi Namit,

Thank you for your answer.

Yes, I can run the command:

[nosuser@RHTPINEC008 ~]$ ps -ef | grep namenode

nosuser 7201 6867 0 16:01 pts/0 00:00:00 grep --color=auto namenode

hdfs 39395 1 5 May31 ? 04:01:49 /usr/jdk64/jdk1.8.0_60/bin/java -Dproc_namenode -Xmx1024m -Dhdp.version=2.4.0.0-169 -Djava.net.preferIPv4Stack=true -Dhdp.version= -Djava.net.preferIPv4Stack=true -Dhdp.version= -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop/hdfs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/hdp/2.4.0.0-169/hadoop -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,console -Djava.library.path=:/usr/hdp/2.4.0.0-169/hadoop/lib/native/Linux-amd64-64:/usr/hdp/2.4.0.0-169/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhdp.version=2.4.0.0-169 -Dhadoop.log.dir=/var/log/hadoop/hdfs -Dhadoop.log.file=hadoop-hdfs-namenode-RHTPINEC008.corporativo.pt.log -Dhadoop.home.dir=/usr/hdp/2.4.0.0-169/hadoop -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,RFA -Djava.library.path=:/usr/hdp/2.4.0.0-169/hadoop/lib/native/Linux-amd64-64:/usr/hdp/2.4.0.0-169/hadoop/lib/native:/usr/hdp/2.4.0.0-169/hadoop/lib/native/Linux-amd64-64:/usr/hdp/2.4.0.0-169/hadoop/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -server -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:NewSize=512m -XX:MaxNewSize=512m -Xloggc:/var/log/hadoop/hdfs/gc.log-201705311529 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -Xms4096m -Xmx4096m -Dhadoop.security.logger=INFO,DRFAS -Dhdfs.audit.logger=INFO,DRFAAUDIT -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -Dorg.mortbay.jetty.Request.maxFormContentSize=-1 -server -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:NewSize=512m -XX:MaxNewSize=512m -Xloggc:/var/log/hadoop/hdfs/gc.log-201705311529 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -Xms4096m -Xmx4096m -Dhadoop.security.logger=INFO,DRFAS -Dhdfs.audit.logger=INFO,DRFAAUDIT -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -Dorg.mortbay.jetty.Request.maxFormContentSize=-1 -server -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:NewSize=512m -XX:MaxNewSize=512m -Xloggc:/var/log/hadoop/hdfs/gc.log-201705311529 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -Xms4096m -Xmx4096m -Dhadoop.security.logger=INFO,DRFAS -Dhdfs.audit.logger=INFO,DRFAAUDIT -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -Dorg.mortbay.jetty.Request.maxFormContentSize=-1 -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.NameNode

As you suggested I killed the process :

[nosuser@RHTPINEC008 ~]$ sudo kill -9 39395

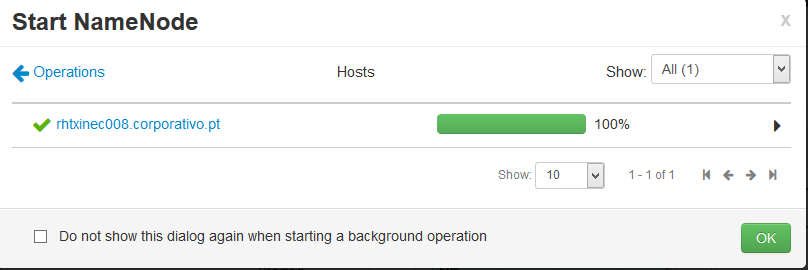

and started it again through Ambari, which took a while but ended successfuly:

A few seconds later the NameNode went down again in the Ambari interface, however I am still able to run:

[hdfs@RHTPINEC008 ~]$ jps 13494 Jps 9832 NameNode

Any ideas?

Could it be that the Ambari server or agent is having problems collecting the NameNode status?

Thanks
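One way to probe that hypothesis is to compare the NameNode PID file with the process that is actually running. The Python sketch below rests on two assumptions that are not confirmed in this thread: that the agent derives the component status from a PID file, and that the file lives at `/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid`. The function name is hypothetical, and the path should be adjusted to the cluster's configured PID directory.

```python
import os


def pid_file_status(pid_file: str) -> str:
    """Describe whether the PID recorded in pid_file refers to a live process."""
    try:
        with open(pid_file) as handle:
            pid = int(handle.read().strip())
    except FileNotFoundError:
        return "missing pid file"
    except ValueError:
        return "unreadable pid file"
    if pid <= 0:
        return "unreadable pid file"
    try:
        # Signal 0 delivers nothing; it only checks that the process exists.
        os.kill(pid, 0)
    except ProcessLookupError:
        return f"stale pid file (no process {pid})"
    except PermissionError:
        return f"process {pid} exists (owned by another user)"
    return f"process {pid} exists"


if __name__ == "__main__":
    # Assumed location; adjust to the PID directory configured for HDFS.
    print(pid_file_status("/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid"))
```

If the file is missing or stale while jps still lists a NameNode, the status shown in the UI and the real process state would disagree in the way described above.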

Created 05-03-2018 08:16 PM

@Geoffrey Shelton Okot I am getting the same error too. Could you please suggest a fix?

Created 05-03-2018 08:56 PM

Can you share the NameNode error log?

Created 05-04-2018 10:17 AM

The services are running on the server, but Ambari does not show them as running, and the GC logs show the following entries. Could you please check?

2018-05-04T06:39:20.038-0400: 130.745: [GC (Allocation Failure) 2018-05-04T06:39:20.038-0400: 130.745: [ParNew: 152348K->17472K(157248K), 0.0294015 secs] 152348K->30350K(506816K), 0.0294737 secs] [Times: user=0.15 sys=0.03, real=0.03 secs]
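For reading an entry like this: in HotSpot GC logs, `GC (Allocation Failure)` is the usual trigger for a routine young-generation collection and does not indicate an error by itself. The Python sketch below pulls the numbers out of the line; the regular expression only targets this ParNew format, and the function and field names are made up for illustration.

```python
import re

# Matches a ParNew young-generation entry written with -XX:+PrintGCDetails.
GC_LINE = re.compile(
    r"\[GC \((?P<cause>[^)]+)\).*?"
    r"\[ParNew: (?P<young_before>\d+)K->(?P<young_after>\d+)K\((?P<young_cap>\d+)K\), [\d.]+ secs\] "
    r"(?P<heap_before>\d+)K->(?P<heap_after>\d+)K\((?P<heap_cap>\d+)K\), (?P<pause>[\d.]+) secs\]"
)


def parse_parnew(line: str) -> dict:
    """Extract cause, heap occupancy and pause time from one ParNew log line."""
    match = GC_LINE.search(line)
    if match is None:
        raise ValueError("not a ParNew GC line")
    return {
        "cause": match["cause"],
        "heap_before_kb": int(match["heap_before"]),
        "heap_after_kb": int(match["heap_after"]),
        "heap_capacity_kb": int(match["heap_cap"]),
        "pause_s": float(match["pause"]),
    }


line = (
    "2018-05-04T06:39:20.038-0400: 130.745: [GC (Allocation Failure) "
    "2018-05-04T06:39:20.038-0400: 130.745: [ParNew: 152348K->17472K(157248K), "
    "0.0294015 secs] 152348K->30350K(506816K), 0.0294737 secs] "
    "[Times: user=0.15 sys=0.03, real=0.03 secs]"
)
info = parse_parnew(line)
print(info["cause"], info["heap_after_kb"], info["heap_capacity_kb"], info["pause_s"])
```

Here the heap drops to 30350K out of 506816K after a pause of about 0.03 seconds, so this entry on its own does not suggest memory pressure.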

Created 05-04-2018 12:17 PM

While starting the NameNode with WebHDFS enabled, I get the following errors:

File "/usr/lib/python2.6/site-packages/resource_management/libraries/providers/hdfs_resource.py", line 250, in _run_command

raise WebHDFSCallException(err_msg, result_dict)

resource_management.libraries.providers.hdfs_resource.WebHDFSCallException: Execution of 'curl -sS -L -w '%{http_code}' -X GET 'http://node1.test.co:50070/webhdfs/v1/tmp?op=GETFILESTATUS&user.name=thdfs@test.co'' returned status_code=400.

{

"RemoteException": {

"exception": "IllegalArgumentException",

"javaClassName": "java.lang.IllegalArgumentException",

"message": "Invalid value for webhdfs parameter \"user.name\": Invalid value: \"thdfs@test.co\" does not belong to the domain ^[A-Za-z_][A-Za-z0-9._-]*[$]?$"

}

}

Created 05-04-2018 12:54 PM

It seems you have a problem with your auth-to-local rules. Can you validate them?

"message": "Invalid value for webhdfs parameter"

In short, the username passed in the query is checked against a regular expression, and if it does not match, the above exception is returned. The default regular expression is:

^[A-Za-z_][A-Za-z0-9._-]*[$]?$
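To see why the request is rejected, the pattern can be tried outside Hadoop. This is a minimal Python sketch that reuses the expression exactly as quoted in the error message; the function name is hypothetical, and the short names `hdfs` and `thdfs` are only example inputs.

```python
import re

# Pattern copied from the WebHDFS error message.
USER_NAME_PATTERN = re.compile(r"^[A-Za-z_][A-Za-z0-9._-]*[$]?$")


def is_valid_webhdfs_user(name: str) -> bool:
    """Return True if name would pass the quoted user.name pattern."""
    return USER_NAME_PATTERN.fullmatch(name) is not None


for candidate in ("hdfs", "thdfs", "thdfs@test.co"):
    print(candidate, is_valid_webhdfs_user(candidate))
```

The `@` character is not in the allowed character class, so any name that carries a realm or domain suffix fails, while the short name passes.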

Can you start the NameNode manually?

su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start namenode"

Please let me know the result.

Created 05-04-2018 01:04 PM

Thank you. Let me validate the rules.

Here is the log from starting the NameNode manually:

su thdfs@test.co -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/2.6.4.0-91/hadoop/sbin/hadoop-daemon.sh --config /usr/hdp/2.6.4.0-91/hadoop/conf start namenode'

starting namenode, logging to /var/log/hadoop/thdfs@test.co/hadoop-thdfs@test.co-namenode-node1.test.co.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=256m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=256m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=256m; support was removed in 8.0

ulimit -a for user thdfs@test.co

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 127967

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 4096

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

Created 05-04-2018 08:22 PM
