Cloudera Community: Support Questions


Need to convert a hex file to another format using HDF

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created 11-08-2016 08:21 PM


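I have a couple of rather large hex files that I need to convert to another format, with the intention of then stripping out certain attributes and storing the results in SQL Server and/or Hive. The files are 12.7 MB and 3.3 MB. I'm using the code from this HCC answer: https://community.hortonworks.com/questions/60597/hexdump-nifi-processor-nifi-hexdump-processor.html

```groovy
import java.io.DataInputStream
import org.apache.nifi.processor.io.InputStreamCallback

// Intended for an ExecuteScript processor with the Groovy script engine
def flowFile = session.get()
if (!flowFile) return

def attr = ''
def attr2 = ''

// Read the first 16 bytes as two longs and hex-encode each one.
// '%016x' keeps leading zeros, which Long.toHexString would drop.
session.read(flowFile, { inputStream ->
    def dis = new DataInputStream(inputStream)
    attr = String.format('%016x', dis.readLong())
    attr2 = String.format('%016x', dis.readLong())
} as InputStreamCallback)

// Store the 32-character hex string as a FlowFile attribute
flowFile = session.putAttribute(flowFile, 'first16hex', attr + attr2)
session.transfer(flowFile, REL_SUCCESS)
```

When I run it in an ExecuteProcess processor, I get a "File too large" error.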
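For reference, the logic of the script (read the first 16 bytes as two big-endian longs and hex-encode them) can be tried in plain Java outside NiFi. One detail worth knowing: `Long.toHexString` drops leading zeros, so values such as `0xff` and `0x1` concatenate to the ambiguous `ff1`. This sketch uses `String.format("%016x%016x", ...)` to keep the result at a fixed 32 characters; the class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

public class First16Hex {
    // Reads the first 16 bytes as two big-endian longs and returns them as a
    // 32-character lowercase hex string. %016x pads with leading zeros.
    static String first16Hex(InputStream in) throws IOException {
        DataInputStream dis = new DataInputStream(in);
        long hi = dis.readLong();   // throws EOFException if fewer than 8 bytes remain
        long lo = dis.readLong();
        return String.format("%016x%016x", hi, lo);
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[16];
        data[7] = (byte) 0xff;   // first long  = 0x00000000000000ff
        data[15] = 0x01;         // second long = 0x0000000000000001
        System.out.println(first16Hex(new ByteArrayInputStream(data)));
        // Unpadded version, for comparison
        System.out.println(Long.toHexString(0xffL) + Long.toHexString(1L));
    }
}
```

In the Groovy script, the equivalent fix is `String.format('%016x', dis.readLong())` in place of `Long.toHexString(dis.readLong())`.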

I'm also aware of this JIRA, but I'm looking for a good workaround: https://issues.apache.org/jira/browse/NIFI-2997

Created 11-08-2016 11:19 PM


Scott,

Use the ExecuteScript processor, as mentioned in the article. It looks like you are putting an embedded script into ExecuteProcess, which is meant to invoke OS shell commands, hence the error.
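Once the script runs in ExecuteScript, the same idea extends from the first 16 bytes to the whole file. A minimal sketch, assuming a hexdump-style output (an 8-digit offset followed by up to 16 space-separated hex byte pairs per line; this format is an illustrative choice, not the one from the linked HCC answer): it streams the input through a 16-byte buffer, so even the 12.7 MB file never has to be held in memory. `HexDump` and `hexDump` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class HexDump {
    private static final char[] HEX = "0123456789abcdef".toCharArray();

    // Writes one line per 16 input bytes: the byte offset (8 hex digits)
    // followed by each byte as a space-separated two-digit hex pair.
    static void hexDump(InputStream in, OutputStream out) throws IOException {
        byte[] buf = new byte[16];
        StringBuilder line = new StringBuilder();
        long offset = 0;
        int n;
        while ((n = fill(in, buf)) > 0) {
            line.setLength(0);
            line.append(String.format("%08x", offset));
            for (int i = 0; i < n; i++) {
                int b = buf[i] & 0xff;   // treat the byte as unsigned
                line.append(' ').append(HEX[b >>> 4]).append(HEX[b & 0x0f]);
            }
            line.append('\n');
            out.write(line.toString().getBytes(StandardCharsets.US_ASCII));
            offset += n;
        }
        out.flush();
    }

    // Reads until buf is full or the stream ends; returns bytes read (0 at EOF).
    private static int fill(InputStream in, byte[] buf) throws IOException {
        int total = 0;
        while (total < buf.length) {
            int r = in.read(buf, total, buf.length - total);
            if (r < 0) break;
            total += r;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[20];
        for (int i = 0; i < data.length; i++) data[i] = (byte) i;
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        hexDump(new ByteArrayInputStream(data), out);
        System.out.print(new String(out.toByteArray(), StandardCharsets.US_ASCII));
    }
}
```

Inside ExecuteScript, a routine like this could be called from `session.write(flowFile, { inputStream, outputStream -> ... } as StreamCallback)`, which replaces the FlowFile content with the hex text; downstream processors could then pick out the needed fields before loading them into SQL Server or Hive.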

Created 11-08-2016 08:26 PM


Can you share a stack trace / error log from logs/nifi-app.log? I'm curious to see what part of the code gives a "File too large" error.

Created on 11-08-2016 09:02 PM - edited 08-18-2019 03:48 AM


I apologize. The actual error is "File name is too long". The files are named COWFILE1.DAT and COWFILE1.ARC.
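The "File name is too long" message fits the explanation in the answer: a pasted script handed to the OS as a command becomes one very long program name, and on Linux a single file-name component longer than 255 bytes (NAME_MAX on common file systems) is typically rejected with ENAMETOOLONG. This Java sketch reproduces the effect with `ProcessBuilder`; it assumes, rather than confirms, that ExecuteProcess launches its command in a comparable way, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.util.Collections;

public class CommandAsFileName {
    // Tries to launch a program whose name is the given text.
    // Returns the IOException message, or null if a process actually started.
    static String tryRun(String command) {
        try {
            Process p = new ProcessBuilder(command).start();
            p.destroy();
            return null;
        } catch (IOException e) {
            return e.getMessage();
        }
    }

    public static void main(String[] args) {
        // Stand-in for a script pasted into a command field: 440 characters,
        // passed as a single program name (ProcessBuilder does not split it).
        String script = String.join("", Collections.nCopies(40, "def x = 1; "));
        System.out.println(tryRun(script));
    }
}
```

The exact error text depends on the OS and JDK, which is why the example prints the message rather than predicting it.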

Created 11-08-2016 09:12 PM


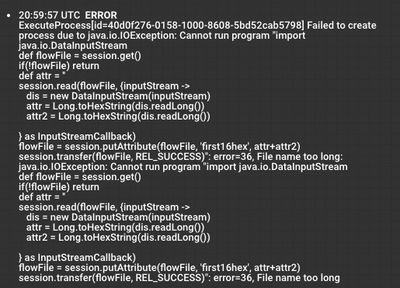

Here is the repeating error from nifi-app.log (attached as nifi-app.txt).


Created 11-09-2016 02:12 PM


Thanks @Andrew Grande! That worked! I feel like a noob 🙂 but appreciate all the help!